|

External Sorting

External sorting is a class of sorting algorithms that can handle massive amounts of data. External sorting is required when the data being sorted do not fit into the main memory of a computing device (usually RAM) and instead they must reside in the slower external memory, usually a disk drive. Thus, external sorting algorithms are external memory algorithms and thus applicable in the external memory model of computation. External sorting algorithms generally fall into two types, distribution sorting, which resembles quicksort, and external merge sort, which resembles merge sort. The latter typically uses a hybrid sort-merge strategy. In the sorting phase, chunks of data small enough to fit in main memory are read, sorted, and written out to a temporary file. In the merge phase, the sorted subfiles are combined into a single larger file. Model External sorting algorithms can be analyzed in the external memory model. In this model, a cache or internal memory of size ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Sorting

Sorting refers to ordering data in an increasing or decreasing manner according to some linear relationship among the data items. # ordering: arranging items in a sequence ordered by some criterion; # categorizing: grouping items with similar properties. Ordering items is the combination of categorizing them based on equivalent order, and ordering the categories themselves. Sorting information or data In , arranging in an ordered sequence is called "sorting". Sorting is a common operation in many applications, and efficient algorithms to perform it have been developed. The most common uses of sorted sequences are: * making lookup or search efficient; * making merging of sequences efficient. * enable processing of data in a defined order. The opposite of sorting, rearranging a sequence of items in a random or meaningless order, is called shuffling. For sorting, either a weak order, "should not come after", can be specified, or a strict weak order, "should come before" (s ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Big O Notation

Big ''O'' notation is a mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity. Big O is a member of a family of notations invented by Paul Bachmann, Edmund Landau, and others, collectively called Bachmann–Landau notation or asymptotic notation. The letter O was chosen by Bachmann to stand for '' Ordnung'', meaning the order of approximation. In computer science, big O notation is used to classify algorithms according to how their run time or space requirements grow as the input size grows. In analytic number theory, big O notation is often used to express a bound on the difference between an arithmetical function and a better understood approximation; a famous example of such a difference is the remainder term in the prime number theorem. Big O notation is also used in many other fields to provide similar estimates. Big O notation characterizes functions according to their growth rates: d ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Blocking (data Storage)

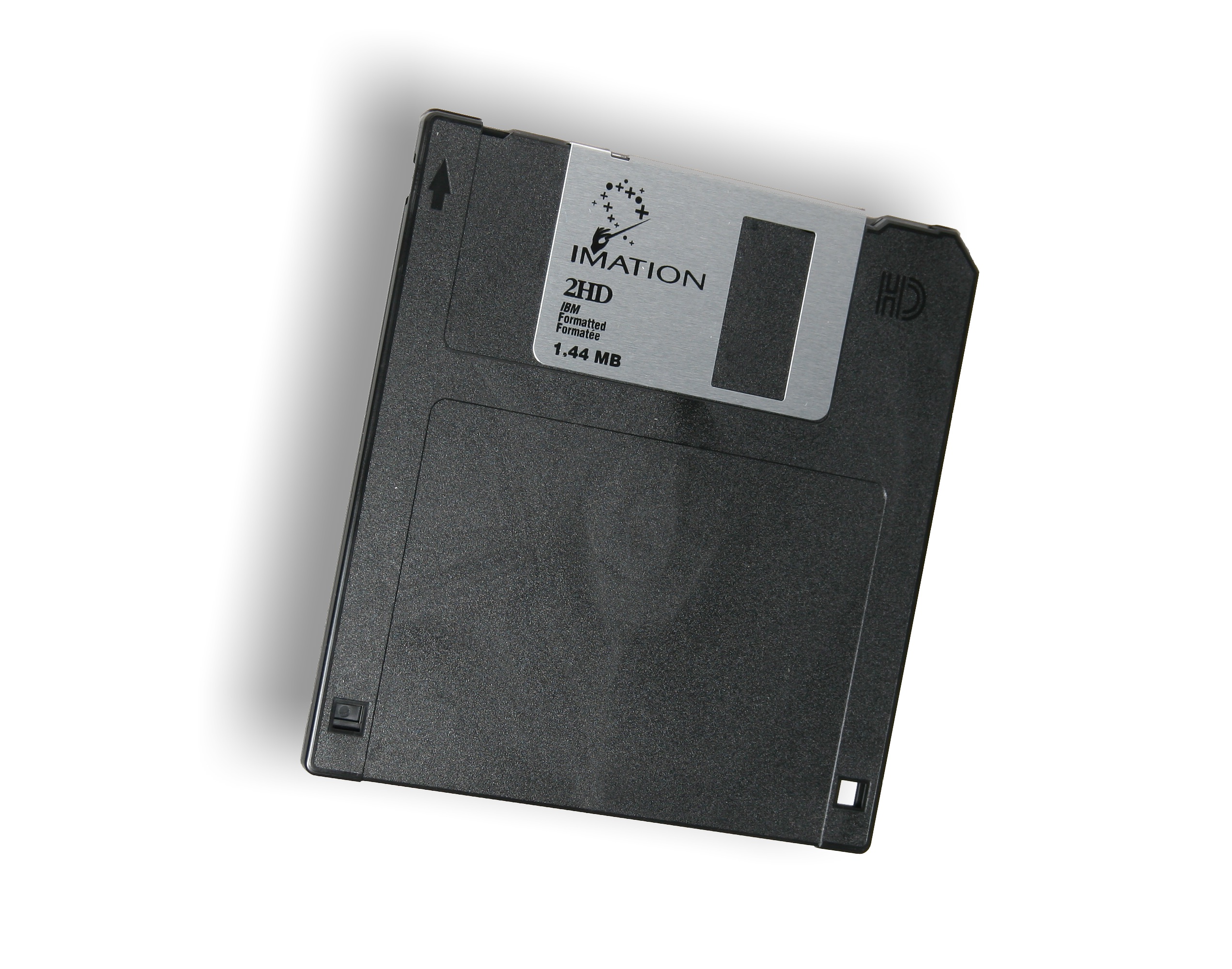

In computing (specifically data transmission and data storage), a block, sometimes called a physical record, is a sequence of bytes or bits, usually containing some whole number of records, having a maximum length; a ''block size''. Data thus structured are said to be ''blocked''. The process of putting data into blocks is called ''blocking'', while ''deblocking'' is the process of extracting data from blocks. Blocked data is normally stored in a data buffer, and read or written a whole block at a time. Blocking reduces the overhead and speeds up the handling of the data stream. For some devices, such as magnetic tape and CKD disk devices, blocking reduces the amount of external storage required for the data. Blocking is almost universally employed when storing data to 9-track magnetic tape, NAND flash memory, and rotating media such as floppy disks, hard disks, and optical discs. Most file systems are based on a block device, which is a level of abstraction for the hardw ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Solid-state Drive

A solid-state drive (SSD) is a solid-state storage device that uses integrated circuit assemblies to store data persistently, typically using flash memory, and functioning as secondary storage in the hierarchy of computer storage. It is also sometimes called a semiconductor storage device, a solid-state device or a solid-state disk, even though SSDs lack the physical spinning disks and movable read–write heads used in hard disk drives (HDDs) and floppy disks. SSD also has rich internal parallelism for data processing. In comparison to hard disk drives and similar electromechanical media which use moving parts, SSDs are typically more resistant to physical shock, run silently, and have higher input/output rates and lower latency. SSDs store data in semiconductor cells. cells can contain between 1 and 4 bits of data. SSD storage devices vary in their properties according to the number of bits stored in each cell, with single-bit cells ("Single Level Cells" or " ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Solid-state Disk

A solid-state drive (SSD) is a solid-state storage device that uses integrated circuit assemblies to store data persistently, typically using flash memory, and functioning as secondary storage in the hierarchy of computer storage. It is also sometimes called a semiconductor storage device, a solid-state device or a solid-state disk, even though SSDs lack the physical spinning disks and movable read–write heads used in hard disk drives (HDDs) and floppy disks. SSD also has rich internal parallelism for data processing. In comparison to hard disk drives and similar electromechanical media which use moving parts, SSDs are typically more resistant to physical shock, run silently, and have higher input/output rates and lower latency. SSDs store data in semiconductor cells. cells can contain between 1 and 4 bits of data. SSD storage devices vary in their properties according to the number of bits stored in each cell, with single-bit cells ("Single Level Cells" or "SLC") ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Hard Disk Drive

A hard disk drive (HDD), hard disk, hard drive, or fixed disk is an electro-mechanical data storage device that stores and retrieves digital data using magnetic storage with one or more rigid rapidly rotating platters coated with magnetic material. The platters are paired with magnetic heads, usually arranged on a moving actuator arm, which read and write data to the platter surfaces. Data is accessed in a random-access manner, meaning that individual blocks of data can be stored and retrieved in any order. HDDs are a type of non-volatile storage, retaining stored data when powered off. Modern HDDs are typically in the form of a small rectangular box. Introduced by IBM in 1956, HDDs were the dominant secondary storage device for general-purpose computers beginning in the early 1960s. HDDs maintained this position into the modern era of servers and personal computers, though personal computing devices produced in large volume, like cell phones and tablets, rely ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Disk Seek

Higher performance in hard disk drives comes from devices which have better performance characteristics. These performance characteristics can be grouped into two categories: access time and data transfer time (or rate). Access time The ''access time'' or ''response time'' of a rotating drive is a measure of the time it takes before the drive can actually transfer data. The factors that control this time on a rotating drive are mostly related to the mechanical nature of the rotating disks and moving heads. It is composed of a few independently measurable elements that are added together to get a single value when evaluating the performance of a storage device. The access time can vary significantly, so it is typically provided by manufacturers or measured in benchmarks as an average. The key components that are typically added together to obtain the access time are: * Seek time * Rotational latency * Command processing time * Settle time Seek time With rotating drives, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

K-way Merging

Merge algorithms are a family of algorithms that take multiple sorted lists as input and produce a single list as output, containing all the elements of the inputs lists in sorted order. These algorithms are used as subroutines in various sorting algorithms, most famously merge sort. Application The merge algorithm plays a critical role in the merge sort algorithm, a comparison-based sorting algorithm. Conceptually, the merge sort algorithm consists of two steps: # Recursively divide the list into sublists of (roughly) equal length, until each sublist contains only one element, or in the case of iterative (bottom up) merge sort, consider a list of ''n'' elements as ''n'' sub-lists of size 1. A list containing a single element is, by definition, sorted. # Repeatedly merge sublists to create a new sorted sublist until the single list contains all elements. The single list is the sorted list. The merge algorithm is used repeatedly in the merge sort algorithm. An example merge ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Megabyte

The megabyte is a multiple of the unit byte for digital information. Its recommended unit symbol is MB. The unit prefix ''mega'' is a multiplier of (106) in the International System of Units (SI). Therefore, one megabyte is one million bytes of information. This definition has been incorporated into the International System of Quantities. In the computer and information technology fields, other definitions have been used that arose for historical reasons of convenience. A common usage has been to designate one megabyte as (220 B), a quantity that conveniently expresses the binary architecture of digital computer memory. The standards bodies have deprecated this usage of the megabyte in favor of a new set of binary prefixes, in which this quantity is designated by the unit mebibyte (MiB). Definitions The unit megabyte is commonly used for 10002 (one million) bytes or 10242 bytes. The interpretation of using base 1024 originated as technical jargon for the byte multiples that ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Recursion

Recursion (adjective: ''recursive'') occurs when a thing is defined in terms of itself or of its type. Recursion is used in a variety of disciplines ranging from linguistics to logic. The most common application of recursion is in mathematics and computer science, where a function being defined is applied within its own definition. While this apparently defines an infinite number of instances (function values), it is often done in such a way that no infinite loop or infinite chain of references ("crock recursion") can occur. Formal definitions In mathematics and computer science, a class of objects or methods exhibits recursive behavior when it can be defined by two properties: * A simple ''base case'' (or cases) — a terminating scenario that does not use recursion to produce an answer * A ''recursive step'' — a set of rules that reduces all successive cases toward the base case. For example, the following is a recursive definition of a person's ''ancestor''. One's anc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Sartaj Sahni

Professor Sartaj Kumar Sahni (born July 22, 1949, in Pune, India) is a computer scientist based in the United States, and is one of the pioneers in the field of data structures. He is a distinguished professor in the Department of Computer and Information Science and Engineering at the University of Florida. Education Sahni received his BTech degree in electrical engineering from the Indian Institute of Technology Kanpur. Following this, he undertook his graduate studies at Cornell University in the USA, earning a PhD degree in 1973, under the supervision of Ellis Horowitz. Research and publications Sahni has published over 280 research papers and written 15 textbooks. His research publications are on the design and analysis of efficient algorithms, data structures, parallel computing, interconnection networks, design automation, and medical algorithms. With his advisor Ellis Horowitz, Sahni wrote two widely used textbooks, ''Fundamentals of Computer Algorithms'' and ''F ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Ellis Horowitz

Ellis Horowitz is an American computer scientist and Professor of Computer Science and Electrical Engineering at the University of Southern California (USC). Horowitz is best known for his computer science textbooks on data structures and algorithms, co-authored with Sartaj Sahni. At USC, Horowitz was chairman of the Computer Science Department from 1990 to 1999. During his tenure he significantly improved relations between Computer Science and the Information Sciences Institute (ISI), hiring senior faculty, and establishing the department's first industrial advisory board. From 1983 to 1993 with Lawrence Flon he co-founded Quality Software Products which designed and built UNIX application software. Their products included two spreadsheet programs, Q-calc and eXclaim, a project management system, MasterPlan, and a floating license server, Maitre D. The company was sold to Island Graphics. Education * B.S. (Mathematics) Brooklyn College, 1964. * M.S. (Computer Science) Universit ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |