|

Explicit Multi-threading

Explicit Multi-Threading (XMT) is a computer science paradigm for building and programming parallel computers designed around the parallel random-access machine (PRAM) parallel computational model. A more direct explanation of XMT starts with the rudimentary abstraction that made serial computing simple: that any single instruction available for execution in a serial program executes immediately. A consequence of this abstraction is a step-by-step (inductive) explication of the instruction available next for execution. The rudimentary parallel abstraction behind XMT, dubbed Immediate Concurrent Execution (ICE) in , is that indefinitely many instructions available for concurrent execution execute immediately. A consequence of ICE is a step-by-step (inductive) explication of the instructions available next for concurrent execution. Moving beyond the serial von Neumann computer (the only successful general-purpose platform to date), the aspiration of XMT is that computer science will ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computer Science

Computer science is the study of computation, automation, and information. Computer science spans theoretical disciplines (such as algorithms, theory of computation, information theory, and automation) to practical disciplines (including the design and implementation of hardware and software). Computer science is generally considered an area of academic research and distinct from computer programming. Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and for preventing security vulnerabilities. Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of repositories ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

XMTC

XMTC (for explicit multi-threading C) is a shared-memory parallel programming language. It is an extension of the C programming language which strives to enable easy PRAM-like programming based on the explicit multi-threading paradigm. It is developed as part of thXMT PRAM-On-Chip visionby a research team at the University of Maryland, College Park, led by Dr. Uzi Vishkin Uzi Vishkin (born 1953) is a computer scientist at the University of Maryland, College Park, where he is Professor of Electrical and Computer Engineering at the University of Maryland Institute for Advanced Computer Studies (UMIACS). Uzi Vishkin .... The philosophy of XMTC and the whole XMT project is that parallel programming is a hard intellectual task and the approach of building a hardware system first and then figuring out how to program them has not had much success. For that reason a robust algorithmic theory and a reasonably easy hardware abstraction should be the specifications that guide how to ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Triconnected Component

In graph theory, a branch of mathematics, the triconnected components of a biconnected graph are a system of smaller graphs that describe all of the 2-vertex cuts in the graph. An SPQR tree is a tree data structure used in computer science Computer science is the study of computation, automation, and information. Computer science spans theoretical disciplines (such as algorithms, theory of computation, information theory, and automation) to practical disciplines (includin ..., and more specifically graph algorithms, to represent the triconnected components of a graph. The SPQR tree of a graph may be constructed in linear time; . and has several applications in dynamic graph algorithms and graph drawing. The basic structures underlying the SPQR tree, the triconnected components of a graph, and the connection between this decomposition and the planar embeddings of a planar graph, were first investigated by ; these structures were used in efficient algorithms by seve ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Biconnected Graph

In graph theory, a biconnected graph is a connected and "nonseparable" graph, meaning that if any one vertex were to be removed, the graph will remain connected. Therefore a biconnected graph has no articulation vertices. The property of being 2-connected is equivalent to biconnectivity, except that the complete graph of two vertices is usually not regarded as 2-connected. This property is especially useful in maintaining a graph with a two-fold redundancy, to prevent disconnection upon the removal of a single edge (or connection). The use of biconnected graphs is very important in the field of networking (see Network flow), because of this property of redundancy. Definition A biconnected undirected graph is a connected graph that is not broken into disconnected pieces by deleting any single vertex (and its incident edges). A biconnected directed graph is one such that for any two vertices ''v'' and ''w'' there are two directed paths from ''v'' to ''w'' which have no vert ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Connectivity (graph Theory)

In mathematics and computer science, connectivity is one of the basic concepts of graph theory: it asks for the minimum number of elements (nodes or edges) that need to be removed to separate the remaining nodes into two or more isolated subgraphs. It is closely related to the theory of network flow problems. The connectivity of a graph is an important measure of its resilience as a network. Connected vertices and graphs In an undirected graph , two '' vertices'' and are called connected if contains a path from to . Otherwise, they are called disconnected. If the two vertices are additionally connected by a path of length , i.e. by a single edge, the vertices are called adjacent. A graph is said to be connected if every pair of vertices in the graph is connected. This means that there is a path between every pair of vertices. An undirected graph that is not connected is called disconnected. An undirected graph ''G'' is therefore disconnected if there exist two ver ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Maximum Flow Problem

In optimization theory, maximum flow problems involve finding a feasible flow through a flow network that obtains the maximum possible flow rate. The maximum flow problem can be seen as a special case of more complex network flow problems, such as the circulation problem. The maximum value of an s-t flow (i.e., flow from source s to sink t) is equal to the minimum capacity of an s-t cut (i.e., cut severing s from t) in the network, as stated in the max-flow min-cut theorem. History The maximum flow problem was first formulated in 1954 by T. E. Harris and F. S. Ross as a simplified model of Soviet railway traffic flow. In 1955, Lester R. Ford, Jr. and Delbert R. Fulkerson created the first known algorithm, the Ford–Fulkerson algorithm.Ford, L.R., Jr.; Fulkerson, D.R., ''Flows in Networks'', Princeton University Press (1962). In their 1955 paper, Ford and Fulkerson wrote that the problem of Harris and Ross is formulated as follows (see p. 5):Consider a rail network con ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Von Neumann Architecture

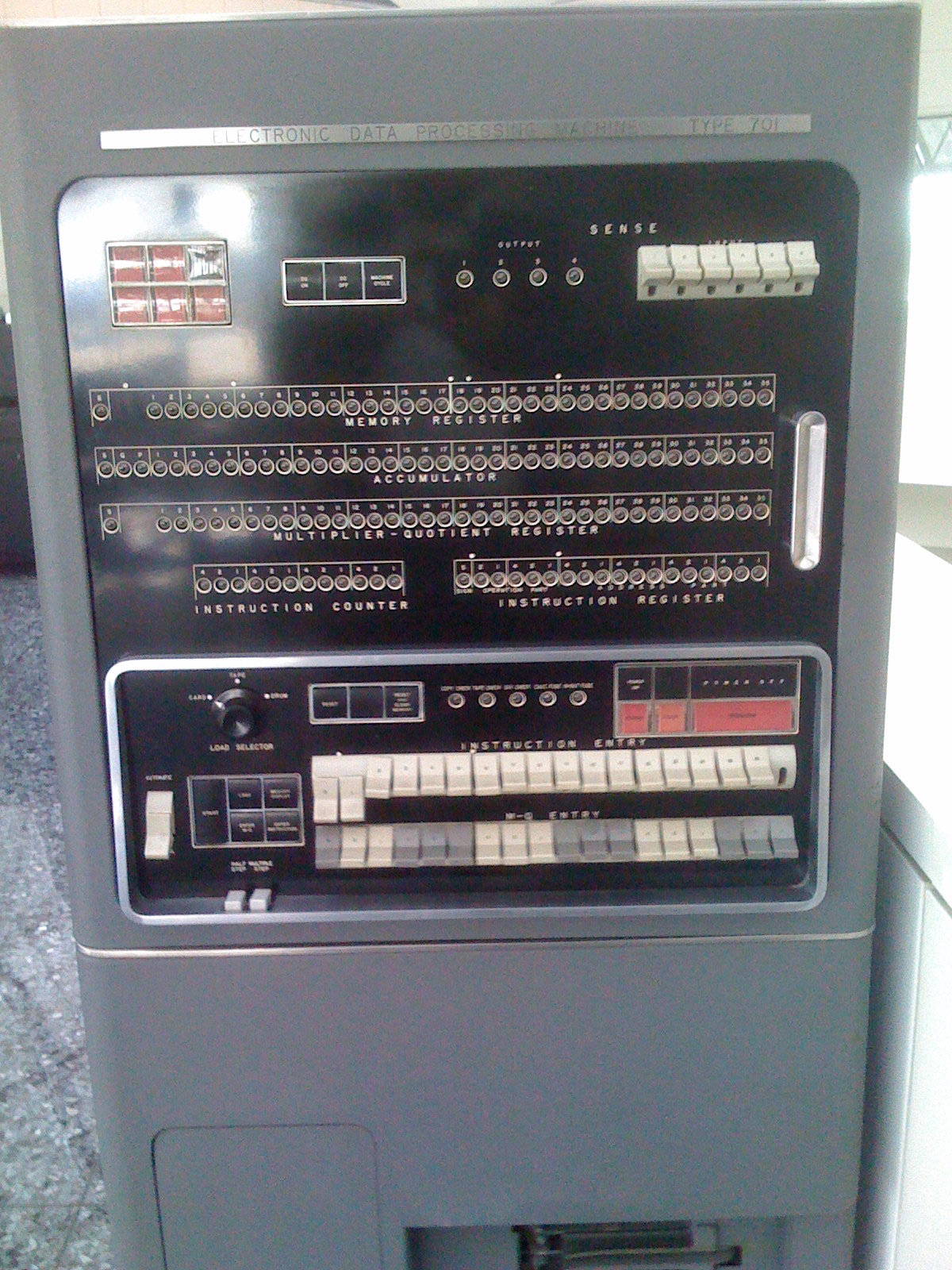

The von Neumann architecture — also known as the von Neumann model or Princeton architecture — is a computer architecture based on a 1945 description by John von Neumann, and by others, in the '' First Draft of a Report on the EDVAC''. The document describes a design architecture for an electronic digital computer with these components: * A processing unit with both an arithmetic logic unit and processor registers * A control unit that includes an instruction register and a program counter * Memory that stores data and instructions * External mass storage * Input and output mechanisms.. The term "von Neumann architecture" has evolved to refer to any stored-program computer in which an instruction fetch and a data operation cannot occur at the same time (since they share a common bus). This is referred to as the von Neumann bottleneck, which often limits the performance of the corresponding system. The design of a von Neumann architecture machine is simpler than in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Program Counter

The program counter (PC), commonly called the instruction pointer (IP) in Intel x86 and Itanium microprocessors, and sometimes called the instruction address register (IAR), the instruction counter, or just part of the instruction sequencer, is a processor register that indicates where a computer is in its program sequence. Usually, the PC is incremented after fetching an instruction, and holds the memory address of ("points to") the next instruction that would be executed. Processors usually fetch instructions sequentially from memory, but ''control transfer'' instructions change the sequence by placing a new value in the PC. These include branches (sometimes called jumps), subroutine calls, and returns. A transfer that is conditional on the truth of some assertion lets the computer follow a different sequence under different conditions. A branch provides that the next instruction is fetched from elsewhere in memory. A subroutine call not only branches but saves the prec ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Uzi Vishkin

Uzi Vishkin (born 1953) is a computer scientist at the University of Maryland, College Park, where he is Professor of Electrical and Computer Engineering at the University of Maryland Institute for Advanced Computer Studies (UMIACS). Uzi Vishkin is known for his work in the field of parallel computing. In 1996, he was inducted as a Fellow of the Association for Computing Machinery, with the following citation: "One of the pioneers of parallel algorithms research, Dr. Vishkin's seminal contributions played a leading role in forming and shaping what thinking in parallel has come to mean in the fundamental theory of Computer Science." Biography Uzi Vishkin was born in Tel Aviv, Israel. He completed his B.Sc. (1974) and M.Sc. in Mathematics at the Hebrew University, before earning his D.Sc. in Computer Science at the Technion (1981). He then spent a year working at the IBM Thomas J. Watson Research Center in Yorktown Heights, New York. From 1982 to 1984, he worked at the departme ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Parallel Random-access Machine

In computer science, a parallel random-access machine (parallel RAM or PRAM) is a shared-memory abstract machine. As its name indicates, the PRAM is intended as the parallel-computing analogy to the random-access machine (RAM) (not to be confused with random-access memory). In the same way that the RAM is used by sequential-algorithm designers to model algorithmic performance (such as time complexity), the PRAM is used by parallel-algorithm designers to model parallel algorithmic performance (such as time complexity, where the number of processors assumed is typically also stated). Similar to the way in which the RAM model neglects practical issues, such as access time to cache memory versus main memory, the PRAM model neglects such issues as synchronization and communication, but provides any (problem-size-dependent) number of processors. Algorithm cost, for instance, is estimated using two parameters O(time) and O(time × processor_number). Read/write conflicts Read/write conf ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Multi-core

A multi-core processor is a microprocessor on a single integrated circuit with two or more separate processing units, called cores, each of which reads and executes program instructions. The instructions are ordinary CPU instructions (such as add, move data, and branch) but the single processor can run instructions on separate cores at the same time, increasing overall speed for programs that support multithreading or other parallel computing techniques. Manufacturers typically integrate the cores onto a single integrated circuit die (known as a chip multiprocessor or CMP) or onto multiple dies in a single chip package. The microprocessors currently used in almost all personal computers are multi-core. A multi-core processor implements multiprocessing in a single physical package. Designers may couple cores in a multi-core device tightly or loosely. For example, cores may or may not share caches, and they may implement message passing or shared-memory inter-core comm ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Parallel Computing

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather tha ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |