
Evolutionary Database Design

Evolutionary database design involves incremental improvements to the database schema so that it can be continuously updated to reflect the customer's changing requirements. People across the globe work on the same piece of software at the same time; hence, there is a need for techniques that allow a database to evolve smoothly as the design develops. Such methods use automated refactoring and continuous integration to support agile methodologies for software development. These techniques apply both to systems in the pre-production stage and to systems that have already been released. They cover not only changes to the database schema driven by the customer's changing needs, but also the migration of modified data into the database and the corresponding adaptation of database access code, all without changing the data semantics.

History: After using the waterfall model for a long time, the software industry has witnessed a rise i ...
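The idea of evolving a schema in small, ordered steps can be sketched as follows. This is a minimal illustration, not the method of any particular tool: the `customer` table, the `MIGRATIONS` list, and the `migrate` helper are hypothetical names, and SQLite's `user_version` pragma is assumed as the place to record which steps have already run.

```python
import sqlite3

# Hypothetical, ordered list of small schema and data changes (illustrative only).
MIGRATIONS = [
    # 1: initial schema
    "CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL)",
    # 2: schema change driven by a new requirement
    "ALTER TABLE customer ADD COLUMN email TEXT",
    # 3: data migration so that existing rows fit the new shape
    "UPDATE customer SET email = '' WHERE email IS NULL",
]


def schema_version(conn):
    """Return the number of the last migration applied to this database."""
    return conn.execute("PRAGMA user_version").fetchone()[0]


def migrate(conn):
    """Apply, in order, every migration the database has not seen yet."""
    current = schema_version(conn)
    for number, statement in enumerate(MIGRATIONS, start=1):
        if number > current:
            conn.execute(statement)
            # Record progress so the step is never applied twice.
            conn.execute(f"PRAGMA user_version = {number}")
    conn.commit()
    return schema_version(conn)
```

Calling `migrate` on a fresh database applies all three steps; calling it again is a no-op, which is what lets the same script run safely in both pre-production and released environments.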

Incrementalism

In politics, the term "incrementalism" is also used as a synonym for gradualism.

Incrementalism is a method of working by adding to a project using many small incremental changes instead of a few (extensively planned) large jumps. Logical incrementalism implies that the steps in the process are sensible. Logical incrementalism focuses on "the Power-Behavioral Approach to planning rather than to the Formal Systems Planning Approach". In public policy, incrementalism is the method of change by which many small policy changes are enacted over time in order to create a larger, broad-based policy change. Political scientist Charles E. Lindblom developed this theoretical policy of rationality in the 1950s as a middle way between the rational actor model and bounded rationality, as both long-term, goal-driven policy rationality and satisficing were not seen as adequate.

Origin: Most people use incrementalism without ever needing a n ...

Table (database)

A table is a collection of related data held in a table format within a database. It consists of columns and rows. In relational databases and flat file databases, a table is a set of data elements (values) using a model of vertical columns (identifiable by name) and horizontal rows, the cell being the unit where a row and column intersect. A table has a specified number of columns, but can have any number of rows. Each row is identified by one or more values appearing in a particular column subset. A specific choice of columns which uniquely identify rows is called the primary key. "Table" is another term for "relation", although a table is usually a multiset (bag) of rows, whereas a relation is a set and does not allow duplicates. Besides the actual data rows, tables generally have associated with them some metadata, such as constraints on the table or on the values within particular columns. The data in a table does not have to be ...
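As a small sketch of these ideas, the following uses Python's standard `sqlite3` module to create a table with a fixed set of named columns, add rows, and show that the primary key rejects a second row with the same key. The `employee` table and its sample rows are invented for illustration.

```python
import sqlite3


def build_table():
    """Create an in-memory table with three named columns and two rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE employee ("
        "emp_id INTEGER PRIMARY KEY, "  # column chosen to identify each row
        "name TEXT NOT NULL, "          # a constraint is table metadata
        "dept TEXT)"
    )
    conn.executemany(
        "INSERT INTO employee VALUES (?, ?, ?)",
        [(1, "Ada", "R&D"), (2, "Grace", "Ops")],
    )
    return conn


def insert_duplicate_key(conn):
    """Try to reuse primary key 1; return True only if the insert succeeded."""
    try:
        conn.execute("INSERT INTO employee VALUES (1, 'Linus', 'R&D')")
    except sqlite3.IntegrityError:
        return False
    return True
```

The number of columns is fixed by the `CREATE TABLE` statement, while rows can be added freely as long as each one carries a distinct `emp_id`.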

Configuration Management

Configuration management (CM) is a process for establishing and maintaining consistency of a product's performance, functional, and physical attributes with its requirements, design, and operational information throughout its life. The CM process is widely used by military engineering organizations to manage changes throughout the system lifecycle of complex systems, such as weapon systems, military vehicles, and information systems. Outside the military, the CM process is also used with IT service management as defined by ITIL, and with other domain models in the civil engineering and other industrial engineering segments such as roads, bridges, canals, dams, and buildings.

Introduction: CM applied over the life cycle of a system provides visibility and control of its performance, functional, and physical attributes. CM verifies that a system performs as intended, and is identified and documented in sufficient detail to support its projected life cycle. The CM process f ...

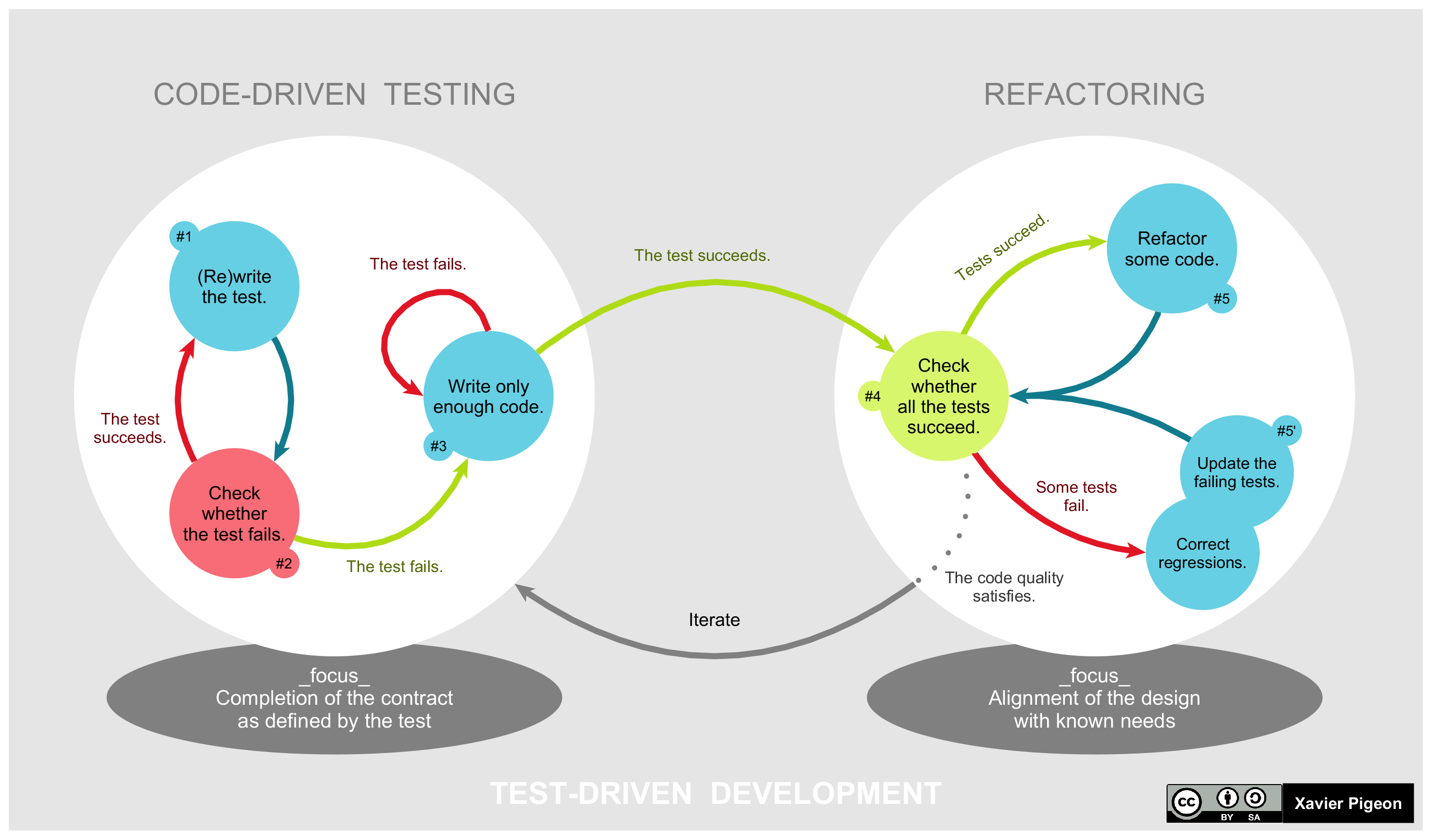

Test-first Development

Test-driven development (TDD) is a software development process relying on software requirements being converted to test cases before software is fully developed, and tracking all software development by repeatedly testing the software against all test cases. This is as opposed to software being developed first and test cases created later. Software engineer Kent Beck, who is credited with having developed or "rediscovered" the technique, stated in 2003 that TDD encourages simple designs and inspires confidence. Test-driven development is related to the test-first programming concepts of extreme programming, begun in 1999, but more recently has created more general interest in its own right (Newkirk, JW and Vorontsov, AA. Test-Driven Development in Microsoft .NET, Microsoft Press, 2004). Programmers also apply the concept to improving and debugging legacy code developed with older techniques (Feathers, M. Working Effectively with Legacy Code, Prentice Hall, 2004). Test-driv ...

Test Case

In software engineering, a test case is a specification of the inputs, execution conditions, testing procedure, and expected results that define a single test to be executed to achieve a particular software testing objective, such as to exercise a particular program path or to verify compliance with a specific requirement. Test cases underlie testing that is methodical rather than haphazard. A battery of test cases can be built to produce the desired coverage of the software being tested. Formally defined test cases allow the same tests to be run repeatedly against successive versions of the software, allowing for effective and consistent regression testing.

Formal test cases: In order to fully test that all the requirements of an application are met, there must be at least two test cases for each requirement: one positive test and one negative test. If a requirement has sub-requirements, each sub-requirement must have at least two test cases. Keeping track of the link between ...
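The pairing of one positive and one negative test per requirement might look like the sketch below, written with Python's standard `unittest` module. The requirement ("an age is given as digits and is at most 150") and the `parse_age` function are hypothetical, chosen only to give the tests something to exercise.

```python
import unittest


def parse_age(text):
    """Hypothetical function under test: accept ages 0..150 written as digits."""
    if not text.isdigit():
        raise ValueError("age must be a non-negative whole number")
    age = int(text)
    if age > 150:
        raise ValueError("age out of range")
    return age


class ParseAgeTests(unittest.TestCase):
    def test_valid_age_is_accepted(self):
        # Positive test: a valid input yields the expected result.
        self.assertEqual(parse_age("42"), 42)

    def test_non_numeric_age_is_rejected(self):
        # Negative test: an invalid input is refused with an error.
        with self.assertRaises(ValueError):
            parse_age("forty-two")
```

Because each test fixes its input and expected result, the same suite can be rerun unchanged against later versions of `parse_age`.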

Regression Testing

Regression testing (rarely, non-regression testing) is re-running functional and non-functional tests to ensure that previously developed and tested software still performs as expected after a change. If not, that would be called a regression. Changes that may require regression testing include bug fixes, software enhancements, configuration changes, and even substitution of electronic components. As regression test suites tend to grow with each found defect, test automation is frequently involved. Sometimes a change impact analysis is performed to determine an appropriate subset of tests (non-regression analysis).

Background: As software is updated or changed, or reused on a modified target, emergence of new faults and/or re-emergence of old faults is quite common. Sometimes re-emergence occurs because a fix gets lost through poor revision control practices (or simple human error in revision control). Often, a fix for a problem will be "fragile" in that it fix ...

Referential Integrity

Referential integrity is a property of data stating that all its references are valid. In the context of relational databases, it requires that if a value of one attribute (column) of a relation (table) references a value of another attribute (either in the same or a different relation), then the referenced value must exist. For referential integrity to hold in a relational database, any column in a base table that is declared a foreign key can only contain either null values or values from a parent table's primary key or a candidate key. In other words, when a foreign key value is used it must reference a valid, existing primary key in the parent table. For instance, deleting a record that contains a value referred to by a foreign key in another table would break referential integrity. Some relational database management systems (RDBMS) can enforce referential integrity, normally either by deleting the foreign key rows as well to maintain integrity, or by returning an error and ...
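A minimal sketch of enforcement by returning an error, using Python's standard `sqlite3` module: the `department` and `employee` tables and their rows are invented for illustration, and SQLite is assumed as the engine (it checks foreign keys only after `PRAGMA foreign_keys = ON`).

```python
import sqlite3


def open_db():
    """Build a parent table and a child table linked by a foreign key."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves enforcement off by default
    conn.execute("CREATE TABLE department (dept_id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute(
        "CREATE TABLE employee ("
        "emp_id INTEGER PRIMARY KEY, name TEXT, "
        "dept_id INTEGER REFERENCES department(dept_id))"
    )
    conn.execute("INSERT INTO department VALUES (10, 'Research')")
    conn.execute("INSERT INTO employee VALUES (1, 'Ada', 10)")      # valid reference
    conn.execute("INSERT INTO employee VALUES (2, 'Grace', NULL)")  # NULL is allowed
    conn.commit()
    return conn


def violates_integrity(conn, statement):
    """Return True if the engine rejects the statement with an integrity error."""
    try:
        conn.execute(statement)
    except sqlite3.IntegrityError:
        return True
    return False
```

With this setup, inserting an employee whose `dept_id` has no matching department is rejected, and so is deleting department 10 while an employee still refers to it.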

Data Validation And Reconciliation

Industrial process data validation and reconciliation, or more briefly, process data reconciliation (PDR), is a technology that uses process information and mathematical methods in order to automatically ensure data validation and reconciliation by correcting measurements in industrial processes. The use of PDR allows for extracting accurate and reliable information about the state of industry processes from raw measurement data and produces a single consistent set of data representing the most likely process operation.

Models, data and measurement errors: Industrial processes, for example chemical or thermodynamic processes in chemical plants, refineries, oil or gas production sites, or power plants, are often represented by two fundamental means:

1. Models that express the general structure of the processes,
2. Data that reflects the state of the processes at a given point in time.

Models can have different levels of detail, for example one can incorporate simple mass or compound con ...

Data Structure

In computer science, a data structure is a data organization, management, and storage format that is usually chosen for efficient access to data. More precisely, a data structure is a collection of data values, the relationships among them, and the functions or operations that can be applied to the data, i.e., it is an algebraic structure about data.

Usage: Data structures serve as the basis for abstract data types (ADT). The ADT defines the logical form of the data type. The data structure implements the physical form of the data type. Different types of data structures are suited to different kinds of applications, and some are highly specialized to specific tasks. For example, relational databases commonly use B-tree indexes for data retrieval, while compiler implementations usually use hash tables to look up identifiers. Data structures provide a means to manage large amounts of data efficiently for uses such a ...
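The split between an ADT (logical form) and a data structure (physical form) can be illustrated with a stack. In this sketch, `Stack` is the abstract type and `ListStack` and `LinkedStack` are two interchangeable implementations; all three class names are hypothetical.

```python
from abc import ABC, abstractmethod


class Stack(ABC):
    """Abstract data type: states which operations exist, not how data is stored."""

    @abstractmethod
    def push(self, item): ...

    @abstractmethod
    def pop(self): ...


class ListStack(Stack):
    """Physical form 1: a contiguous, growable array (Python list)."""

    def __init__(self):
        self._items = []

    def push(self, item):
        self._items.append(item)

    def pop(self):
        return self._items.pop()  # raises IndexError when empty


class LinkedStack(Stack):
    """Physical form 2: a singly linked chain of (item, next) pairs."""

    def __init__(self):
        self._head = None

    def push(self, item):
        self._head = (item, self._head)

    def pop(self):
        if self._head is None:
            raise IndexError("pop from empty stack")
        item, self._head = self._head
        return item
```

Code written against `Stack` behaves the same with either class; only the storage layout and its performance characteristics differ.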

Attribute (computing)

In computing, an attribute is a specification that defines a property of an object, element, or file. It may also refer to or set the specific value for a given instance of such. For clarity, attributes should more correctly be considered metadata. An attribute is frequently and generally a property of a property. However, in actual usage, the term attribute can be, and often is, treated as equivalent to a property, depending on the technology being discussed. An attribute of an object usually consists of a name and a value; of an element, a type or class name; of a file, a name and extension.

* Each named attribute has an associated set of rules called operations: one doesn't sum characters or manipulate and process an integer array as an image object, and one doesn't process text as type floating point (decimal numbers).
* It follows that an object definition can be extended by imposing data typing: a representation format, a default value, and legal operations (rules) and restri ...

Entity–attribute–value Model

Entity–attribute–value model (EAV) is a data model to encode, in a space-efficient manner, entities where the number of attributes (properties, parameters) that can be used to describe them is potentially vast, but the number that will actually apply to a given entity is relatively modest. Such entities correspond to the mathematical notion of a sparse matrix. EAV is also known as object–attribute–value model, vertical database model, and open schema.

Data structure: This data representation is analogous to space-efficient methods of storing a sparse matrix, where only non-empty values are stored. In an EAV data model, each attribute–value pair is a fact describing an entity, and a row in an EAV table stores a single fact. EAV tables are often described as "long and skinny": "long" refers to the number of rows, "skinny" to the few columns. Data is recorded as three columns:

* The entity: the item being described.
* The attribute or parameter: typically imple ...
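A minimal in-memory sketch of the "long and skinny" layout: each tuple below is one fact, and only attributes that actually apply to an entity are stored. The sample entities, attribute names, and the `pivot` helper are invented for illustration.

```python
from collections import defaultdict

# One row per fact: (entity, attribute, value). Sample data is invented.
EAV_ROWS = [
    ("patient-1", "blood_pressure", "120/80"),
    ("patient-1", "allergy", "penicillin"),
    ("patient-2", "blood_pressure", "135/90"),
]


def pivot(rows):
    """Group the facts by entity, giving one attribute dictionary per entity."""
    entities = defaultdict(dict)
    for entity, attribute, value in rows:
        entities[entity][attribute] = value
    return dict(entities)
```

Note that `patient-2` has no `allergy` row at all, rather than a row holding an empty value; this absence of empty cells is what makes the representation space-efficient for sparse data.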

Data Modeling

Data modeling in software engineering is the process of creating a data model for an information system by applying certain formal techniques.

Overview: Data modeling is a process used to define and analyze data requirements needed to support the business processes within the scope of corresponding information systems in organizations. Therefore, the process of data modeling involves professional data modelers working closely with business stakeholders, as well as potential users of the information system. There are three different types of data models produced while progressing from requirements to the actual database to be used for the information system (Simison, Graeme. C. & Witt, Graham. C. (2005). Data Modeling Essentials. 3rd Edition. Morgan Kaufmann Publishers). The data requirements are initially recorded as a conceptual data model which is essentially a set of technology-independent specifications about the data and is used to discuss initial requirements ...