Estimators

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule (the estimator), the quantity of interest (the estimand) and its result (the estimate) are distinguished. For example, the sample mean is a commonly used estimator of the population mean. There are point and interval estimators. Point estimators yield single-valued results, in contrast to interval estimators, whose result is a range of plausible values. "Single value" does not necessarily mean "single number"; it also covers vector-valued and function-valued estimators. *Estimation theory* is concerned with the properties of estimators; that is, with defining properties that can be used to compare different estimators (different rules for creating estimates) for the same quantity, based on the same data. Such properties can be used to determine the best rules to use under given circumstances. However, in robust statistics, statisti ...
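As a minimal sketch of the three-way distinction drawn above, the Python snippet below treats the estimator as a function, the estimand as the unknown population mean, and the estimate as the number the function returns. The data values and the name `sample_mean` are made up for illustration.

```python
from statistics import fmean

def sample_mean(data):
    """Estimator: a rule that maps observed data to a single number."""
    return fmean(data)

# Hypothetical observations drawn from some population whose mean
# (the estimand) is unknown to the analyst.
observed = [4.1, 5.3, 4.8, 5.0, 5.8]

# Estimate: the value produced by applying the rule to this particular sample.
estimate = sample_mean(observed)
print(estimate)
```

A different sample from the same population would give a different estimate, while the estimator (the function) and the estimand stay the same.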

Robust Statistics

Robust statistics are statistics that maintain their properties even if the underlying distributional assumptions are incorrect. Robust statistical methods have been developed for many common problems, such as estimating location, scale, and regression parameters. One motivation is to produce statistical methods that are not unduly affected by outliers. Another motivation is to provide methods with good performance when there are small departures from a parametric distribution. For example, robust methods work well for mixtures of two normal distributions with different standard deviations; under this model, non-robust methods like a t-test work poorly.

Robust statistics seek to provide methods that emulate popular statistical methods, but are not unduly affected by outliers or other small departures from model assumptions. In statistics, classical e ...
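The sensitivity to outliers described above can be illustrated with a small sketch that compares a non-robust location estimator (the mean) with a robust one (the median). The data are hypothetical, and the single contaminating value of 1000.0 is an assumption chosen to make the effect obvious.

```python
from statistics import mean, median

clean = [9.8, 10.1, 10.0, 9.9, 10.2]   # hypothetical well-behaved sample
contaminated = clean + [1000.0]        # same sample plus one gross outlier

# How far does each location estimate move when the outlier is added?
shift_mean = abs(mean(contaminated) - mean(clean))
shift_median = abs(median(contaminated) - median(clean))

print(shift_mean, shift_median)
```

The mean moves by more than a hundred units, while the median barely changes, which is the behaviour a robust estimator is meant to have.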

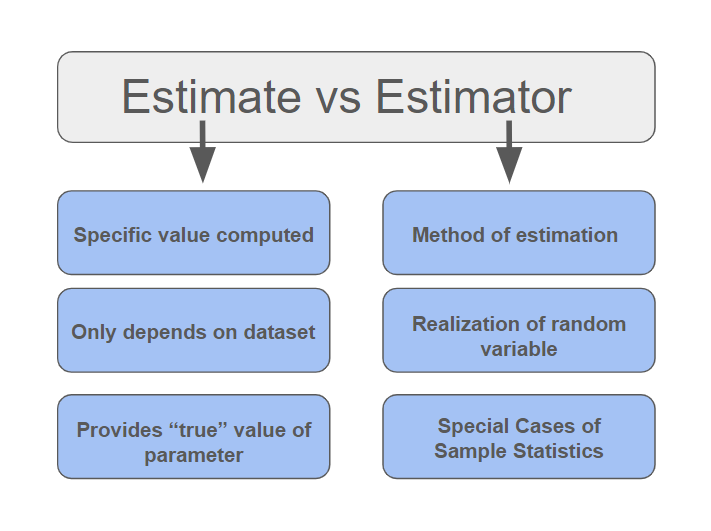

Estimator Vs Estimate

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule (the estimator), the quantity of interest (the estimand) and its result (the estimate) are distinguished. For example, the sample mean is a commonly used estimator of the population mean. There are point and interval estimators. Point estimators yield single-valued results, in contrast to interval estimators, whose result is a range of plausible values. "Single value" does not necessarily mean "single number"; it also covers vector-valued and function-valued estimators. *Estimation theory* is concerned with the properties of estimators; that is, with defining properties that can be used to compare different estimators (different rules for creating estimates) for the same quantity, based on the same data. Such properties can be used to determine the best rules to use under given circumstances. Howeve ...

Unbiasedness

In statistics, the bias of an estimator (or bias function) is the difference between this estimator's expected value and the true value of the parameter being estimated. An estimator or decision rule with zero bias is called *unbiased*. Bias is a property of an estimator, and it is a distinct concept from consistency: consistent estimators converge in probability to the true value of the parameter, but may be biased or unbiased. All else being equal, an unbiased estimator is preferable to a biased estimator, although in practice, biased estimators (with generally small bias) are frequently used. When a biased estimator is used, bounds on the bias are calculated. A biased estimator may be used for various reasons: because an unbiased estimator does not exist without further assumptions about a population; because an estimator is difficult to compute (as in unbiased estimation of standard deviation); because a biased estima ...
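A standard illustration of bias is the sample variance computed with divisor n instead of n - 1. The simulation below is a sketch under stated assumptions: the population is normal with mean 0 and standard deviation 2 (so the true variance is 4.0), and the helper `variance_estimate`, the seed, the sample size, and the repetition count are all illustrative choices.

```python
import random

def variance_estimate(xs, ddof):
    """Sum of squared deviations from the sample mean, divided by n - ddof."""
    n = len(xs)
    m = sum(xs) / n
    return sum((x - m) ** 2 for x in xs) / (n - ddof)

rng = random.Random(0)   # fixed seed so the run is repeatable
true_variance = 4.0      # assumed population: normal, mean 0, sd 2
n, reps = 5, 20000

biased, unbiased = [], []
for _ in range(reps):
    sample = [rng.gauss(0.0, 2.0) for _ in range(n)]
    biased.append(variance_estimate(sample, ddof=0))    # divisor n
    unbiased.append(variance_estimate(sample, ddof=1))  # divisor n - 1

# Averaging over many samples approximates each estimator's expected value.
# Theory: E[divisor-n version] = (n - 1) / n * 4.0 = 3.2, E[divisor-(n-1) version] = 4.0.
avg_biased = sum(biased) / reps
avg_unbiased = sum(unbiased) / reps
print(avg_biased - true_variance, avg_unbiased - true_variance)
```

The first printed number approximates the bias of the divisor-n estimator (about -0.8 in theory); the second should be close to zero.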

Point Estimate

In statistics, point estimation involves the use of sample data to calculate a single value (known as a point estimate since it identifies a point in some parameter space) which is to serve as a "best guess" or "best estimate" of an unknown population parameter (for example, the population mean). More formally, it is the application of a point estimator to the data to obtain a point estimate. Point estimation can be contrasted with interval estimation: such interval estimates are typically either confidence intervals, in the case of frequentist inference, or credible intervals, in the case of Bayesian inference. More generally, a point estimator can be contrasted with a set estimator. Examples are given by confidence sets or credible sets. A point estimator can also be contrasted with a distribution estimator. Examples are given by confidence distributions, randomized estimators, and Bayesian posteriors.

Properties of point estimates: Biasedness. "Bias" is define ...
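The contrast between a point estimate and an interval estimate can be sketched as follows. The measurements are hypothetical, and the interval uses a normal approximation (mean plus or minus z times the standard error), which is an assumption made for brevity; with a sample this small a t-based interval would usually be preferred.

```python
from math import sqrt
from statistics import NormalDist, fmean, stdev

data = [12.1, 11.8, 12.4, 12.0, 11.9, 12.3, 12.2, 11.7]  # hypothetical measurements

# Point estimate: a single value for the population mean.
point_estimate = fmean(data)

# Interval estimate: an approximate 95% confidence interval around it.
z = NormalDist().inv_cdf(0.975)                 # two-sided 95% normal quantile
half_width = z * stdev(data) / sqrt(len(data))  # z times the standard error
interval_estimate = (point_estimate - half_width, point_estimate + half_width)

print(point_estimate, interval_estimate)
```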

Consistent Estimator

In statistics, a consistent estimator or asymptotically consistent estimator is an estimator (a rule for computing estimates of a parameter θ₀) having the property that as the number of data points used increases indefinitely, the resulting sequence of estimates converges in probability to θ₀. This means that the distributions of the estimates become more and more concentrated near the true value of the parameter being estimated, so that the probability of the estimator being arbitrarily close to θ₀ converges to one. In practice one constructs an estimator as a function of an available sample of size *n*, and then imagines being able to keep collecting data and expanding the sample *ad infinitum*. In this way one would obtain a sequence of estimates indexed by *n*, and consistency is a property of what occurs as the sample size "grows to infinity". If the sequence of estimates can be mathematically shown to converge in probability to the true value ...
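Convergence in probability can be made concrete with a small simulation: for a fixed tolerance, the chance that the estimate misses the true value by more than that tolerance should shrink as the sample size grows. The sketch below uses the sample mean of normal data; the true value 3.0, the tolerance 0.2, the seed, and the helper name `miss_rate` are illustrative assumptions.

```python
import random

rng = random.Random(1)              # fixed seed so the run is repeatable
theta0, eps, reps = 3.0, 0.2, 500   # assumed true value, tolerance, repetitions

def miss_rate(n):
    """Fraction of simulated samples whose mean lands farther than eps from theta0."""
    misses = 0
    for _ in range(reps):
        estimate = sum(rng.gauss(theta0, 1.0) for _ in range(n)) / n
        if abs(estimate - theta0) > eps:
            misses += 1
    return misses / reps

# Empirical stand-in for P(|estimate - theta0| > eps) at increasing sample sizes.
rates = {n: miss_rate(n) for n in (10, 100, 1000)}
print(rates)
```

The printed miss rates should fall toward zero as the sample size increases, which is the behaviour consistency describes.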

Mean Square Error

In statistics, the mean squared error (MSE) or mean squared deviation (MSD) of an estimator (of a procedure for estimating an unobserved quantity) measures the average of the squares of the errors, that is, the average squared difference between the estimated values and the true value. MSE is a risk function, corresponding to the expected value of the squared error loss. The fact that MSE is almost always strictly positive (and not zero) is because of randomness or because the estimator does not account for information that could produce a more accurate estimate. In machine learning, specifically empirical risk minimization, MSE may refer to the *empirical* risk (the average loss on an observed data set), as an estimate of the true MSE (the true risk: the average loss on the actual population distribution). The MSE is a measure of the quality of an estimator. As it is derived from the square of Euclidean distance, it is always a positive value that decreases as the error app ...
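The MSE of an estimator splits into its variance plus the square of its bias. The sketch below checks this decomposition numerically on simulated estimates; the true value 5.0, the deliberately biased estimator (the sample mean shrunk by a factor of 0.9), and the seed are illustrative assumptions, not part of the definition.

```python
import random
from statistics import fmean, pvariance

rng = random.Random(2)   # fixed seed so the run is repeatable
theta = 5.0              # assumed true value of the parameter
n, reps = 10, 5000

# A deliberately biased estimator: the sample mean shrunk toward zero.
estimates = []
for _ in range(reps):
    sample = [rng.gauss(theta, 1.0) for _ in range(n)]
    estimates.append(0.9 * fmean(sample))

mse = fmean((e - theta) ** 2 for e in estimates)  # average squared error
bias = fmean(estimates) - theta                   # average error
variance = pvariance(estimates)                   # spread around the estimates' own mean

# The two printed numbers agree up to floating-point rounding.
print(mse, variance + bias ** 2)
```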

Estimation Theory

Estimation theory is a branch of statistics that deals with estimating the values of parameters based on measured empirical data that has a random component. The parameters describe an underlying physical setting in such a way that their value affects the distribution of the measured data. An *estimator* attempts to approximate the unknown parameters using the measurements. In estimation theory, two approaches are generally considered:

* The probabilistic approach (described in this article) assumes that the measured data is random with probability distribution dependent on the parameters of interest.
* The set-membership approach assumes that the measured data vector belongs to a set which depends on the parameter vector.

For example, it is desired to estimate the proportion of a population of voters who will vote for a particular candidate. That proportion is the parameter sought; the estimate is based on a small random sa ...
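The voter example can be sketched as a simulation of the probabilistic approach. The population share of 0.55, the sample size of 1000, and the seed are hypothetical; in a real poll the true share is unknown and only the sample is observed.

```python
import random

rng = random.Random(3)   # fixed seed so the run is repeatable
true_share = 0.55        # hypothetical population proportion, unknown in practice
n = 1000                 # sample size

# Each sampled voter supports the candidate with probability true_share.
sample = [1 if rng.random() < true_share else 0 for _ in range(n)]

p_hat = sum(sample) / n                        # estimate of the proportion
std_error = (p_hat * (1 - p_hat) / n) ** 0.5   # estimated standard error of p_hat
print(p_hat, std_error)
```

The estimate varies from sample to sample, and the standard error gives a rough sense of how much.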

Statistics

Statistics (from German: *Statistik*, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Estimate

Estimation (or estimating) is the process of finding an estimate or approximation, which is a value that is usable for some purpose even if input data may be incomplete, uncertain, or unstable. The value is nonetheless usable because it is derived from the best information available (C. Lon Enloe, Elizabeth Garnett, Jonathan Miles, *Physical Science: What the Technology Professional Needs to Know* (2000), p. 47). Typically, estimation involves "using the value of a statistic derived from a sample to estimate the value of a corresponding population parameter" (Raymond A. Kent, "Estimation", *Data Construction and Data Analysis for Survey Research* (2001), p. 157). The sample provides information that can be projected, through various formal or informal processes, to determine a range most likely to describe the missing information. An estimate that turns out to be incorrect will be an overestimate if the estimate exceeds the actual result and an underestimate if the estimate fa ...

Parameter Space

The parameter space is the space of all possible parameter values that define a particular mathematical model. It is also sometimes called weight space, and is often a subset of finite-dimensional Euclidean space. In statistics, parameter spaces are particularly useful for describing parametric families of probability distributions. They also form the background for parameter estimation. In the case of extremum estimators for parametric models, a certain objective function is maximized or minimized over the parameter space. Theorems of existence and consistency of such estimators require some assumptions about the topology of the parameter space. For instance, compactness of the parameter space, together with continuity of the objective function, suffices for the existence of an extremum estimator. Sometimes, parameters are analyzed to view how they affect their statistical model. In that context, they can be viewed as inputs of a function, in which case the technical term for ...
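An extremum estimator can be sketched as a search over the parameter space for the value that maximizes an objective function. The example below is a simplified illustration: the data (7 successes in 10 Bernoulli trials) are hypothetical, the objective is the Bernoulli log-likelihood, and the parameter space [0, 1] is approximated by a finite grid rather than searched exactly.

```python
from math import log

successes, trials = 7, 10   # hypothetical Bernoulli data

def log_likelihood(p):
    """Objective function: Bernoulli log-likelihood of the data at parameter p."""
    return successes * log(p) + (trials - successes) * log(1 - p)

# Parameter space: the interval [0, 1], approximated by a grid that avoids
# the endpoints, where the logarithm is undefined.
grid = [i / 100 for i in range(1, 100)]

# Extremum estimate: the grid point that maximizes the objective.
theta_hat = max(grid, key=log_likelihood)
print(theta_hat)
```

For this objective the maximizer coincides with the sample proportion, 7/10, so the grid search returns 0.7.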