
Estimation Of Distribution Algorithm

''Estimation of distribution algorithms'' (EDAs), sometimes called ''probabilistic model-building genetic algorithms'' (PMBGAs), are stochastic optimization methods that guide the search for the optimum by building and sampling explicit probabilistic models of promising candidate solutions. Optimization is viewed as a series of incremental updates of a probabilistic model, starting with a model that encodes an uninformative prior over admissible solutions and ending with a model that generates only the global optima. EDAs belong to the class of evolutionary algorithms. The main difference between EDAs and most conventional evolutionary algorithms is that the latter generate new candidate solutions using an ''implicit'' distribution defined by one or more variation operators, whereas EDAs use an ''explicit'' probability distribution encoded by a Bayesian network, a multivariate normal distribution, or another model class. Like other evolutionary algorithms, EDAs ...
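
The build-and-sample loop described above can be sketched with the simplest possible model, an independent probability per bit. This is a minimal illustration, not a reference implementation: the function names, the OneMax toy objective, the population sizes, and the clamping bounds are all assumptions chosen for the example.

```python
import random

def onemax(bits):
    """Toy objective (an assumption for this sketch): number of ones."""
    return sum(bits)

def univariate_eda(n_bits=20, pop_size=100, n_select=30, generations=50, seed=0):
    rng = random.Random(seed)
    # Uninformative starting model: every bit is 1 with probability 0.5.
    probs = [0.5] * n_bits
    best = None
    for _ in range(generations):
        # Sample a population from the explicit model.
        population = [[1 if rng.random() < p else 0 for p in probs]
                      for _ in range(pop_size)]
        # Keep the most promising candidates (truncation selection).
        population.sort(key=onemax, reverse=True)
        selected = population[:n_select]
        if best is None or onemax(selected[0]) > onemax(best):
            best = selected[0]
        # Re-estimate the model from the selected candidates.
        probs = [sum(ind[i] for ind in selected) / n_select
                 for i in range(n_bits)]
        # Clamp so that no bit value becomes impossible to sample.
        probs = [min(max(p, 0.05), 0.95) for p in probs]
    return best, probs

best, probs = univariate_eda()
print(onemax(best), [round(p, 2) for p in probs])
```

A richer model class, such as a Bayesian network, would replace the per-bit probabilities while the sample, select, and re-estimate steps keep the same shape.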

Eda Mono-variant Gauss Iterations

EDA or Eda may refer to:

Computing
* Electronic design automation
* Enterprise Desktop Alliance, a computer technology consortium
* Enterprise digital assistant
* Estimation of distribution algorithm
* Event-driven architecture
* Exploratory data analysis

Government and politics
* Economic Development Administration, an agency of the United States government
* Election Defense Alliance, an American voting integrity organization
* European Defence Agency, a branch of the European Union
* European Democratic Alliance, a former political group in the European Parliament
* Federal Department of Foreign Affairs, a branch of the government of Switzerland
* Spanish Air Force, the air force of Spain
* United Democratic Left (1951-1967, 1977-1985), a former Greek political party
* Electoral District Association, a local unit of a political party in Canada

People
* Eda (given name), a given name
* Eda (surname), a Japanese surname

Places
* Eda, Sweden
* Eda glasbru ...

Graphical Model

A graphical model or probabilistic graphical model (PGM) or structured probabilistic model is a probabilistic model for which a graph expresses the conditional dependence structure between random variables. Graphical models are commonly used in probability theory, statistics (particularly Bayesian statistics), and machine learning.

Types of graphical models

Generally, probabilistic graphical models use a graph-based representation as the foundation for encoding a distribution over a multi-dimensional space and a graph that is a compact or factorized representation of a set of independences that hold in the specific distribution. Two branches of graphical representations of distributions are commonly used, namely, Bayesian networks and Markov random fields. Both families encompass the properties of factorization and independences, but they differ in the set of independences they can encode and the factorization of the distribution that they induce.

Undirected Graphical Model

The undire ...

Cross-entropy Method

The cross-entropy (CE) method is a Monte Carlo method for importance sampling and optimization. It is applicable to both combinatorial and continuous problems, with either a static or noisy objective. The method approximates the optimal importance sampling estimator by repeating two phases (Rubinstein, R.Y. and Kroese, D.P. (2004), The Cross-Entropy Method: A Unified Approach to Combinatorial Optimization, Monte-Carlo Simulation, and Machine Learning, Springer-Verlag, New York):
# Draw a sample from a probability distribution.
# Minimize the ''cross-entropy'' between this distribution and a target distribution to produce a better sample in the next iteration.
Reuven Rubinstein developed the method in the context of ''rare event simulation'', where tiny probabilities must be estimated, for example in network reliability analysis, queueing models, or performance analysis of telecommunication systems. The method has also been applied to the traveling salesman, quadratic assignmen ...
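
The two phases can be sketched for a one-dimensional continuous problem. The sketch assumes a Gaussian sampling distribution, for which the cross-entropy minimization step reduces to refitting the mean and standard deviation to the best ("elite") samples. The function name, parameter defaults, and test objective are hypothetical choices for this illustration.

```python
import random
import statistics

def cross_entropy_maximize(f, mu=0.0, sigma=5.0, n_samples=100, n_elite=10,
                           iterations=20, seed=0):
    rng = random.Random(seed)
    for _ in range(iterations):
        # Phase 1: draw a sample from the current distribution.
        samples = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        # Phase 2: refit the Gaussian to the elite samples. For a Gaussian
        # family this is the cross-entropy-minimizing update.
        elite = sorted(samples, key=f, reverse=True)[:n_elite]
        mu = statistics.fmean(elite)
        # Small floor keeps sigma strictly positive for the next draw.
        sigma = statistics.pstdev(elite) + 1e-12
    return mu, sigma

# Assumed test objective with its maximum at x = 2.
mu, sigma = cross_entropy_maximize(lambda x: -(x - 2.0) ** 2)
print(mu, sigma)
```

In practice the update is often smoothed between iterations to slow the collapse of sigma; that refinement is left out here to keep the two phases visible.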

CMA-ES

Covariance matrix adaptation evolution strategy (CMA-ES) is a particular kind of strategy for numerical optimization. Evolution strategies (ES) are stochastic, derivative-free methods for numerical optimization of non-linear or non-convex continuous optimization problems. They belong to the class of evolutionary algorithms and evolutionary computation. An evolutionary algorithm is broadly based on the principle of biological evolution, namely the repeated interplay of variation (via recombination and mutation) and selection: in each generation (iteration) new individuals (candidate solutions, denoted as x) are generated by variation, usually in a stochastic way, of the current parental individuals. Then, some individuals are selected to become the parents in the next generation based on their fitness or objective function value f(x). In this way, over the generation sequence, individuals with better and better f-values are generated. In an evolution strategy, new candidate ...

Hierarchical Clustering

In data mining and statistics, hierarchical clustering (also called hierarchical cluster analysis or HCA) is a method of cluster analysis that seeks to build a hierarchy of clusters. Strategies for hierarchical clustering generally fall into two categories:
* Agglomerative: This is a "bottom-up" approach: Each observation starts in its own cluster, and pairs of clusters are merged as one moves up the hierarchy.
* Divisive: This is a "top-down" approach: All observations start in one cluster, and splits are performed recursively as one moves down the hierarchy.
In general, the merges and splits are determined in a greedy manner. The results of hierarchical clustering are usually presented in a dendrogram. The standard algorithm for hierarchical agglomerative clustering (HAC) has a time complexity of \mathcal{O}(n^3) and requires \Omega(n^2) memory, which makes it too slow for even medium data sets. However, for some special cases, optimal efficient agglomerative methods (of comp ...
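
The agglomerative ("bottom-up") strategy can be illustrated with a deliberately naive sketch on one-dimensional points. It assumes single linkage (the distance between two clusters is the smallest distance between their members) and stops at a requested number of clusters instead of building the full dendrogram. The function name and the sample data are made up for the example, and no attempt is made at efficiency.

```python
def agglomerative(points, n_clusters):
    # Each observation starts in its own cluster.
    clusters = [[p] for p in points]

    def linkage(a, b):
        # Single linkage: smallest pairwise distance between two clusters.
        return min(abs(x - y) for x in a for y in b)

    while len(clusters) > n_clusters:
        # Greedy step: find the closest pair of clusters.
        pairs = ((i, j) for i in range(len(clusters))
                 for j in range(i + 1, len(clusters)))
        i, j = min(pairs, key=lambda ij: linkage(clusters[ij[0]], clusters[ij[1]]))
        # Merge the pair; i < j, so deleting j leaves index i valid.
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters

print(agglomerative([1.0, 1.2, 5.0, 5.1, 9.0], 3))
# → [[1.0, 1.2], [5.0, 5.1], [9.0]]
```

Recording each merge and its linkage distance, rather than stopping early, would give the data needed to draw a dendrogram.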

Family Of Sets

In set theory and related branches of mathematics, a collection F of subsets of a given set S is called a family of subsets of S, or a family of sets over S. More generally, a collection of any sets whatsoever is called a family of sets, set family, or a set system. The term "collection" is used here because, in some contexts, a family of sets may be allowed to contain repeated copies of any given member, and in other contexts it may form a proper class rather than a set. A finite family of subsets of a finite set S is also called a ''hypergraph''. The subject of extremal set theory concerns the largest and smallest examples of families of sets satisfying certain restrictions.

Examples

The set of all subsets of a given set S is called the power set of S and is denoted by \wp(S). The power set \wp(S) of a given set S is a family of sets over S. A subset of S having k elements is called a k-subset of S. The k-subsets S^{(k)} of a set S form a family of sets. ...
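
The power set and the k-subsets mentioned above can be enumerated directly for small finite sets. The following sketch uses `itertools.combinations` from the Python standard library; the helper names `k_subsets` and `power_set` are chosen for this example, and the elements are assumed to be sortable so that the output order is deterministic.

```python
from itertools import combinations

def k_subsets(s, k):
    """All subsets of s with exactly k elements, as frozensets."""
    return [frozenset(c) for c in combinations(sorted(s), k)]

def power_set(s):
    """All subsets of s, collected by increasing size k = 0, 1, ..., |s|."""
    return [sub for k in range(len(s) + 1) for sub in k_subsets(s, k)]

S = {1, 2, 3}
print(len(power_set(S)))   # 2**3 subsets → 8
print(k_subsets(S, 2))     # the three 2-subsets of S
```

Both results are families of sets over S in the sense defined above.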

Joint Probability Distribution

Given two random variables that are defined on the same probability space, the joint probability distribution is the corresponding probability distribution on all possible pairs of outputs. The joint distribution can just as well be considered for any given number of random variables. The joint distribution encodes the marginal distributions, i.e. the distributions of each of the individual random variables. It also encodes the conditional probability distributions, which deal with how the outputs of one random variable are distributed when given information on the outputs of the other random variable(s). In the formal mathematical setup of measure theory, the joint distribution is given by the pushforward measure, by the map obtained by pairing together the given random variables, of the sample space's probability measure. In the case of real-valued random variables, the joint distribution, as a particular multivariate distribution, may be expressed by a multivariate cum ...
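
How a joint distribution encodes the marginal and conditional distributions can be seen in a small discrete case. The table below is invented for illustration: two binary random variables whose joint probabilities sum to 1, stored as exact fractions. The helper names `marginal` and `conditional` are likewise assumptions of this sketch.

```python
from collections import defaultdict
from fractions import Fraction

# Hypothetical joint distribution over pairs (x, y).
JOINT = {
    (0, 0): Fraction(1, 2),
    (0, 1): Fraction(1, 4),
    (1, 0): Fraction(1, 8),
    (1, 1): Fraction(1, 8),
}

def marginal(joint, axis):
    """Sum the joint probabilities over the other variable."""
    out = defaultdict(Fraction)
    for outcome, p in joint.items():
        out[outcome[axis]] += p
    return dict(out)

def conditional(joint, given_axis, given_value):
    """Distribution of the other variable, given one variable's value."""
    other = 1 - given_axis
    norm = marginal(joint, given_axis)[given_value]
    return {o[other]: p / norm
            for o, p in joint.items() if o[given_axis] == given_value}

print(marginal(JOINT, 0))        # P(X=0) = 3/4, P(X=1) = 1/4
print(conditional(JOINT, 0, 1))  # P(Y=0 | X=1) = 1/2, P(Y=1 | X=1) = 1/2
```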

Learning Rate

In machine learning and statistics, the learning rate is a tuning parameter in an optimization algorithm that determines the step size at each iteration while moving toward a minimum of a loss function. Since it influences to what extent newly acquired information overrides old information, it metaphorically represents the speed at which a machine learning model "learns". In the adaptive control literature, the learning rate is commonly referred to as gain. In setting a learning rate, there is a trade-off between the rate of convergence and overshooting. While the descent direction is usually determined from the gradient of the loss function, the learning rate determines how big a step is taken in that direction. Too high a learning rate will make the learning jump over minima, but too low a learning rate will either take too long to converge or get stuck in an undesirable local minimum. In order to ach ...
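
The trade-off between slow convergence and overshooting shows up even on the simplest loss. The sketch below runs plain gradient descent on the assumed toy loss f(x) = x^2, whose gradient is 2x; the function name, starting point, and step count are illustrative choices.

```python
def gradient_descent(learning_rate, x0=1.0, steps=50):
    """Minimize f(x) = x**2 by repeatedly stepping against the gradient."""
    x = x0
    for _ in range(steps):
        grad = 2.0 * x              # derivative of x**2
        x -= learning_rate * grad   # step size is set by the learning rate
    return x

for lr in (0.001, 0.1, 1.1):
    print(lr, gradient_descent(lr))
```

Each step multiplies x by (1 - 2 * learning_rate). With 0.001 the iterate has barely moved after 50 steps, with 0.1 it is very close to the minimum at 0, and with 1.1 the factor has magnitude 1.2, so every step overshoots and the iterate grows.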

Stochastic Optimization

Stochastic optimization (SO) methods are optimization methods that generate and use random variables. For stochastic problems, the random variables appear in the formulation of the optimization problem itself, which involves random objective functions or random constraints. Stochastic optimization methods also include methods with random iterates. Some stochastic optimization methods use random iterates to solve stochastic problems, combining both meanings of stochastic optimization. Stochastic optimization methods generalize deterministic methods for deterministic problems.

Methods for stochastic functions

Partly random input data arise in such areas as real-time estimation and control, simulation-based optimization where Monte Carlo simulations are run as estimates of an actual system, and problems where there is experimental (random) error in the measurements of the criterion. In such cases, knowledge that the function values are contaminated by random "noise" leads natural ...

Population-based Incremental Learning

In computer science and machine learning, population-based incremental learning (PBIL) is an optimization algorithm and an estimation of distribution algorithm. It is a type of genetic algorithm in which the genotype of an entire population (a probability vector) is evolved rather than individual members. The algorithm was proposed by Shumeet Baluja in 1994. It is simpler than a standard genetic algorithm and in many cases leads to better results.

Algorithm

In PBIL, genes are represented as real values in the range [0,1], indicating the probability that any particular allele appears in that gene. The PBIL algorithm is as follows:
# A population is generated from the probability vector.
# The fitness of each member is evaluated and ranked.
# Update population genotype (probability vector) based on fittest individual.
# Mutate.
# Repeat steps 1–4

Source code

This is a part of source code implemented in Java. In the paper, learnRate = ...
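
The five steps listed above can be sketched as follows. This is a Python illustration written for this summary, not the Java code the excerpt refers to, and the parameter values (`learn_rate`, `mut_prob`, `mut_shift`, population size, generation count) are placeholder assumptions rather than the settings from the paper.

```python
import random

def pbil(fitness, n_bits=16, pop_size=50, learn_rate=0.1, mut_prob=0.02,
         mut_shift=0.05, generations=100, seed=0):
    rng = random.Random(seed)
    # The "population genotype": one probability per gene, initially 0.5.
    probs = [0.5] * n_bits
    best, best_fit = None, float("-inf")
    for _ in range(generations):
        # Step 1: generate a population from the probability vector.
        population = [[1 if rng.random() < p else 0 for p in probs]
                      for _ in range(pop_size)]
        # Step 2: evaluate fitness and pick the fittest member.
        winner = max(population, key=fitness)
        if fitness(winner) > best_fit:
            best, best_fit = winner, fitness(winner)
        # Step 3: move the probability vector toward the fittest member.
        probs = [p * (1 - learn_rate) + bit * learn_rate
                 for p, bit in zip(probs, winner)]
        # Step 4: mutate by nudging a few entries toward a random 0 or 1.
        probs = [p * (1 - mut_shift) + rng.randint(0, 1) * mut_shift
                 if rng.random() < mut_prob else p
                 for p in probs]
        # Step 5: repeat.
    return best, probs

best, probs = pbil(sum)  # fitness = number of ones (assumed toy objective)
print(sum(best), [round(p, 2) for p in probs])
```

Because both the update and the mutation are convex combinations of values in [0,1], every entry of the probability vector stays in that range.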

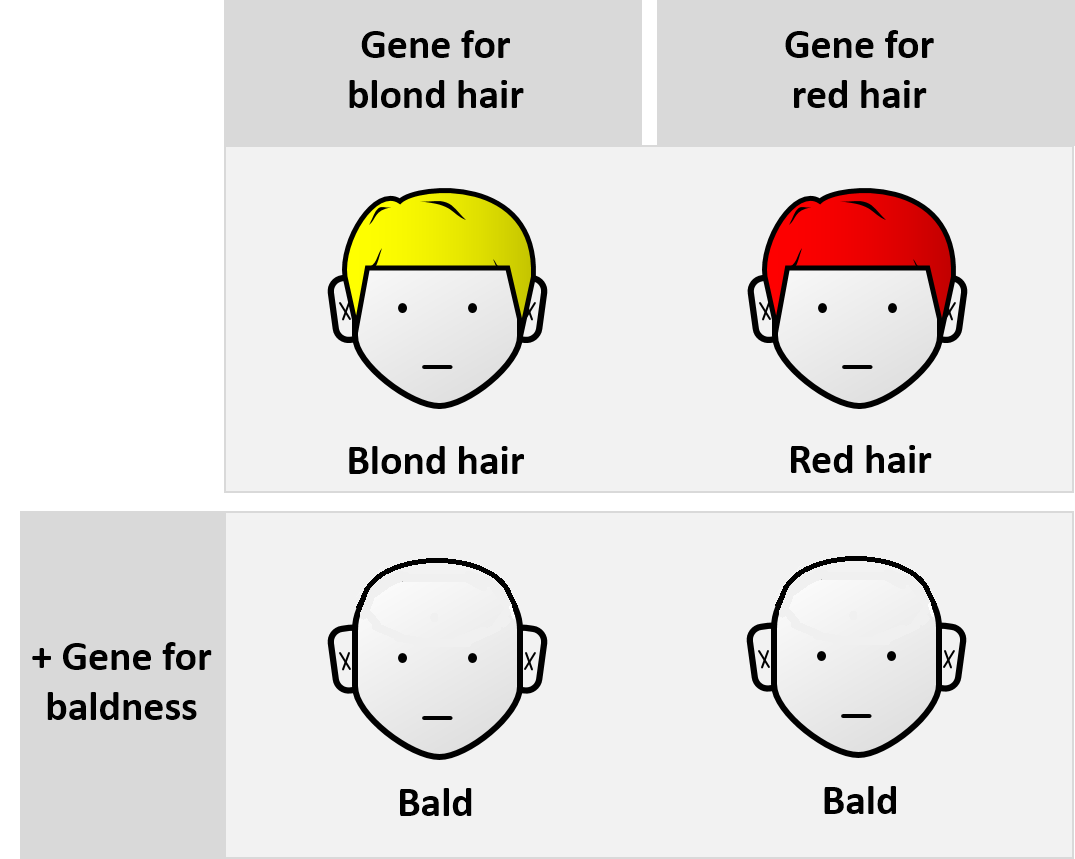

Epistasis

Epistasis is a phenomenon in genetics in which the effect of a gene mutation is dependent on the presence or absence of mutations in one or more other genes, termed modifier genes. In other words, the effect of the mutation is dependent on the genetic background in which it appears. Epistatic mutations therefore have different effects on their own than when they occur together. Originally, the term ''epistasis'' specifically meant that the effect of a gene variant is masked by that of a different gene. The concept of ''epistasis'' originated in genetics in 1907 but is now used in biochemistry, computational biology and evolutionary biology. The phenomenon arises due to interactions, either between genes (such as mutations also being needed in regulators of gene expression) or within them (multiple mutations being needed before the gene loses function), leading to non-linear effects. Epistasis has a great influence on the shape of evolutionary landscapes, which lea ...