
Error Ellipse

In statistics, a confidence region is a multi-dimensional generalization of a confidence interval. For a bivariate normal distribution, it is an ellipse, also known as the error ellipse. More generally, it is a set of points in an ''n''-dimensional space, often represented as a hyperellipsoid around a point which is an estimated solution to a problem, although other shapes can occur.

Interpretation

The confidence region is calculated in such a way that if a set of measurements were repeated many times and a confidence region calculated in the same way on each set of measurements, then a certain percentage of the time (e.g. 95%) the confidence region would include the point representing the "true" values of the set of variables being estimated. However, unless certain assumptions about prior probabilities are made, it does not mean, when one confidence region has been calculated, that there is a 95% probability that the "true" values lie inside the region, since we do not ass ...
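As a rough illustration of the bivariate normal case, the sketch below computes the semi-axes and orientation of an error ellipse from a 2×2 covariance matrix. It assumes the covariance is known exactly rather than estimated, and it uses the closed form of the chi-squared quantile with 2 degrees of freedom; the function name `error_ellipse` and its parameters are hypothetical.

```python
import math

def error_ellipse(sxx, syy, sxy, confidence=0.95):
    """Return (semi_major, semi_minor, angle_rad) for the confidence ellipse
    of a bivariate normal with covariance [[sxx, sxy], [sxy, syy]].

    Assumption: the covariance is treated as known, not estimated.
    """
    # Chi-squared quantile with 2 degrees of freedom: -2 * ln(1 - p).
    scale = -2.0 * math.log(1.0 - confidence)
    # Eigenvalues of the symmetric 2x2 covariance matrix.
    mean = (sxx + syy) / 2.0
    half_diff = math.hypot((sxx - syy) / 2.0, sxy)
    lam_major, lam_minor = mean + half_diff, mean - half_diff
    # Orientation of the major axis, measured from the x-axis.
    angle = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return math.sqrt(scale * lam_major), math.sqrt(scale * lam_minor), angle

# Uncorrelated errors with standard deviations 2 and 1.
semi_major, semi_minor, angle = error_ellipse(4.0, 1.0, 0.0)
```

With uncorrelated errors the ellipse is axis-aligned and the ratio of the semi-axes equals the ratio of the standard deviations.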

Statistics

Statistics (from German: ''Statistik'', "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Statistical Significance

In statistical hypothesis testing, a result has statistical significance when a result at least as "extreme" would be very infrequent if the null hypothesis were true. More precisely, a study's defined significance level, denoted by \alpha, is the probability of the study rejecting the null hypothesis, given that the null hypothesis is true; and the ''p''-value of a result, ''p'', is the probability of obtaining a result at least as extreme, given that the null hypothesis is true. The result is said to be ''statistically significant'', by the standards of the study, when p \le \alpha. The significance level for a study is chosen before data collection, and is typically set to 5% or much lower, depending on the field of study. In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error al ...
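The decision rule p \le \alpha can be made concrete with a small sketch. The example assumes a one-sided exact binomial test of a coin's fairness; the function name `binomial_p_value` and the data (9 heads in 10 tosses) are illustrative choices, not taken from the text above.

```python
from math import comb

def binomial_p_value(successes, trials, p_null=0.5):
    """One-sided p-value: P(X >= successes) for X ~ Binomial(trials, p_null)."""
    return sum(
        comb(trials, k) * p_null**k * (1 - p_null) ** (trials - k)
        for k in range(successes, trials + 1)
    )

alpha = 0.05                       # significance level, fixed before seeing data
p = binomial_p_value(9, 10)        # 9 heads in 10 tosses, null: fair coin
significant = p <= alpha           # the decision rule from the text
```

Here the p-value is 11/1024, about 0.0107, so the result would count as statistically significant at the 5% level but not at a 1% level.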

Confidence Band

A confidence band is used in statistical analysis to represent the uncertainty in an estimate of a curve or function based on limited or noisy data. Similarly, a prediction band is used to represent the uncertainty about the value of a new data-point on the curve, but subject to noise. Confidence and prediction bands are often used as part of the graphical presentation of results of a regression analysis. Confidence bands are closely related to confidence intervals, which represent the uncertainty in an estimate of a single numerical value. "As confidence intervals, by construction, only refer to a single point, they are narrower (at this point) than a confidence band which is supposed to hold simultaneously at many points."

Pointwise and simultaneous confidence bands

Suppose our aim is to estimate a function ''f''(''x''). For example, ''f''(''x'') might be the proportion of people of a particular age ''x'' who support a given candidate in an election. If ''x'' is measured at ...

Linear Regression

In statistics, linear regression is a model that estimates the relationship between a scalar response (dependent variable) and one or more explanatory variables (regressor or independent variable). A model with exactly one explanatory variable is a ''simple linear regression''; a model with two or more explanatory variables is a multiple linear regression. This term is distinct from multivariate linear regression, which predicts multiple correlated dependent variables rather than a single dependent variable. In linear regression, the relationships are modeled using linear predictor functions whose unknown model parameters are estimated from the data. Most commonly, the conditional mean of the response given the values of the explanatory variables (or predictors) is assumed to be an affine function of those values; less commonly, the conditional median or some other quantile is used. Like all forms of regression analysis, ...
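For the simple (one explanatory variable) case, the parameters can be estimated in closed form by ordinary least squares. The sketch below assumes that estimation method and uses a hypothetical helper name, `fit_simple_linear_regression`; the data are made up for illustration.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-deviations and sum of squared deviations of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Points lying exactly on y = 1 + 2x.
intercept, slope = fit_simple_linear_regression([0, 1, 2, 3], [1, 3, 5, 7])
```

Because the example points lie exactly on a line, the fit recovers that line; with noisy data the same formulas give the line minimizing the sum of squared residuals.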

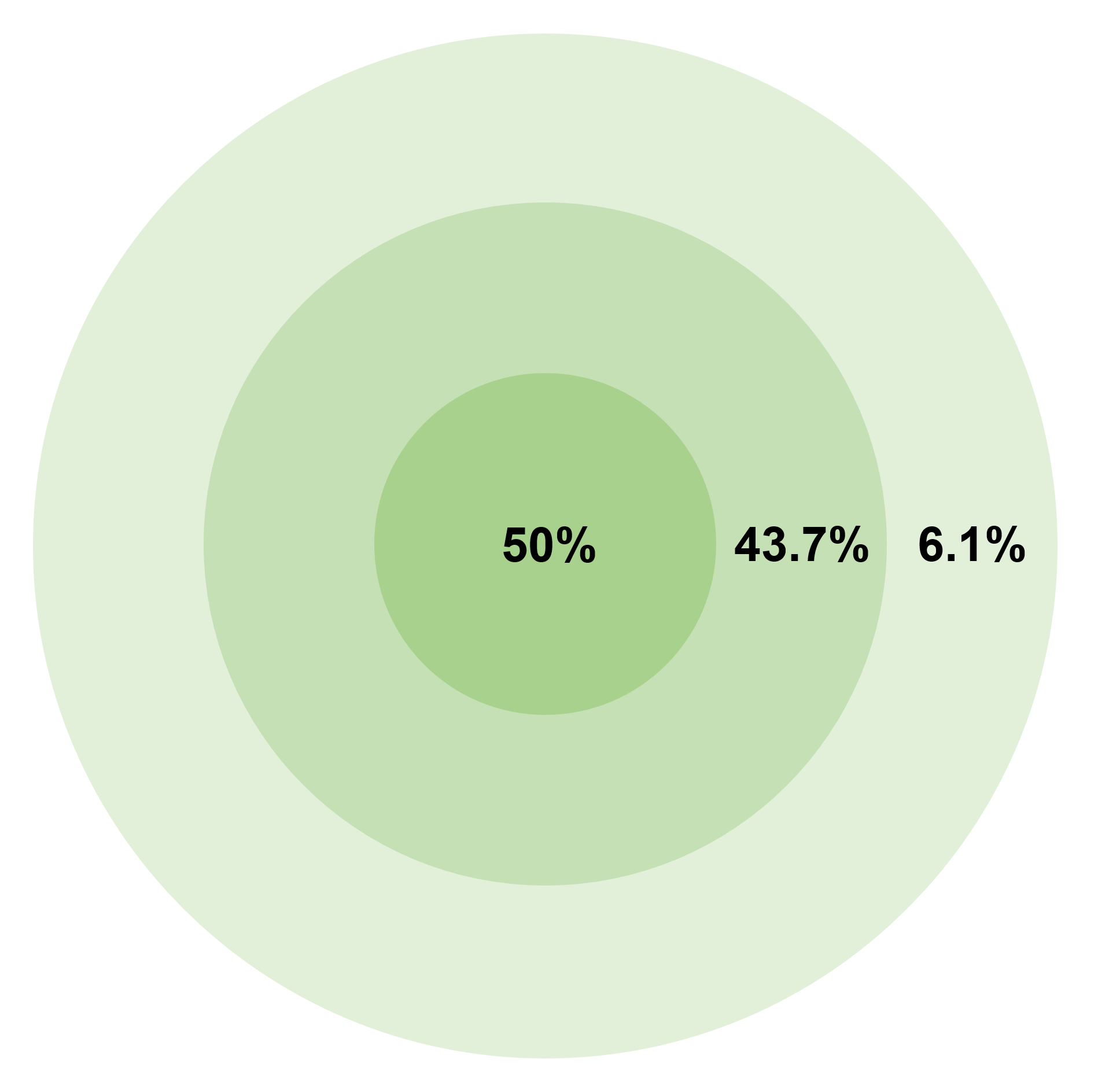

Circular Error Probable

Circular error probable (CEP) (Circular Error Probable (CEP), Air Force Operational Test and Evaluation Center Technical Paper 6, Ver 2, July 1987, p. 1), also circular error probability or circle of equal probability, is a measure of a weapon system's precision in the military science of ballistics. It is defined as the radius of a circle, centered on the aimpoint, that is expected to enclose the landing points of 50% of the rounds; said otherwise, it is the median error radius, which is a 50% confidence interval. That is, if a given munitions design has a CEP of 100 m, when 100 munitions are targeted at the same point, an average of 50 will fall within a circle with a radius of 100 m about that point. There are associated concepts, such as the DRMS (distance root mean square), which is the square root of the average squared distance error, a form of the standard deviation. Another is the R95, which is the radius of the circle ...
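The definitions of CEP (median error radius) and DRMS (square root of the average squared distance error) can be sketched directly from a list of impact coordinates. The function name `cep_and_drms` and the sample points are hypothetical, and the empirical median is only an estimate of the CEP for the underlying distribution.

```python
import math
import statistics

def cep_and_drms(impacts, aimpoint=(0.0, 0.0)):
    """Empirical CEP (median miss distance) and DRMS from (x, y) impacts."""
    ax, ay = aimpoint
    # Radial miss distance of each impact from the aimpoint.
    radii = [math.hypot(x - ax, y - ay) for x, y in impacts]
    cep = statistics.median(radii)
    drms = math.sqrt(sum(r * r for r in radii) / len(radii))
    return cep, drms

# Four illustrative impacts with miss distances 5, 1, 10 and 2.
cep, drms = cep_and_drms([(3, 4), (0, 1), (6, 8), (0, 2)])
```

For these points the median miss distance is 3.5 and the DRMS is the square root of 32.5, which shows how a single large miss inflates DRMS more than CEP.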

Bootstrapping (statistics)

Bootstrapping is a procedure for estimating the distribution of an estimator by resampling (often with replacement) one's data or a model estimated from the data. Bootstrapping assigns measures of accuracy (bias, variance, confidence intervals, prediction error, etc.) to sample estimates. This technique allows estimation of the sampling distribution of almost any statistic using random sampling methods. Bootstrapping estimates the properties of an estimand (such as its variance) by measuring those properties when sampling from an approximating distribution. One standard choice for an approximating distributi ...
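A minimal sketch of the resampling idea follows, assuming the percentile method for the confidence interval and resampling with replacement from the observed data. The function name `bootstrap_percentile_ci`, its defaults, and the sample data are illustrative assumptions.

```python
import random
import statistics

def bootstrap_percentile_ci(data, stat=statistics.mean,
                            n_resamples=2000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for stat(data)."""
    rng = random.Random(seed)          # fixed seed for reproducibility
    n = len(data)
    # Recompute the statistic on resamples drawn with replacement.
    estimates = sorted(
        stat(rng.choices(data, k=n)) for _ in range(n_resamples)
    )
    lo_idx = int((1 - level) / 2 * n_resamples)
    hi_idx = int((1 + level) / 2 * n_resamples) - 1
    return estimates[lo_idx], estimates[hi_idx]

data = [2.1, 3.4, 1.9, 5.0, 4.2, 3.3, 2.8, 4.9, 3.7, 3.0]
lower, upper = bootstrap_percentile_ci(data)
```

Other statistics (for example `statistics.median`) can be passed as `stat` without changing the procedure, which is the main appeal of the approach.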

Linear Approximation

In mathematics, a linear approximation is an approximation of a general function using a linear function (more precisely, an affine function). Linear approximations are widely used in the method of finite differences to produce first order methods for solving or approximating solutions to equations.

Definition

Given a twice continuously differentiable function f of one real variable, Taylor's theorem for the case n = 1 states that f(x) = f(a) + f'(a)(x - a) + R_2, where R_2 is the remainder term. The linear approximation is obtained by dropping the remainder: f(x) \approx f(a) + f'(a)(x - a). This is a good approximation when x is close enough to a, since a curve, when closely observed, will begin to resemble a straight line. Therefore, the expression on the right-hand side is just the equation for the tangent line to the graph of f at (a,f(a)). For this reason, this process is also called the tangent line approximation. Linear approximations in this case are ...
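The tangent line formula f(x) \approx f(a) + f'(a)(x - a) can be tried on a concrete function. The sketch below approximates the square root near a = 4; the helper name `linear_approximation` is hypothetical, and the derivative is supplied by hand rather than computed.

```python
import math

def linear_approximation(f, df, a):
    """Return the tangent-line approximation of f at a, given its derivative df."""
    fa, slope = f(a), df(a)
    return lambda x: fa + slope * (x - a)

# sqrt(x) near a = 4: f(a) = 2 and f'(a) = 1 / (2 * sqrt(4)) = 0.25.
approx_sqrt = linear_approximation(math.sqrt, lambda x: 0.5 / math.sqrt(x), 4.0)
estimate = approx_sqrt(4.1)        # 2 + 0.25 * 0.1
error = abs(estimate - math.sqrt(4.1))
```

The estimate is about 2.025 while the true value is about 2.02485, so the dropped remainder R_2 is on the order of 10^-4 at this distance from a.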

Chi-squared Distribution

In probability theory and statistics, the \chi^2-distribution with k degrees of freedom is the distribution of a sum of the squares of k independent standard normal random variables. The chi-squared distribution \chi^2_k is a special case of the gamma distribution and the univariate Wishart distribution. Specifically, if X \sim \chi^2_k then X \sim \text{Gamma}(\alpha=\frac{k}{2}, \theta=2) (where \alpha is the shape parameter and \theta the scale parameter of the gamma distribution) and X \sim \text{W}_1(1,k). The scaled chi-squared distribution s^2 \chi^2_k is a reparametrization of the gamma distribution and the univariate Wishart distribution. Specifically, if X \sim s^2 \chi^2_k then X \sim \text{Gamma}(\alpha=\frac{k}{2}, \theta=2 s^2) and X \sim \text{W}_1(s^2,k). The chi-squared distribution is one of the most widely used probability distributions in inferential statistics, notably in hypothesis testing and in constru ...

Off-diagonal Elements

In geometry, a diagonal is a line segment joining two vertices of a polygon or polyhedron, when those vertices are not on the same edge. Informally, any sloping line is called diagonal. The word ''diagonal'' derives from the ancient Greek διαγώνιος ''diagonios'', "from corner to corner" (from διά- ''dia-'', "through", "across" and γωνία ''gonia'', "corner", related to ''gony'' "knee"); it was used by both Strabo and Euclid to refer to a line connecting two vertices of a rhombus or cuboid, and later adopted into Latin as ''diagonus'' ("slanting line").

Polygons

As applied to a polygon, a diagonal is a line segment joining any two non-consecutive vertices. Therefore, a quadrilateral has two diagonals, joining opposite pairs of vertices. For any convex polygon, all the diagonals are inside the polygon, but for re-entrant polygons, some diagonals are outside of the polygon. Any ''n''-sided polygon (''n'' ≥ 3), convex or concave, has \tfrac{n(n-3)}{2} ''total'' diagona ...

Identity Matrix

In linear algebra, the identity matrix of size n is the n\times n square matrix with ones on the main diagonal and zeros elsewhere. It has unique properties; for example, when the identity matrix represents a geometric transformation, the object remains unchanged by the transformation. In other contexts, it is analogous to multiplying by the number 1.

Terminology and notation

The identity matrix is often denoted by I_n, or simply by I if the size is immaterial or can be trivially determined by the context. I_1 = \begin{bmatrix} 1 \end{bmatrix} ,\ I_2 = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} ,\ I_3 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} ,\ \dots ,\ I_n = \begin{bmatrix} 1 & 0 & 0 & \cdots & 0 \\ 0 & 1 & 0 & \cdots & 0 \\ 0 & 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & 1 \end{bmatrix}. The term unit matrix has also been widely used, but the term ''identity matrix'' is now standard. The term ''unit matrix'' is ambiguous, because it is also used for a matrix of on ...

Covariance

In probability theory and statistics, covariance is a measure of the joint variability of two random variables. The sign of the covariance, therefore, shows the tendency in the linear relationship between the variables. If greater values of one variable mainly correspond with greater values of the other variable, and the same holds for lesser values (that is, the variables tend to show similar behavior), the covariance is positive. In the opposite case, when greater values of one variable mainly correspond to lesser values of the other (that is, the variables tend to show opposite behavior), the covariance is negative. The magnitude of the covariance is the geometric mean of the variances that are in common for the two random variables. The correlation coefficient normalizes the covariance by dividing by the geometric mean of the total variances for the two random variables. A distinction must be made between (1) the covariance of ...
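The sign behavior and the normalization by the geometric mean of the variances can be checked numerically. The sketch below assumes the sample covariance with the n - 1 denominator; the function names and the data are illustrative.

```python
import math

def sample_covariance(xs, ys):
    """Sample covariance with the n - 1 (unbiased) denominator."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)

def correlation(xs, ys):
    """Covariance divided by the geometric mean of the two variances."""
    var_x = sample_covariance(xs, xs)
    var_y = sample_covariance(ys, ys)
    return sample_covariance(xs, ys) / math.sqrt(var_x * var_y)

xs = [1, 2, 3, 4]
cov_up = sample_covariance(xs, [2, 4, 6, 8])     # variables move together
cov_down = sample_covariance(xs, [8, 6, 4, 2])   # variables move oppositely
r_up = correlation(xs, [2, 4, 6, 8])
```

The first covariance is positive and the second negative, while the correlation of the perfectly linear pair is 1 regardless of the scale of either variable.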

Diagonal Matrix

In linear algebra, a diagonal matrix is a matrix in which the entries outside the main diagonal are all zero; the term usually refers to square matrices. Elements of the main diagonal can either be zero or nonzero. An example of a 2×2 diagonal matrix is \begin{bmatrix} 3 & 0 \\ 0 & 2 \end{bmatrix}, while an example of a 3×3 diagonal matrix is \begin{bmatrix} 6 & 0 & 0 \\ 0 & 5 & 0 \\ 0 & 0 & 4 \end{bmatrix}. An identity matrix of any size, or any multiple of it, is a diagonal matrix called a ''scalar matrix'', for example, \begin{bmatrix} 0.5 & 0 \\ 0 & 0.5 \end{bmatrix}. In geometry, a diagonal matrix may be used as a ''scaling matrix'', since matrix multiplication with it results in changing scale (size) and possibly also shape; only a scalar matrix results in uniform change in scale.

Definition

As stated above, a diagonal matrix is a matrix in which all off-diagonal entries are zero. That is, the matrix D = (d_{i,j}) with n columns and n rows is diagonal if \forall i,j \in \{1, 2, \ldots, n\}, i \ne j ...