Entropy

Entropy is a scientific concept, most commonly associated with states of disorder, randomness, or uncertainty. The term and the concept are used in diverse fields, from classical thermodynamics, where it was first recognized, to the microscopic description of nature in statistical physics, and to the principles of information theory. It has found far-ranging applications in chemistry and physics, in biological systems and their relation to life, in cosmology, economics, sociology, weather science, climate change and information systems including the transmission of information in telecommunication. Entropy is central to the second law of thermodynamics, which states that the entropy of an isolated system left to spontaneous evolution cannot decrease with time. As a result, isolated systems evolve toward thermodynamic equilibrium, where the entropy is highest. A consequence of the second law of thermodynamics is that certain processes are irreversible. The thermodynami ...

Second Law Of Thermodynamics

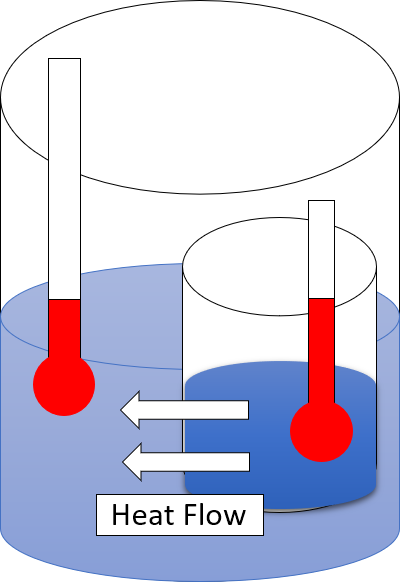

The second law of thermodynamics is a physical law based on universal empirical observation concerning heat and energy interconversions. A simple statement of the law is that heat always flows spontaneously from hotter to colder regions of matter (or 'downhill' in terms of the temperature gradient). Another statement is: "Not all heat can be converted into work in a cyclic process" (Young, H. D.; Freedman, R. A. (2004). University Physics, 11th edition. Pearson. p. 764). The second law of thermodynamics establishes the concept of entropy as a physical property of a thermodynamic system. It predicts whether processes are forbidden despite obeying the requirement of conservation of energy as expressed in the first law of thermodynamics and provides necessary criteria for spontaneous processes. For example, the first law allows the process of a cup falling off a table and breaking on the floor, as well as allowi ...
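
As a rough illustration of why heat flowing "downhill" is consistent with the second law, the sketch below adds up the entropy changes of two bodies when heat passes between them, using the Clausius relation ΔS = Q/T for each body. The function name, the idealization of both bodies as reservoirs at fixed temperature, and the sample numbers are assumptions made for illustration; none of them comes from the text above.

```python
def total_entropy_change(q, t_hot, t_cold):
    """Total entropy change (J/K) when heat q (J) flows from a reservoir
    at t_hot (K) to a reservoir at t_cold (K).

    Assumes both reservoirs are large enough that their temperatures
    stay constant (an idealization for this sketch).
    """
    if t_hot <= 0 or t_cold <= 0:
        raise ValueError("temperatures must be positive kelvin values")
    ds_hot = -q / t_hot    # the hot reservoir gives up heat q
    ds_cold = q / t_cold   # the cold reservoir receives heat q
    return ds_hot + ds_cold


# Hypothetical numbers: 1000 J moving between bodies at 400 K and 300 K.
forward = total_entropy_change(1000.0, t_hot=400.0, t_cold=300.0)
# The reverse flow is the same transfer with the sign of q flipped.
reverse = total_entropy_change(-1000.0, t_hot=400.0, t_cold=300.0)

print(f"hot -> cold: {forward:+.3f} J/K")
print(f"cold -> hot: {reverse:+.3f} J/K")
```

Both directions conserve energy, but only the hot-to-cold transfer gives a positive total entropy change. The cold-to-hot transfer would lower the total entropy of the isolated pair, which is what the second law rules out.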

Information Theory

Information theory is the mathematical study of the quantification, storage, and communication of information. The field was established and formalized by Claude Shannon in the 1940s, though early contributions were made in the 1920s through the works of Harry Nyquist and Ralph Hartley. It is at the intersection of electronic engineering, mathematics, statistics, computer science, neurobiology, physics, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (which has two equally likely outcomes) provides less information (lower entropy, less uncertainty) than identifying the outcome from a roll of a die (which has six equally likely outcomes). Some other important measu ...
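
The coin and die comparison can be checked numerically. The following is a minimal sketch of Shannon entropy for a discrete distribution, H = −Σ p log p; the function name `shannon_entropy` and the choice of base 2 (so the result is in bits) are assumptions made here for illustration.

```python
import math


def shannon_entropy(probs, base=2.0):
    """Shannon entropy of a discrete probability distribution.

    With base=2 the result is measured in bits. Outcomes with zero
    probability contribute nothing to the sum.
    """
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probs must be non-negative and sum to 1")
    return -sum(p * math.log(p, base) for p in probs if p > 0)


coin = [0.5, 0.5]        # fair coin: two equally likely outcomes
die = [1.0 / 6.0] * 6    # fair die: six equally likely outcomes

coin_bits = shannon_entropy(coin)
die_bits = shannon_entropy(die)

print(f"fair coin: {coin_bits:.3f} bits")
print(f"fair die:  {die_bits:.3f} bits")
```

For n equally likely outcomes the sum reduces to log₂ n, so the coin carries 1 bit and the die carries log₂ 6 bits, which is the larger of the two.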

Temperature

Temperature is a physical quantity that quantitatively expresses the attribute of hotness or coldness. Temperature is measured with a thermometer. It reflects the average kinetic energy of the vibrating and colliding atoms making up a substance. Thermometers are calibrated in various temperature scales that historically have relied on various reference points and thermometric substances for definition. The most common scales are the Celsius scale with the unit symbol °C (formerly called centigrade), the Fahrenheit scale (°F), and the Kelvin scale (K), with the third being used predominantly for scientific purposes. The kelvin is one of the seven base units in the International System of Units (SI). Absolute zero, i.e., zero kelvin or −273.15 °C, is the lowest point in the thermodynamic temperature scale. Experimentally, it can be approached very closely but not actually reached, as recognized in the third law of thermodynamics. It would be impossible ...
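
The relationship between the three scales can be sketched in a few lines. The function names below are hypothetical, and the Fahrenheit formula (°F = °C × 9/5 + 32) is the standard conversion rather than something stated in the text above.

```python
ABSOLUTE_ZERO_C = -273.15  # absolute zero expressed in degrees Celsius


def celsius_to_kelvin(celsius):
    """Convert degrees Celsius to kelvin, rejecting values below absolute zero."""
    if celsius < ABSOLUTE_ZERO_C:
        raise ValueError("temperature is below absolute zero")
    return celsius - ABSOLUTE_ZERO_C


def celsius_to_fahrenheit(celsius):
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9.0 / 5.0 + 32.0


# Absolute zero and the freezing point of water on each scale.
for c in (ABSOLUTE_ZERO_C, 0.0):
    k = celsius_to_kelvin(c)
    f = celsius_to_fahrenheit(c)
    print(f"{c:8.2f} °C = {k:7.2f} K = {f:8.2f} °F")
```

The check against `ABSOLUTE_ZERO_C` reflects the fact that the thermodynamic scale has a lowest point; a Celsius or Fahrenheit value on its own gives no such hint.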

Ludwig Boltzmann

Ludwig Eduard Boltzmann (20 February 1844 – 5 September 1906) was an Austrian mathematician and theoretical physicist. His greatest achievements were the development of statistical mechanics and the statistical explanation of the second law of thermodynamics. In 1877 he provided the current definition of entropy, $S = k_\mathrm{B} \ln \Omega$, where $\Omega$ is the number of microstates whose energy equals the system's energy, interpreted as a measure of the statistical disorder of a system. Max Planck named the constant $k_\mathrm{B}$ the Boltzmann constant. Statistical mechanics is one of the pillars of modern physics. It describes how macroscopic observations (such as temperature and pressure) are related to microscopic parameters that fluctuate around an average. It connects thermodynamic quantities (such as heat capacity) to microscopic behavior, whereas, in classical thermodynamics, the only available option would be to measure and tabulate such quantities for various mat ...
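
Boltzmann's entropy formula is simple enough to evaluate directly. The sketch below is a minimal illustration; the function name and the microstate counts are made up for the example, and the constant is the exact SI value of the Boltzmann constant.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def boltzmann_entropy(omega):
    """Entropy S = k_B * ln(omega) for a system with omega accessible microstates."""
    if omega < 1:
        raise ValueError("the number of microstates must be at least 1")
    return K_B * math.log(omega)


# A single microstate means no statistical disorder at all.
s_single = boltzmann_entropy(1)

# Doubling the number of microstates always adds k_B * ln(2),
# whatever the starting count (hypothetical counts shown here).
s_before = boltzmann_entropy(1e6)
s_after = boltzmann_entropy(2e6)

print(f"S(omega=1)      = {s_single} J/K")
print(f"gain on doubling = {s_after - s_before:.3e} J/K")
```

Because the formula is logarithmic, entropy is additive when microstate counts multiply: combining two independent systems multiplies their Ω values and adds their entropies.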

Classical Thermodynamics

Thermodynamics is a branch of physics that deals with heat, work, and temperature, and their relation to energy, entropy, and the physical properties of matter and radiation. The behavior of these quantities is governed by the four laws of thermodynamics, which convey a quantitative description using measurable macroscopic physical quantities but may be explained in terms of microscopic constituents by statistical mechanics. Thermodynamics applies to various topics in science and engineering, especially physical chemistry, biochemistry, chemical engineering, and mechanical engineering, as well as other complex fields such as meteorology. Historically, thermodynamics developed out of a desire to increase the efficiency of early steam engines, particularly through the work of French physicist Sadi Carnot (1824) who believed that engine efficiency was the key that could help France win the Napoleonic Wars. Scots-Irish physicist Lord Kelvin was the first to formulate a concise d ...

Heat

In thermodynamics, heat is energy in transfer between a thermodynamic system and its surroundings by such mechanisms as thermal conduction, electromagnetic radiation, and friction, which are microscopic in nature, involving sub-atomic, atomic, or molecular particles, or small surface irregularities, as distinct from the macroscopic modes of energy transfer, which are thermodynamic work and transfer of matter. For a closed system (transfer of matter excluded), the heat involved in a process is the difference in internal energy between the final and initial states of a system, after subtracting the work done in the process. For a closed system, this is the formulation of the first law of thermodynamics. Calorimetry is the measurement of the quantity of energy transferred as heat by its effect on the states of interacting bodies, for example, by the amount of ice melted or by the change in temperature of a body. In the International System of Units (SI), the unit of measurement for he ...
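
The closed-system statement of the first law can be written as a one-line calculation. The sketch below assumes the sign convention in which work is counted as positive when it is done on the system, so that Q = ΔU − W; the text above does not fix a convention, and the function name and the numbers are hypothetical.

```python
def heat_absorbed(u_initial, u_final, work_on_system):
    """Heat (J) absorbed by a closed system during a process.

    Uses Q = (U_final - U_initial) - W, where W is the work done ON the
    system (an assumed sign convention for this sketch).
    """
    delta_u = u_final - u_initial
    return delta_u - work_on_system


# Hypothetical process: internal energy rises from 1000 J to 1500 J
# while 200 J of work is done on the system by compression.
q = heat_absorbed(u_initial=1000.0, u_final=1500.0, work_on_system=200.0)
print(f"heat absorbed: {q} J")
```

Of the 500 J increase in internal energy, 200 J is accounted for by work, so the remaining 300 J must have entered as heat. Under the opposite convention (work done by the system counted as positive) the same relation reads Q = ΔU + W.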

Irreversible Process

In thermodynamics, an irreversible process is a process that cannot be undone. All complex natural processes are irreversible, although a phase transition at the coexistence temperature (e.g. melting of ice cubes in water) is well approximated as reversible. A change in the thermodynamic state of a system and all of its surroundings cannot be precisely restored to its initial state by infinitesimal changes in some property of the system without expenditure of energy. A system that undergoes an irreversible process may still be capable of returning to its initial state. Because entropy is a state function, the change in entropy of the system is the same whether the process is reversible or irreversible. However, the impossibility occurs in restoring the environment to its own initial conditions. An irreversible process increases the total entropy of the system and its surroundings. The second law of thermodynamics can be used to dete ...
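
One way to see the distinction between restoring the system and restoring the environment is the free expansion of an ideal gas, a standard textbook case that is not taken from the text above. The sketch assumes one mole of ideal gas doubling its volume into a vacuum, then being returned to its original volume by a reversible isothermal compression; the function name and all numbers are illustrative.

```python
import math

R = 8.314462618  # molar gas constant in J/(mol K)


def isothermal_entropy_change(n_mol, v_initial, v_final):
    """Entropy change (J/K) of an ideal gas between two volumes at the same
    temperature. Entropy is a state function, so this holds for any path."""
    return n_mol * R * math.log(v_final / v_initial)


# Step 1: irreversible free expansion, volume doubles.
ds_system_expand = isothermal_entropy_change(1.0, 1.0, 2.0)
ds_surroundings_expand = 0.0  # no heat or work is exchanged with the surroundings

# Step 2: reversible isothermal compression back to the original volume.
ds_system_compress = isothermal_entropy_change(1.0, 2.0, 1.0)
ds_surroundings_compress = -ds_system_compress  # a reversible step leaves the total unchanged

system_net = ds_system_expand + ds_system_compress
surroundings_net = ds_surroundings_expand + ds_surroundings_compress

print(f"system, full cycle:       {system_net:+.3f} J/K")
print(f"surroundings, full cycle: {surroundings_net:+.3f} J/K")
```

After the two steps the gas is back in its initial state with no net entropy change, but the surroundings have gained n R ln 2 of entropy from the heat rejected during compression. That leftover increase is the signature of the irreversible first step.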

Boltzmann Constant

The Boltzmann constant ($k_\mathrm{B}$ or $k$) is the proportionality factor that relates the average relative thermal energy of particles in a gas with the thermodynamic temperature of the gas. It occurs in the definitions of the kelvin (K) and the molar gas constant, in Planck's law of black-body radiation and Boltzmann's entropy formula, and is used in calculating thermal noise in resistors. The Boltzmann constant has dimensions of energy divided by temperature, the same as entropy and heat capacity. It is named after the Austrian scientist Ludwig Boltzmann. As part of the 2019 revision of the SI, the Boltzmann constant is one of the seven "defining constants" that have been defined so as to have exact finite decimal values in SI units. They are used in various combinations to define the seven SI base units. The Boltzmann constant is defined to be exactly 1.380649 × 10⁻²³ joules per kelvin, with the effect of defining the SI unit ke ...
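
To make the link between temperature and particle energy concrete, the sketch below uses the classical result that the mean translational kinetic energy of a particle in a monatomic ideal gas is (3/2) k_B T. That specific formula is an assumption brought in for illustration and is not stated in the text above; the function name and the sample temperature are likewise hypothetical.

```python
K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def mean_translational_kinetic_energy(temperature_k):
    """Mean translational kinetic energy (J) per particle of a classical
    monatomic ideal gas at the given temperature in kelvin."""
    if temperature_k < 0:
        raise ValueError("temperature in kelvin cannot be negative")
    return 1.5 * K_B * temperature_k


# Roughly room temperature.
energy_300 = mean_translational_kinetic_energy(300.0)
print(f"mean kinetic energy at 300 K: {energy_300:.4e} J")
```

The result is on the order of 10⁻²¹ J per particle, which shows how small the constant is on everyday energy scales and why it has the same dimensions (energy per temperature) as entropy.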

Statistical Physics

In physics, statistical mechanics is a mathematical framework that applies statistical methods and probability theory to large assemblies of microscopic entities. Sometimes called statistical physics or statistical thermodynamics, its applications include many problems in a wide variety of fields such as biology, neuroscience, computer science, information theory and sociology. Its main purpose is to clarify the properties of matter in aggregate, in terms of physical laws governing atomic motion. Statistical mechanics arose out of the development of classical thermodynamics, a field for which it was successful in explaining macroscopic physical properties (such as temperature, pressure, and heat capacity) in terms of microscopic parameters that fluctuate about average values and are characterized by probability distributions. While classical thermodynamics is primarily concerned with thermodynamic equilibrium, statistical mechanics has been applied in non-equilibrium stat ...

Clausius

Rudolf Julius Emanuel Clausius (2 January 1822 – 24 August 1888) was a German physicist and mathematician and is considered one of the central founding fathers of the science of thermodynamics. By his restatement of Sadi Carnot's principle known as the Carnot cycle, he gave the theory of heat a truer and sounder basis. His most important paper, "On the Moving Force of Heat", published in 1850, first stated the basic ideas of the second law of thermodynamics. In 1865 he introduced the concept of entropy. In 1870 he introduced the virial theorem, which applied to heat. Clausius was born in Köslin (now Koszalin, Poland) in the Province of Pomerania in Prussia. His father was a Protestant pastor and school inspector, and Rudolf studied in the school of his father. In 1838, he went to the Gymnasium in Stettin. Clausius graduated from the University of Berlin in 1844 wh ...