Empirical Likelihood

In probability theory and statistics, empirical likelihood (EL) is a nonparametric method for estimating the parameters of statistical models. It requires fewer assumptions about the error distribution while retaining some of the merits of likelihood-based inference. The estimation method requires that the data are independent and identically distributed (i.i.d.). It performs well even when the distribution is asymmetric or censored. EL methods can also handle constraints and prior information on parameters. Art Owen pioneered work in this area with his 1988 paper. Definition: Given a set of n i.i.d. realizations y_i of random variables Y_i, the empirical distribution function is \hat{F}(y) := \sum_{i=1}^{n} \pi_i I(Y_i ...

Probability Theory

Probability theory or probability calculus is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is no ...

Likelihood Function

A likelihood function (often simply called the likelihood) measures how well a statistical model explains observed data by calculating the probability of seeing that data under different parameter values of the model. It is constructed from the joint probability distribution of the random variable that (presumably) generated the observations. When evaluated on the actual data points, it becomes a function solely of the model parameters. In maximum likelihood estimation, the argument that maximizes the likelihood function serves as a point estimate for the unknown parameter, while the Fisher information (often approximated by the likelihood's Hessian matrix at the maximum) gives an indication of the estimate's precision. In contrast, in Bayesian statistics, the estimate of interest is the converse of the likelihood, the so-called posterior probability of the parameter given the observed data, which is calculated via Bayes' rule. Definition: The likelihood function, ...

Nelson–Aalen Estimator

The Nelson–Aalen estimator is a non-parametric estimator of the cumulative hazard rate function in the case of censored or incomplete data. It is used in survival theory, reliability engineering and life insurance to estimate the cumulative number of expected events. An "event" can be the failure of a non-repairable component, the death of a human being, or any occurrence for which the experimental unit remains in the "failed" state (e.g., death) from the point at which it changed onward. The estimator is given by \tilde{H}(t) = \sum_{t_i \le t} \frac{d_i}{n_i}, with d_i the number of events at time t_i and n_i the total number of individuals at risk at t_i. The curvature of the Nelson–Aalen estimator gives an idea of the hazard rate shape. A concave shape i ...
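The sum of d_i / n_i over event times up to t can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `nelson_aalen` and the input layout (one follow-up time and one event flag per subject) are hypothetical, and a subject censored at time t is counted as still at risk at t, which is a common convention rather than something the text above specifies.

```python
from collections import Counter


def nelson_aalen(times, observed):
    """Return [(t_i, H(t_i))] at each distinct event time t_i.

    times:    follow-up time of each subject
    observed: True if the event occurred at that time, False if censored
    """
    # d_i: number of observed events at each distinct event time
    events = Counter(t for t, e in zip(times, observed) if e)

    estimate = []
    cumulative = 0.0
    for t in sorted(events):
        # n_i: subjects whose follow-up time is at least t (assumed convention)
        at_risk = sum(1 for s in times if s >= t)
        cumulative += events[t] / at_risk
        estimate.append((t, cumulative))
    return estimate
```

For five subjects with times `[1, 2, 2, 3, 4]` and flags `[True, True, False, True, False]`, the increments are 1/5, 1/4 and 1/2, so the cumulative hazard steps to roughly 0.2, 0.45 and 0.95 at times 1, 2 and 3.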

Jackknife (statistics)

In statistics, the jackknife (jackknife cross-validation) is a cross-validation technique and, therefore, a form of resampling. It is especially useful for bias and variance estimation. The jackknife pre-dates other common resampling methods such as the bootstrap. Given a sample of size n, a jackknife estimator can be built by aggregating the parameter estimates from each subsample of size (n-1) obtained by omitting one observation. The jackknife is a linear approximation of the bootstrap. The jackknife technique was developed by Maurice Quenouille (1924–1973) from 1949 and refined in 1956. John Tukey expanded on the technique in 1958 and proposed the name "jackknife" because, like a physical jack-knife (a compact folding knife), it is a rough-and-ready tool that can improvise a solution for a variety of problems even though specific problems may be more efficiently solved with a purpose-designed tool. A simple example: mean estimation The jackknife estimator of a param ...
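The leave-one-out construction described above can be sketched as follows. The function name `jackknife` is hypothetical, and the bias and variance expressions are the standard textbook jackknife formulas, which the truncated summary does not spell out; treat this as a minimal sketch rather than a reference implementation.

```python
from statistics import mean


def jackknife(data, estimator):
    """Return (jackknife estimate, bias estimate, variance estimate)."""
    data = list(data)
    n = len(data)

    # One estimate per subsample of size n - 1 (observation i omitted)
    leave_one_out = [estimator(data[:i] + data[i + 1:]) for i in range(n)]

    # Aggregate the leave-one-out estimates by averaging them
    theta_jack = mean(leave_one_out)

    # Standard jackknife bias and variance formulas (assumed, not from the text)
    bias = (n - 1) * (theta_jack - estimator(data))
    variance = (n - 1) / n * sum((t - theta_jack) ** 2 for t in leave_one_out)
    return theta_jack, bias, variance
```

With `estimator=mean`, the bias estimate is zero and the variance estimate equals the usual sample variance divided by n, which is a convenient sanity check.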

Bootstrapping (statistics)

Bootstrapping is a procedure for estimating the distribution of an estimator by resampling (often with replacement) one's data or a model estimated from the data. Bootstrapping assigns measures of accuracy (bias, variance, confidence intervals, prediction error, etc.) to sample estimates. This technique allows estimation of the sampling distribution of almost any statistic using random sampling methods. Bootstrapping estimates the properties of an estimand (such as its variance) by measuring those properties when sampling from an approximating distribution. One standard choice for an approximating distributi ...
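Resampling with replacement, as described above, might look like the sketch below. The names `bootstrap_replicates` and `percentile_interval`, the default of 2000 resamples, and the fixed seed are all illustrative assumptions; the percentile interval is one of several ways to turn replicates into a confidence interval and is used here only because it is the simplest.

```python
import random


def bootstrap_replicates(data, estimator, n_resamples=2000, seed=0):
    """Re-estimate on samples of size n drawn with replacement from the data."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    data = list(data)
    n = len(data)
    return [estimator(rng.choices(data, k=n)) for _ in range(n_resamples)]


def percentile_interval(replicates, level=0.95):
    """Simple percentile interval taken from the sorted replicates."""
    ordered = sorted(replicates)
    m = len(ordered)
    alpha = (1.0 - level) / 2.0
    lower_index = max(0, int(alpha * m))
    upper_index = min(m - 1, int((1.0 - alpha) * m) - 1)
    return ordered[lower_index], ordered[upper_index]
```

A typical call would be `percentile_interval(bootstrap_replicates(sample, statistics.mean))`, where `sample` is any list of numbers; the spread of the replicates also gives a direct estimate of the estimator's variance.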

Survival Analysis

Survival analysis is a branch of statistics for analyzing the expected duration of time until one event occurs, such as death in biological organisms and failure in mechanical systems. This topic is called reliability theory, reliability analysis or reliability engineering in engineering, duration analysis or duration modelling in economics, and event history analysis in sociology. Survival analysis attempts to answer certain questions, such as what is the proportion of a population which will survive past a certain time? Of those that survive, at what rate will they die or fail? Can multiple causes of death or failure be taken into account? How do particular circumstances or characteristics increase or decrease the probability of survival? To answer such questions, it is necessary to define "lifetime". In the case of biological survival, death is unambiguous, but for mechanical reliability, failure may not be well-defined, for there may well be mechanical systems in which failure ...

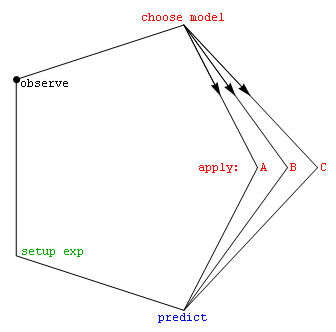

Model Selection

Model selection is the task of selecting the best model from among various candidates on the basis of a performance criterion. In the context of machine learning and more generally statistical analysis, this may be the selection of a statistical model from a set of candidate models, given data. In the simplest cases, a pre-existing set of data is considered. However, the task can also involve the design of experiments such that the data collected is well-suited to the problem of model selection. Given candidate models of similar predictive or explanatory power, the simplest model is most likely to be the best choice (Occam's razor). It has been stated that "The majority of the problems in statistical inference can be considered to be problems related to statistical modeling". Relatedly, it has been said, "How [the] translation from subject-matter problem to statistical model is done is often the most critical part of an analysis". Model selection may also refer to the problem of selecting ...

Quantile Regression

Quantile regression is a type of regression analysis used in statistics and econometrics. Whereas the method of least squares estimates the conditional mean of the response variable across values of the predictor variables, quantile regression estimates the conditional median (or other quantiles) of the response variable. [There is also a method for predicting the conditional geometric mean of the response variable; see Tofallis (2015), "A Better Measure of Relative Prediction Accuracy for Model Selection and Model Estimation", Journal of the Operational Research Society, 66(8):1352-1362.] Quantile regression is an extension of linear regression used when the conditions of linear regression are not met. Advantages and applications: One advantage of quantile regression relative to ordinary least squares regression is that the quantile regression estimates are more robust against outliers in the response measurements. However, the main attraction of quantile reg ...

Nuisance Parameter

In statistics, a nuisance parameter is any parameter which is unspecified but which must be accounted for in the hypothesis testing of the parameters which are of interest. The classic example of a nuisance parameter comes from the normal distribution, a member of the location–scale family. For at least one normal distribution, the variance(s), σ², is often not specified or known, but one wishes to test hypotheses about the mean(s). Another example might be linear regression with unknown variance in the explanatory variable (the independent variable): its variance is a nuisance parameter that must be accounted for to derive an accurate interval estimate of the regression slope, calculate p-values, and test hypotheses about the slope's value; see regression dilution. Nuisance parameters are often scale parameters, but not always; for example in errors-in-variables models, the unknown true location of each observation is a nuisance parameter. A parameter may also cease to be a " ...

Maximum Entropy Probability Distribution

In statistics and information theory, a maximum entropy probability distribution has entropy that is at least as great as that of all other members of a specified class of probability distributions. According to the principle of maximum entropy, if nothing is known about a distribution except that it belongs to a certain class (usually defined in terms of specified properties or measures), then the distribution with the largest entropy should be chosen as the least-informative default. The motivation is twofold: first, maximizing entropy minimizes the amount of prior information built into the distribution; second, many physical systems tend to move towards maximal entropy configurations over time. Definition of entropy and differential entropy: If X is a continuous random variable with probability density p(x), then the differential entropy of X is defined as H(X) = -\int_{-\infty}^{\infty} p(x) \log p(x) \, dx. If X is a discrete random variable with distribution given by ...
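As a small worked instance of the differential entropy formula above, consider a density that is uniform on an interval [a, b]. The choice of the uniform density and the symbols a and b are illustrative assumptions, not part of the summary above.

```latex
% Uniform density on [a, b], zero elsewhere (illustrative choice)
p(x) = \frac{1}{b - a}, \qquad a \le x \le b .

% Substitute into H(X) = -\int p(x) \log p(x) \, dx; the integrand is constant on [a, b]
H(X) = -\int_{a}^{b} \frac{1}{b - a} \log\!\left(\frac{1}{b - a}\right) dx
     = \log(b - a) .
```

The entropy grows with the width of the interval, and it is negative when b - a < 1, which is one way differential entropy differs from the entropy of a discrete distribution.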

Lagrange Multiplier

In mathematical optimization, the method of Lagrange multipliers is a strategy for finding the local maxima and minima of a function subject to equation constraints (i.e., subject to the condition that one or more equations have to be satisfied exactly by the chosen values of the variables). It is named after the mathematician Joseph-Louis Lagrange. Summary and rationale: The basic idea is to convert a constrained problem into a form such that the derivative test of an unconstrained problem can still be applied. The relationship between the gradient of the function and gradients of the constraints rather naturally leads to a reformulation of the original problem, known as the Lagrangian function or Lagrangian. In the general case, the Lagrangian is defined as \mathcal{L}(x, \lambda) \equiv f(x) + \langle \lambda, g(x) \rangle for functions f, g; the notation \langle \cdot, \cdot \rangle denotes an inner prod ...
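A short worked example may make the Lagrangian concrete. The objective f(x, y) = x + y and the unit-circle constraint below are chosen purely for illustration; with a single scalar constraint, the inner product reduces to an ordinary product \lambda g.

```latex
% Illustrative problem: extremize f(x, y) = x + y subject to g(x, y) = x^2 + y^2 - 1 = 0
\mathcal{L}(x, y, \lambda) = x + y + \lambda \, (x^2 + y^2 - 1)

% Stationarity in x and y
\frac{\partial \mathcal{L}}{\partial x} = 1 + 2 \lambda x = 0, \qquad
\frac{\partial \mathcal{L}}{\partial y} = 1 + 2 \lambda y = 0
\;\Longrightarrow\; x = y = -\frac{1}{2 \lambda}

% Substitute into the constraint
x^2 + y^2 = \frac{1}{2 \lambda^2} = 1
\;\Longrightarrow\; \lambda = \pm \frac{1}{\sqrt{2}}

% Candidate points and objective values
\lambda = -\tfrac{1}{\sqrt{2}}: \; x = y = \tfrac{1}{\sqrt{2}}, \; f = \sqrt{2} \;\; (\text{maximum}); \qquad
\lambda = +\tfrac{1}{\sqrt{2}}: \; x = y = -\tfrac{1}{\sqrt{2}}, \; f = -\sqrt{2} \;\; (\text{minimum})
```

Setting the derivative of the Lagrangian with respect to \lambda to zero simply recovers the constraint, which is why the unconstrained derivative test can be applied to the combined function.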

Estimating Function

In statistics, the method of estimating equations is a way of specifying how the parameters of a statistical model should be estimated. This can be thought of as a generalisation of many classical methods (the method of moments, least squares, and maximum likelihood) as well as some recent methods like M-estimators. The basis of the method is to have, or to find, a set of simultaneous equations involving both the sample data and the unknown model parameters which are to be solved in order to define the estimates of the parameters. Various components of the equations are defined in terms of the set of observed data on which the estimates are to be based. Important examples of estimating equations are the likelihood equations. Examples: Consider the problem of estimating the rate parameter, λ, of the exponential distribution, which has the probability density function: f(x;\lambda) = \left\{\begin{matrix} \lambda e^{-\lambda x}, &\; x \ge 0, \\ 0, &\; x < 0. \end{matrix}\ ...
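The summary is cut off before it states an estimating equation for the exponential rate, so the sketch below assumes the likelihood (score) equation, \sum_i (1/\lambda - x_i) = 0, as one possible choice. The function names and the bisection bounds are hypothetical; the point is only to show an estimate being defined as the root of an equation that involves both the data and the parameter.

```python
def score(lam, xs):
    """Assumed estimating function: derivative of the exponential log-likelihood."""
    return sum(1.0 / lam - x for x in xs)


def solve_estimating_equation(xs, lo=1e-8, hi=1e8, tol=1e-12):
    """Find the root of score(lam, xs) = 0 by bisection.

    The score is strictly decreasing in lam, so it is positive below
    the root and negative above it.
    """
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if score(mid, xs) > 0:
            lo = mid  # root lies above mid
        else:
            hi = mid  # root lies at or below mid
        if hi - lo < tol:
            break
    return (lo + hi) / 2.0
```

For this particular equation the root has a closed form, the reciprocal of the sample mean, so the numerical solver is only there to illustrate the general recipe for cases where no closed form exists.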