
Edward G. Coffman, Jr.

Edward Grady "Ed" Coffman Jr. is a computer scientist. He began his career as a systems programmer at the System Development Corporation (SDC) during the period 1958–65. His PhD in engineering at UCLA in 1966 was followed by a series of positions at Princeton University (1966–69), The Pennsylvania State University (1970–76), Columbia University (1976–77), and the University of California, Santa Barbara (1977–79). In 1979, he joined the Mathematics Center at Bell Laboratories, where he stayed until his retirement as a Distinguished Member of Technical Staff 20 years later. After a one-year stint at the New Jersey Institute of Technology, he returned to Columbia University in 2000 with appointments in Computer Science, Electrical Engineering, and Industrial Engineering and Operations Research. He retired from teaching in 2008 and is now a Professor Emeritus still engaged in research and professional activities. Research: Coffman is best known for his seminal research ...

Los Angeles

Los Angeles (Spanish: Los Ángeles), often referred to by its initials L.A., is the largest city in the state of California and the second most populous city in the United States after New York City, as well as one of the world's most populous megacities. Los Angeles is the commercial, financial, and cultural center of Southern California. With a population of roughly 3.9 million residents within the city limits, Los Angeles is known for its Mediterranean climate, ethnic and cultural diversity, being the home of the Hollywood film industry, and its sprawling metropolitan area. The city of Los Angeles lies in a basin in Southern California adjacent to the Pacific Ocean in the west and extending through the Santa Monica Mountains and north into the San Fernando Valley, with the city bordering the San Gabriel Valley to its east. It covers about , and is the county seat of Los Angeles County, which is the most populous county in the United States with an estim ...

System Development Corporation

System Development Corporation (SDC) was a computer software company based in Santa Monica, California. Founded in 1955, it is considered the first company of its kind. History: SDC began as the systems engineering group for the SAGE air-defense system at the RAND Corporation. In April 1955, the government contracted with RAND to help write software for the SAGE project. Within a few months, RAND's System Development Division had 500 employees developing SAGE applications. Within a year, the division had up to 1,000 employees. RAND spun off the group in 1957 as a non-profit organization that provided expertise for the United States military in the design, integration, and testing of large, complex, computer-controlled systems. SDC became a for-profit corporation in 1969, and began to offer its services to all organizations rather than only to the American military. The first two systems that SDC produced were the SAGE system, written for the IBM AN/FSQ-7 computer, and the SAG ...

NP-hard

In computational complexity theory, NP-hardness (non-deterministic polynomial-time hardness) is the defining property of a class of problems that are informally "at least as hard as the hardest problems in NP". A simple example of an NP-hard problem is the subset sum problem. A more precise specification is: a problem *H* is NP-hard when every problem *L* in NP can be reduced in polynomial time to *H*; that is, assuming a solution for *H* takes 1 unit time, *H*'s solution can be used to solve *L* in polynomial time. As a consequence, finding a polynomial-time algorithm to solve any NP-hard problem would give polynomial-time algorithms for all the problems in NP. As it is suspected that P ≠ NP, it is unlikely that such an algorithm exists. It is suspected that there are no polynomial-time algorithms for NP-hard problems, but that has not been proven. Moreover, the class P, in which all problems can be solved in polynomial time, is contained in the NP class. Defi ...

Approximation Algorithms

In computer science and operations research, approximation algorithms are efficient algorithms that find approximate solutions to optimization problems (in particular NP-hard problems) with provable guarantees on the distance of the returned solution to the optimal one. Approximation algorithms naturally arise in the field of theoretical computer science as a consequence of the widely believed P ≠ NP conjecture. Under this conjecture, a wide class of optimization problems cannot be solved exactly in polynomial time. The field of approximation algorithms, therefore, tries to understand how closely it is possible to approximate optimal solutions to such problems in polynomial time. In an overwhelming majority of the cases, the guarantee of such algorithms is a multiplicative one expressed as an approximation ratio or approximation factor, i.e., the optimal solution is always guaranteed to be within a (predetermined) multiplicative factor of the returned solution. However, there are a ...

Cellular Automaton

A cellular automaton (pl. cellular automata, abbrev. CA) is a discrete model of computation studied in automata theory. Cellular automata are also called cellular spaces, tessellation automata, homogeneous structures, cellular structures, tessellation structures, and iterative arrays. Cellular automata have found application in various areas, including physics, theoretical biology and microstructure modeling. A cellular automaton consists of a regular grid of *cells*, each in one of a finite number of *states*, such as *on* and *off* (in contrast to a coupled map lattice). The grid can be in any finite number of dimensions. For each cell, a set of cells called its *neighborhood* is defined relative to the specified cell. An initial state (time *t* = 0) is selected by assigning a state for each cell. A new *generation* is created (advancing *t* by 1), according to some fixed *rule* (generally, a mathematical function) that determines the new state of e ...
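As an illustration of the grid, neighborhood, and rule described above, here is a minimal sketch of a one-dimensional, two-state automaton. The function name `step`, the wrap-around boundary, and the convention of encoding the rule as an 8-bit number (as in the so-called elementary cellular automata) are assumptions made for this example; they do not come from the entry itself.

```python
def step(cells, rule):
    """Compute the next generation of a 1-D, two-state cellular automaton.

    cells: list of 0/1 states (the grid at time t).
    rule:  integer 0..255; bit k gives the new state for the neighborhood
           whose (left, centre, right) states spell k in binary.
    The grid wraps around at the edges (an assumption for this sketch).
    """
    n = len(cells)
    nxt = []
    for i in range(n):
        left = cells[(i - 1) % n]
        centre = cells[i]
        right = cells[(i + 1) % n]
        index = (left << 2) | (centre << 1) | right  # neighborhood as 0..7
        nxt.append((rule >> index) & 1)              # look up the new state
    return nxt


# Initial state (t = 0): a single "on" cell in the middle.
generation = [0, 0, 0, 1, 0, 0, 0]
generation = step(generation, 90)  # advance t by 1 under rule 90
```

Under rule 90 each new state is the exclusive-or of the two neighbors, so the single live cell is replaced by two live cells on either side of it.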

Computer Network

A computer network is a set of computers sharing resources located on or provided by network nodes. The computers use common communication protocols over digital interconnections to communicate with each other. These interconnections are made up of telecommunication network technologies, based on physically wired, optical, and wireless radio-frequency methods that may be arranged in a variety of network topologies. The nodes of a computer network can include personal computers, servers, networking hardware, or other specialised or general-purpose hosts. They are identified by network addresses, and may have hostnames. Hostnames serve as memorable labels for the nodes, rarely changed after initial assignment. Network addresses serve for locating and identifying the nodes by communication protocols such as the Internet Protocol. Computer networks may be classified by many criteria, including the transmission medium used to carry signals, bandwidth, communications pro ...

K-server Problem

The *k*-server problem is a problem of theoretical computer science in the category of online algorithms, one of two abstract problems on metric spaces that are central to the theory of competitive analysis (the other being metrical task systems). In this problem, an online algorithm must control the movement of a set of *k* *servers*, represented as points in a metric space, and handle *requests* that are also in the form of points in the space. As each request arrives, the algorithm must determine which server to move to the requested point. The goal of the algorithm is to keep the total distance all servers move small, relative to the total distance the servers could have been moved by an optimal adversary who knows in advance the entire sequence of requests. The problem was first posed by Mark Manasse, Lyle A. McGeoch and Daniel Sleator (1988). The most prominent open question concerning the *k*-server problem is the so-called *k*-server conjecture, also posed by Manasse e ...

Queueing Theory

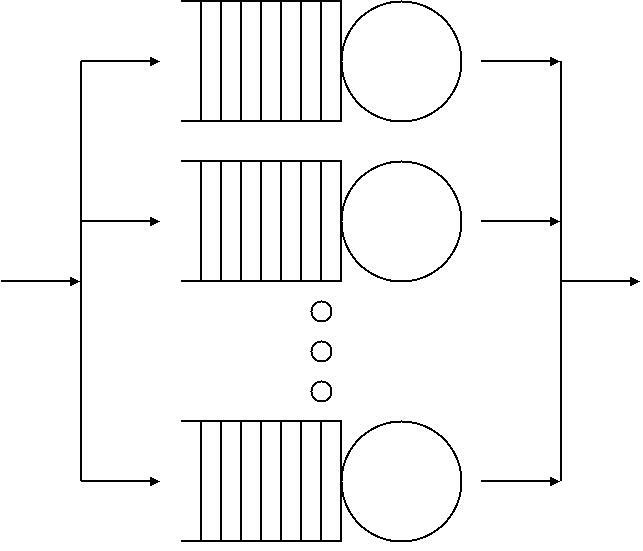

Queueing theory is the mathematical study of waiting lines, or queues. A queueing model is constructed so that queue lengths and waiting time can be predicted. Queueing theory is generally considered a branch of operations research because the results are often used when making business decisions about the resources needed to provide a service. Queueing theory has its origins in research by Agner Krarup Erlang when he created models to describe the system of Copenhagen Telephone Exchange company, a Danish company. The ideas have since seen applications including telecommunication, traffic engineering, computing and, particularly in industrial engineering, in the design of factories, shops, offices and hospitals, as well as in project management. Spelling: The spelling "queueing" over "queuing" is typically encountered in the academic research field. In fact, one of the flagship journals of the field is *Queueing Systems*. Single queueing nodes: A queue, or queueing node ...

Dynamic Allocation

In computer science, manual memory management refers to the usage of manual instructions by the programmer to identify and deallocate unused objects, or garbage. Up until the mid-1990s, the majority of programming languages used in industry supported manual memory management, though garbage collection has existed since 1959, when it was introduced with Lisp. Today, however, languages with garbage collection such as Java are increasingly popular and the languages Objective-C and Swift provide similar functionality through Automatic Reference Counting. The main manually managed languages still in widespread use today are C and C++ (see C dynamic memory allocation). Description: Many programming languages use manual techniques to determine when to *allocate* a new object from the free store. C uses the malloc function; C++ and Java use the new operator; and many other languages (such as Python) allocate all objects from the free store. Determining when an object ought to be cre ...

Graph Algorithms

The following is a list of well-known algorithms along with one-line descriptions for each.

Automated planning

Combinatorial algorithms

General combinatorial algorithms

* Brent's algorithm: finds a cycle in function value iterations using only two iterators
* Floyd's cycle-finding algorithm: finds a cycle in function value iterations
* Gale–Shapley algorithm: solves the stable marriage problem
* Pseudorandom number generators (uniformly distributed; see also List of pseudorandom number generators for other PRNGs with varying degrees of convergence and varying statistical quality):
  * ACORN generator
  * Blum Blum Shub
  * Lagged Fibonacci generator
  * Linear congruential generator
  * Mersenne Twister

Graph algorithms

* Coloring algorithm: Graph coloring algorithm.
* Hopcroft–Karp algorithm: convert a bipartite graph to a maximum cardinality matching
* Hungarian algorithm: algorithm for finding a perfect matching
* Prüfer coding: conversion between a labeled tree an ...
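To make one of the listed one-line descriptions concrete, the following is a small sketch of Floyd's cycle-finding algorithm for an iterated function. The function name `floyd_cycle`, its return convention (index where the cycle starts and cycle length), and the sample function are assumptions chosen for this illustration.

```python
def floyd_cycle(f, x0):
    """Find a cycle in the sequence x0, f(x0), f(f(x0)), ...

    Returns (mu, lam): mu is the index of the first element of the cycle,
    lam is the cycle length. Only two "pointers" into the sequence are kept.
    """
    # Phase 1: the hare moves twice as fast until both meet inside the cycle.
    tortoise, hare = f(x0), f(f(x0))
    while tortoise != hare:
        tortoise = f(tortoise)
        hare = f(f(hare))

    # Phase 2: restart the tortoise; moving both one step at a time,
    # they meet at the first element of the cycle.
    mu = 0
    tortoise = x0
    while tortoise != hare:
        tortoise = f(tortoise)
        hare = f(hare)
        mu += 1

    # Phase 3: walk once around the cycle to measure its length.
    lam = 1
    hare = f(tortoise)
    while tortoise != hare:
        hare = f(hare)
        lam += 1
    return mu, lam


# Hypothetical example function: x -> (x*x + 1) mod 255, started at 3.
mu, lam = floyd_cycle(lambda x: (x * x + 1) % 255, 3)
```

For this sample function the sequence is 3, 10, 101, 2, 5, 26, 167, 95, 101, ..., so the cycle starts at index 2 and has length 6.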

Bin Packing

The bin packing problem is an optimization problem in which items of different sizes must be packed into a finite number of bins or containers, each of a fixed given capacity, in a way that minimizes the number of bins used. The problem has many applications, such as filling up containers, loading trucks with weight capacity constraints, creating file backups in media, and technology mapping in field-programmable gate array (FPGA) semiconductor chip design. Computationally, the problem is NP-hard, and the corresponding decision problem, deciding whether items can fit into a specified number of bins, is NP-complete. Despite its worst-case hardness, optimal solutions to very large instances of the problem can be produced with sophisticated algorithms. In addition, many approximation algorithms exist. For example, the first-fit algorithm provides a fast but often non-optimal solution, involving placing each item into the first bin in which it will fit. It require ...
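The first-fit idea mentioned above can be sketched in a few lines. This is only an illustrative version under simple assumptions: the function name `first_fit` and the sample data are made up for the example, items are handled in the order given, and bins are scanned linearly, which is not the fastest possible implementation.

```python
def first_fit(sizes, capacity):
    """Place each item into the first bin in which it fits.

    sizes:    item sizes, processed in the order given.
    capacity: the fixed capacity shared by every bin.
    Returns a list of bins, each a list of the item sizes it holds.
    """
    bins = []
    for size in sizes:
        if size > capacity:
            raise ValueError(f"item of size {size} exceeds bin capacity")
        for contents in bins:
            if sum(contents) + size <= capacity:
                contents.append(size)  # first bin with enough room
                break
        else:
            bins.append([size])        # no existing bin fits: open a new one
    return bins


packing = first_fit([4, 8, 1, 4, 2, 1], capacity=10)
```

With these sample sizes the items end up in two bins, `[4, 1, 4, 1]` and `[8, 2]`. In general first fit gives no guarantee of using the minimum number of bins; it only avoids opening a new bin while an earlier one still has room.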