
Dictionary Learning

Sparse dictionary learning (also known as sparse coding) is a representation learning method that aims to find a sparse representation of the input data in the form of a linear combination of basic elements, as well as those basic elements themselves. These elements are called *atoms*, and together they compose a *dictionary*. Atoms in the dictionary are not required to be orthogonal, and they may form an over-complete spanning set. This problem setup also allows the dimensionality of the signals being represented to be higher than that of the signals being observed. These two properties lead to seemingly redundant atoms that allow multiple representations of the same signal, but they also improve the sparsity and flexibility of the representation. One of the most important applications of sparse dictionary learning is in compressed sensing, or signal recovery. In compressed sensing, a high-dimensional signal can be recovered with only a few linear measurements pr ...

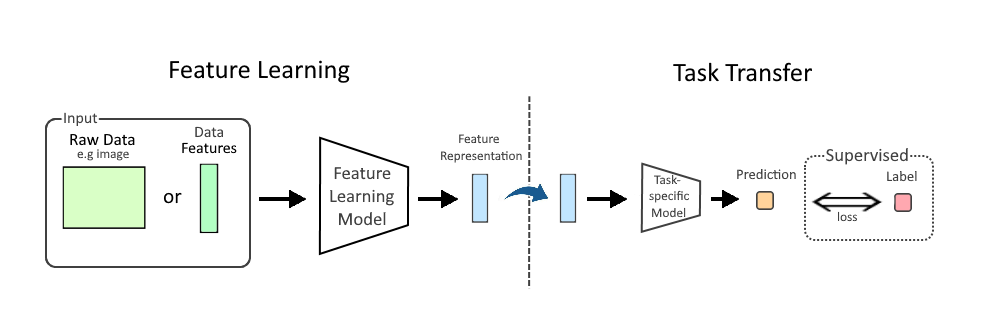

Representation Learning

In machine learning, feature learning or representation learning is a set of techniques that allows a system to automatically discover the representations needed for feature detection or classification from raw data. This replaces manual feature engineering and allows a machine to both learn the features and use them to perform a specific task. Feature learning is motivated by the fact that machine learning tasks such as classification often require input that is mathematically and computationally convenient to process. However, real-world data such as images, video, and sensor data has not yielded to attempts to algorithmically define specific features. An alternative is to discover such features or representations through examination, without relying on explicit algorithms. Feature learning can be supervised, unsupervised, or self-supervised.

* In supervised feature learning, features are learned using labeled input data. Labeled data includes input-label pairs where the ...

L0 Norm

In mathematics, the $L^p$ spaces are function spaces defined using a natural generalization of the $p$-norm for finite-dimensional vector spaces. They are sometimes called Lebesgue spaces, named after Henri Lebesgue, although according to the Bourbaki group they were first introduced by Frigyes Riesz. $L^p$ spaces form an important class of Banach spaces in functional analysis, and of topological vector spaces. Because of their key role in the mathematical analysis of measure and probability spaces, Lebesgue spaces are also used in the theoretical discussion of problems in physics, statistics, economics, finance, engineering, and other disciplines.

Applications (Statistics): In statistics, measures of central tendency and statistical dispersion, such as the mean, median, and standard deviation, are defined in terms of $L^p$ metrics, and measures of central tendency can be characterized as solutions to variational problems. In penalized regression, "L1 penalty" and "L2 penalty" refer to penaliz ...

Singular Value Decomposition

In linear algebra, the singular value decomposition (SVD) is a factorization of a real or complex matrix. It generalizes the eigendecomposition of a square normal matrix with an orthonormal eigenbasis to any $m \times n$ matrix. It is related to the polar decomposition. Specifically, the singular value decomposition of an $m \times n$ complex matrix $\mathbf{M}$ is a factorization of the form $\mathbf{M} = \mathbf{U} \mathbf{\Sigma} \mathbf{V}^*$, where $\mathbf{U}$ is an $m \times m$ complex unitary matrix, $\mathbf{\Sigma}$ is an $m \times n$ rectangular diagonal matrix with non-negative real numbers on the diagonal, $\mathbf{V}$ is an $n \times n$ complex unitary matrix, and $\mathbf{V}^*$ is the conjugate transpose of $\mathbf{V}$. Such a decomposition always exists for any complex matrix. If $\mathbf{M}$ is real, then $\mathbf{U}$ and $\mathbf{V}$ can be guaranteed to be real orthogonal matrices; in such contexts, the SVD is often denoted $\mathbf{U} \mathbf{\Sigma} \mathbf{V}^\mathsf{T}$. The diagonal entries $\sigma_i = \Sigma_{ii}$ of $\mathbf{\Sigma}$ are uniquely determined by $\mathbf{M}$ and are known as the singular values of $\mathbf{M}$. The n ...

K-SVD

In applied mathematics, K-SVD is a dictionary learning algorithm for creating a dictionary for sparse representations via a singular value decomposition approach. K-SVD is a generalization of the k-means clustering method, and it works by iteratively alternating between sparse coding the input data based on the current dictionary and updating the atoms in the dictionary to better fit the data. It is structurally related to the expectation maximization (EM) algorithm. K-SVD is widely used in applications such as image processing, audio processing, biology, and document analysis.

K-SVD algorithm: K-SVD is a kind of generalization of k-means, as follows. k-means clustering can also be regarded as a method of sparse representation, that is, finding the best possible codebook to represent the data samples $\{y_i\}_{i=1}^{M}$ by nearest neighbor, by solving

$$\min_{D, X} \left\{ \|Y - DX\|_F^2 \right\} \qquad \text{subject to } \forall i,\ x_i = e_k \text{ for some } k,$$

which is equivalent to ...

Sparse Approximation

Sparse approximation (also known as sparse representation) theory deals with sparse solutions for systems of linear equations. Techniques for finding these solutions and exploiting them in applications have found wide use in image processing, signal processing, machine learning, medical imaging, and more.

Sparse decomposition (noiseless observations): Consider a linear system of equations $x = D\alpha$, where $D$ is an underdetermined $m \times p$ matrix ($m < p$) and $x \in \mathbb{R}^m$, $\alpha \in \mathbb{R}^p$. The matrix $D$ (typically assumed to be full-rank) is referred to as the dictionary, and $x$ is a signal of interest. The core sparse representation problem is defined as the quest for the sparsest possible representation $\alpha$ satisfying $x = D\alpha$. Due to the underdetermined nature of $D$, this linear system admits in general infinitely many possible solutions, and among these we seek the one with the fewe ...
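The non-uniqueness described above can be illustrated with a small sketch. The dictionary `D`, the signal `x`, and the helper names `matvec` and `l0_norm` are illustrative assumptions, not taken from the article. The example only shows that an underdetermined system can have several exact solutions with different numbers of nonzero entries; it does not implement a solver.

```python
def matvec(D, alpha):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(d * a for d, a in zip(row, alpha)) for row in D]


def l0_norm(alpha, tol=1e-12):
    """Count the entries of alpha whose magnitude exceeds tol."""
    return sum(1 for a in alpha if abs(a) > tol)


# Hypothetical 2 x 3 dictionary (m = 2 < p = 3), full row rank.
D = [
    [1.0, 0.0, 1.0],
    [0.0, 1.0, 1.0],
]
x = [1.0, 1.0]

# Two different coefficient vectors that both satisfy x = D alpha exactly.
alpha_dense = [1.0, 1.0, 0.0]   # uses the first two atoms
alpha_sparse = [0.0, 0.0, 1.0]  # uses only the third atom

print(matvec(D, alpha_dense), l0_norm(alpha_dense))
print(matvec(D, alpha_sparse), l0_norm(alpha_sparse))
```

Both vectors reproduce `x`, but the second does so with a single atom, which is the kind of solution the sparse representation problem looks for.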

Frobenius Norm

In mathematics, a matrix norm is a vector norm in a vector space whose elements (vectors) are matrices (of given dimensions).

Preliminaries: Given a field $K$ of either real or complex numbers, let $K^{m \times n}$ be the $K$-vector space of matrices with $m$ rows and $n$ columns and entries in the field $K$. A matrix norm is a norm on $K^{m \times n}$. This article will always write such norms with double vertical bars (like so: $\|A\|$). Thus, the matrix norm is a function $\|\cdot\| : K^{m \times n} \to \mathbb{R}$ that must satisfy the following properties. For all scalars $\alpha \in K$ and matrices $A, B \in K^{m \times n}$:

* $\|A\| \ge 0$ (*positive-valued*)
* $\|A\| = 0 \iff A = 0_{m,n}$ (*definite*)
* $\|\alpha A\| = |\alpha| \, \|A\|$ (*absolutely homogeneous*)
* $\|A + B\| \le \|A\| + \|B\|$ (*sub-additive* or satisfying the *triangle inequality*)

The only feature distinguishing matrices from rearranged vectors is multiplication. Matrix norms are particularly useful if they are also sub-multiplicative: ...
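As a rough numerical illustration of the norm properties listed above, the sketch below uses the Frobenius norm (the square root of the sum of squared absolute entries), which the excerpt itself does not define. The helper names and the matrices `A` and `B` are assumptions chosen for the example, and a check on two matrices is of course not a proof.

```python
import math


def frobenius_norm(A):
    """Square root of the sum of squared absolute values of all entries."""
    return math.sqrt(sum(abs(v) ** 2 for row in A for v in row))


def mat_add(A, B):
    """Entrywise sum of two matrices of equal shape."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def mat_scale(c, A):
    """Multiply every entry of A by the scalar c."""
    return [[c * a for a in row] for row in A]


# Hypothetical example matrices.
A = [[1.0, 2.0], [3.0, 4.0]]
B = [[0.0, -1.0], [2.0, 0.5]]
alpha = -3.0

norm_A = frobenius_norm(A)
norm_B = frobenius_norm(B)

# Sub-additivity (triangle inequality): ||A + B|| <= ||A|| + ||B||
print(frobenius_norm(mat_add(A, B)) <= norm_A + norm_B)

# Absolute homogeneity: ||alpha A|| = |alpha| ||A|| (up to rounding)
print(math.isclose(frobenius_norm(mat_scale(alpha, A)), abs(alpha) * norm_A))
```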

Lasso (statistics)

In statistics and machine learning, lasso (least absolute shrinkage and selection operator; also Lasso or LASSO) is a regression analysis method that performs both variable selection and regularization in order to enhance the prediction accuracy and interpretability of the resulting statistical model. It was originally introduced in geophysics, and later by Robert Tibshirani, who coined the term. Lasso was originally formulated for linear regression models. This simple case reveals a substantial amount about the estimator, including its relationship to ridge regression and best subset selection, and the connection between lasso coefficient estimates and so-called soft thresholding. It also reveals that (like standard linear regression) the coefficient estimates need not be unique if covariates are collinear. Though originally defined for linear regression, lasso regularization is easily extended to other statistical models including generalized linear models, generali ...
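The soft-thresholding connection mentioned above can be sketched as follows. The sketch assumes an orthonormal design ($X^\mathsf{T} X = I$) and the objective $\tfrac{1}{2}\|y - X\beta\|_2^2 + \lambda \|\beta\|_1$; under those assumptions each lasso coefficient is the soft-thresholded ordinary least squares coefficient. Other scalings of the objective change the threshold. The function name and the sample coefficients are illustrative only.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam, setting it to zero if |z| <= lam."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


# Hypothetical least squares coefficients under an orthonormal design.
ols_coefficients = [3.0, -0.5, 1.2, -2.0]
lam = 1.0

lasso_coefficients = [soft_threshold(b, lam) for b in ols_coefficients]
print(lasso_coefficients)
```

Coefficients whose magnitude is at most `lam` become exactly zero, which is how lasso performs variable selection in this simple case; the remaining coefficients are shrunk toward zero by `lam`.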

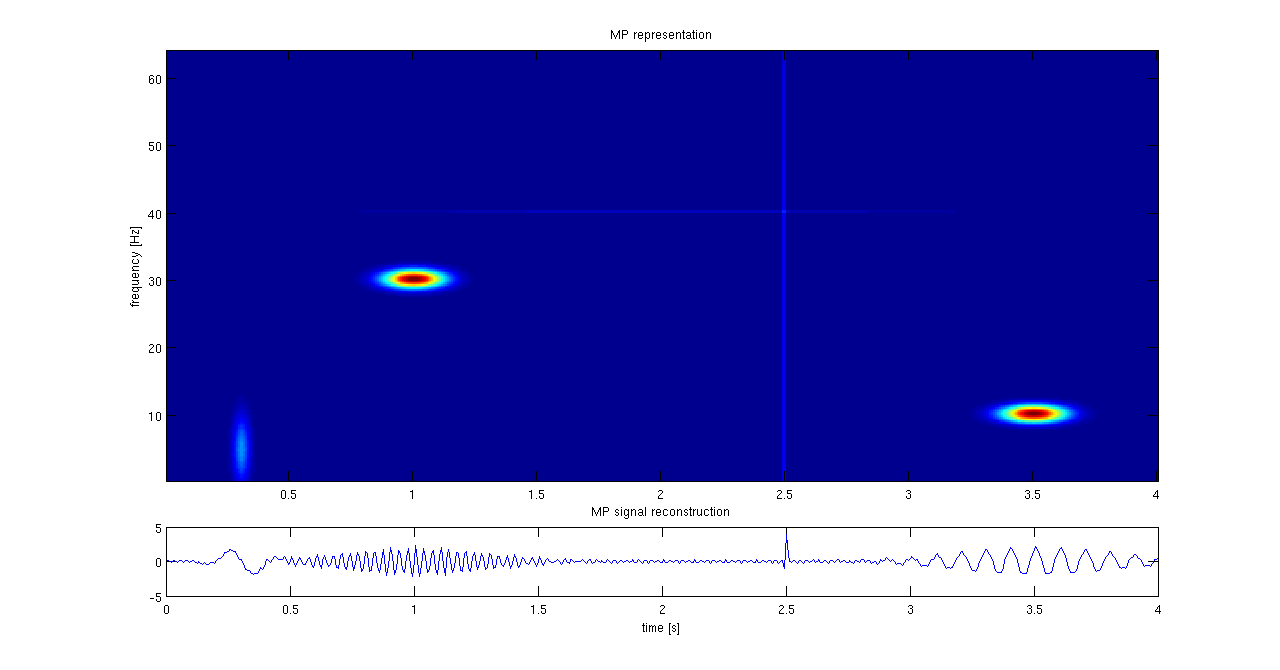

Matching Pursuit

Matching pursuit (MP) is a sparse approximation algorithm which finds the "best matching" projections of multidimensional data onto the span of an over-complete (i.e., redundant) dictionary $D$. The basic idea is to approximately represent a signal $f$ from Hilbert space $H$ as a weighted sum of finitely many functions $g_{\gamma_n}$ (called atoms) taken from $D$. An approximation with $N$ atoms has the form

$$f(t) \approx \hat f_N(t) := \sum_{n=1}^{N} a_n g_{\gamma_n}(t)$$

where $g_{\gamma_n}$ is the $\gamma_n$th column of the matrix $D$ and $a_n$ is the scalar weighting factor (amplitude) for the atom $g_{\gamma_n}$. Normally, not every atom in $D$ will be used in this sum. Instead, matching pursuit chooses the atoms one at a time in order to maximally (greedily) reduce the approximation error. This is achieved by finding the atom that has the highest inner product with the signal (assuming the atoms are normalized), subtracting from the signal an approximation that uses only that one atom, and repeating the process until the signal is satisfactorily d ...
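The greedy loop described above can be sketched in a few lines for finite-dimensional real signals. This is a minimal illustration, not a reference implementation: it assumes unit-norm atoms stored as Python lists, and the names `matching_pursuit`, `n_iter`, and `tol`, as well as the example dictionary, are assumptions made for the example.

```python
import math


def dot(u, v):
    """Inner product of two real vectors."""
    return sum(a * b for a, b in zip(u, v))


def matching_pursuit(signal, atoms, n_iter=10, tol=1e-10):
    """Greedily approximate signal as a weighted sum of unit-norm atoms."""
    residual = list(signal)
    coeffs = [0.0] * len(atoms)
    for _ in range(n_iter):
        # Select the atom with the largest absolute inner product.
        products = [dot(residual, g) for g in atoms]
        k = max(range(len(atoms)), key=lambda j: abs(products[j]))
        a = products[k]
        if abs(a) < tol:
            break  # nothing left that any atom can explain
        # Accumulate the weight and subtract that atom's contribution.
        coeffs[k] += a
        residual = [r - a * g for r, g in zip(residual, atoms[k])]
    return coeffs, residual


# Hypothetical over-complete dictionary in R^2: two axes plus a diagonal.
s = 1.0 / math.sqrt(2.0)
atoms = [[1.0, 0.0], [0.0, 1.0], [s, s]]
signal = [2.0, 2.0]

coeffs, residual = matching_pursuit(signal, atoms)
print(coeffs, residual)
```

In this example the diagonal atom has the largest inner product with the signal, so a single greedy step already explains it and the other two atoms are never used.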

Basis (linear Algebra)

In mathematics, a set $B$ of vectors in a vector space $V$ is called a basis if every element of $V$ may be written in a unique way as a finite linear combination of elements of $B$. The coefficients of this linear combination are referred to as components or coordinates of the vector with respect to $B$. The elements of a basis are called basis vectors. Equivalently, a set $B$ is a basis if its elements are linearly independent and every element of $V$ is a linear combination of elements of $B$. In other words, a basis is a linearly independent spanning set. A vector space can have several bases; however, all the bases have the same number of elements, called the *dimension* of the vector space. This article deals mainly with finite-dimensional vector spaces. However, many of the principles are also valid for infinite-dimensional vector spaces.

Definition: A basis $B$ of a vector space $V$ over a field $F$ (such as the real numbers $\mathbb{R}$ or the complex numbers $\mathbb{C}$) is a linearly independent subset of $V$ that spans $V$. This me ...

Principal Component Analysis

Principal component analysis (PCA) is a popular technique for analyzing large datasets containing a high number of dimensions/features per observation, increasing the interpretability of data while preserving the maximum amount of information, and enabling the visualization of multidimensional data. Formally, PCA is a statistical technique for reducing the dimensionality of a dataset. This is accomplished by linearly transforming the data into a new coordinate system where (most of) the variation in the data can be described with fewer dimensions than the initial data. Many studies use the first two principal components in order to plot the data in two dimensions and to visually identify clusters of closely related data points. Principal component analysis has applications in many fields such as population genetics, microbiome studies, and atmospheric science. The principal components of a collection of points in a real coordinate space are a sequence of p unit vectors, where th ...