Dependency Network (Graphical Model)

Dependency networks (DNs) are graphical models, similar to Markov networks, in which each vertex (node) corresponds to a random variable and each edge captures a dependency between variables. Unlike Bayesian networks, DNs may contain cycles. Each node is associated with a conditional probability table, which determines the realization of the random variable given its parents. Markov blanket: In a Bayesian network, the Markov blanket of a node is the set of parents and children of that node, together with the children's parents. The values of the parents and children of a node evidently give information about that node. However, its children's parents also have to be included in the Markov blanket, because they can be used to explain away the node in question. In a Markov random field, the Markov blanket of a node is simply its adjacent (or neighboring) nodes. In a dependency network, the Markov blanket of a node is simply the set of its parents. Dependency network versus Baye ...
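The Bayesian-network definition of the Markov blanket given above (parents, children, and the children's other parents) can be sketched in a few lines. This is only an illustration: the function name `markov_blanket`, the `{child: set_of_parents}` encoding, and the example graph are assumptions made here, not part of the source.

```python
def markov_blanket(parents, node):
    """Markov blanket of `node` in a Bayesian network.

    `parents` maps every node to the set of its parents (an assumed
    encoding of the DAG). The blanket is the union of the node's
    parents, its children, and its children's other parents.
    """
    node_parents = set(parents.get(node, ()))
    # Children are the nodes that list `node` among their parents.
    children = {c for c, ps in parents.items() if node in ps}
    # Co-parents: every parent of every child (the node itself is removed below).
    co_parents = set()
    for child in children:
        co_parents |= set(parents[child])
    return (node_parents | children | co_parents) - {node}


# Hypothetical DAG: A -> C, B -> C, C -> D, E -> D
dag = {"A": set(), "B": set(), "C": {"A", "B"}, "D": {"C", "E"}, "E": set()}

blanket_c = markov_blanket(dag, "C")  # parents A, B; child D; co-parent E
blanket_a = markov_blanket(dag, "A")  # child C; co-parent B
```

In a dependency network the corresponding computation would reduce to `parents[node]`, since the blanket there is just the set of parents.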

Graphical Model

A graphical model, also called a probabilistic graphical model (PGM) or structured probabilistic model, is a probabilistic model for which a graph expresses the conditional dependence structure between random variables. Graphical models are commonly used in probability theory, statistics (particularly Bayesian statistics), and machine learning. Types of graphical models: Generally, probabilistic graphical models use a graph-based representation as the foundation for encoding a distribution over a multi-dimensional space and a graph that is a compact or factorized representation of a set of independences that hold in the specific distribution. Two branches of graphical representations of distributions are commonly used, namely, Bayesian networks and Markov random fields. Both families encompass the properties of factorization and independences, but they differ in the set of independences they can encode and the factorization of the distribution that they induce ...

Markov Network

In the domain of physics and probability, a Markov random field (MRF), Markov network or undirected graphical model is a set of random variables having a Markov property described by an undirected graph. In other words, a random field is said to be a Markov random field if it satisfies Markov properties. The concept originates from the Sherrington–Kirkpatrick model. A Markov network or MRF is similar to a Bayesian network in its representation of dependencies; the differences being that Bayesian networks are directed and acyclic, whereas Markov networks are undirected and may be cyclic. Thus, a Markov network can represent certain dependencies that a Bayesian network cannot (such as cyclic dependencies); on the other hand, it cannot represent certain dependencies that a Bayesian network can (such as induced dependencies). The underlying graph of a Markov random field may be finite or infinite ...

Bayesian Network

A Bayesian network (also known as a Bayes network, Bayes net, belief network, or decision network) is a probabilistic graphical model that represents a set of variables and their conditional dependencies via a directed acyclic graph (DAG). Bayesian networks are ideal for taking an event that occurred and predicting the likelihood that any one of several possible known causes was the contributing factor. For example, a Bayesian network could represent the probabilistic relationships between diseases and symptoms. Given symptoms, the network can be used to compute the probabilities of the presence of various diseases. Efficient algorithms can perform inference and learning in Bayesian networks. Bayesian networks that model sequences of variables (e.g. speech signals or protein sequences) are called dynamic Bayesian networks. Generalizations of Bayesian networks that can represent and solve decision problems under uncertainty are called influence diagrams. Graphical mode ...
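The disease and symptom example can be made concrete with the smallest possible network, a single edge Disease -> Symptom. The sketch below applies Bayes' rule to that two-node case; the function name and all probabilities are hypothetical values chosen for illustration, not figures from the source.

```python
def posterior_disease(prior, p_symptom_given_disease, p_symptom_given_healthy):
    """P(disease | symptom observed) for a two-node network Disease -> Symptom."""
    # Joint probability of each cause together with the observed symptom.
    joint_disease = prior * p_symptom_given_disease
    joint_healthy = (1.0 - prior) * p_symptom_given_healthy
    # Normalize by the total probability of observing the symptom.
    return joint_disease / (joint_disease + joint_healthy)


# Hypothetical numbers: 1% prevalence, 90% sensitivity, 5% false-positive rate.
posterior = posterior_disease(0.01, 0.90, 0.05)
```

Even with a sensitive symptom, the posterior stays well below one half here because the assumed disease is rare. Larger networks need general inference algorithms rather than this closed-form calculation.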

Markov Blanket

In statistics and machine learning, when one wants to infer a random variable from a set of variables, usually a subset is enough, and the other variables are useless. Such a subset that contains all the useful information is called a Markov blanket. If a Markov blanket is minimal, meaning that it cannot drop any variable without losing information, it is called a Markov boundary. Identifying a Markov blanket or a Markov boundary helps to extract useful features. The terms Markov blanket and Markov boundary were coined by Judea Pearl in 1988. Markov blanket: A Markov blanket of a random variable $Y$ in a random variable set $\mathcal{S}=\{X_1,\ldots,X_n\}$ is any subset $\mathcal{S}_1$ of $\mathcal{S}$, conditioned on which the other variables are independent of $Y$: $Y \perp\!\!\!\perp \mathcal{S}\backslash\mathcal{S}_1 \mid \mathcal{S}_1$. This means that $\mathcal{S}_1$ contains at least all the information one needs to infer $Y$, where the variables in $\mathcal{S}\backslash\mathcal{S}_1$ are redundant. In general, a given Markov blanket ...

Markov Random Field

In the domain of physics and probability, a Markov random field (MRF), Markov network or undirected graphical model is a set of random variables having a Markov property described by an undirected graph. In other words, a random field is said to be a Markov random field if it satisfies Markov properties. The concept originates from the Sherrington–Kirkpatrick model. A Markov network or MRF is similar to a Bayesian network in its representation of dependencies; the differences being that Bayesian networks are directed and acyclic, whereas Markov networks are undirected and may be cyclic. Thus, a Markov network can represent certain dependencies that a Bayesian network cannot (such as cyclic dependencies); on the other hand, it cannot represent certain dependencies that a Bayesian network can (such as induced dependencies). The underlying graph of a Markov random field may be finite or infinite. When the joint probability density of the random variables is strictly positive, it ...

Joint Probability Distribution

Given two random variables that are defined on the same probability space, the joint probability distribution is the corresponding probability distribution on all possible pairs of outputs. The joint distribution can just as well be considered for any given number of random variables. The joint distribution encodes the marginal distributions, i.e. the distributions of each of the individual random variables. It also encodes the conditional probability distributions, which deal with how the outputs of one random variable are distributed when given information on the outputs of the other random variable(s). In the formal mathematical setup of measure theory, the joint distribution is given by the pushforward measure, by the map obtained by pairing together the given random variables, of the sample space's probability measure. In the case of real-valued random variables, the joint distribution, as a particular multivariate distribution, may be expressed by a multivariate cumulativ ...
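The statement that a joint distribution encodes both the marginal and the conditional distributions can be illustrated with a small discrete table. The following is a minimal sketch for two variables; the weather and ground variables, their probabilities, and the helper names `marginal` and `conditional` are all made up for this example.

```python
# Hypothetical joint distribution over (weather, ground) pairs.
joint = {
    ("sun", "dry"): 0.4,
    ("sun", "wet"): 0.1,
    ("rain", "dry"): 0.1,
    ("rain", "wet"): 0.4,
}


def marginal(joint, axis):
    """Marginal of one variable: sum the joint over the other variable."""
    out = {}
    for outcome, p in joint.items():
        out[outcome[axis]] = out.get(outcome[axis], 0.0) + p
    return out


def conditional(joint, given_axis, given_value):
    """Distribution of the other variable given that one variable is fixed."""
    other_axis = 1 - given_axis
    matching = {o: p for o, p in joint.items() if o[given_axis] == given_value}
    norm = sum(matching.values())  # marginal probability of the condition
    return {o[other_axis]: p / norm for o, p in matching.items()}


weather = marginal(joint, 0)                      # P(weather)
ground_given_rain = conditional(joint, 0, "rain")  # P(ground | weather = rain)
```

The conditional is obtained by restricting the table to the observed value and renormalizing, which is the discrete form of dividing the joint by the marginal.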

Gibbs Sampling

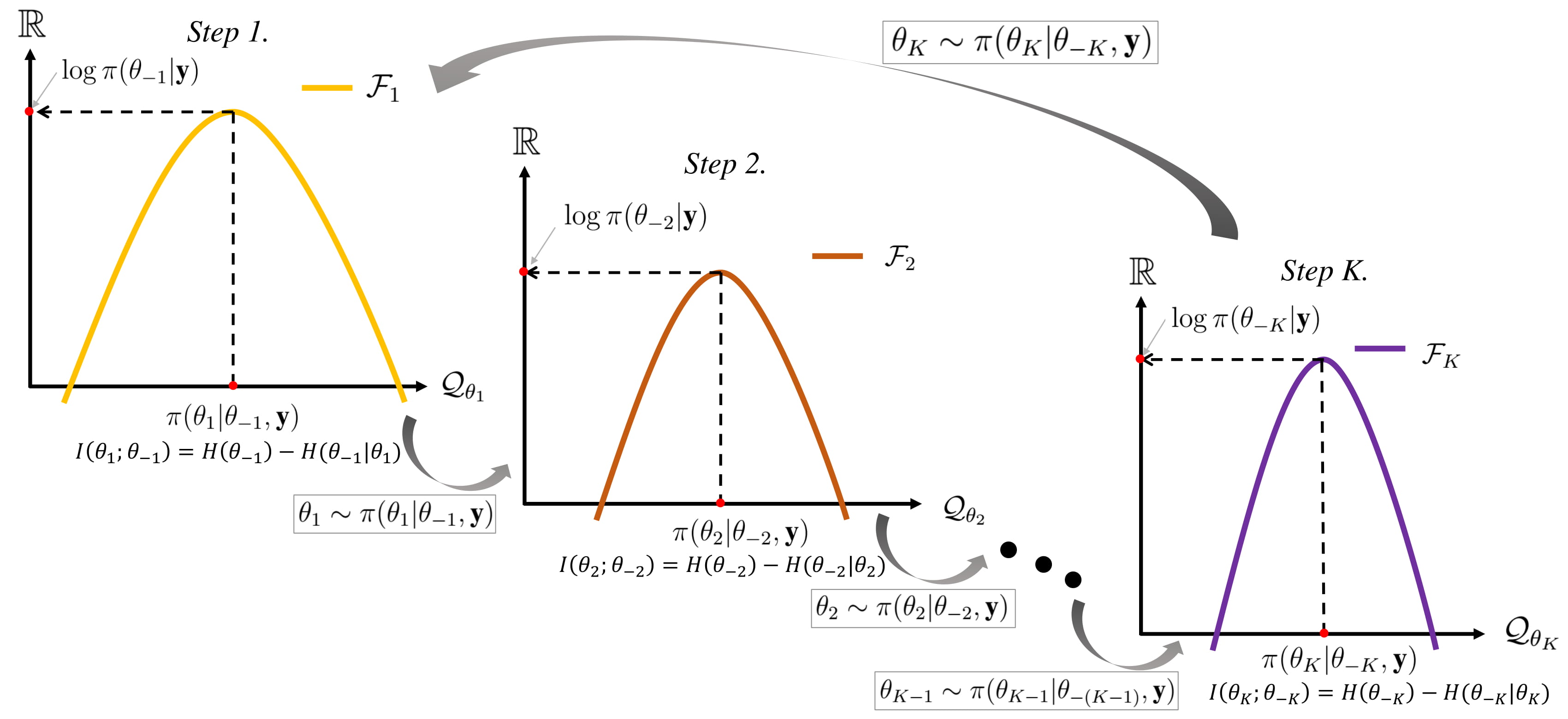

In statistics, Gibbs sampling or a Gibbs sampler is a Markov chain Monte Carlo (MCMC) algorithm for obtaining a sequence of observations that are approximately drawn from a specified multivariate probability distribution, when direct sampling is difficult. This sequence can be used to approximate the joint distribution (e.g., to generate a histogram of the distribution); to approximate the marginal distribution of one of the variables, or some subset of the variables (for example, the unknown parameters or latent variables); or to compute an integral (such as the expected value of one of the variables). Typically, some of the variables correspond to observations whose values are known, and hence do not need to be sampled. Gibbs sampling is commonly used as a means of statistical inference, especially Bayesian inference. It is a randomized algorithm (i.e. an algorithm that makes use of random numbers), and is an alternative to deterministic algorithms for statistical inferenc ...
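As an illustration of the idea, the sketch below runs a Gibbs sampler on a standard bivariate normal distribution with correlation `rho`, a textbook case in which each full conditional is itself normal: x given y is N(rho * y, 1 - rho^2), and symmetrically for y. The target distribution, the function names, and the burn-in length are assumptions chosen for this example rather than anything prescribed by the source.

```python
import math
import random


def gibbs_bivariate_normal(rho, n_samples, burn_in=500, seed=0):
    """Gibbs sampler for a standard bivariate normal with correlation rho."""
    rng = random.Random(seed)
    cond_sd = math.sqrt(1.0 - rho * rho)  # std. dev. of each full conditional
    x, y = 0.0, 0.0                       # arbitrary starting point
    samples = []
    for step in range(burn_in + n_samples):
        # Resample each variable in turn from its conditional given the other.
        x = rng.gauss(rho * y, cond_sd)
        y = rng.gauss(rho * x, cond_sd)
        if step >= burn_in:               # discard the warm-up draws
            samples.append((x, y))
    return samples


def sample_correlation(samples):
    """Pearson correlation of a list of (x, y) pairs."""
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in samples) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in samples) / n
    var_y = sum((y - mean_y) ** 2 for _, y in samples) / n
    return cov / math.sqrt(var_x * var_y)


draws = gibbs_bivariate_normal(rho=0.6, n_samples=20000)
estimated_rho = sample_correlation(draws)
```

The draws are correlated from one step to the next, so the estimate converges more slowly than it would with independent samples; the sampler never evaluates the joint density directly, only the two conditionals.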

Collaborative Filtering

Collaborative filtering (CF) is a technique used by recommender systems (Francesco Ricci, Lior Rokach, and Bracha Shapira, "Introduction to Recommender Systems Handbook", Recommender Systems Handbook, Springer, 2011, pp. 1-35). Collaborative filtering has two senses, a narrow one and a more general one. In the newer, narrower sense, collaborative filtering is a method of making automatic predictions (filtering) about the interests of a user by collecting preferences or taste information from many users (collaborating). The underlying assumption of the collaborative filtering approach is that if a person A has the same opinion as a person B on an issue, A is more likely to have B's opinion on a different issue than that of a randomly chosen person. For example, a collaborative filtering recommendation system for preferences in television programming could make predictions about which television show a user should like given a partial list of that user's tastes (likes or dislikes ...
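One common way to act on this assumption is user-based filtering: predict a user's rating for an unseen item as a similarity-weighted average of other users' ratings. The sketch below uses cosine similarity over co-rated items; the choice of similarity measure, the function names, and the ratings data are illustrative assumptions, not the method of any particular system.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two rating dicts, restricted to shared items."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[i] * b[i] for i in shared)
    norm_a = math.sqrt(sum(a[i] ** 2 for i in shared))
    norm_b = math.sqrt(sum(b[i] ** 2 for i in shared))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def predict_rating(ratings, user, item):
    """Similarity-weighted average of other users' ratings for `item`."""
    weighted_sum = 0.0
    total_weight = 0.0
    for other, other_ratings in ratings.items():
        if other == user or item not in other_ratings:
            continue
        sim = cosine_similarity(ratings[user], other_ratings)
        weighted_sum += sim * other_ratings[item]
        total_weight += abs(sim)
    # None signals that no other user rated the item (or none are similar).
    return weighted_sum / total_weight if total_weight else None


# Hypothetical ratings of television programs on a 1-5 scale.
ratings = {
    "alice": {"news": 5, "drama": 1},
    "bob": {"news": 5, "drama": 1, "quiz": 4},
    "carol": {"news": 1, "drama": 5, "quiz": 2},
}

alice_quiz = predict_rating(ratings, "alice", "quiz")
```

Because alice's known ratings match bob's far more closely than carol's, the prediction lands nearer to bob's rating of the quiz show than to carol's.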

Relational Dependency Network

Relational dependency networks (RDNs) are graphical models which extend dependency networks to account for relational data. Relational data is data organized into one or more tables, which are cross-related through standard fields. A relational database is a canonical example of a system that serves to maintain relational data. A relational dependency network can be used to characterize the knowledge contained in a database. Introduction: Relational dependency networks (RDNs) aim to obtain the joint probability distribution over the variables of a dataset represented in the relational domain. They are based on dependency networks (DNs) and extend them to the relational setting. RDNs have efficient learning methods in which the parameters are learned independently, with the conditional probability distributions estimated separately. Since there may be some inconsistencies due to the independent learning method, RDNs use Gibbs sampling to recover the joint distribution, like ...