
Delayed Column Generation

Column generation, or delayed column generation, is an efficient algorithm for solving large linear programs. The overarching idea is that many linear programs are too large for all of their variables to be considered explicitly. The procedure therefore starts by solving the program with only a subset of its variables. Then, iteratively, variables that have the potential to improve the objective function are added to the program. Once it can be shown that adding new variables would no longer improve the value of the objective function, the procedure stops. The hope when applying a column generation algorithm is that only a very small fraction of the variables will be generated. This hope is supported by the fact that in the optimal solution, most variables will be non-basic and assume a value of zero, so the optimal solution can be found without them. In many cases, this method makes it possible to solve large linear programs that would otherwise be intractable. The classical example of ...
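The iterative loop can be sketched on a deliberately tiny case. This is a minimal illustration under strong assumptions, not a general solver: the linear program is a minimization with a single covering constraint, so the restricted master problem can be solved by inspection (the column with the smallest cost-to-coefficient ratio is basic, and that ratio is the dual price). The function names, the instance data and the exhaustive pricing scan are hypothetical; in realistic applications the pricing step is usually an optimization subproblem rather than a scan over stored columns.

```python
from fractions import Fraction

def solve_restricted_master(active, demand):
    """Solve min sum(c_j x_j) s.t. sum(a_j x_j) >= demand, x >= 0 over `active`.

    Assumes one covering constraint with all a_j > 0 and demand > 0, so an
    optimal basic solution uses only the column with the smallest c_j / a_j.
    Returns (objective value, dual price y, index of the basic column).
    """
    basic = min(active, key=lambda j: Fraction(active[j][0], active[j][1]))
    cost, coeff = active[basic]
    y = Fraction(cost, coeff)          # dual price of the single constraint
    return y * demand, y, basic

def price(all_columns, y):
    """Pricing step: return the column with the smallest reduced cost c_j - y * a_j."""
    j = min(all_columns, key=lambda k: all_columns[k][0] - y * all_columns[k][1])
    return j, all_columns[j][0] - y * all_columns[j][1]

def column_generation(all_columns, demand, start):
    """Add columns one at a time until no column has a negative reduced cost."""
    active = {j: all_columns[j] for j in start}
    while True:
        objective, y, basic = solve_restricted_master(active, demand)
        j, reduced_cost = price(all_columns, y)
        if reduced_cost >= 0:          # no column can improve the objective
            return objective, basic, sorted(active)
        active[j] = all_columns[j]     # bring the improving column into the master

# Hypothetical instance: column index -> (cost c_j, coefficient a_j).
COLUMNS = {0: (10, 1), 1: (9, 2), 2: (12, 4), 3: (20, 5), 4: (7, 1), 5: (40, 12)}

objective, basic, used = column_generation(COLUMNS, demand=12, start=[0])
# Starting from column 0 alone, only columns 5 and 2 are added before the
# pricing step finds no negative reduced cost; columns 1, 3 and 4 never
# enter the master problem.
```

On this instance the loop stops with three of the six columns in the master problem, which is the behaviour the method relies on at scale.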

Linear Programming

Linear programming (LP), also called linear optimization, is a method to achieve the best outcome (such as maximum profit or lowest cost) in a mathematical model whose requirements and objective are represented by linear relationships. Linear programming is a special case of mathematical programming (also known as mathematical optimization). More formally, linear programming is a technique for the optimization of a linear objective function, subject to linear equality and linear inequality constraints. Its feasible region is a convex polytope, which is a set defined as the intersection of finitely many half spaces, each of which is defined by a linear inequality. Its objective function is a real-valued affine (linear) function defined on this polytope. A linear programming algorithm finds a point in the po ...
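To make the geometric picture concrete, the sketch below solves a two-variable linear program by brute force: it intersects every pair of constraint boundaries, keeps the intersection points that satisfy all constraints (the vertices of the polytope), and returns the best one. This is only an illustration of the fact that an optimum of a bounded, feasible LP is attained at a vertex; it is not how practical solvers work. The function name and the example data are assumptions.

```python
from fractions import Fraction
from itertools import combinations

def solve_2d_lp(c, constraints):
    """Maximize c[0]*x + c[1]*y subject to a*x + b*y <= r for each (a, b, r).

    Non-negativity must be passed as explicit constraints. Assumes integer
    data and a feasible region that is nonempty and bounded.
    Returns (optimal value, x, y), or None if no vertex is feasible.
    """
    best = None
    for (a1, b1, r1), (a2, b2, r2) in combinations(constraints, 2):
        det = a1 * b2 - a2 * b1
        if det == 0:
            continue  # parallel boundary lines do not define a vertex
        # Cramer's rule for the intersection of the two boundary lines.
        x = Fraction(r1 * b2 - r2 * b1, det)
        y = Fraction(a1 * r2 - a2 * r1, det)
        if all(a * x + b * y <= r for a, b, r in constraints):
            value = c[0] * x + c[1] * y
            if best is None or value > best[0]:
                best = (value, x, y)
    return best

# Hypothetical example: maximize 3x + 2y
# subject to x + y <= 4, x + 3y <= 6, x <= 3, x >= 0, y >= 0.
example = solve_2d_lp(
    (3, 2),
    [(1, 1, 4), (1, 3, 6), (1, 0, 3), (-1, 0, 0), (0, -1, 0)],
)
# The optimum is attained at the vertex (3, 1) with objective value 11.
```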

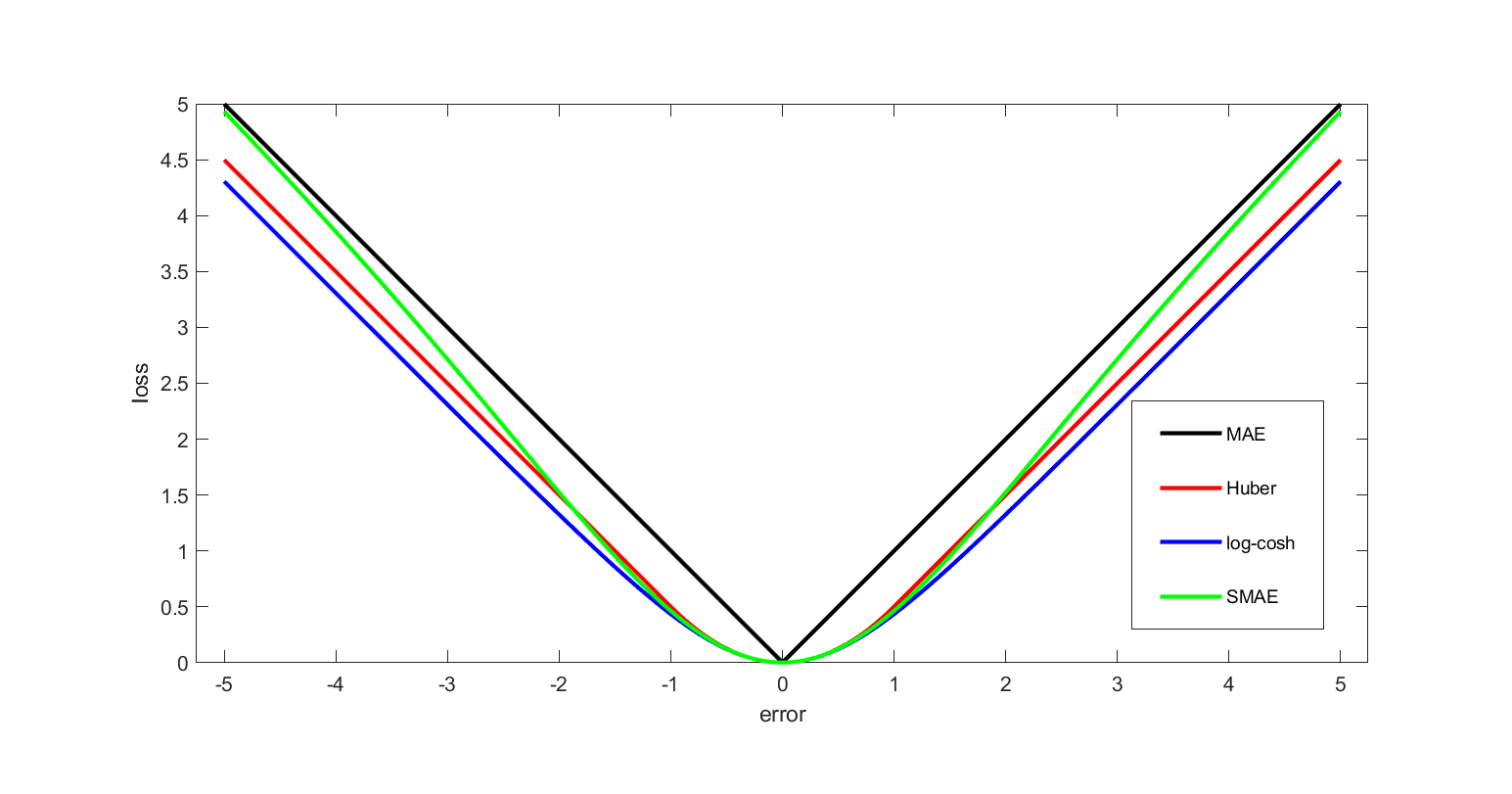

Objective Function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economics, for example, ...

Cutting Stock Problem

In operations research, the cutting-stock problem is the problem of cutting standard-sized pieces of stock material, such as paper rolls or sheet metal, into pieces of specified sizes while minimizing material wasted. It is an optimization problem in mathematics that arises from applications in industry. In terms of computational complexity, the problem is an NP-hard problem reducible to the knapsack problem. The problem can be formulated as an integer linear programming problem. Illustration of the one-dimensional cutting-stock problem: a paper machine can produce an unlimited number of master (jumbo) rolls, each 5600 mm wide. The following 13 items must be cut, in the table below. The important thing about this kind of problem is that many different product units can be made from the same master roll, and the number of possible combinations is itself very large, in general, and not trivial to enumerate. The probl ...
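The growth in the number of combinations can be explored with a small enumeration. The sketch below lists every maximal cutting pattern, meaning a choice of how many pieces of each width to cut from one roll such that the leftover is too narrow for any further piece. The function name and the toy widths are assumptions chosen so the output is easy to check by hand; the item table from the illustration is not reproduced here.

```python
def cutting_patterns(roll_width, widths):
    """Yield every maximal pattern as a tuple of counts, one count per width.

    A pattern is maximal when the unused width is smaller than the smallest
    item, so no further piece could be cut from the leftover.
    Assumes positive integer widths.
    """
    smallest = min(widths)

    def extend(i, remaining, counts):
        if i == len(widths):
            if remaining < smallest:
                yield tuple(counts)
            return
        # Try every feasible number of pieces of width widths[i].
        for n in range(remaining // widths[i] + 1):
            yield from extend(i + 1, remaining - n * widths[i], counts + [n])

    yield from extend(0, roll_width, [])

# Hypothetical toy instance: a roll of width 10 and items of width 3, 4 and 5.
patterns = list(cutting_patterns(10, [3, 4, 5]))
# Six maximal patterns exist, for example (2, 1, 0): two pieces of width 3
# and one piece of width 4, with no waste.
```

Even this toy instance has six maximal patterns for three widths. With more item widths and a wide master roll the count grows quickly, which is why column generation formulations generate patterns on demand instead of listing them all.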

Dantzig–Wolfe Decomposition

Dantzig–Wolfe decomposition is an algorithm for solving linear programming problems with special structure. It was originally developed by George Dantzig and Philip Wolfe and initially published in 1960. Many texts on linear programming have sections dedicated to discussing this decomposition algorithm. Dantzig–Wolfe decomposition relies on delayed column generation for improving the tractability of large-scale linear programs. For most linear programs solved via the revised simplex algorithm, at each step, most columns (variables) are not in the basis. In such a scheme, a master problem containing at least the currently active columns (the basis) uses a subproblem or subproblems to generate columns for entry into the basis such that their inclusion improves the objective function. Required form: in order to use Dantzig–Wolfe decomposition, the constraint matrix of the linear program must have a specific form. A set of constraints must be identified as "connecting" ...

Crew Scheduling

Crew scheduling is the process of assigning crews to operate transportation systems, such as rail lines or airlines. Most transportation systems use software to manage the crew scheduling process. Crew scheduling becomes more and more complex as variables are added to the problem. These variables can be as simple as one location, one skill requirement, one shift of work and one set roster of people. In the transportation industries, such as rail or, above all, air travel, these variables become very complex. In air travel, for instance, numerous rules or "constraints" are introduced. These mainly deal with legalities relating to work shifts and time, and a crew member's qualifications for working on a particular aircraft. Adding numerous locations, collective bargaining agreements and federal labor laws introduces further considerations for the problem-solving method. Fuel is also a major consideration as aircraft and other vehicles require a lot of costly fuel t ...

Vehicle Routing

The vehicle routing problem (VRP) is a combinatorial optimization and integer programming problem which asks "What is the optimal set of routes for a fleet of vehicles to traverse in order to deliver to a given set of customers?" It generalises the travelling salesman problem (TSP). It first appeared in a paper by George Dantzig and John Ramser in 1959, in which the first algorithmic approach was written and was applied to petrol deliveries. Often, the context is that of delivering goods located at a central depot to customers who have placed orders for such goods. The objective of the VRP is to minimize the total route cost. In 1964, Clarke and Wright improved on Dantzig and Ramser's approach using an effective greedy algorithm called the savings algorithm. Determining the optimal solution to VRP is NP-hard, so the size of problems that can be optimally solved using mathematical programming or combinatorial optimization can be limited. Therefore, commercial solvers tend to use ...

Reduced Cost

In linear programming, reduced cost, or opportunity cost, is the amount by which an objective function coefficient would have to improve (an increase for a maximization problem, a decrease for a minimization problem) before it would be possible for the corresponding variable to assume a positive value in the optimal solution. It is the cost of increasing a variable by a small amount, i.e., the first derivative from a certain point on the polyhedron that constrains the problem. When the point is a vertex of the polyhedron, the variable with the most extreme cost, negatively for minimization and positively for maximization, is sometimes referred to as the steepest edge. Given a system minimize \mathbf{c}^T\mathbf{x} subject to \mathbf{A}\mathbf{x}\leq\mathbf{b}, \mathbf{x}\geq 0, the reduced cost vector can be computed as \mathbf{c} - \mathbf{A}^T\mathbf{y}, where \mathbf{y} is the dual cost vector. It follows directly that for a minimization problem, any non-basic variables at their lower bounds with strictly negative reduce ...
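As a small worked instance of the reduced cost vector (cost vector minus the transposed constraint matrix times the dual vector), the sketch below evaluates it with plain Python lists. The function name and the data are assumptions. The dual vector y is taken as given, as if reported by a solver, and sign conventions for duals differ between solvers, so the values here follow the minimize / less-than-or-equal form stated above.

```python
def reduced_costs(c, A, y):
    """Return the reduced cost vector c - A^T y.

    c: cost vector (one entry per variable)
    A: constraint matrix as a list of rows (one row per constraint)
    y: dual cost vector (one entry per constraint), assumed to be given
    """
    return [
        c[j] - sum(A[i][j] * y[i] for i in range(len(A)))
        for j in range(len(c))
    ]

# Hypothetical example: minimize 2*x1 + 3*x2 subject to x1 + x2 >= 4, x >= 0.
# Written in the "A x <= b" form this is -x1 - x2 <= -4, whose dual value
# at the optimum x = (4, 0) is y = -2 under this sign convention.
c = [2, 3]
A = [[-1, -1]]
y = [-2]

rc = reduced_costs(c, A, y)
# rc[0] is 0 for the basic variable x1; rc[1] is 1 for the non-basic x2.
# No reduced cost is negative, so no variable can lower the objective further.
```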

Without Loss Of Generality

"Without loss of generality" (often abbreviated to WOLOG, WLOG or w.l.o.g.; less commonly stated as "without any loss of generality" or "with no loss of generality") is a frequently used expression in mathematics. The term is used to indicate the assumption that what follows is chosen arbitrarily, narrowing the premise to a particular case, but does not affect the validity of the proof in general. The other cases are sufficiently similar to the one presented that proving them follows by essentially the same logic. As a result, once a proof is given for the particular case, it is trivial to adapt it to prove the conclusion in all other cases. In many scenarios, the use of "without loss of generality" is made possible by the presence of symmetry. For example, if some property P(x,y) of real numbers is known to be symmetric in x and y, namely that P(x,y) is equivalent to P(y,x), then in proving that P(x,y) holds for every ...

Combinatorial Algorithms

An algorithm is fundamentally a set of rules or defined procedures that is typically designed and used to solve a specific problem or a broad set of problems. Broadly, algorithms define processes, sets of rules, or methodologies that are to be followed in calculations, data processing, data mining, pattern recognition, automated reasoning or other problem-solving operations. With the increasing automation of services, more and more decisions are being made by algorithms. Some general examples are risk assessments, anticipatory policing, and pattern recognition technology. The following is a list of well-known algorithms. Automated planning Combinatorial algorithms General combinatorial algorithms * Brent's algorithm: finds a cycle in function value iterations using only two iterators * Floyd's cycle-finding algorithm: finds a cycle in function value iterations * Gale–Shapley algorithm: solves the stable matching problem * Pseudorandom number generators (uniformly dist ...

Primal Problem

In mathematical optimization theory, duality or the duality principle is the principle that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem. If the primal is a minimization problem then the dual is a maximization problem (and vice versa). The objective value of any feasible solution to the primal (minimization) problem is at least as large as the objective value of any feasible solution to the dual (maximization) problem. Therefore, the optimal value of the primal is an upper bound on the optimal value of the dual, and the optimal value of the dual is a lower bound on the optimal value of the primal. This fact is called weak duality. In general, the optimal values of the primal and dual problems need not be equal. Their difference is called the duality gap. For convex optimization problems, the duality gap is zero under a constraint qualification condition. This fact is called strong duality. Dual problem: usually the term "dual problem" refers to the Lagrangian dual problem but oth ...

Dual Linear Program

The dual of a given linear program (LP) is another LP that is derived from the original (the primal) LP in the following schematic way:

* Each variable in the primal LP becomes a constraint in the dual LP;
* Each constraint in the primal LP becomes a variable in the dual LP;
* The objective direction is reversed: maximum in the primal becomes minimum in the dual and vice versa.

The weak duality theorem states that the objective value of the dual LP at any feasible solution is always a bound on the objective of the primal LP at any feasible solution (upper or lower bound, depending on whether it is a maximization or minimization problem). In fact, this bounding property holds for the optimal values of the dual and primal LPs. The strong duality theorem states that, moreover, if the primal has an optimal solution then the dual has an optimal solution too, and the two optima are equal. These theorems belong to a larger class of duality theorems in optimizat ...
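The primal-to-dual recipe and the weak duality bound can be checked numerically on a small instance. The sketch below assumes the primal has the form "maximize c^T x subject to A x <= b, x >= 0", whose dual is "minimize b^T y subject to A^T y >= c, y >= 0". The helper names and the numbers are hypothetical, and the feasible points were picked by hand rather than computed by a solver.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(ui * vi for ui, vi in zip(u, v))

def primal_feasible(A, b, x):
    """Check A x <= b and x >= 0 (one row of A per primal constraint)."""
    return all(xi >= 0 for xi in x) and all(
        dot(row, x) <= bi for row, bi in zip(A, b)
    )

def dual_feasible(A, c, y):
    """Check A^T y >= c and y >= 0 (one column of A per dual constraint)."""
    columns = zip(*A)  # each primal variable becomes a dual constraint
    return all(yi >= 0 for yi in y) and all(
        dot(col, y) >= cj for col, cj in zip(columns, c)
    )

# Hypothetical primal: maximize 3*x1 + 2*x2
# subject to x1 + x2 <= 4, x1 + 3*x2 <= 6, x1 <= 3, x >= 0.
c = [3, 2]
A = [[1, 1], [1, 3], [1, 0]]
b = [4, 6, 3]

primal_points = [(1, 1), (3, 1)]        # hand-picked feasible x
dual_points = [(3, 0, 0), (2, 0, 1)]    # hand-picked feasible y

# Weak duality: every primal objective value is bounded above by every
# dual objective value.
weak_duality_holds = all(
    dot(c, x) <= dot(b, y) for x in primal_points for y in dual_points
)

# The pair x = (3, 1), y = (2, 0, 1) gives equal objective values (11 and 11),
# so both points are optimal, as the strong duality theorem describes.
gap = dot(b, (2, 0, 1)) - dot(c, (3, 1))
```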