
DVI Specials

The device independent file format (DVI) is the output file format of the TeX typesetting program, designed by David R. Fuchs and implemented by Donald E. Knuth in 1982. Unlike the TeX markup files used to generate them, DVI files are not intended to be human-readable; they consist of binary data describing the visual layout of a document in a way that does not depend on any specific image format, display hardware or printer. DVI files are typically used as input to a second program (called a DVI "driver") that translates DVI files to graphical data. For example, most TeX software packages include a program for previewing DVI files on a user's computer display; this program is a driver. Drivers are also used to convert DVI to popular page description languages (e.g. PostScript, PDF) and for printing. TeX markup may be at least partially reverse-engineered from DVI files, although this process is unlikely to produce high-level constructs identical to those present in the origi ...
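
Because DVI is a binary format rather than readable markup, a driver has to decode it byte by byte. The Python sketch below decodes only the preamble that opens every DVI file; a real driver would go on to interpret the page commands that follow. The opcode values (`pre` = 247, identification byte 2) and the field layout (`num`, `den` and `mag` as 4-byte big-endian integers, then a 1-byte comment length) follow Knuth's published DVI specification, while the names `read_preamble` and `DviPreamble` are hypothetical and chosen for illustration.

```python
import struct
from dataclasses import dataclass

PRE = 247     # opcode that begins the preamble
DVI_ID = 2    # identification byte TeX writes into the preamble


@dataclass
class DviPreamble:
    num: int      # numerator of the unit-conversion ratio
    den: int      # denominator of the unit-conversion ratio
    mag: int      # magnification times 1000
    comment: str  # free-form comment written by the producing program


def read_preamble(data: bytes) -> DviPreamble:
    """Decode the preamble at the start of a DVI byte string (hypothetical helper)."""
    if len(data) < 15 or data[0] != PRE or data[1] != DVI_ID:
        raise ValueError("not a DVI file: bad preamble")
    # num, den and mag are stored as 4-byte big-endian integers.
    num, den, mag = struct.unpack(">iii", data[2:14])
    k = data[14]  # length in bytes of the comment that follows
    if len(data) < 15 + k:
        raise ValueError("truncated preamble comment")
    comment = data[15:15 + k].decode("ascii", errors="replace")
    return DviPreamble(num, den, mag, comment)


# A synthetic preamble using the num/den/mag values TeX normally writes.
sample = (bytes([PRE, DVI_ID])
          + struct.pack(">iii", 25400000, 473628672, 1000)
          + bytes([5]) + b"hello")
print(read_preamble(sample))
```

With TeX's usual values, `num`/`den` = 25400000/473628672 converts DVI units to units of 10^-7 m, and `mag` = 1000 means the output is not magnified.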

Evince Previewing A DVI File

Evince, also known as GNOME Document Viewer, is a free and open-source document viewer supporting many document file formats, including PDF, PostScript, DjVu, TIFF, XPS and DVI. It is designed for the GNOME desktop environment. The developers of Evince intended to replace the multiple GNOME document viewers with a single, simple application, and the Evince motto sums up the project's aim: "Simply a Document Viewer". GNOME releases have included Evince since GNOME 2.12 (September 2005). Evince's code is written mainly in C, with a small part (specifically, the interface with Poppler) written in C++. Many Linux distributions (including Ubuntu, Fedora Linux and Linux Mint) ship Evince as the default document viewer. Evince is free and open-source software subject to the requirements of the GNU General Public License version 2 or later. The Evince FAQ explains the word "Evince" as meaning "to show or express something clearly". History: Evince began a ...

Reverse-engineer

Reverse engineering (also known as backwards engineering or back engineering) is a process or method through which one attempts to understand, through deductive reasoning, how a previously made device, process, system, or piece of software accomplishes a task, with very little (if any) insight into exactly how it does so. It is essentially the process of opening up or dissecting a system to see how it works, in order to duplicate or enhance it. Depending on the system under consideration and the technologies employed, the knowledge gained during reverse engineering can help with repurposing obsolete objects, doing security analysis, or learning how something works. Although the process is specific to the object on which it is being performed, all reverse engineering processes consist of three basic steps: information extraction, modeling, and review. Information extraction refers to the practice of gathering all relevant information for performing the operation. Modeling refers to t ...

Graphics

Graphics are visual images or designs on some surface, such as a wall, canvas, screen, paper, or stone, to inform, illustrate, or entertain. In contemporary usage, the term includes pictorial representations of data, as in design and manufacture, in typesetting and the graphic arts, and in educational and recreational software. Images that are generated by a computer are called computer graphics. Examples are photographs, drawings, line art, mathematical graphs, line graphs, charts, diagrams, typography, numbers, symbols, geometric designs, maps, engineering drawings, or other images. Graphics often combine text, illustration, and color. Graphic design may consist of the deliberate selection, creation, or arrangement of typography alone, as in a brochure, flyer, poster, web site, or book without any other element. The objective can be clarity or effective communication, association with other cultural elements, or merely the creation of a distinctive style. Graphics ca ...

ASCII

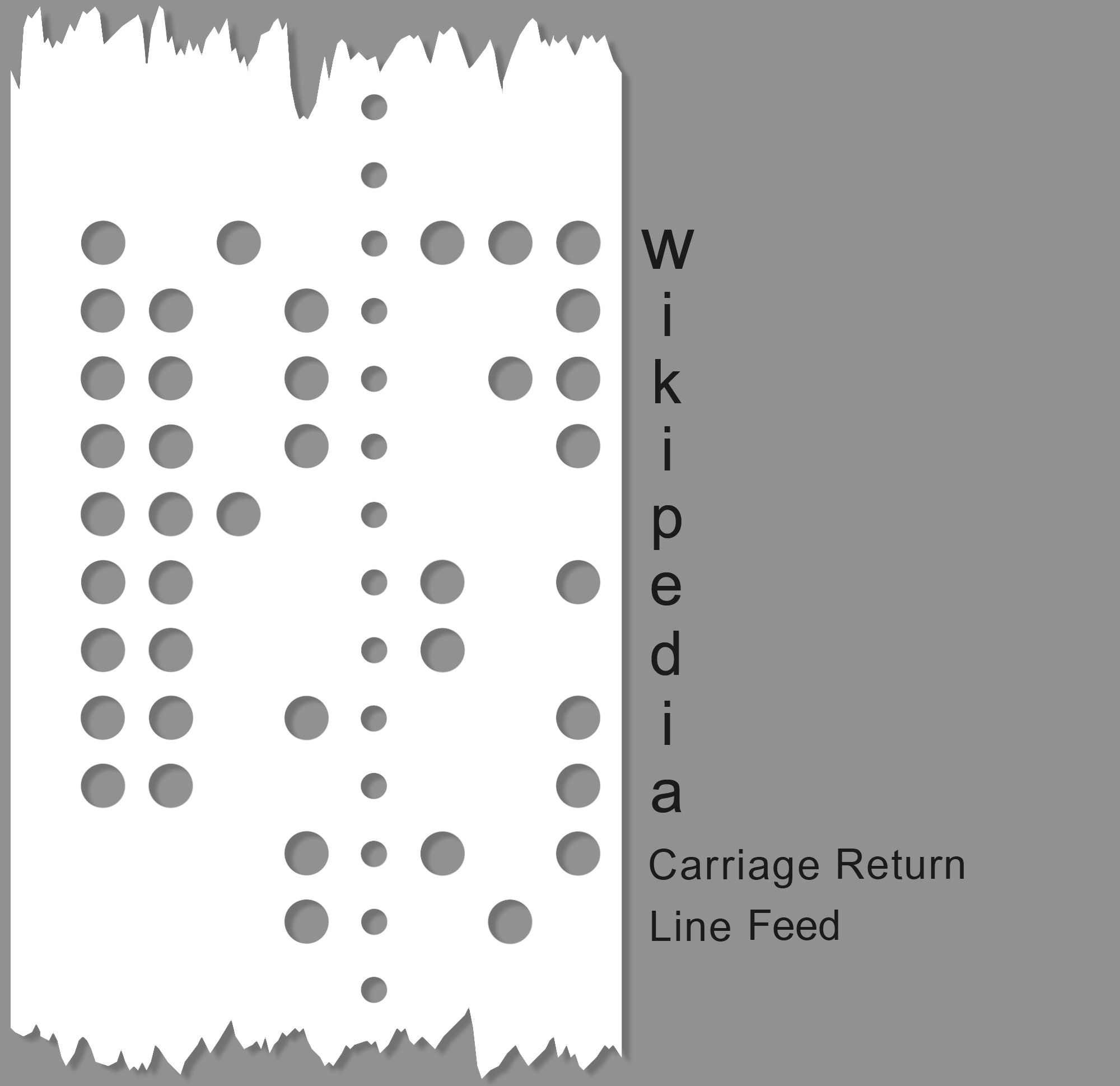

ASCII, abbreviated from American Standard Code for Information Interchange, is a character encoding standard for electronic communication. ASCII codes represent text in computers, telecommunications equipment, and other devices. Because of the technical limitations of computer systems at the time it was invented, ASCII has just 128 code points, only 95 of which are printable characters; this severely limited its scope. Modern computer systems generally use Unicode instead, which has over a million code points (1,114,112), but the first 128 of these are the same as the ASCII set. The Internet Assigned Numbers Authority (IANA) prefers the name US-ASCII for this character encoding. ASCII is one of the IEEE milestones. Overview: ASCII was developed from telegraph code. Its first commercial use was as a seven-bit teleprinter code promoted by Bell data services. Work on the ASCII standard began in May 1961, with the first meeting of the American Standards Association's (ASA) (now the American Nat ...
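
The counts above can be checked directly. The sketch below assumes the conventional printable range 0x20 (space) through 0x7E (`~`), which yields the 95 printable characters; the remaining 33 code points (0 to 31 and 127) are control codes. It also confirms that the first 128 Unicode code points coincide with ASCII, so UTF-8 stores them as the same single bytes. The helper name `is_printable_ascii` is hypothetical.

```python
# ASCII occupies code points 0-127.
ASCII_RANGE = range(128)


def is_printable_ascii(code: int) -> bool:
    """True for the printable range, space (0x20) through tilde (0x7E)."""
    return 0x20 <= code <= 0x7E


printable = [c for c in ASCII_RANGE if is_printable_ascii(c)]
control = [c for c in ASCII_RANGE if not is_printable_ascii(c)]

# The first 128 Unicode code points match ASCII, so the ASCII and UTF-8
# encodings of each of these characters are the identical single byte.
same_bytes = all(chr(c).encode("ascii") == chr(c).encode("utf-8") for c in ASCII_RANGE)

print(len(printable), len(control), same_bytes)  # 95 33 True
```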

EBCDIC

Extended Binary Coded Decimal Interchange Code (EBCDIC) is an eight-bit character encoding used mainly on IBM mainframe and IBM midrange computer operating systems. It descended from the code used with punched cards and the corresponding six-bit binary-coded decimal code used with most of IBM's computer peripherals of the late 1950s and early 1960s. It is supported by various non-IBM platforms, such as Fujitsu-Siemens' BS2000/OSD, OS-IV, MSP, and MSP-EX, the SDS Sigma series, Unisys VS/9, Unisys MCP and ICL VME. History: EBCDIC was devised in 1963 and 1964 by IBM and was announced with the release of the IBM System/360 line of mainframe computers. It is an eight-bit character encoding, developed separately from the seven-bit ASCII encoding scheme. It was created to extend the existing Binary-Coded Decimal (BCD) Interchange Code, or BCDIC, which itself was devised as an efficient means of encoding the two "zone" and "number" punches on punched cards into six bits. ...
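
Since EBCDIC was developed separately from ASCII, the same letters map to different byte values. The sketch below uses Python's built-in `cp037` codec (IBM code page 37, US/Canada), which is only one of several EBCDIC variants; that choice is an assumption made for illustration. The jumps after `I` and after `R` reflect the punched-card heritage described above: each run of letters shares one zone, and the low four bits follow the digit punch. The helper `byte_values` is hypothetical.

```python
# cp037 is one EBCDIC code page; other EBCDIC variants differ in places.
CODEC = "cp037"


def byte_values(text: str, codec: str) -> list:
    """Return the byte value each character of `text` encodes to under `codec`."""
    return list(text.encode(codec))


letters = "ABIJRSZ"
ebcdic = byte_values(letters, CODEC)
ascii_ = byte_values(letters, "ascii")

for ch, e, a in zip(letters, ebcdic, ascii_):
    print(f"{ch}: EBCDIC 0x{e:02X}  ASCII 0x{a:02X}")

# The alphabet is not contiguous in EBCDIC: I (0xC9) to J (0xD1) skips values.
print(byte_values("J", CODEC)[0] - byte_values("I", CODEC)[0])  # 8
```

In ASCII the same difference is 1, which is why code that assumes a contiguous alphabet (for example, testing `'A' <= c <= 'Z'` by byte value) can misbehave on EBCDIC data.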

Character Encoding

Character encoding is the process of assigning numbers to graphical characters, especially the written characters of human language, allowing them to be stored, transmitted, and transformed using digital computers. The numerical values that make up a character encoding are known as "code points" and collectively comprise a "code space", a "code page", or a "character map". Early character codes associated with the optical or electrical telegraph could only represent a subset of the characters used in written languages, sometimes restricted to upper case letters, numerals and some punctuation. The low cost of digital representation of data in modern computer systems allows more elaborate character codes (such as Unicode) which represent most of the characters used in many written languages. Character enc ...
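
The difference between the number assigned to a character and the bytes used to store it can be made concrete. In the sketch below, the character é has the Unicode code point U+00E9, but UTF-8, UTF-16 (big-endian) and Latin-1 store it with different bytes, and ASCII cannot represent it at all. The codec names are standard Python encodings; the helper `encodings_of` is hypothetical.

```python
def encodings_of(ch, codecs=("utf-8", "utf-16-be", "latin-1", "ascii")):
    """Map each codec name to the hex bytes it uses for `ch`, or None if unsupported."""
    result = {}
    for name in codecs:
        try:
            result[name] = ch.encode(name).hex()
        except UnicodeEncodeError:
            result[name] = None  # this character set has no code for `ch`
    return result


e_acute = "\u00e9"  # LATIN SMALL LETTER E WITH ACUTE
print(f"code point: U+{ord(e_acute):04X}")  # code point: U+00E9
print(encodings_of(e_acute))
```

A plain ASCII letter such as `A` encodes to the single byte `41` under UTF-8, Latin-1 and ASCII alike, which is the backward compatibility that made these supersets of ASCII practical.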

TeX Font Metric

TeX font metric (TFM) is a font file format used by the TeX typesetting system. It is a font metric format, not an outline font format like TrueType, because it provides only the information necessary to typeset the font, such as each character's width, height and depth; the actual glyphs are stored elsewhere. This is not unique to TeX: Adobe's AFM (Adobe Font Metrics) files and Windows' PFM (Printer Font Metrics) files (NTF on the modern Windows PostScript driver) use the same technique. TFM files contain all of the information TeX needs to produce its device-independent (DVI) output. The actual glyphs are then inserted by the eventual DVI output driver or previewer, using, for instance, TrueType fonts, or bitmap fonts in the PK format derived from a METAFONT source. The format is designed to be extremely compact: in the original Computer Modern distribution, every font's TFM file is smaller than 2 kB. Specification: The canonical specification of t ...
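
Width, height and depth are enough to lay out text without ever seeing a glyph: a box holding a word is as wide as the sum of its characters' widths, as tall as its tallest character and as deep as its deepest descender. The sketch below illustrates this with hypothetical metric values in points (not taken from any real TFM file). It also ignores the ligature and kerning data that real TFM files carry, as well as TFM's own fixed-point encoding of dimensions relative to the font's design size. `CharMetric`, `METRICS` and `box_dimensions` are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CharMetric:
    width: float   # advance width, in points
    height: float  # extent above the baseline
    depth: float   # extent below the baseline


# Hypothetical metrics for a few characters (not from a real TFM file).
METRICS = {
    "g": CharMetric(width=5.0, height=4.25, depth=2.0),
    "o": CharMetric(width=5.0, height=4.25, depth=0.0),
    "d": CharMetric(width=5.5, height=7.0, depth=0.0),
}


def box_dimensions(word, metrics):
    """Width, height and depth of a box holding `word` (no kerns or ligatures)."""
    width = sum(metrics[c].width for c in word)
    height = max((metrics[c].height for c in word), default=0.0)
    depth = max((metrics[c].depth for c in word), default=0.0)
    return width, height, depth


print(box_dimensions("good", METRICS))  # (20.5, 7.0, 2.0)
```

Here the height comes from `d` and the depth from the descender of `g`, which is the kind of calculation TeX can perform from metrics alone, leaving glyph rendering to the DVI driver.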