
Duncan's Taxonomy

Duncan's taxonomy is a classification of computer architectures, proposed by Ralph Duncan in 1990 (Duncan, Ralph, "A Survey of Parallel Computer Architectures", IEEE Computer, February 1990, pp. 5-16). Duncan suggested modifications to Flynn's taxonomy (Flynn, M.J., "Very High Speed Computing Systems", Proc. IEEE, Vol. 54, 1966, pp. 1901-1909) to include pipelined vector processors.

Taxonomy

The taxonomy was developed during 1988-1990 and was first published in 1990. Its original categories are indicated below.

Synchronous architectures

This category includes all the parallel architectures that coordinate concurrent execution in lockstep fashion and do so via mechanisms such as global clocks, central control units or vector unit controllers. Further subdivision of this category is made primarily on the basis of the synchronization mechanism.

Pipelined vector processors

Pipelined vector processors are characterized by pipelined functional units that accept a sequential stream of arr ...

Computer Architecture

In computer science and computer engineering, computer architecture is a description of the structure of a computer system made from component parts. It can sometimes be a high-level description that ignores details of the implementation. At a more detailed level, the description may include the instruction set architecture design, microarchitecture design, logic design, and implementation.

History

The first documented computer architecture was in the correspondence between Charles Babbage and Ada Lovelace, describing the analytical engine. While building the computer Z1 in 1936, Konrad Zuse described in two patent applications for his future projects that machine instructions could be stored in the same storage used for data, i.e., the stored-program concept. Two other early and important examples are:

* John von Neumann's 1945 paper, First Draft of a Report on the EDVAC, which described an organization of logical elements; and
* Alan Turing's more detailed Proposed Electron ...

Flynn's Taxonomy

Flynn's taxonomy is a classification of computer architectures, proposed by Michael J. Flynn in 1966 and extended in 1972. The classification system has stuck, and it has been used as a tool in the design of modern processors and their functionalities. Since the rise of multiprocessing central processing units (CPUs), a multiprogramming context has evolved as an extension of the classification system. Vector processing, covered by Duncan's taxonomy, is missing from Flynn's work because the Cray-1 was released in 1977, after Flynn's second paper was published in 1972.

Classifications

The four initial classifications defined by Flynn are based upon the number of concurrent instruction (or control) streams and data streams available in the architecture. Flynn defined three additional sub-categories of SIMD in 1972.

Single instruction stream, single data stream (SISD)

A sequential computer which exploits no parallelism in either the instruction or data streams. Single control unit (CU) fet ...
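The two-axis idea behind the four initial classes (how many instruction streams, how many data streams) can be illustrated with a small Python sketch. The names `FlynnClass` and `classify` are hypothetical and exist only for this illustration; the 1972 SIMD sub-categories are not modelled.

```python
from enum import Enum


class FlynnClass(Enum):
    """The four initial classes of Flynn's taxonomy."""
    SISD = "single instruction stream, single data stream"
    SIMD = "single instruction stream, multiple data streams"
    MISD = "multiple instruction streams, single data stream"
    MIMD = "multiple instruction streams, multiple data streams"


def classify(instruction_streams: int, data_streams: int) -> FlynnClass:
    """Map stream counts to a class (hypothetical helper for illustration)."""
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("an architecture needs at least one stream of each kind")
    multiple_instructions = instruction_streams > 1
    multiple_data = data_streams > 1
    if multiple_instructions:
        return FlynnClass.MIMD if multiple_data else FlynnClass.MISD
    return FlynnClass.SIMD if multiple_data else FlynnClass.SISD
```

For example, `classify(1, 1)` yields `FlynnClass.SISD`, the sequential computer described above, while `classify(1, 8)` yields `FlynnClass.SIMD`.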

Vector Processor

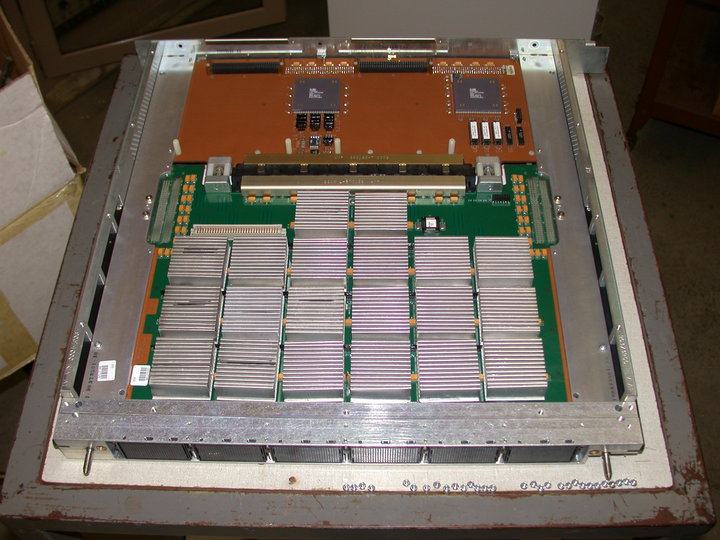

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set whose instructions are designed to operate efficiently and effectively on large one-dimensional arrays of data called vectors. This is in contrast to scalar processors, whose instructions operate on single data items only, and in contrast to some of those same scalar processors having additional single instruction, multiple data (SIMD) or SIMD within a register (SWAR) arithmetic units. Vector processors can greatly improve performance on certain workloads, notably numerical simulation, compression and similar tasks. Vector processing techniques also operate in video-game console hardware and in graphics accelerators. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor de ...
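The contrast between scalar and vector instructions can be sketched by counting how many add "instructions" a toy model issues for the same work. This is an illustrative model only, not a description of any real machine: `scalar_add`, `vector_add`, and the `vector_length` of 64 elements are assumptions chosen for the example.

```python
def scalar_add(a, b):
    """Scalar model: one add 'instruction' is issued per element pair."""
    result, issued = [], 0
    for x, y in zip(a, b):
        result.append(x + y)
        issued += 1
    return result, issued


def vector_add(a, b, vector_length=64):
    """Vector model: one add 'instruction' covers up to vector_length elements.

    vector_length is a hypothetical hardware limit chosen for illustration.
    """
    result, issued = [], 0
    for start in range(0, len(a), vector_length):
        chunk_a = a[start:start + vector_length]
        chunk_b = b[start:start + vector_length]
        result.extend(x + y for x, y in zip(chunk_a, chunk_b))
        issued += 1
    return result, issued
```

With two 100-element lists, the scalar model issues 100 adds and the vector model issues 2, while both produce the same sums.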

Pipeline (computing)

In computing, a pipeline, also known as a data pipeline, is a set of data processing elements connected in series, where the output of one element is the input of the next one. The elements of a pipeline are often executed in parallel or in time-sliced fashion. Some amount of buffer storage is often inserted between elements.

Concept and motivation

Pipelining is a commonly used concept in everyday life. For example, in the assembly line of a car factory, each specific task (such as installing the engine, installing the hood, and installing the wheels) is often done by a separate work station. The stations carry out their tasks in parallel, each on a different car. Once a car has had one task performed, it moves to the next station. Variations in the time needed to complete the tasks can be accommodated by "buffering" (holding one or more cars in a space between the stations) and/or by "stalling" (temporarily halting the upstream stations), until the next station becomes avai ...
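A minimal sketch of elements connected in series, where each element consumes the output of the previous one, can be written with Python generators. The stage names `source`, `double`, and `add_one` are hypothetical. Generators interleave the stages item by item, which resembles the time-sliced style of execution rather than true parallelism, and this sketch has no buffering between stages.

```python
def source(items):
    """First element: emits the raw items one at a time."""
    for item in items:
        yield item


def double(stream):
    """Second element: consumes the previous element's output."""
    for value in stream:
        yield value * 2


def add_one(stream):
    """Third element: consumes the doubled values."""
    for value in stream:
        yield value + 1


def run_pipeline(items):
    """Connect the elements in series and collect the final output."""
    return list(add_one(double(source(items))))
```

Calling `run_pipeline([1, 2, 3])` returns `[3, 5, 7]`: each item passes through every stage before the next item enters the first one.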

Parallelism (computing)

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.

S.V. Adve et al. (November 2008), "Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather than mak ...

Functional Unit

In computer engineering, an execution unit (E-unit or EU) is a part of a processing unit that performs the operations and calculations forwarded from the instruction unit. It may have its own internal control sequence unit (not to be confused with a CPU's main control unit), some registers, and other internal units such as an arithmetic logic unit, address generation unit, floating-point unit, load–store unit, branch execution unit or other smaller and more specific components, and can be tailored to support a certain datatype, such as integers or floating-point numbers. It is common for modern processing units to have multiple parallel functional units within their execution units, which is referred to as superscalar design. The si ...

Vector Registers

A processor register is a quickly accessible location available to a computer's processor. Registers usually consist of a small amount of fast storage, although some registers have specific hardware functions, and may be read-only or write-only. In computer architecture, registers are typically addressed by mechanisms other than main memory, but may in some cases be assigned a memory address, e.g. on the DEC PDP-10 and ICT 1900. Almost all computers, whether load/store architecture or not, load items of data from a larger memory into registers where they are used for arithmetic operations, bitwise operations, and other operations, and are manipulated or tested by machine instructions. Manipulated items are then often stored back to main memory, either by the same instruction or by a subsequent one. Modern processors use either static or dynamic random-access memory (RAM) as main memory, with the latter usually accessed via one or more cache levels. Processor registers are normally ...

Cray-1

The Cray-1 was a supercomputer designed, manufactured and marketed by Cray Research. Announced in 1975, the first Cray-1 system was installed at Los Alamos National Laboratory in 1976. Eventually, eighty Cray-1s were sold, making it one of the most successful supercomputers in history. It is perhaps best known for its unique shape, a relatively small C-shaped cabinet with a ring of benches around the outside covering the power supplies and the cooling system. The Cray-1 was the first supercomputer to successfully implement the vector processor design. These systems improve the performance of math operations by arranging memory and registers to quickly perform a single operation on a large set of data. Previous systems like the CDC STAR-100 and ASC had implemented these concepts but did so in a way that seriously limited their performance. The Cray-1 addressed these problems and produced a machine that ran several times faster than any similar design. The Cray-1's architect ...

Control Data Corporation

Control Data Corporation (CDC) was a mainframe and supercomputer company that in the 1960s was one of the nine major U.S. computer companies, a group that included IBM, the Burroughs Corporation, the Digital Equipment Corporation (DEC), the NCR Corporation (NCR), General Electric, Honeywell, RCA, and UNIVAC. For most of the 1960s, the strength of CDC was the work of the electrical engineer Seymour Cray, who developed a series of fast computers, then considered the fastest computing machines in the world; in the 1970s, Cray left the Control Data Corporation and founded Cray Research (CRI) to design and make supercomputers. In 1988, after much financial loss, the Control Data Corporation began withdrawing from making computers and sold the affiliated companies of CDC; in 1992, CDC established Control Data Systems, Inc. The remaining affiliate companies of CDC currently do business as the software company Dayforce.

Background: World War II – 1957

During World War II the Un ...

CDC STAR-100

The CDC STAR-100 is a vector supercomputer that was designed, manufactured, and marketed by Control Data Corporation (CDC). It was one of the first machines to use a vector processor to improve performance on appropriate scientific applications. It was also the first supercomputer to use integrated circuits and the first to be equipped with one million words of computer memory. STAR is a blend of STrings (of binary digits) and ARrays. The 100 alludes to the nominal peak processing speed of 100 million floating point operations per second (MFLOPS); the earlier CDC 7600 provided peak performance of 36 MFLOPS but more typically ran at around 10 MFLOPS. The design was part of a bid made to Lawrence Livermore National Laboratory (LLNL) in the mid-1960s. Livermore was looking for a partner who would build a much faster machine on their own budget and then lease the resulting design to the lab. It was announced publicly in the early 1970s, and on 17 August 1971, CDC ...

Texas Instruments

Texas Instruments Incorporated (TI) is an American multinational semiconductor company headquartered in Dallas, Texas. It is one of the top 10 semiconductor companies worldwide based on sales volume. The company's focus is on developing analog chips and embedded processors, which account for more than 80% of its revenue. TI also produces digital light processing (DLP) technology and education technology products including calculators, microcontrollers, and multi-core processors. Texas Instruments emerged in 1951 after a reorganization of Geophysical Service Incorporated, a company founded in 1930 that manufactured equipment for use in the seismic industry, as well as defense electronics. TI produced the world's first commercial silicon transistor in 1954, and the same year designed and manufactured the first transistor radio. Jack Kilby invented the integrated circuit in 1958 while working at TI's Central Research Labs. TI also invented the hand-held calculator in 1967, and intr ...

Advanced Scientific Computer

The Advanced Scientific Computer (ASC) is a supercomputer designed and manufactured by Texas Instruments (TI) between 1966 and 1973. The ASC's central processing unit (CPU) supported vector processing, a performance-enhancing technique which was key to its high performance. The ASC and the Control Data Corporation STAR-100 supercomputer (which was introduced in the same year) were the first computers to feature vector processing. However, this technique's potential was not fully realized by either the ASC or STAR-100 due to an insufficient understanding of the technique; it was the Cray Research Cray-1 supercomputer, announced in 1975, that would fully realize and popularize vector processing. The more successful implementation of vector processing in the Cray-1 would demarcate the ASC (and STAR-100) as first-generation vector processors, with the Cray-1 belonging in the second.

History

TI began as a division of Geophysical Service Incorporated (GSI), a company that perfor ...