
Duncan's New Multiple Range Test

In statistics, Duncan's new multiple range test (MRT) is a multiple comparison procedure developed by David B. Duncan in 1955. Duncan's MRT belongs to the general class of multiple comparison procedures that use the studentized range statistic $q_r$ to compare sets of means. Duncan developed this test as a modification of the Student–Newman–Keuls method that would have greater power. Duncan's MRT is especially protective against false negative (Type II) errors at the expense of a greater risk of making false positive (Type I) errors. Duncan's test is commonly used in agronomy and other agricultural research. The result of the test is a set of subsets of means, where the means in each subset have been found not to be significantly different from one another. The test is often followed by the Compact Letter Display (CLD) methodology, which makes its output much more accessible to non-statistician audiences.

Definition. Assumptions: 1. A sampl ...
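Duncan's extra power comes from the significance level it applies when testing a range that spans p ordered means. The Python sketch below assumes the usual statement of Duncan's "protection levels", α_p = 1 − (1 − α)^(p − 1); the function name `duncan_protection_level` is hypothetical, not part of any library. It does not compute the critical ranges themselves, which would also require studentized range quantiles.

```python
def duncan_protection_level(alpha: float, p: int) -> float:
    """Significance level applied to a range spanning p ordered means.

    Assumes Duncan's protection-level form alpha_p = 1 - (1 - alpha) ** (p - 1).
    The level grows with p, whereas the Student-Newman-Keuls method uses
    alpha at every p; this is the source of Duncan's extra power and extra
    Type I risk.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie strictly between 0 and 1")
    if p < 2:
        raise ValueError("a range must span at least two means")
    return 1.0 - (1.0 - alpha) ** (p - 1)


if __name__ == "__main__":
    for p in range(2, 7):
        print(p, round(duncan_protection_level(0.05, p), 4))
```

At α = 0.05 this gives 0.05 for adjacent means (p = 2), 0.0975 for p = 3 and about 0.226 for p = 6.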

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An ex ...

Loss Functions

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economics, for example, ...
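As an illustration of a loss function used for parameter estimation, the sketch below scores a single constant estimate of a data set under squared and absolute loss. It assumes each observation plays the role of the "true value", and the helper names are hypothetical. Averaged over the data, squared loss is minimized by the sample mean and absolute loss by the sample median.

```python
from statistics import mean, median
from typing import Callable, Sequence


def squared_loss(estimate: float, true_value: float) -> float:
    """Quadratic loss: penalizes an estimation error by its square."""
    return (estimate - true_value) ** 2


def absolute_loss(estimate: float, true_value: float) -> float:
    """Absolute loss: penalizes an estimation error by its magnitude."""
    return abs(estimate - true_value)


def empirical_risk(loss: Callable[[float, float], float],
                   estimate: float, data: Sequence[float]) -> float:
    """Average loss of one constant estimate over the observed data."""
    return sum(loss(estimate, x) for x in data) / len(data)


if __name__ == "__main__":
    data = [1.0, 2.0, 2.0, 3.0, 10.0]
    print(mean(data), empirical_risk(squared_loss, mean(data), data))
    print(median(data), empirical_risk(absolute_loss, median(data), data))
```

The outlier 10.0 pulls the squared-loss optimum (the mean, 3.6) away from the bulk of the data, while the absolute-loss optimum (the median, 2.0) is unaffected, which is one practical reason the choice of loss matters.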

Student's T-test

A t-test is any statistical hypothesis test in which the test statistic follows a Student's t-distribution under the null hypothesis. It is most commonly applied when the test statistic would follow a normal distribution if the value of a scaling term in the test statistic were known (typically, the scaling term is unknown and therefore a nuisance parameter). When the scaling term is estimated from the data, the test statistic, under certain conditions, follows a Student's t-distribution. The t-test's most common application is to test whether the means of two populations are different.

History. The term "t-statistic" is abbreviated from "hypothesis test statistic". In statistics, the t-distribution was first derived as a posterior distribution in 1876 by Helmert and Lüroth. The t-distribution also appeared in a more general form as the Pearson Type IV distribution in Karl Pearson's 1895 paper. However, the t-distribution, also known as Student's ...
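For the most common application, comparing the means of two populations, the pooled-variance (Student) two-sample statistic replaces the unknown scaling term with an estimate computed from the data. This is a minimal standard-library sketch that assumes equal population variances; the function name is hypothetical. Turning t into a p-value needs the t-distribution's CDF, which the Python standard library does not provide.

```python
import math
from statistics import mean, variance


def pooled_t_statistic(a, b):
    """Student's two-sample t statistic assuming equal population variances.

    Returns (t, degrees_of_freedom). The unknown common standard deviation
    (the nuisance scaling term) is replaced by the pooled sample estimate.
    """
    n1, n2 = len(a), len(b)
    if n1 < 2 or n2 < 2:
        raise ValueError("each sample needs at least two observations")
    df = n1 + n2 - 2
    # Pooled variance: df-weighted average of the two unbiased sample variances.
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    # Standard error of the difference in sample means.
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / se, df
```

For a = [1, 2, 3] and b = [4, 5, 6] this gives t ≈ -3.674 with 4 degrees of freedom. If SciPy is available, `scipy.stats.ttest_ind(a, b)`, whose default `equal_var=True` uses the same pooled statistic, can serve as a cross-check that also reports a p-value.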

Tukey's Range Test

Tukey's range test, also known as Tukey's test, Tukey method, Tukey's honest significance test (also occasionally written "honestly"), or Tukey's HSD (honestly significant difference) test, is a single-step multiple comparison procedure and statistical test. It can be used to find means that are significantly different from each other. Named after John Tukey, it compares all possible pairs of means and is based on the studentized range distribution (q), which is similar to the distribution of t from the t-test (Linton, L.R., Harder, L.D. (2007) Biology 315 – Quantitative Biology Lecture Notes. University of Calgary, Calgary, AB). Tukey's test compares the mean of every treatment to the mean of every other treatment; that is, it applies simultaneously to the set of all pairwise comparisons $\mu_i - \mu_j$ and identifies any difference between two means that exceeds the studentized range critical value multiplied by the standard error of the means. The confidence coefficient for ...
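The all-pairs comparison can be sketched for a balanced one-way layout. This is a minimal illustration rather than a full implementation: it assumes equal group sizes, estimates the error variance with the one-way ANOVA mean squared error (MSE), and takes the studentized range critical value `q_crit` as an input instead of computing it. A pair is flagged when its absolute mean difference exceeds q_crit · sqrt(MSE / n). The function name is hypothetical.

```python
from __future__ import annotations

import math
from itertools import combinations
from statistics import mean


def tukey_pairwise(groups: dict[str, list[float]], q_crit: float):
    """Compare every pair of group means against Tukey's HSD (balanced design).

    q_crit is the upper-alpha studentized range quantile for k groups and
    N - k error degrees of freedom, supplied by the caller.
    """
    k = len(groups)
    sizes = {len(v) for v in groups.values()}
    if len(sizes) != 1:
        raise ValueError("this sketch assumes equal group sizes")
    n = sizes.pop()
    if n < 2:
        raise ValueError("each group needs at least two observations")
    means = {name: mean(vals) for name, vals in groups.items()}
    # One-way ANOVA mean squared error: pooled within-group variance.
    sse = sum((x - means[name]) ** 2 for name, vals in groups.items() for x in vals)
    mse = sse / (k * n - k)
    hsd = q_crit * math.sqrt(mse / n)  # honestly significant difference
    results = []
    for g1, g2 in combinations(groups, 2):
        diff = means[g1] - means[g2]
        results.append((g1, g2, diff, abs(diff) > hsd))
    return hsd, results
```

With groups A = [1, 2, 3], B = [2, 3, 4] and C = [7, 8, 9] (MSE = 1, n = 3) and q_crit = 4.34, roughly the tabulated 5% value for three groups and 6 error degrees of freedom, the HSD is about 2.51: A and B are not separated, while both differ from C. Recent SciPy releases provide `scipy.stats.studentized_range` for obtaining `q_crit`.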

Null Hypothesis

In scientific research, the null hypothesis (often denoted $H_0$) is the claim that no difference or relationship exists between two sets of data or variables being analyzed. The null hypothesis is that any experimentally observed difference is due to chance alone, and that an underlying causative relationship does not exist, hence the term "null". In addition to the null hypothesis, an alternative hypothesis is also developed, which claims that a relationship does exist between two variables.

Basic definitions. The null hypothesis and the alternative hypothesis are types of conjectures used in statistical tests, which are formal methods of reaching conclusions or making decisions on the basis of data. The hypotheses are conjectures about a statistical model of the population, which are based on a sample of the population. The tests are core elements of statistical inference, heavily used in the interpretation of scientific experimental data, to separate scientific claims ...
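One way to make "the observed difference is due to chance alone" concrete is a permutation test: if the null hypothesis holds, the group labels carry no information, so relabelling the pooled data should often produce differences as large as the observed one. This is a minimal exact sketch for small samples with a hypothetical function name; it enumerates every relabelling, so it is only practical for a handful of observations.

```python
from itertools import combinations
from statistics import mean


def exact_permutation_p_value(a, b):
    """Two-sided exact permutation test for a difference in means.

    Under H0 the labels are exchangeable, so every split of the pooled data
    into groups of sizes len(a) and len(b) is equally likely. The p-value is
    the fraction of splits whose absolute mean difference is at least as
    large as the observed one.
    """
    pooled = list(a) + list(b)
    n_a = len(a)
    observed = abs(mean(a) - mean(b))
    total = extreme = 0
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        g1 = [pooled[i] for i in idx]
        g2 = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        # Small tolerance so ties with the observed split count as extreme.
        if abs(mean(g1) - mean(g2)) >= observed - 1e-12:
            extreme += 1
    return extreme / total
```

For a = [1, 2, 3] and b = [4, 5, 6], only 2 of the 20 possible splits are as extreme as the observed one, giving p = 0.1.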

Type I Error Rate

In statistical hypothesis testing, a type I error is the mistaken rejection of a null hypothesis that is actually true (also known as a "false positive" finding or conclusion; example: "an innocent person is convicted"), while a type II error is the failure to reject a null hypothesis that is actually false (also known as a "false negative" finding or conclusion; example: "a guilty person is not convicted"). Much of statistical theory revolves around the minimization of one or both of these errors, though the complete elimination of either is a statistical impossibility if the outcome is not determined by a known, observable causal process. Selecting a low threshold (cut-off) value by lowering the significance level α reduces the rate of type I errors, which can improve the quality of the hypothesis test. Knowledge of type I and type II errors is widely used in medical science, biometrics and computer science. Intuitively, type I errors can be thought of as errors of commission, i.e. the researcher unluck ...
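The significance level α is the long-run rate of type I errors a test accepts. A small simulation makes this concrete: draw many samples for which the null hypothesis (mean 0) is true, apply a two-sided z-test with known σ, and count rejections. This sketch assumes normal data with known σ = 1; the function names and default parameters are illustrative only.

```python
import math
import random
from statistics import NormalDist, fmean


def z_test_rejects(sample, mu0, sigma, alpha):
    """Two-sided one-sample z-test with known sigma; True means H0 is rejected."""
    z = (fmean(sample) - mu0) / (sigma / math.sqrt(len(sample)))
    critical = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    return abs(z) > critical


def simulated_type_i_rate(alpha=0.05, n=20, trials=20_000, seed=1):
    """Fraction of tests that reject H0 (mean = 0) when H0 is in fact true."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    rejections = 0
    for _ in range(trials):
        # Data generated under the null: normal with mean 0 and sigma 1.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if z_test_rejects(sample, mu0=0.0, sigma=1.0, alpha=alpha):
            rejections += 1  # every rejection here is a type I error
    return rejections / trials
```

With α = 0.05 the rejection fraction lands close to 0.05; lowering α to 0.01 lowers it accordingly, at the cost of more type II errors when the null hypothesis is false.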

Henry Scheffé

Henry Scheffé (April 11, 1907 – July 5, 1977) was an American statistician. He is known for the Lehmann–Scheffé theorem and Scheffé's method.

Education and career. Scheffé was born in New York City on April 11, 1907, the child of German immigrants. The family moved to Islip, New York, where Scheffé went to high school. He graduated in 1924, took night classes at Cooper Union, and a year later entered the Polytechnic Institute of Brooklyn. He transferred to the University of Wisconsin in 1928 and earned a bachelor's degree in mathematics there in 1931. Staying at Wisconsin, he married his wife Miriam in 1934 and finished his PhD in 1935, on the subject of differential equations, under the supervision of Rudolf Ernest Langer. After teaching mathematics at Wisconsin, Oregon State University, and Reed College, Scheffé moved to Princeton University in 1941. At Princeton, he began working in statistics instead of in pure mathematics, and assisted the U.S. war effort as a c ...

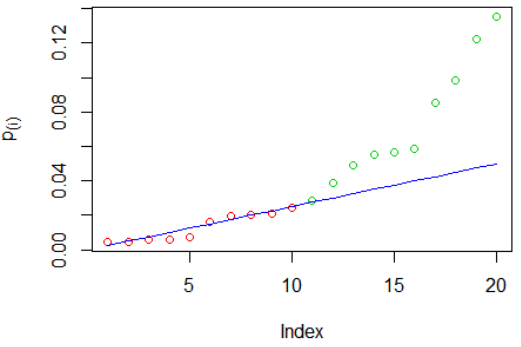

False Discovery Rate

In statistics, the false discovery rate (FDR) is a method of conceptualizing the rate of type I errors in null hypothesis testing when conducting multiple comparisons. FDR-controlling procedures are designed to control the FDR, which is the expected proportion of "discoveries" (rejected null hypotheses) that are false (incorrect rejections of the null). Equivalently, the FDR is the expected ratio of the number of false positive classifications (false discoveries) to the total number of positive classifications (rejections of the null). The total number of rejections of the null includes both the number of false positives (FP) and true positives (TP). Simply put, $\mathrm{FDR} = \mathbb{E}\left[\frac{FP}{FP + TP}\right]$, where the ratio is taken to be 0 when no null hypothesis is rejected. FDR-controlling procedures provide less stringent control of Type I errors compared to family-wise error rate (FWER) controlling procedures (such as the Bonferroni correction), which control the probability of at least one Type I error. Thus, FDR-controlling procedures have greater power, at th ...
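The quantities above can be sketched in a few lines. The Benjamini–Hochberg step-up procedure is used here as one well-known FDR-controlling method (valid under independence of the tests; the passage above does not name a specific procedure), alongside the Bonferroni correction as the FWER-controlling comparison. It sorts the m p-values, finds the largest rank k with p_(k) ≤ k·q/m, and rejects the k hypotheses with the smallest p-values. Function names are hypothetical.

```python
def false_discovery_proportion(false_positives: int, true_positives: int) -> float:
    """FP / (FP + TP) for one experiment, defined as 0 when nothing is rejected.

    The FDR is the expected value of this proportion over repeated experiments.
    """
    rejections = false_positives + true_positives
    return false_positives / rejections if rejections else 0.0


def benjamini_hochberg(p_values, q=0.05):
    """Indices of hypotheses rejected by the Benjamini-Hochberg step-up rule."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k_max = 0
    for rank, i in enumerate(order, start=1):
        # Step-up: keep the largest rank that meets its threshold, even if
        # some smaller ranks did not.
        if p_values[i] <= rank * q / m:
            k_max = rank
    return sorted(order[:k_max])


def bonferroni(p_values, alpha=0.05):
    """FWER control for comparison: reject when p <= alpha / m."""
    m = len(p_values)
    return [i for i, p in enumerate(p_values) if p <= alpha / m]
```

With p-values 0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205, 0.212, 0.216 and q = α = 0.05, Bonferroni (threshold 0.005) rejects only the first hypothesis, while Benjamini–Hochberg rejects the first two, illustrating the extra power of FDR control.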

Yoav Benjamini

Yoav Benjamini (Hebrew: יואב בנימיני; born January 5, 1949) is an Israeli statistician best known for the development (with Yosef Hochberg) of the “false discovery rate” criterion. He is currently the Nathan and Lily Silver Professor of Applied Statistics at Tel Aviv University.

Early life. Yoav graduated from high school at the Hebrew Reali School in Haifa in 1966 and later studied mathematics and physics at the Hebrew University of Jerusalem, Israel, graduating in 1973. He received his master's degree in mathematics from the same university in 1976. In 1981, he received his Ph.D. in Statistics from Princeton University, USA.

Scientific fields of interest. Benjamini's scientific work combines theoretical research in statistical methodology with applied research that involves complex problems with massive data. The methodological work is on selective and simultaneous inference (multiple comparisons), as well as on general methods for data analysis, data mining, and data visualization. ...