|

Distributed Cache

In computing, a distributed cache is an extension of the traditional concept of cache used in a single locale. A distributed cache may span multiple servers so that it can grow in size and in transactional capacity. It is mainly used to store application data residing in database and web session data. The idea of distributed caching has become feasible now because main memory has become very cheap and network cards have become very fast, with 1 Gbit now standard everywhere and 10 Gbit gaining traction. Also, a distributed cache works well on lower cost machines usually employed for web servers as opposed to database servers which require expensive hardware. An emerging internet architecture known as Information-centric networking (ICN) is one of the best examples of a distributed cache network. The ICN is a network level solution hence the existing distributed network cache management schemes are not well suited for ICN. In the supercomputer environment, distributed c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Computing

Computing is any goal-oriented activity requiring, benefiting from, or creating computer, computing machinery. It includes the study and experimentation of algorithmic processes, and the development of both computer hardware, hardware and software. Computing has scientific, engineering, mathematical, technological, and social aspects. Major computing disciplines include computer engineering, computer science, cybersecurity, data science, information systems, information technology, and software engineering. The term ''computing'' is also synonymous with counting and calculation, calculating. In earlier times, it was used in reference to the action performed by Mechanical computer, mechanical computing machines, and before that, to Computer (occupation), human computers. History The history of computing is longer than the history of computing hardware and includes the history of methods intended for pen and paper (or for chalk and slate) with or without the aid of tables. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Ehcache

Ehcache ( ) is an open source Java distributed cache for general-purpose caching, Java EE and . Ehcache is available under an Apache open source license. Ehcache was developed by Greg Luck starting in 2003. In 2009, the project was purchased by Terracotta, which provides paid support. The software is still open-source, but some new major functionalities (Fast Restartability Consistency) are available only in commercial products, like Enterprise Ehcache and BigMemory, which are not open source. In March 2011, the Wikimedia Foundation announced it would use Ehcache to improve the performance of its wiki projects. However this was quickly abandoned after testing revealed problems with the approach. The name Ehcache is a palindrome. See also * Terracotta, Inc. * Hazelcast * Memcached Memcached (pronounced variously /mɛmkæʃˈdiː/ ''mem-cash-dee'' or /ˈmɛmkæʃt/ ''mem-cashed'') is a general-purpose distributed memory-caching system. It is often used to speed up dynamic da ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cache Stampede

A cache stampede is a type of cascading failure that can occur when massively parallel computing systems with caching mechanisms come under a very high load. This behaviour is sometimes also called dog-piling. To understand how cache stampedes occur, consider a web server A web server is computer software and underlying Computer hardware, hardware that accepts requests via Hypertext Transfer Protocol, HTTP (the network protocol created to distribute web content) or its secure variant HTTPS. A user agent, co ... that uses memcached to cache rendered pages for some period of time, to ease system load. Under particularly high load to a single URL, the system remains responsive as long as the resource remains cached, with requests being handled by accessing the cached copy. This minimizes the expensive rendering operation. Under low load, cache misses result in a single recalculation of the rendering operation. The system will continue as before, with the average load bein ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cache-oblivious Algorithm

In computing, a cache-oblivious algorithm (or cache-transcendent algorithm) is an algorithm designed to take advantage of a processor cache without having the size of the cache (or the length of the cache lines, etc.) as an explicit parameter. An optimal cache-oblivious algorithm is a cache-oblivious algorithm that uses the cache optimally (in an asymptotic sense, ignoring constant factors). Thus, a cache-oblivious algorithm is designed to perform well, without modification, on multiple machines with different cache sizes, or for a memory hierarchy with different levels of cache having different sizes. Cache-oblivious algorithms are contrasted with explicit '' loop tiling'', which explicitly breaks a problem into blocks that are optimally sized for a given cache. Optimal cache-oblivious algorithms are known for matrix multiplication, matrix transposition, sorting, and several other problems. Some more general algorithms, such as Cooley–Tukey FFT, are optimally cache-oblivio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cache Coherence

In computer architecture, cache coherence is the uniformity of shared resource data that is stored in multiple local caches. In a cache coherent system, if multiple clients have a cached copy of the same region of a shared memory resource, all copies are the same. Without cache coherence, a change made to the region by one client may not be seen by others, and errors can result when the data used by different clients is mismatched. A cache coherence protocol is used to maintain cache coherency. The two main types are snooping and directory-based protocols. Cache coherence is of particular relevance in multiprocessing systems, where each CPU may have its own local cache of a shared memory resource. Overview In a shared memory multiprocessor system with a separate cache memory for each processor, it is possible to have many copies of shared data: one copy in the main memory and one in the local cache of each processor that requested it. When one of the copies of data is c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

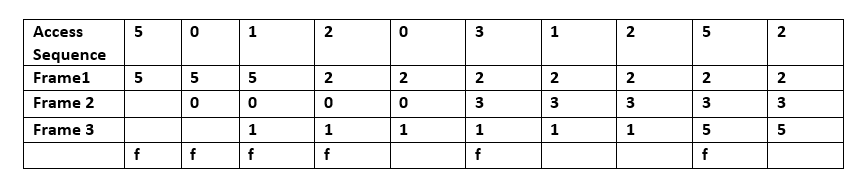

Cache Algorithms

In computing, cache replacement policies (also known as cache replacement algorithms or cache algorithms) are optimizing instructions or algorithms which a computer program or hardware-maintained structure can utilize to manage a cache of information. Caching improves performance by keeping recent or often-used data items in memory locations which are faster, or computationally cheaper to access, than normal memory stores. When the cache is full, the algorithm must choose which items to discard to make room for new data. __TOC__ Overview The average memory reference time is : T = m \times T_m + T_h + E where : m = miss ratio = 1 - (hit ratio) : T_m = time to make main-memory access when there is a miss (or, with a multi-level cache, average memory reference time for the next-lower cache) : T_h= latency: time to reference the cache (should be the same for hits and misses) : E = secondary effects, such as queuing effects in multiprocessor systems A cache has two primary figures o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Velocity (memory Cache)

Velocity is a measurement of speed in a certain direction of motion. It is a fundamental concept in kinematics, the branch of classical mechanics that describes the motion of physical objects. Velocity is a vector quantity, meaning that both magnitude and direction are needed to define it. The scalar absolute value (magnitude) of velocity is called , being a coherent derived unit whose quantity is measured in the SI (metric system) as metres per second (m/s or m⋅s−1). For example, "5 metres per second" is a scalar, whereas "5 metres per second east" is a vector. If there is a change in speed, direction or both, then the object is said to be undergoing an ''acceleration''. Definition Average velocity The average velocity of an object over a period of time is its change in position, \Delta s, divided by the duration of the period, \Delta t, given mathematically as\bar=\frac. Instantaneous velocity The instantaneous velocity of an object is the limit average velocity ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Tarantool

Tarantool is an in-memory computing platform with a flexible data schema, best used for creating high-performance applications. Two main parts of it are an in-memory database and a Lua application server. Tarantool maintains data in memory and ensures crash resistance with write-ahead logging and snapshotting. It includes a Lua interpreter and interactive console, but also accepts connections from programs in several other languages. History Mail.Ru, one of the largest Internet companies in Russia, started the project in 2008 as part of the development of Moy Mir (My World) social network. In 2010 for a project head it hired a former technical lead from MySQL. Open-source contributors have been active especially in the area of external-language connectors for C, Golang, Perl, PHP, Python, Ruby, and node.js. Tarantool became part of the Mail.Ru backbone, used for dynamic content such as user sessions, unsent instant messages, task queues, and a caching layer for tradition ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Redis

Redis (; Remote Dictionary Server) is an in-memory key–value database, used as a distributed cache and message broker, with optional durability. Because it holds all data in memory and because of its design, Redis offers low- latency reads and writes, making it particularly suitable for use cases that require a cache. Redis is the most popular NoSQL database, and one of the most popular databases overall. The project was developed and maintained by Salvatore Sanfilippo, starting in 2009. From 2015 until 2020, he led a project core team sponsored by Redis Ltd. Salvatore Sanfilippo left Redis as the maintainer in 2020. In 2021 Redis Labs dropped the Labs from its name and now is known simply as "Redis". In 2018, some modules for Redis adopted a modified Apache 2.0 with a Commons Clause. In 2024, the main Redis code switched from the open-source BSD-3 license to being dual-licensed under the Redis Source Available License v2 and the Server Side Public License v1. On May ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Riak

Riak (pronounced "ree-ack" ) is a distributed NoSQL key-value data store that offers high availability, fault tolerance, operational simplicity, and scalability. Riak moved to an entirely open-source project in August 2017, with many of the licensed Enterprise Edition features being incorporated. Riak implements the principles from Amazon's Dynamo paper with heavy influence from the CAP theorem. Written in Erlang, Riak has fault-tolerant data replication and automatic data distribution across the cluster for performance and resilience. Riak has a pluggable backend for its core storage, with the default storage backend being Bitcask. LevelDB is also supported, with other options (such as the pure-Erlang Leveled) available depending on the version. Riak was originally developed by engineers employed by Basho Technologies and maintained by them until 2017 when the rights were sold to bet365 after Basho went into receivership. Main features ;Fault-tolerant availabili ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Oracle Coherence

In computing, Oracle Coherence (originally Tangosol Coherence) is a Java-based distributed cache and in-memory data grid. It is claimed to be intended for systems that require high availability, high scalability and low latency, particularly in cases when traditional relational database management systems provide insufficient throughput, or insufficient performance. History Tangosol Coherence was created by Cameron Purdy and Gene Gleyzer, and initially released in December, 2001. Oracle Corporation acquired Tangosol Inc., the original owner of the product, in April 2007, at which point it had more than 100 direct customers. Tangosol Coherence was also embedded in a number of other companies' software products, some of which belonged to Oracle Corporation's competitors. Features Coherence provides mechanisms to integrate with other services using TopLink, Java Persistence API, Oracle Golden Gate and other platforms using APIs provided by Coherence. Coherence can be used to m ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Memcached

Memcached (pronounced variously /mɛmkæʃˈdiː/ ''mem-cash-dee'' or /ˈmɛmkæʃt/ ''mem-cashed'') is a general-purpose distributed memory-caching system. It is often used to speed up dynamic database-driven websites by caching data and objects in RAM to reduce the number of times an external data source (such as a database or API) must be read. Memcached is free and open-source software, licensed under the Revised BSD license. Memcached runs on Unix-like operating systems (Linux and macOS) and on Microsoft Windows. It depends on the libevent library. Memcached's APIs provide a very large hash table distributed across multiple machines. When the table is full, subsequent inserts cause older data to be purged in least recently used (LRU) order. Applications using Memcached typically layer requests and additions into RAM before falling back on a slower backing store, such as a database. Memcached has no internal mechanism to track misses which may happen. However, some third p ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |