
Discrete Gaussian Kernel

In the areas of computer vision, image analysis and signal processing, the notion of scale-space representation is used for processing measurement data at multiple scales, and specifically to enhance or suppress image features over different ranges of scale (see the article on scale space). A special type of scale-space representation is provided by the Gaussian scale space, where the image data in ''N'' dimensions is subjected to smoothing by Gaussian convolution. Most of the theory for Gaussian scale space deals with continuous images, whereas an implementation has to face the fact that most measurement data are discrete. Hence, the theoretical problem arises of how to discretize the continuous theory while either preserving or closely approximating the desirable theoretical properties that lead to the choice of the Gaussian kernel (see the article on scale-space axioms). This article describes basic approaches for this that have been developed in the liter ...

Computer Vision

Computer vision is an interdisciplinary scientific field that deals with how computers can gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to understand and automate tasks that the human visual system can do. Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the forms of decisions. Understanding in this context means the transformation of visual images (the input of the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scientific discipline of computer vision is concerned with the theory ...

Discrete Fourier Transform

In mathematics, the discrete Fourier transform (DFT) converts a finite sequence of equally-spaced samples of a function into a same-length sequence of equally-spaced samples of the discrete-time Fourier transform (DTFT), which is a complex-valued function of frequency. The interval at which the DTFT is sampled is the reciprocal of the duration of the input sequence. An inverse DFT is a Fourier series, using the DTFT samples as coefficients of complex sinusoids at the corresponding DTFT frequencies. It has the same sample-values as the original input sequence. The DFT is therefore said to be a frequency domain representation of the original input sequence. If the original sequence spans all the non-zero values of a function, its DTFT is continuous (and periodic), and the DFT provides discrete samples of one cycle. If the original sequence is one cycle of a periodic function, the DFT provides all the non-zero values of one DTFT cycle. The DFT is the most important discret ...
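As a rough illustration of the forward and inverse transforms described above, the following sketch computes a naive O(N^2) DFT with the Python standard library. The function names `dft` and `idft` and the sample values are chosen here for illustration only; the sign and 1/N normalization follow one common convention, which is an assumption rather than something the text fixes.

```python
import cmath

def dft(x):
    """Naive O(N^2) discrete Fourier transform of a finite sequence."""
    n = len(x)
    return [
        sum(x[k] * cmath.exp(-2j * cmath.pi * m * k / n) for k in range(n))
        for m in range(n)
    ]

def idft(X):
    """Inverse transform: a Fourier series whose coefficients are the DFT samples."""
    n = len(X)
    return [
        sum(X[m] * cmath.exp(2j * cmath.pi * m * k / n) for m in range(n)) / n
        for k in range(n)
    ]

# Illustrative input: four equally spaced samples.
samples = [1.0, 2.0, 0.0, -1.0]
spectrum = dft(samples)      # same length as the input
recovered = idft(spectrum)   # matches the input up to rounding error
```

The round trip through `dft` and `idft` returns the original sample values, which is the sense in which the DFT is a frequency domain representation of the input sequence. A practical implementation would normally use a fast Fourier transform instead of this quadratic loop.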

Multi-scale Approaches

The scale space representation of a signal obtained by Gaussian smoothing satisfies a number of special properties, scale-space axioms, which make it into a special form of multi-scale representation. There are, however, also other types of "multi-scale approaches" in the areas of computer vision, image processing and signal processing, in particular the notion of wavelets. The purpose of this article is to describe a few of these approaches: Scale-space theory for one-dimensional signals For ''one-dimensional signals'', there exists quite a well-developed theory for continuous and discrete kernels that guarantee that new local extrema or zero-crossings cannot be created by a convolution operation. For ''continuous signals'', it holds that all scale-space kernels can be decomposed into the following sets of primitive smoothing kernels: * the ''Gaussian kernel'' :g(x, t) = \frac{1}{\sqrt{2 \pi t}} \exp(-x^2/(2t)) where t > 0, * ''truncated exponential'' kernels (filters with one real pole in the ''s''-plane ...
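A minimal sketch of Gaussian smoothing for a one-dimensional discrete signal, assuming the kernel g(x, t) = exp(-x^2/(2t)) / sqrt(2 pi t) with variance t. Sampling the continuous kernel at integer positions, truncating it at a fixed radius, renormalizing it, and clamping at the signal borders are all simplifying assumptions of this sketch; they are not the only way to discretize the theory, and the helper names are hypothetical.

```python
import math

def gaussian(x, t):
    """Continuous Gaussian kernel g(x, t) with variance t > 0."""
    return math.exp(-x * x / (2.0 * t)) / math.sqrt(2.0 * math.pi * t)

def sampled_kernel(t, radius):
    """Sample g at integers -radius..radius and renormalize to unit sum."""
    weights = [gaussian(n, t) for n in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def smooth(signal, kernel):
    """Convolve with a symmetric kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = min(max(i + j - radius, 0), len(signal) - 1)
            acc += w * signal[idx]
        out.append(acc)
    return out

kernel = sampled_kernel(t=2.0, radius=6)
noisy = [0.0, 3.0, 1.0, 4.0, 0.0, 5.0, 2.0, 6.0, 1.0, 0.0]
smoothed = smooth(noisy, kernel)
```

Because the renormalized weights are non-negative and sum to one, every output value is a weighted average of input values and therefore stays within the range of the input.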

Pyramid (image Processing)

Pyramid, or pyramid representation, is a type of multi-scale signal representation developed by the computer vision, image processing and signal processing communities, in which a signal or an image is subject to repeated smoothing and subsampling. Pyramid representation is a predecessor to scale-space representation and multiresolution analysis. Pyramid generation There are two main types of pyramids: lowpass and bandpass. A lowpass pyramid is made by smoothing the image with an appropriate smoothing filter and then subsampling the smoothed image, usually by a factor of 2 along each coordinate direction. The resulting image is then subjected to the same procedure, and the cycle is repeated multiple times. Each cycle of this process results in a smaller image with increased smoothing, but with decreased spatial sampling density (that is, decreased image resolution). If illustrated graphically, the entire multi-scale representation will look like a pyramid, with the original i ...
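The smooth-then-subsample cycle of a lowpass pyramid can be sketched as follows for a one-dimensional signal. The [1, 2, 1]/4 binomial filter, the border clamping, and the names `smooth_121` and `lowpass_pyramid` are assumptions made for this illustration; the text only asks for "an appropriate smoothing filter", and an image would apply the same step along each coordinate direction.

```python
def smooth_121(signal):
    """Smooth with the binomial filter [1, 2, 1] / 4, clamping at the borders."""
    last = len(signal) - 1
    return [
        0.25 * signal[max(i - 1, 0)]
        + 0.5 * signal[i]
        + 0.25 * signal[min(i + 1, last)]
        for i in range(len(signal))
    ]

def lowpass_pyramid(signal, levels):
    """Return [level 0, level 1, ...], each level smoothed and subsampled by 2."""
    pyramid = [list(signal)]
    for _ in range(levels):
        current = pyramid[-1]
        if len(current) < 2:
            break  # nothing left to subsample
        pyramid.append(smooth_121(current)[::2])
    return pyramid

signal = [float(i % 4) for i in range(16)]
levels = lowpass_pyramid(signal, 3)
sizes = [len(level) for level in levels]
```

For the 16-sample input above, the level sizes halve at each cycle, which is what gives the representation its pyramid shape when drawn.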

Smoothing Filter Pole Zero Plot

In statistics and image processing, to smooth a data set is to create an approximating function that attempts to capture important patterns in the data, while leaving out noise or other fine-scale structures/rapid phenomena. In smoothing, the data points of a signal are modified so individual points higher than the adjacent points (presumably because of noise) are reduced, and points that are lower than the adjacent points are increased, leading to a smoother signal. Smoothing may be used in two important ways that can aid in data analysis: (1) by being able to extract more information from the data as long as the assumption of smoothing is reasonable and (2) by being able to provide analyses that are both flexible and robust. Many different algorithms are used in smoothing. Smoothing may be distinguished from the related and partially overlapping concept of curve fitting in the following ways: * curve fitting often involves the use of an explicit function form for the result, wher ...

FIR Filter

In signal processing, a finite impulse response (FIR) filter is a filter whose impulse response (or response to any finite length input) is of ''finite'' duration, because it settles to zero in finite time. This is in contrast to infinite impulse response (IIR) filters, which may have internal feedback and may continue to respond indefinitely (usually decaying). The impulse response (that is, the output in response to a Kronecker delta input) of an Nth-order discrete-time FIR filter lasts exactly N+1 samples (from first nonzero element through last nonzero element) before it then settles to zero. FIR filters can be discrete-time or continuous-time, and digital or analog. Definition For a causal discrete-time FIR filter of order ''N'', each value of the output sequence is a weighted sum of the most recent input values: :\begin{align} y[n] &= b_0 x[n] + b_1 x[n-1] + \cdots + b_N x[n-N] \\ &= \sum_{i=0}^{N} b_i \cdot x[n-i] \end{align} where: * x[n] is the input signal, * y[n] is the output si ...
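The weighted sum that defines a causal FIR filter, y[n] = b_0 x[n] + b_1 x[n-1] + ... + b_N x[n-N], can be sketched directly. This sketch assumes that input samples before the start of the sequence are zero, and the coefficient values are arbitrary illustrations rather than a designed filter.

```python
def fir_filter(b, x):
    """Causal FIR filter: y[n] = sum_i b[i] * x[n - i], with x[n] = 0 for n < 0."""
    y = []
    for n in range(len(x)):
        acc = 0.0
        for i, coeff in enumerate(b):
            if n - i >= 0:  # samples before the start are treated as zero
                acc += coeff * x[n - i]
        y.append(acc)
    return y

# Order N = 2 filter, so there are N + 1 = 3 coefficients (illustrative values).
b = [0.5, 0.3, 0.2]
impulse = [1.0, 0.0, 0.0, 0.0, 0.0]  # Kronecker delta input
response = fir_filter(b, impulse)
```

Feeding in a Kronecker delta returns the coefficients themselves followed by zeros, so the impulse response of this order-2 filter lasts N + 1 = 3 samples before settling to zero.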

N-jet

An ''N''-jet is the set of (partial) derivatives of a function f(x) up to order ''N''. Specifically, in the area of computer vision, the ''N''-jet is usually computed from a scale space representation L of the input image f(x, y), and the partial derivatives of L are used as a basis for expressing various types of visual modules. For example, algorithms for tasks such as feature detection, feature classification, stereo matching, tracking and object recognition can be expressed in terms of ''N''-jets computed at one or several scales in scale space.

Scale-space Axioms

In image processing and computer vision, a scale space framework can be used to represent an image as a family of gradually smoothed images. This framework is very general and a variety of scale space representations exist. A typical approach for choosing a particular type of scale space representation is to establish a set of scale-space axioms, describing basic properties of the desired scale-space representation and often chosen so as to make the representation useful in practical applications. Once established, the axioms narrow the possible scale-space representations to a smaller class, typically with only a few free parameters. A set of standard scale space axioms, discussed below, leads to the linear Gaussian scale-space, which is the most common type of scale space used in image processing and computer vision. Scale space axioms for the linear scale-space representation The linear scale space representation L(x, y, t) = (T_t f)(x, y) = g(x, y, t)*f(x, y) of signal ...

Semi-group

In mathematics, a semigroup is an algebraic structure consisting of a set together with an associative internal binary operation on it. The binary operation of a semigroup is most often denoted multiplicatively: ''x''·''y'', or simply ''xy'', denotes the result of applying the semigroup operation to the ordered pair (''x'', ''y''). Associativity is formally expressed as (''x''·''y'')·''z'' = ''x''·(''y''·''z'') for all ''x'', ''y'' and ''z'' in the semigroup. Semigroups may be considered a special case of magmas, where the operation is associative, or as a generalization of groups, without requiring the existence of an identity element or inverses. The closure axiom is implied by the definition of a binary operation on a set. Some authors thus omit it and specify three axioms for a group and only one axiom (associativity) for a semigroup. As in the case of groups or magmas, the semigroup operation need not be commutative, so ''x''·''y'' is not necessarily equal to ''y''·''x''; a well-known example of an operation that is ass ...
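For a finite set, the semigroup axioms can be checked by brute force. The sketch below tests closure and associativity for a few operations; the helper names and the example operations (the "left projection" ''x''·''y'' = ''x'', the maximum, and subtraction) are chosen here for illustration and are not taken from the text above.

```python
from itertools import product

def is_closed(elements, op):
    """Every product of two elements lies in the set again."""
    return all(op(x, y) in elements for x, y in product(elements, repeat=2))

def is_associative(elements, op):
    """(x . y) . z == x . (y . z) for all triples."""
    return all(
        op(op(x, y), z) == op(x, op(y, z))
        for x, y, z in product(elements, repeat=3)
    )

def is_commutative(elements, op):
    """x . y == y . x for all pairs."""
    return all(op(x, y) == op(y, x) for x, y in product(elements, repeat=2))

def is_semigroup(elements, op):
    return is_closed(elements, op) and is_associative(elements, op)

s = {0, 1, 2}
left = lambda x, y: x        # left projection: associative, not commutative
sub = lambda x, y: x - y     # subtraction: neither closed on s nor associative

left_is_semigroup = is_semigroup(s, left)
left_is_commutative = is_commutative(s, left)
max_is_semigroup = is_semigroup(s, max)
sub_is_semigroup = is_semigroup(s, sub)
```

The left projection forms a semigroup that is not commutative, which illustrates that ''x''·''y'' need not equal ''y''·''x''. Subtraction fails both closure on this set and associativity.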

Zero (complex Analysis)

In complex analysis (a branch of mathematics), a pole is a certain type of singularity of a complex-valued function of a complex variable. In some sense, it is the simplest type of singularity. Technically, a point z_0 is a pole of a function f if it is a zero of the function 1/f and 1/f is holomorphic in some neighbourhood of z_0 (that is, complex differentiable in a neighbourhood of z_0). A function f is meromorphic in an open set U if for every point z of U there is a neighborhood of z in which either f or 1/f is holomorphic. If f is meromorphic in U, then a zero of f is a pole of 1/f, and a pole of f is a zero of 1/f. This induces a duality between ''zeros'' and ''poles'', that is fundamental for the study of meromorphic functions. For example, if a function is meromorphic on the whole complex plane plus the point at infinity, then the sum of the multiplicity (mathematics ...

George Pólya

George Pólya (Hungarian: Pólya György; December 13, 1887 – September 7, 1985) was a Hungarian mathematician. He was a professor of mathematics from 1914 to 1940 at ETH Zürich and from 1940 to 1953 at Stanford University. He made fundamental contributions to combinatorics, number theory, numerical analysis and probability theory. He is also noted for his work in heuristics and mathematics education. He has been described as one of The Martians, an informal category which included one of his most famous students at ETH Zürich, John von Neumann. Life and works Pólya was born in Budapest, Austria-Hungary, to Anna Deutsch and Jakab Pólya, Hungarian Jews who had converted to Christianity in 1886. Although his parents were religious and he was baptized into the Catholic Church upon birth, George eventually grew up to be an agnostic. He was a professor of mathematics from 1914 to 1940 at ETH Zürich in Switzerland and from 1940 to 1953 at Stanford University. He remained a Pr ...

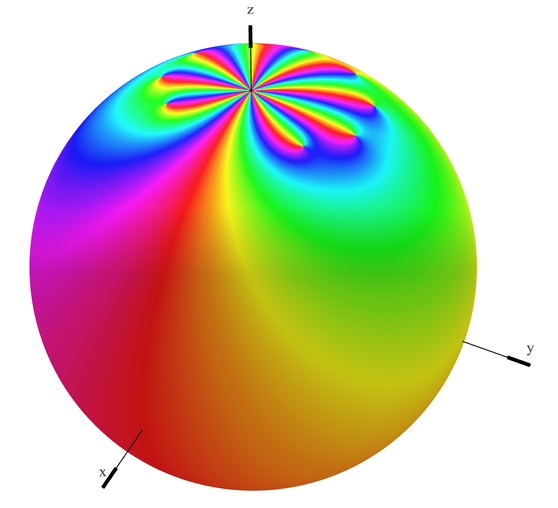

Pole (complex Analysis)

In complex analysis (a branch of mathematics), a pole is a certain type of singularity of a complex-valued function of a complex variable. In some sense, it is the simplest type of singularity. Technically, a point z_0 is a pole of a function f if it is a zero of the function 1/f and 1/f is holomorphic in some neighbourhood of z_0 (that is, complex differentiable in a neighbourhood of z_0). A function f is meromorphic in an open set U if for every point z of U there is a neighborhood of z in which either f or 1/f is holomorphic. If f is meromorphic in U, then a zero of f is a pole of 1/f, and a pole of f is a zero of 1/f. This induces a duality between ''zeros'' and ''poles'', that is fundamental for the study of meromorphic functions. For example, if a function is meromorphic on the whole complex plane plus the point at infinity, then the sum of the multiplicities of its poles equals the sum of the multiplicities of its zeros. Definitions A function of a complex variable is holomorphic in an open domai ...
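As a small worked example of the balance between zeros and poles, consider a rational function chosen here purely for illustration. Counting multiplicities on the complex plane plus the point at infinity gives equal totals:

```latex
f(z) = \frac{z - 1}{(z + 2)^2}
\qquad
\begin{cases}
  z = 1  & \text{zero of multiplicity } 1, \\
  z = -2 & \text{pole of multiplicity } 2.
\end{cases}

% Behaviour at infinity: substitute z = 1/w and look at w = 0.
f\!\left(\tfrac{1}{w}\right)
  = \frac{\tfrac{1}{w} - 1}{\left(\tfrac{1}{w} + 2\right)^2}
  = \frac{w\,(1 - w)}{(1 + 2w)^2}
\quad\Longrightarrow\quad
\text{zero of multiplicity } 1 \text{ at } z = \infty.

% Totals: zeros 1 + 1 = 2, poles 2.
```

The reciprocal 1/f swaps the roles: it has a pole of multiplicity 1 at z = 1 and at infinity, and a zero of multiplicity 2 at z = -2.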
