
Discovery System (AI Research)

A discovery system is an artificial intelligence system that attempts to discover new scientific concepts or laws. The aim of discovery systems is to automate scientific data analysis and the scientific discovery process. Ideally, an artificial intelligence system should be able to search systematically through the space of all possible hypotheses and yield the hypothesis (or set of equally likely hypotheses) that best describes the complex patterns in data. During the era known as the second AI summer (approximately 1978-1987), various systems akin to the era's dominant expert systems were developed to tackle the problem of extracting scientific hypotheses from data, with or without interacting with a human scientist. These systems included Autoclass, Automated Mathematician, and Eurisko, which aimed at general-purpose hypothesis discovery, and more specific systems such as Dalton, which uncovers molecular properties from data. The dream of building systems that discover scientifi ...

Artificial Intelligence

Artificial intelligence (AI) is intelligence (perceiving, synthesizing, and inferring information) demonstrated by machines, as opposed to intelligence displayed by animals and humans. Example tasks in which this is done include speech recognition, computer vision, translation between (natural) languages, as well as other mappings of inputs. The ''Oxford English Dictionary'' of Oxford University Press defines artificial intelligence as: the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages. AI applications include advanced web search engines (e.g., Google), recommendation systems (used by YouTube, Amazon and Netflix), understanding human speech (such as Siri and Alexa), self-driving cars (e.g., Tesla), automated decision-making and competing at the highest level in strategic game systems (such as chess and Go). ...

Glauber

Glauber is a scientific discovery method written in the context of computational philosophy of science. It is related to machine learning in artificial intelligence. Glauber was written, among other programs, by Pat Langley, Herbert A. Simon, G. Bradshaw and J. Zytkow to demonstrate how scientific discovery may be obtained by problem solving methods, in their book ''Scientific Discovery: Computational Explorations of the Creative Processes''. Their programs simulate historical scientific discoveries based on the empirical evidence known at the time of discovery. Glauber was named after Johann Rudolph Glauber, a 17th-century alchemist whose work helped to develop acid-base theory. Glauber (the method) rediscovers the law of acid-alkali reactions producing salts, given the qualities of substances and observed facts (the results of mixing substances). From that knowledge Glauber discovers that substances that taste bitter react with substances tasting sour, producing substances tast ...

Applications Of Artificial Intelligence

Artificial intelligence (AI) has been used in applications to alleviate certain problems throughout industry and academia. AI, like electricity or computers, is a general purpose technology that has a multitude of applications. It has been used in language translation, image recognition, credit scoring, e-commerce and other domains. Internet and e-commerce: Search engines. Recommendation systems: A recommendation system predicts the "rating" or "preference" a user would give to an item (Francesco Ricci, Lior Rokach, and Bracha Shapira, "Introduction to Recommender Systems Handbook", Recommender Systems Handbook, Springer, 2011, pp. 1-35). Recommender systems are used in a variety of areas, such as generating playlists for video and music services, product recommendations for online stores, or content recommendations for social media platforms and open web content recommenders (Pankaj Gupta, Ashish Goel, Jimmy Lin, Aneesh Sharma, Dong Wang, and Reza Bosagh ZadeWT ...)

QLattice

The QLattice is a software library which provides a framework for symbolic regression in Python. It works on Linux, Windows, and macOS. The QLattice algorithm is developed by the Danish/Spanish AI research company Abzu. Since its creation, the QLattice has attracted significant attention, mainly for the inherent explainability of the models it produces. At the GECCO conference in Boston, MA in July 2022, the QLattice was announced as the winner of the synthetic track of the SRBench competition. Features: The QLattice works with data in categorical and numeric format. It allows the user to quickly generate, plot and inspect mathematical formulae that can potentially explain the generating process of the data. It is designed for easy interaction with the researcher, allowing the user to guide the search based on their preexisting knowledge. Scientific results: The QLattice mainly targets scientists, and integrates well with the scientific workflow. It has been used in research int ...

Symbolic Regression

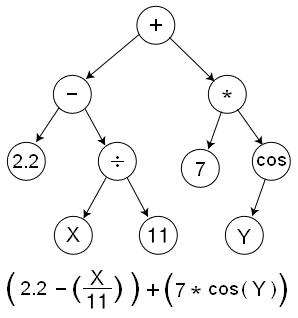

Symbolic regression (SR) is a type of regression analysis that searches the space of mathematical expressions to find the model that best fits a given dataset, both in terms of accuracy and simplicity. No particular model is provided as a starting point for symbolic regression. Instead, initial expressions are formed by randomly combining mathematical building blocks such as mathematical operators, analytic functions, constants, and state variables. Usually, a subset of these primitives will be specified by the person operating it, but that is not a requirement of the technique. The symbolic regression problem for mathematical functions has been tackled with a variety of methods, including recombining equations, most commonly using genetic programming, as well as more recent methods utilizing Bayesian methods and neural networks. Another non-classical alternative method to SR is called Universal Functions Originator (UFO), which has a different mechanism, search-space, and buildin ...
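
The idea of forming candidate expressions by randomly combining building blocks and scoring them on accuracy and simplicity can be illustrated with a minimal Python sketch. It uses plain random search rather than genetic programming, and every name in it (`BINARY_OPS`, `TERMINALS`, `random_expr`, `score`, `random_search`, the `penalty` weight) is an assumption made for illustration, not part of any particular SR library.

```python
import random

# Illustrative building blocks (an assumption; the operator normally picks these).
BINARY_OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
}
TERMINALS = ["x", 1.0, 2.0]  # one state variable and two constants


def random_expr(rng, depth=2):
    """Randomly combine building blocks into an expression tree (nested tuples)."""
    if depth == 0 or rng.random() < 0.3:
        return rng.choice(TERMINALS)
    op = rng.choice(sorted(BINARY_OPS))
    return (op, random_expr(rng, depth - 1), random_expr(rng, depth - 1))


def evaluate(expr, x):
    """Evaluate an expression tree at the point x."""
    if expr == "x":
        return x
    if isinstance(expr, float):
        return expr
    op, left, right = expr
    return BINARY_OPS[op](evaluate(left, x), evaluate(right, x))


def size(expr):
    """Number of nodes in the tree, used as a simplicity measure."""
    if isinstance(expr, tuple):
        return 1 + size(expr[1]) + size(expr[2])
    return 1


def score(expr, data, penalty=0.01):
    """Mean squared error plus a small penalty per node (accuracy and simplicity)."""
    mse = sum((evaluate(expr, x) - y) ** 2 for x, y in data) / len(data)
    return mse + penalty * size(expr)


def random_search(data, trials=20000, seed=0):
    """Sample random expressions and keep the one with the lowest score."""
    rng = random.Random(seed)
    candidates = (random_expr(rng) for _ in range(trials))
    return min(candidates, key=lambda e: score(e, data))


if __name__ == "__main__":
    # Samples of y = x^2 + 1; the search is not told this form in advance.
    samples = [(float(x), float(x * x + 1)) for x in range(-3, 4)]
    print(random_search(samples))
```

Practical systems replace the blind sampling in `random_search` with guided search such as genetic programming, which recombines and mutates promising expressions instead of drawing each candidate from scratch.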

Eureqa

Eureqa is a proprietary modeling engine originally created by Cornell's Artificial Intelligence Lab and later commercialized by Nutonian, Inc. The software uses evolutionary search to determine mathematical equations that describe sets of data in their simplest form. This task is generally referred to as symbolic regression in the literature. Origin and Development: Since the 1970s, the primary way companies have performed data science has been to hire teams of data scientists, and equip them with tools like R, Python, SAS, and SQL to execute predictive and statistical modeling. In 2007, Michael Schmidt, then a PhD student in Computational Biology at Cornell, believed that the volume of data and complexity of problems that humans could solve were ever-increasing, and the number of data scientists was not. Instead of relying on more ''people'' to fill the data science gap, Schmidt and his advisor, Hod Lipson, invented Eureqa, believing machines could extract meaning from data ...

Hod Lipson

Hod Lipson (born 1967) is an Israeli-American robotics engineer. He is the director of Columbia University's Creative Machines Lab. Lipson's work focuses on evolutionary robotics, design automation, rapid prototyping, artificial life, and creating machines that can demonstrate some aspects of human creativity. His publications have been cited more than 26,000 times, and he has an h-index of 73. Lipson is interviewed in the 2018 documentary on artificial intelligence ''Do You Trust This Computer?'' Biography: Lipson received B.Sc. (1989) and Ph.D. (1998) degrees in Mechanical Engineering from Technion Israel Institute of Technology. Before joining the faculty of Columbia University in 2015, he was a professor at Cornell University for 14 years. Prior to Cornell, he was an assistant professor in the Computer Science Department at Brandeis University, and a postdoctoral researcher at MIT's Mechanical Engineering Department. Research: Lipson has been involved with machine l ...

Cornell University

Cornell University is a private statutory land-grant research university based in Ithaca, New York. It is a member of the Ivy League. Founded in 1865 by Ezra Cornell and Andrew Dickson White, Cornell was established with the intention to teach and make contributions in all fields of knowledge, from the classics to the sciences, and from the theoretical to the applied. These ideals, unconventional for the time, are captured in Cornell's founding principle, a popular 1868 quotation from founder Ezra Cornell: "I would found an institution where any person can find instruction in any study." Cornell is ranked among the top global universities. The university is organized into seven undergraduate colleges and seven graduate divisions at its main Ithaca campus, with each college and division defining its specific admission standards and academic programs in near autonomy. The university also administers three satellite campuses, two in New York City and one in Educatio ...

Lisp (programming Language)

Lisp (historically LISP) is a family of programming languages with a long history and a distinctive, fully parenthesized prefix notation. Originally specified in 1960, Lisp is the second-oldest high-level programming language still in common use, after Fortran. Lisp has changed since its early days, and many dialects have existed over its history. Today, the best-known general-purpose Lisp dialects are Common Lisp, Scheme, Racket and Clojure. Lisp was originally created as a practical mathematical notation for computer programs, influenced by (though not originally derived from) the notation of Alonzo Church's lambda calculus. It quickly became a favored programming language for artificial intelligence (AI) research. As one of the earliest programming languages, Lisp pioneered many ideas in computer science, including tree data structures, automatic storage management, dynamic typing, conditionals, higher-order functions, recursion, the self-hosting compiler, and the rea ...

Expert System

In artificial intelligence, an expert system is a computer system emulating the decision-making ability of a human expert. Expert systems are designed to solve complex problems by reasoning through bodies of knowledge, represented mainly as if–then rules rather than through conventional procedural code. The first expert systems were created in the 1970s and then proliferated in the 1980s. Expert systems were among the first truly successful forms of artificial intelligence (AI) software. An expert system is divided into two subsystems: the inference engine and the knowledge base. The knowledge base represents facts and rules. The inference engine applies the rules to the known facts to deduce new facts. Inference engines can also include explanation and debugging abilities. History (Early development): Soon after the dawn of modern computers in the late 1940s and early 1950s, researchers started realizing the immense potential these machines had for modern society. One ...
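
The split between a knowledge base (facts and if-then rules) and an inference engine that applies the rules to deduce new facts can be sketched in a few lines of Python. This is a minimal forward-chaining illustration under assumed names (`forward_chain`, `RULES`, and the toy facts are all hypothetical), not a description of any historical expert system.

```python
def forward_chain(facts, rules):
    """Inference engine: apply if-then rules until no new fact can be deduced.

    `rules` is an iterable of (premises, conclusion) pairs; a rule fires when
    all of its premises are among the known facts.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                changed = True
    return known


# Knowledge base: toy rules invented for this example.
RULES = [
    (("has_fever", "has_cough"), "possible_flu"),
    (("possible_flu",), "recommend_rest"),
]

if __name__ == "__main__":
    print(sorted(forward_chain({"has_fever", "has_cough"}, RULES)))
```

Keeping the rules as data, separate from the loop that applies them, mirrors the division described above: the knowledge base can be extended without touching the inference engine.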

Black Box

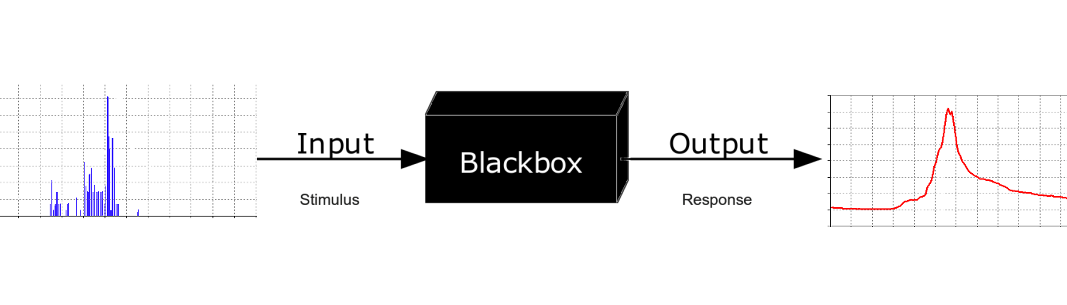

In science, computing, and engineering, a black box is a system which can be viewed in terms of its inputs and outputs (or transfer characteristics), without any knowledge of its internal workings. Its implementation is "opaque" (black). The term can be used to refer to many inner workings, such as those of a transistor, an engine, an algorithm, the human brain, or an institution or government. To analyse an open system with a typical "black box approach", only the behavior of the stimulus/response will be accounted for, to infer the (unknown) ''box''. The usual representation of this ''black box system'' is a data flow diagram centered in the box. The opposite of a black box is a system where the inner components or logic are available for inspection, which is most commonly referred to as a white box (sometimes also known as a "clear box" or a "glass box"). History: The modern meaning of the term "black box" seems to have entered the English language around 1945. In elect ...

Neural Network

A neural network is a network or circuit of biological neurons, or, in a modern sense, an artificial neural network, composed of artificial neurons or nodes. Thus, a neural network is either a biological neural network, made up of biological neurons, or an artificial neural network, used for solving artificial intelligence (AI) problems. The connections of the biological neuron are modeled in artificial neural networks as weights between nodes. A positive weight reflects an excitatory connection, while a negative weight reflects an inhibitory connection. All inputs are modified by a weight and summed. This activity is referred to as a linear combination. Finally, an activation function controls the amplitude of the output. For example, an acceptable range of output is usually between 0 and 1, or it could be −1 and 1. These artificial networks may be used for predictive modeling, adaptive control and applications where they can be trained via a dataset. Self-learning re ...
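
The weighted sum followed by an activation function can be made concrete with a single artificial neuron in Python. This is a minimal sketch: the logistic sigmoid is one common choice of activation that keeps the output between 0 and 1, and the names `sigmoid` and `neuron_output` are assumptions for illustration.

```python
import math


def sigmoid(z):
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights):
    """Weight each input, sum the results (a linear combination), then activate."""
    z = sum(w * x for w, x in zip(weights, inputs))
    return sigmoid(z)


if __name__ == "__main__":
    # A positive (excitatory) and a negative (inhibitory) weight of equal size.
    print(neuron_output([1.0, 1.0], [0.5, -0.5]))
```

With equal and opposite weights on equal inputs, the linear combination is zero, so the sigmoid returns its midpoint value.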