
Digital Imaging And Communications In Medicine

Digital Imaging and Communications in Medicine (DICOM) is the standard for the communication and management of medical imaging information and related data. DICOM is most commonly used for storing and transmitting medical images, enabling the integration of medical imaging devices such as scanners, servers, workstations, printers, network hardware, and picture archiving and communication systems (PACS) from multiple manufacturers. It has been widely adopted by hospitals and is making inroads into smaller applications such as dentists' and doctors' offices. DICOM files can be exchanged between two entities that are capable of receiving image and patient data in DICOM format. The different devices come with DICOM Conformance Statements that state which DICOM classes they support. The standard includes a file format definition and a network communications protocol that uses TCP/IP to communicate between systems. The National Electrical Manufacturers Association (NEMA) holds the cop ...

Data Transmission

Data transmission and data reception or, more broadly, data communication or digital communications is the transfer and reception of data in the form of a digital bitstream or a digitized analog signal transmitted over a point-to-point or point-to-multipoint communication channel. Examples of such channels are copper wires, optical fibers, wireless communication using radio spectrum, storage media and computer buses. The data are represented as an electromagnetic signal, such as an electrical voltage, radiowave, microwave, or infrared signal. Analog transmission is a method of conveying voice, data, image, signal or video information using a continuous signal which varies in amplitude, phase, or some other property in proportion to that of a variable. The messages are either represented by a sequence of pulses by means of a line code (''baseband transmission''), or by a limited set of continuously varying waveforms (''passband transmission''), using a digital modulat ...

Transmission Control Protocol

The Transmission Control Protocol (TCP) is one of the main protocols of the Internet protocol suite. It originated in the initial network implementation in which it complemented the Internet Protocol (IP). Therefore, the entire suite is commonly referred to as TCP/IP. TCP provides reliable, ordered, and error-checked delivery of a stream of octets (bytes) between applications running on hosts communicating via an IP network. Major internet applications such as the World Wide Web, email, remote administration, and file transfer rely on TCP, which is part of the Transport Layer of the TCP/IP suite. SSL/TLS often runs on top of TCP. TCP is connection-oriented, and a connection between client and server is established before data can be sent. The server must be listening (passive open) for connection requests from clients before a connection is established. The three-way handshake (active open), retransmission, and error detection add to reliability but lengthen latency. Applica ...
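The passive open and active open described above can be illustrated with a minimal sketch using Python's standard `socket` module on the loopback interface. The function name `run_echo_once` and the echo behaviour are assumptions chosen for illustration; they are not part of TCP itself.

```python
import socket
import threading


def run_echo_once(message: bytes) -> bytes:
    """Send one message over a loopback TCP connection and return the echoed reply."""
    # Passive open: the server binds and listens before any client connects.
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]

    def serve() -> None:
        # accept() returns once a client has completed the handshake.
        conn, _ = server.accept()
        with conn:
            conn.sendall(conn.recv(1024))  # echo a single short message

    thread = threading.Thread(target=serve)
    thread.start()

    # Active open: connecting triggers the three-way handshake.
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(message)
        reply = client.recv(1024)

    thread.join()
    server.close()
    return reply
```

Calling `run_echo_once(b"ping")` should return the same bytes. The sketch reads a single short message per side; a real application would loop on `recv` because TCP delivers a byte stream rather than discrete messages.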

Lempel–Ziv–Welch

Lempel–Ziv–Welch (LZW) is a universal lossless data compression algorithm created by Abraham Lempel, Jacob Ziv, and Terry Welch. It was published by Welch in 1984 as an improved implementation of the LZ78 algorithm published by Lempel and Ziv in 1978. The algorithm is simple to implement and has the potential for very high throughput in hardware implementations. It is the algorithm of the widely used Unix file compression utility compress and is used in the GIF image format. Algorithm: The scenario described by Welch's 1984 paper encodes sequences of 8-bit data as fixed-length 12-bit codes. The codes from 0 to 255 represent 1-character sequences consisting of the corresponding 8-bit character, and the codes 256 through 4095 are created in a dictionary for sequences encountered in the data as it is encoded. At each stage in compression, input bytes are gathered into a sequence until the next character would make a sequence with no code yet in the dictionary. The co ...
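The encoding loop described above can be sketched as follows. This is a minimal illustration, not a reference implementation: the function name `lzw_encode` and the `max_codes` parameter are assumptions, the emit-and-extend step follows the usual textbook formulation of LZW, and the packing of codes into 12-bit fields is omitted.

```python
def lzw_encode(data: bytes, max_codes: int = 4096) -> list[int]:
    """Return the list of LZW codes for data (dictionary capped at max_codes entries)."""
    # Codes 0..255 stand for the corresponding single-byte sequences.
    table = {bytes([i]): i for i in range(256)}
    next_code = 256
    codes = []
    current = b""
    for byte in data:
        candidate = current + bytes([byte])
        if candidate in table:
            # Keep gathering bytes while the sequence is still in the dictionary.
            current = candidate
        else:
            # Emit the code for the longest known sequence, then register
            # the extended sequence if the dictionary still has room.
            codes.append(table[current])
            if next_code < max_codes:
                table[candidate] = next_code
                next_code += 1
            current = bytes([byte])
    if current:
        codes.append(table[current])
    return codes
```

For example, `lzw_encode(b"ABABABA")` yields `[65, 66, 256, 258]`: the two literal bytes are followed by dictionary codes for `AB` and `ABA`, so seven input bytes become four codes.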

Run-length Encoding

Run-length encoding (RLE) is a form of lossless data compression in which ''runs'' of data (sequences in which the same data value occurs in many consecutive data elements) are stored as a single data value and count, rather than as the original run. This is most efficient on data that contains many such runs, for example, simple graphic images such as icons, line drawings, Conway's Game of Life, and animations. For files that do not have many runs, RLE could increase the file size. RLE may also be used to refer to an early graphics file format supported by CompuServe for compressing black and white images, but was widely supplanted by their later Graphics Interchange Format (GIF). RLE also refers to a little-used image format in Windows 3.x, with the extension rle, which is a run-length encoded bitmap, used to compress the Windows 3.x startup screen. Example: Consider a screen containing plain black text on a solid white background. There will be many long runs of white pixel ...
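A minimal sketch of the idea, assuming pixels are represented as characters in a string (`W` for white, `B` for black); the function names `rle_encode` and `rle_decode` are illustrative only.

```python
from itertools import groupby


def rle_encode(data: str) -> list[tuple[str, int]]:
    """Collapse each run of identical characters into a (value, count) pair."""
    return [(value, len(list(run))) for value, run in groupby(data)]


def rle_decode(pairs: list[tuple[str, int]]) -> str:
    """Expand (value, count) pairs back into the original string."""
    return "".join(value * count for value, count in pairs)
```

`rle_encode("WWWWBWW")` gives `[("W", 4), ("B", 1), ("W", 2)]`, and decoding those pairs restores the input exactly. On input without runs, such as `"ABC"`, every character becomes its own pair, which shows why RLE can increase the size of such data.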

JPEG 2000

JPEG 2000 (JP2) is an image compression standard and coding system. It was developed from 1997 to 2000 by a Joint Photographic Experts Group committee chaired by Touradj Ebrahimi (later the JPEG president), with the intention of superseding their original JPEG standard (created in 1992), which is based on a discrete cosine transform (DCT), with a newly designed, wavelet-based method. The standardized filename extension is .jp2 for ISO/IEC 15444-1 conforming files and .jpx for the extended part-2 specifications, published as ISO/IEC 15444-2. The registered MIME types are defined in RFC 3745. For ISO/IEC 15444-1 it is image/jp2. JPEG 2000 code streams are regions of interest that offer several mechanisms to support spatial random access or region of interest access at varying degrees of granularity. It is possible to store different parts of the same picture using different quality. JPEG 2000 is a compression standard based on a discrete wavelet transform (D ...

Lossless JPEG

Lossless JPEG is a 1993 addition to the JPEG standard by the Joint Photographic Experts Group to enable lossless compression. However, the term may also be used to refer to all lossless compression schemes developed by the group, including JPEG 2000 and JPEG-LS. Lossless JPEG was developed as a late addition to JPEG in 1993, using a completely different technique from the lossy JPEG standard. It uses a predictive scheme based on the three nearest (causal) neighbors (upper, left, and upper-left), and entropy coding is used on the prediction error. The standard Independent JPEG Group libraries cannot encode or decode it, but Ken Murchison of Oceana Matrix Ltd. wrote a patch that extends the IJG library to handle lossless JPEG. Lossless JPEG has some popularity in medical imaging, and is used in DNG and some digital cameras to compress raw images, but otherwise was never widely adopted. Adobe's DNG SDK provides a software library for encoding and decoding lossless JPEG with up to 16 bit ...
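The predictive step can be illustrated with a short sketch. It uses one possible combination of the three neighbors, left + upper - upper-left, and stops at the prediction error; the entropy coding stage is omitted. The function names and the border handling (first pixel predicted as 0, first row from the left neighbor, first column from the upper neighbor) are simplifying assumptions for illustration, not the rules of the standard.

```python
def _predict(pixels: list[list[int]], x: int, y: int) -> int:
    """Predict pixel (x, y) from its already-known causal neighbors."""
    if x == 0 and y == 0:
        return 0  # assumption: no neighbors available, predict 0
    if y == 0:
        return pixels[y][x - 1]  # first row: left neighbor only
    if x == 0:
        return pixels[y - 1][x]  # first column: upper neighbor only
    return pixels[y][x - 1] + pixels[y - 1][x] - pixels[y - 1][x - 1]


def prediction_residuals(image: list[list[int]]) -> list[list[int]]:
    """Return the prediction error for every pixel of a grayscale image."""
    return [
        [value - _predict(image, x, y) for x, value in enumerate(row)]
        for y, row in enumerate(image)
    ]


def reconstruct(residuals: list[list[int]]) -> list[list[int]]:
    """Rebuild the image exactly by adding each residual to its prediction."""
    image: list[list[int]] = []
    for y, row in enumerate(residuals):
        image.append([])
        for x, error in enumerate(row):
            image[y].append(0)  # placeholder; not read by the predictor
            image[y][x] = _predict(image, x, y) + error
    return image
```

For the smooth gradient `[[10, 11, 12], [11, 12, 13]]` the residuals are `[[10, 1, 1], [1, 0, 0]]`. Small residuals such as these are what make the subsequent entropy coding effective, and `reconstruct` recovers the original pixels without loss.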

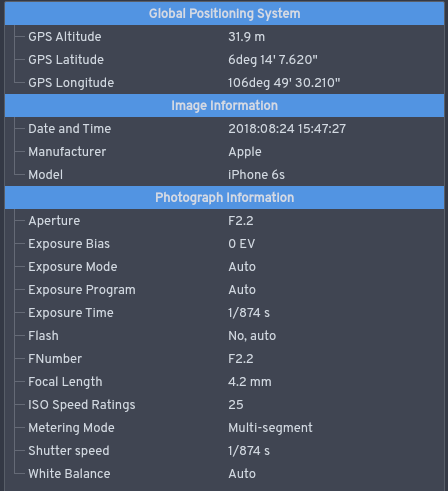

Exchangeable Image File Format

Exchangeable image file format (officially Exif, according to JEIDA/JEITA/CIPA specifications) is a standard that specifies formats for images, sound, and ancillary tags used by digital cameras (including smartphones), scanners and other systems handling image and sound files recorded by digital cameras. The specification uses the following existing encoding formats with the addition of specific metadata tags: JPEG lossy coding for compressed image files, TIFF Rev. 6.0 (RGB or YCbCr) for uncompressed image files, and RIFF WAV for audio files (linear PCM or ITU-T G.711 μ-law PCM for uncompressed audio data, and IMA-ADPCM for compressed audio data). It does not support JPEG 2000 or GIF encoded images. This standard consists of the Exif image file specification and the Exif audio file specification. Background: Exif is supported by almost all camera manufacturers. The metadata tags defined in the Exif standard cover a broad spectrum:
* Camera settings: This includes static ...

JPEG

JPEG is a commonly used method of lossy compression for digital images, particularly for those images produced by digital photography. The degree of compression can be adjusted, allowing a selectable tradeoff between storage size and image quality. JPEG typically achieves 10:1 compression with little perceptible loss in image quality. Since its introduction in 1992, JPEG has been the most widely used image compression standard in the world, and the most widely used digital image format, with several billion JPEG images produced every day as of 2015. The term "JPEG" is an acronym for the Joint Photographic Experts Group, which created the standard in 1992. JPEG was largely responsible for the proliferation of digital images and digital photos across the Internet, and later social media. JPEG compression is used in a number of image file formats. JPEG/Exif is the most common image format used by digital cameras and other photographic image capture devices; along with JPEG ...

Data Set

A data set (or dataset) is a collection of data. In the case of tabular data, a data set corresponds to one or more database tables, where every column of a table represents a particular variable, and each row corresponds to a given record of the data set in question. The data set lists values for each of the variables, such as the height and weight of an object, for each member of the data set. Data sets can also consist of a collection of documents or files. In the open data discipline, a data set is the unit used to measure the information released in a public open data repository. The European data.europa.eu portal aggregates more than a million data sets. Some other issues (real-time data sources, non-relational data sets, etc.) increase the difficulty of reaching a consensus about it. Properties: Several characteristics define a data set's structure and properties. These include the number and types of the attributes or variables, and various statistical measures applic ...

Airport Security

Airport security includes the techniques and methods used in an attempt to protect passengers, staff, aircraft, and airport property from malicious harm, crime, terrorism, and other threats. Aviation security is a combination of measures and human and material resources in order to safeguard civil aviation against acts of unlawful interference. Unlawful interference could be acts of terrorism, sabotage, threat to life and property, communication of false threat, bombing, etc. Description: Large numbers of people pass through airports every day. This presents potential targets for terrorism and other forms of crime because of the number of people located in one place. Similarly, the high concentration of people on large airliners raises the potential death toll of attacks on aircraft, and the ability to use a hijacked airplane as a lethal weapon may provide an alluring target for terrorism (such as during the September 11 attacks). Airport security attempts to prevent ...

Nondestructive Testing

Nondestructive testing (NDT) is any of a wide group of analysis techniques used in the science and technology industry to evaluate the properties of a material, component or system without causing damage. The terms nondestructive examination (NDE), nondestructive inspection (NDI), and nondestructive evaluation (NDE) are also commonly used to describe this technology. Because NDT does not permanently alter the article being inspected, it is a highly valuable technique that can save both money and time in product evaluation, troubleshooting, and research. The six most frequently used NDT methods are eddy-current, magnetic-particle, liquid penetrant, radiographic, ultrasonic, and visual testing. NDT is commonly used in forensic engineering, mechanical engineering, petroleum engineering, electrical engineering, civil engineering, systems engineering, aeronautical engineering, medicine, and art. Innovations in the field of nondestructive testing have had a profound impact on medical im ...