
Data Wrangling

Data wrangling, sometimes referred to as data munging, is the process of transforming and mapping data from one "raw" data form into another format with the intent of making it more appropriate and valuable for a variety of downstream purposes such as analytics. The goal of data wrangling is to ensure that the data are of good quality and useful. Data analysts typically spend the majority of their time on data wrangling rather than on the actual analysis of the data. The process of data wrangling may include further munging, data visualization, data aggregation, training a statistical model, as well as many other potential uses. Data wrangling typically follows a set of general steps, which begin with extracting the data in a raw form from the data source, "munging" the raw data (e.g. sorting) or parsing the data into predefined data structures, and finally depositing the resulting content into a data sink for storage and future use. Background The "wrangler" non-technical term is of ...
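The three general steps described above (extract, munge, deposit) can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the inline CSV text, the field names `name` and `age`, and the function names `extract`, `munge`, and `deposit` are all hypothetical and chosen for the example, and an in-memory buffer stands in for a real data sink.

```python
import csv
import io
import json

# Hypothetical raw input: inconsistent casing, stray spaces, a missing age.
RAW_CSV = """name,age
 alice ,30
BOB,
carol,25
"""


def extract(raw_text):
    """Extract: read rows from the raw source (here, an in-memory CSV string)."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def munge(rows):
    """Munge: tidy names, parse ages, drop rows without an age, sort by age."""
    cleaned = []
    for row in rows:
        age_text = (row["age"] or "").strip()
        if not age_text:
            continue  # skip records whose age is missing
        cleaned.append({"name": row["name"].strip().title(), "age": int(age_text)})
    return sorted(cleaned, key=lambda record: record["age"])


def deposit(records, sink):
    """Deposit: write the cleaned records to a data sink as JSON."""
    json.dump(records, sink)


sink = io.StringIO()  # stands in for a file or database
deposit(munge(extract(RAW_CSV)), sink)
result = json.loads(sink.getvalue())
print(result)
```

In practice each step would be far more involved, but the shape of the pipeline (source, transformation, sink) stays the same.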

Data Mapping

In computing and data management, data mapping is the process of creating data element mappings between two distinct data models. Data mapping is used as a first step for a wide variety of data integration tasks, including:

* Data transformation or data mediation between a data source and a destination
* Identification of data relationships as part of data lineage analysis
* Discovery of hidden sensitive data, such as the last four digits of a social security number hidden in another user ID, as part of a data masking or de-identification project
* Consolidation of multiple databases into a single database and identifying redundant columns of data for consolidation or elimination

For example, a company that would like to transmit and receive purchases and invoices with other companies might use data mapping to create data maps from a company's data to standardized ANSI ASC X12 messages for items such as purchase orders and invoices. Standards X12 standards are generic Electro ...
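A very simple form of data mapping, renaming the fields of a source record to the names used by a target model, might look like the following sketch. The field names in `FIELD_MAP`, the sample record, and the helper `map_record` are hypothetical; the example does not reproduce the X12 format or any real schema.

```python
# Hypothetical mapping from source field names to target field names.
FIELD_MAP = {
    "cust_nm": "customer_name",
    "ord_dt": "order_date",
    "amt": "total_amount",
}


def map_record(source_record, field_map):
    """Return the record under target names, plus any source fields with no mapping."""
    mapped = {}
    unmapped = []
    for key, value in source_record.items():
        if key in field_map:
            mapped[field_map[key]] = value
        else:
            unmapped.append(key)  # candidates for review or elimination
    return mapped, unmapped


source = {"cust_nm": "Acme", "ord_dt": "2024-01-15", "amt": 99.5, "legacy_flag": "Y"}
target, leftover = map_record(source, FIELD_MAP)
print(target)
print(leftover)
```

Reporting the unmapped fields separately, rather than silently dropping them, is one way to surface redundant or unexpected columns during consolidation.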

Data Science

Data science is an interdisciplinary field that uses scientific methods, processes, algorithms and systems to extract or extrapolate knowledge and insights from noisy, structured and unstructured data, and apply knowledge from data across a broad range of application domains. Data science is related to data mining, machine learning, big data, computational statistics and analytics. Data science is a "concept to unify statistics, data analysis, informatics, and their related methods" in order to "understand and analyse actual phenomena" with data. It uses techniques and theories drawn from many fields within the context of mathematics, statistics, computer science, information science, and domain knowledge. However, data science is different from computer science and information science. Turing Award winner Jim Gray imagined data science as a "fourth paradigm" of science (empirical, theoretical, computational, and now data-driven) and asserted that "everything about sc ...

Programming By Example

In computer science, programming by example (PbE), also termed programming by demonstration or more generally demonstrational programming, is an end-user development technique for teaching a computer new behavior by demonstrating actions on concrete examples. The system records user actions and infers a generalized program that can be used on new examples. PbE is intended to be easier than traditional computer programming, which generally requires learning and using a programming language. Many PbE systems have been developed as research prototypes, but few have found widespread real-world application. More recently, PbE has proved to be a useful paradigm for creating scientific workflows. PbE is used in two independent clients for the BioMOBY protocol: Seahawk and Gbrowse moby. The term programming by demonstration (PbD) has also been mostly adopted by robotics researchers for teaching new behaviors to a robot through a physical demonstration of the task. The usual distinc ...

Recommender System

A recommender system, or a recommendation system (sometimes replacing 'system' with a synonym such as platform or engine), is a subclass of information filtering system that provides suggestions for items that are most pertinent to a particular user. Typically, the suggestions refer to various decision-making processes, such as what product to purchase, what music to listen to, or what online news to read. Recommender systems are particularly useful when an individual needs to choose an item from a potentially overwhelming number of items that a service may offer. Recommender systems are used in a variety of areas, with commonly recognised examples taking the form of playlist generators for video and music services, product recommenders for online stores, or content recommenders for social media platforms and open web content recommenders. (Pankaj Gupta, Ashish Goel, Jimmy Lin, Aneesh Sharma, Dong Wang, and Reza Bosagh Zade, "WTF: The who-to-follow system at Twitter", Proceedings of the ...

R (programming Language)

R is a programming language for statistical computing and graphics supported by the R Core Team and the R Foundation for Statistical Computing. Created by statisticians Ross Ihaka and Robert Gentleman, R is used among data miners, bioinformaticians and statisticians for data analysis and developing statistical software. Users have created packages to augment the functions of the R language. According to user surveys and studies of scholarly literature databases, R is one of the most commonly used programming languages in data mining. R ranks 12th in the TIOBE index, a measure of programming language popularity, in which the language peaked in 8th place in August 2020. The official R software environment is an open-source free software environment within the GNU package, available under the GNU General Public License. It is written primarily in C, Fortran, and R itself (partially self-hosting). Precompiled executables are provided for various operating systems. R ...

Python (programming Language)

Python is a high-level, general-purpose programming language. Its design philosophy emphasizes code readability with the use of significant indentation. Python is dynamically typed and garbage-collected. It supports multiple programming paradigms, including structured (particularly procedural), object-oriented and functional programming. It is often described as a "batteries included" language due to its comprehensive standard library. Guido van Rossum began working on Python in the late 1980s as a successor to the ABC programming language and first released it in 1991 as Python 0.9.0. Python 2.0 was released in 2000 and introduced new features such as list comprehensions, cycle-detecting garbage collection, reference counting, and Unicode support. Python 3.0, released in 2008, was a major revision that is not completely backward-compatible with earlier versions. Python 2 was discontinued with version 2.7.18 in 2020. Python consistently ranks as ...

KNIME

KNIME, the Konstanz Information Miner, is a free and open-source data analytics, reporting and integration platform. KNIME integrates various components for machine learning and data mining through its modular data pipelining "Building Blocks of Analytics" concept. A graphical user interface and use of JDBC allow assembly of nodes blending different data sources, including preprocessing (ETL: Extraction, Transformation, Loading), for modeling, data analysis and visualization without, or with only minimal, programming. Since 2006, KNIME has been used in pharmaceutical research; it is also used in other areas such as CRM customer data analysis, business intelligence, text mining and financial data analysis. Recently, attempts were made to use KNIME as a robotic process automation (RPA) tool. KNIME's headquarters are based in Zurich, with additional offices in Konstanz, Berlin, and Austin (USA). History The development of KNIME was started in January 2004 by a team of software enginee ...

Data Lake

A data lake is a system or repository of data stored in its natural/raw format, usually object blobs or files. A data lake is usually a single store of data including raw copies of source system data, sensor data, social data etc., and transformed data used for tasks such as reporting, visualization, advanced analytics and machine learning. A data lake can include structured data from relational databases (rows and columns), semi-structured data (CSV, logs, XML, JSON), unstructured data (emails, documents, PDFs) and binary data (images, audio, video). A data lake can be established "on premises" (within an organization's data centers) or "in the cloud" (using cloud services from vendors such as Amazon, Microsoft, or Google). Background James Dixon, then chief technology officer at Pentaho, coined the term by 2011 to contrast it with data mart, which is a smaller repository of interesting attributes derived from raw data. In promoting data lakes, he argued that data marts h ...

Data Warehouse

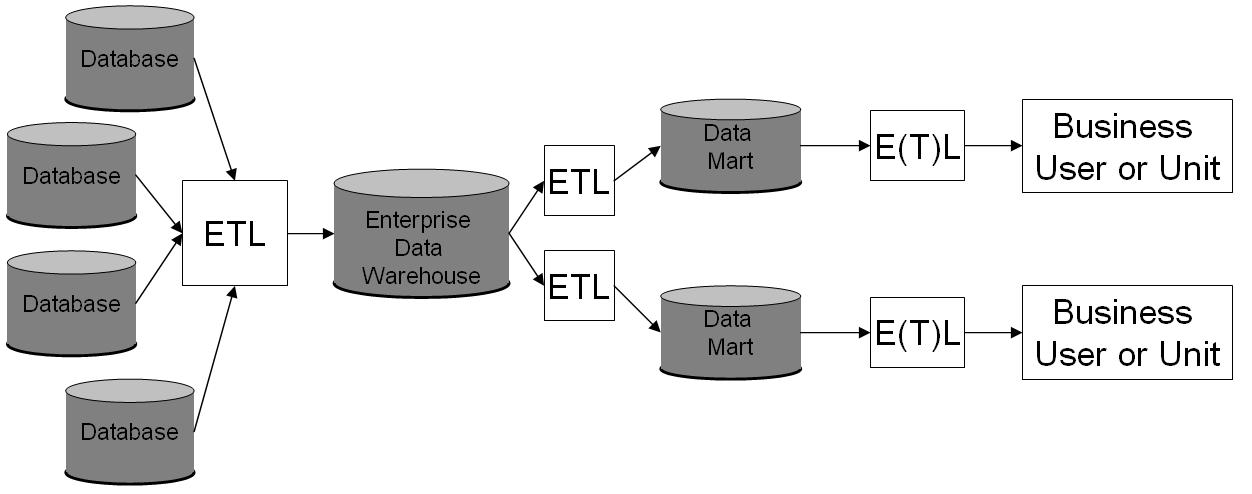

In computing, a data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is considered a core component of business intelligence. DWs are central repositories of integrated data from one or more disparate sources. They store current and historical data in one single place, where it is used for creating analytical reports for workers throughout the enterprise. The data stored in the warehouse is uploaded from the operational systems (such as marketing or sales). The data may pass through an operational data store and may require data cleansing for additional operations to ensure data quality before it is used in the DW for reporting. Extract, transform, load (ETL) and extract, load, transform (ELT) are the two main approaches used to build a data warehouse system. ETL-based data warehousing The typical extract, transform, load (ETL)-based data warehouse uses ...

Data Architect

A data architect is a practitioner of data architecture, a data management discipline concerned with designing, creating, deploying and managing an organization's data architecture. Data architects define how the data will be stored, consumed, integrated and managed by different data entities and IT systems, as well as any applications using or processing that data in some way. It is closely allied with business architecture and is considered to be one of the four domains of enterprise architecture. Role According to the Data Management Body of Knowledge, the data architect “provides a standard common business vocabulary, expresses strategic data requirements, outlines high level integrated designs to meet these requirements, and aligns with enterprise strategy and related business architecture.” According to the Open Group Architecture Framework (TOGAF), a data architect is expected to set data architecture principles, create models of data that enable the implementation of ...

Data Consistency

Data consistency refers to whether the same data kept at different places do or do not match. Point-in-time consistency Point-in-time consistency is an important property of backup files and a critical objective of software that creates backups. It is also relevant to the design of disk memory systems, specifically relating to what happens when they are unexpectedly shut down. As a relevant backup example, consider a website with a database such as the online encyclopedia Wikipedia, which needs to be operational around the clock, but also must be backed up regularly to protect against disaster. Portions of Wikipedia are being updated every minute of every day; meanwhile, Wikipedia's database is stored on servers in the form of one or several very large files which require minutes or hours to back up. These large files, as with any database, contain numerous data structures which reference each other by location. For example, some structures are indexes which ...

Data Validation

In computer science, data validation is the process of ensuring that data have undergone data cleansing and have data quality, that is, that they are both correct and useful. It uses routines, often called "validation rules", "validation constraints", or "check routines", that check for correctness, meaningfulness, and security of data that are input to the system. The rules may be implemented through the automated facilities of a data dictionary, or by the inclusion of explicit application program validation logic of the computer and its application. This is distinct from formal verification, which attempts to prove or disprove the correctness of algorithms for implementing a specification or property. Overview Data validation is intended to provide certain well-defined guarantees for fitness and consistency of data in an application or automated system. Data validation rules can be defined and designed using various methodologies, and be deployed in various contexts. Thei ...
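As a rough illustration of explicit validation logic, the sketch below expresses "validation rules" as small check functions applied to each field of a record. The rule set, the field names `email` and `age`, the accepted age range, and the deliberately simplistic email pattern are all assumptions made for the example, not rules taken from any standard.

```python
import re

# Hypothetical validation rules: each maps a field name to a check routine.
RULES = {
    # Deliberately simple pattern: something@something.something, no spaces.
    "email": lambda v: isinstance(v, str)
    and re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None,
    # Assumed plausible range for a human age.
    "age": lambda v: isinstance(v, int) and 0 <= v <= 150,
}


def validate(record, rules):
    """Return a list of error messages; an empty list means the record passed."""
    errors = []
    for field, check in rules.items():
        if field not in record:
            errors.append(f"{field}: missing")
        elif not check(record[field]):
            errors.append(f"{field}: invalid value {record[field]!r}")
    return errors


print(validate({"email": "a@example.com", "age": 30}, RULES))
print(validate({"email": "not-an-email", "age": -5}, RULES))
print(validate({"age": 42}, RULES))
```

Collecting all errors instead of stopping at the first one lets a caller report every problem with a record in a single pass.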
