
DG/UX

DG/UX is a discontinued Unix operating system developed by Data General for its Eclipse MV minicomputer line, and later for the AViiON workstation and server line (both Motorola 88000 and Intel IA-32-based variants).

Overview

DG/UX 1.00, released in March 1985, was based on UNIX System V Release 2 with additions from 4.1BSD. By 1987, DG/UX 3.10 had been released, with 4.2BSD TCP/IP networking, NFS and the X Window System included. DG/UX 4.00, in 1988, was a comprehensive redesign of the system, based on System V Release 3 and supporting symmetric multiprocessing on the Eclipse MV. The 4.00 filesystem was based on the AOS/VS II filesystem and, using the logical disk facility, could span multiple disks. DG/UX 5.4, released around 1991, replaced the legacy Unix file buffer cache with unified, demand-paged virtual memory management. Later versions were based on System V Release 4. On the AViiON, DG/UX supported multiprocessor machines at a time when most variants of Unix did not. The ...

Data General

Data General Corporation was one of the first minicomputer firms of the late 1960s. Three of the four founders were former employees of Digital Equipment Corporation (DEC). Their first product, 1969's Data General Nova, was a 16-bit minicomputer intended to both outperform and cost less than the equivalent from DEC, the 12-bit PDP-8. A basic Nova system cost less than a similar PDP-8 while running faster, offering easy expandability, being significantly smaller, and proving more reliable in the field. Combined with Data General RDOS (DG/RDOS) and programming languages like Data General Business Basic, Novas provided a multi-user platform far ahead of many contemporary systems. A series of updated Nova machines was released through the early 1970s that kept the Nova line at the front of the 16-bit mini world. The Nova was followed by the Eclipse series, which offered much larger memory capacity while still being able to run Nova code without modification. The Eclipse lau ...

Network File System (protocol)

Network File System (NFS) is a distributed file system protocol originally developed by Sun Microsystems (Sun) in 1984, allowing a user on a client computer to access files over a computer network much like local storage is accessed. NFS, like many other protocols, builds on the Open Network Computing Remote Procedure Call (ONC RPC) system. NFS is an open IETF standard defined in a Request for Comments (RFC), allowing anyone to implement the protocol.

Versions and variations

Sun used version 1 only for in-house experimental purposes. When the development team added substantial changes to NFS version 1 and released it outside of Sun, they decided to release the new version as v2, so that version interoperation and RPC version fallback could be tested.

NFSv2

Version 2 of the protocol (defined in RFC 1094, March 1989) originally operated only over User Datagram Protocol (UDP). Its designers meant to keep the server side stateless, with locking (for exampl ...

Non-Uniform Memory Access

Non-uniform memory access (NUMA) is a computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor or memory shared between processors). The benefits of NUMA are limited to particular workloads, notably on servers where the data is often associated strongly with certain tasks or users. NUMA architectures logically follow in scaling from symmetric multiprocessing (SMP) architectures. They were developed commercially during the 1990s by Unisys, Convex Computer (later Hewlett-Packard), Honeywell Information Systems Italy (HISI) (later Groupe Bull), Silicon Graphics (later Silicon Graphics International), Sequent Computer Systems (later IBM), Data General (later EMC, now Dell Technologies), and Digital (later Compaq, then HP, now HPE). Techniques developed by these companies later ...

Processor Affinity

Processor affinity, or CPU pinning or "cache affinity", enables the binding and unbinding of a process or a thread to a central processing unit (CPU) or a range of CPUs, so that the process or thread will execute only on the designated CPU or CPUs rather than any CPU. This can be viewed as a modification of the native central queue scheduling algorithm in a symmetric multiprocessing operating system. Each item in the queue has a tag indicating its kin processor. At the time of resource allocation, each task is allocated to its kin processor in preference to others. Processor affinity takes advantage of the fact that remnants of a process that was run on a given processor may remain in that processor's state (for example, data in the cache memory) after another process was run on that processor. Scheduling a CPU-intensive process that has few interrupts to execute on the same processor may improve its performance by reducing degrading events such as cache misses, but may slow down ...
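
The central-queue modification described in this entry can be illustrated with a toy model. The sketch below is not taken from any real scheduler: the function name `pick_task` and the `(name, kin)` task representation are hypothetical, and the fallback to the oldest task when no kin task is waiting is an assumption about what "in preference to others" means.

```python
from collections import deque


def pick_task(queue, cpu):
    """Remove and return the next (name, kin) task for `cpu`.

    Hypothetical toy model: prefer the oldest queued task whose kin tag
    matches `cpu`; if none matches, fall back to the oldest task overall.
    Returns None when the queue is empty.
    """
    for i, (name, kin) in enumerate(queue):
        if kin == cpu:
            del queue[i]  # take the kin task out of the central queue
            return name, kin
    return queue.popleft() if queue else None


if __name__ == "__main__":
    # Central queue shared by all CPUs; each item carries its kin CPU tag.
    central = deque([("A", 0), ("B", 1), ("C", 0)])
    print(pick_task(central, 1))  # CPU 1 skips ahead to its kin task
    print(pick_task(central, 1))  # no kin task left, so it takes the oldest
```

A real scheduler would also weigh priorities and load balance; this model only shows the kin-first lookup.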

SCSI

Small Computer System Interface (SCSI) is a set of standards for physically connecting and transferring data between computers and peripheral devices. The SCSI standards define commands, protocols, and electrical, optical and logical interfaces. The SCSI standard defines command sets for specific peripheral device types; the presence of "unknown" as one of these types means that in theory it can be used as an interface to almost any device, but the standard is highly pragmatic and addressed toward commercial requirements. The initial Parallel SCSI was most commonly used for hard disk drives and tape drives, but it can connect a wide range of other devices, including scanners and CD drives, although not all controllers can handle all devices. The ancestral SCSI standard, X3.131-1986, generally referred to as SCSI-1, was published by the X3T9 technical committee of the American National Standards Institute (ANSI) in 1986. SCSI-2 was published in August 1990 as X3.T9.2/86-109 ...

Logical Volume Management

In computer storage, logical volume management or LVM provides a method of allocating space on mass-storage devices that is more flexible than conventional partitioning schemes to store volumes. In particular, a volume manager can concatenate, stripe together or otherwise combine partitions (or block devices in general) into larger virtual partitions that administrators can re-size or move, potentially without interrupting system use. Volume management represents just one of many forms of storage virtualization; its implementation takes place in a layer in the device-driver stack of an operating system (OS) (as opposed to within storage devices or in a network).

Design

Most volume-manager implementations share the same basic design. They start with physical volumes (PVs), which can be either hard disks, hard disk partitions, or Logical Unit Numbers (LUNs) of an external storage device. Volume management treats each PV as being composed of a sequence of chunks called ...
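
As a rough illustration of concatenation, the sketch below maps a chunk index in a combined volume back to a physical volume and an offset within it. It is a simplified model under stated assumptions, not the layout of any particular volume manager: the function name `locate` and the representation of each PV as a bare chunk count are hypothetical.

```python
from bisect import bisect_right
from itertools import accumulate


def locate(logical_chunk, pv_chunk_counts):
    """Map a logical chunk index to (pv_index, chunk_within_pv).

    Hypothetical model: PVs are concatenated in list order, and
    `pv_chunk_counts[i]` is the number of chunks on PV i.
    """
    ends = list(accumulate(pv_chunk_counts))  # running end index of each PV
    total = ends[-1] if ends else 0
    if not 0 <= logical_chunk < total:
        raise IndexError("logical chunk outside the volume")
    pv = bisect_right(ends, logical_chunk)    # first PV whose end exceeds the index
    start = ends[pv - 1] if pv else 0         # logical index where that PV begins
    return pv, logical_chunk - start


if __name__ == "__main__":
    # Three PVs of 4, 2 and 3 chunks form one 9-chunk logical volume.
    print(locate(5, [4, 2, 3]))
```

Striping would use a different mapping (interleaving chunks across PVs); only the concatenated case is shown here.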

Text Terminal

A computer terminal is an electronic or electromechanical hardware device that can be used for entering data into, and transcribing data from, a computer or a computing system. The teletype was an example of an early-day hard-copy terminal and predated the use of a computer screen by decades. Early terminals were inexpensive devices but very slow compared to punched cards or paper tape for input, yet as the technology improved and video displays were introduced, terminals pushed these older forms of interaction from the industry. A related development was time-sharing systems, which evolved in parallel and made up for any inefficiencies in the user's typing ability with the ability to support multiple users on the same machine, each at their own terminal or terminals. The function of a terminal is typically confined to transcription and input of data; a device with significant local, programmable data-processing capability may be called a "smart terminal" or fat client. A t ...

Pentium Pro

The Pentium Pro is a sixth-generation x86 microprocessor developed and manufactured by Intel and introduced on November 1, 1995. It introduced the P6 microarchitecture (sometimes termed i686) and was originally intended to replace the original Pentium in a full range of applications. While the Pentium and Pentium MMX had 3.1 and 4.5 million transistors, respectively, the Pentium Pro contained 5.5 million transistors. Later, it was reduced to a narrower role as a server and high-end desktop processor and was used in supercomputers like ASCI Red, the first computer to reach the trillion floating point operations per second (teraFLOPS) performance mark. The Pentium Pro was capable of both dual- and quad-processor configurations. It came in only one form factor, the relatively large rectangular Socket 8. The Pentium Pro was succeeded by the Pentium II Xeon in 1998.

Microarchitecture

The lead architect of Pentium Pro was Fred Pollack who was specialized ...

GNU Compiler Collection

The GNU Compiler Collection (GCC) is an optimizing compiler produced by the GNU Project supporting various programming languages, hardware architectures and operating systems. The Free Software Foundation (FSF) distributes GCC as free software under the GNU General Public License (GNU GPL). GCC is a key component of the GNU toolchain and the standard compiler for most projects related to GNU and the Linux kernel. With roughly 15 million lines of code in 2019, GCC is one of the biggest free programs in existence. It has played an important role in the growth of free software, as both a tool and an example. When it was first released in 1987 by Richard Stallman, GCC 1.0 was named the GNU C Compiler since it only handled the C programming language. It was extended to compile C++ in December of that year. Front ends were later developed for Objective-C, Objective-C++, Fortran, Ada, D and Go, among others. The OpenMP and OpenACC specifications are also supported in ...

Multiprocessor

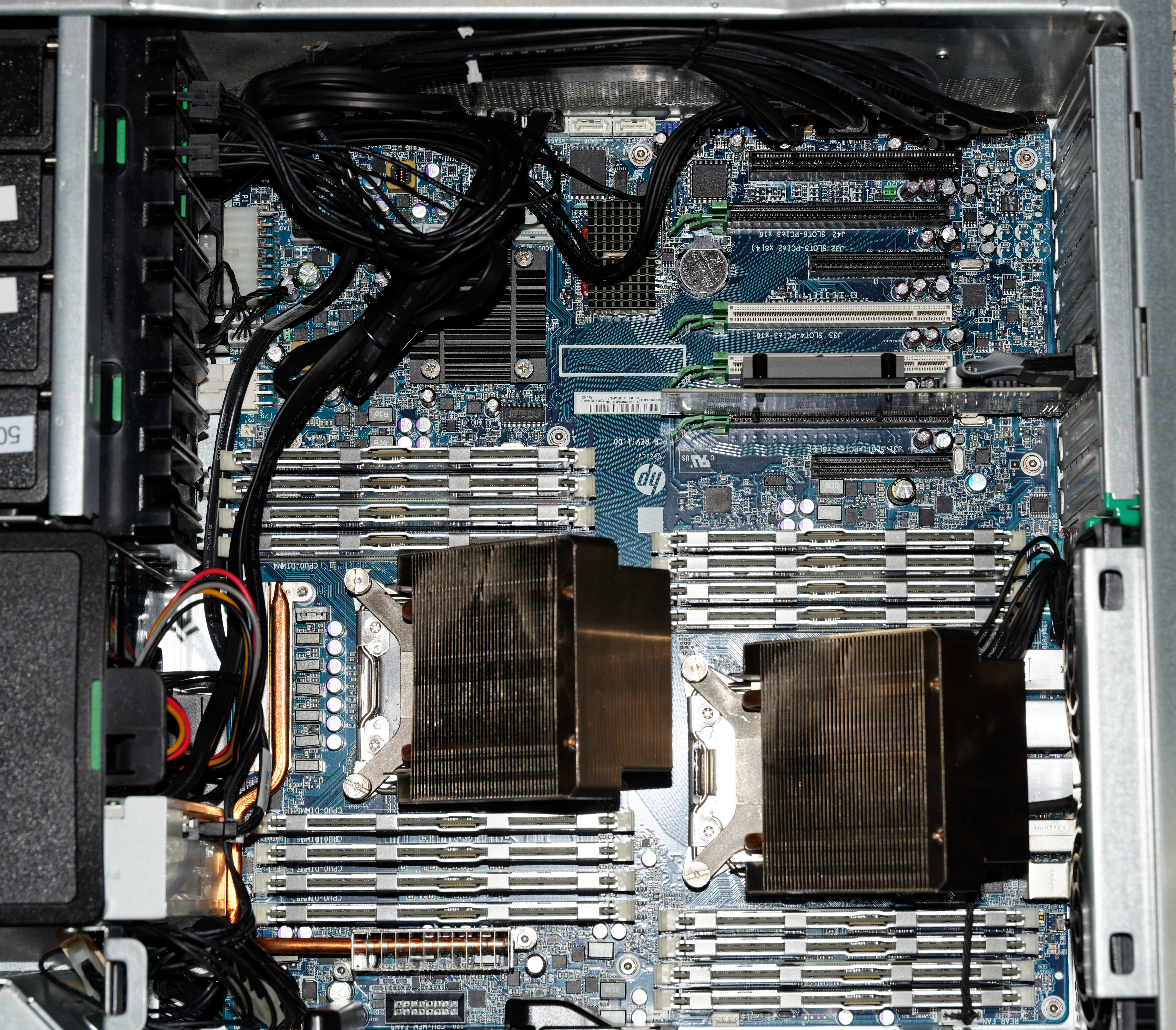

Multiprocessing is the use of two or more central processing units (CPUs) within a single computer system. The term also refers to the ability of a system to support more than one processor or the ability to allocate tasks between them. There are many variations on this basic theme, and the definition of multiprocessing can vary with context, mostly as a function of how CPUs are defined (multiple cores on one die, multiple dies in one package, multiple packages in one system unit, etc.). According to some on-line dictionaries, a multiprocessor is a computer system having two or more processing units (multiple processors) each sharing main memory and peripherals, in order to simultaneously process programs. A 2009 textbook defined multiprocessor system similarly, but noted that the processors may share "some or all of the system’s memory and I/O facilities"; it also gave tightly coupled system as a synonymous term. At the operating system level, multiprocessing is som ...

System V Release 4

Unix System V (pronounced: "System Five") is one of the first commercial versions of the Unix operating system. It was originally developed by AT&T and first released in 1983. Four major versions of System V were released, numbered 1, 2, 3, and 4. System V Release 4 (SVR4) was commercially the most successful version, being the result of an effort, marketed as Unix System Unification, which solicited the collaboration of the major Unix vendors. It was the source of several common commercial Unix features. System V is sometimes abbreviated to SysV. The AT&T-derived Unix market is divided between four System V variants: IBM's AIX, Hewlett Packard Enterprise's HP-UX and Oracle's Solaris, plus the free-software illumos forked from OpenSolaris.

Overview

Introduction

System V was the successor to 1982's UNIX System III. While AT&T developed and sold hardware that ran System V, most customers ran a version from a reseller, based on AT&T's reference implementation. ...