|

Cut-elimination

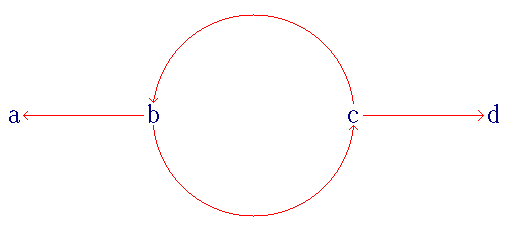

The cut-elimination theorem (or Gentzen's ''Hauptsatz'') is the central result establishing the significance of the sequent calculus. It was originally proved by Gerhard Gentzen in his landmark 1934 paper "Investigations in Logical Deduction" for the systems LJ and LK formalising intuitionistic and classical logic respectively. The cut-elimination theorem states that any judgement that possesses a proof in the sequent calculus making use of the cut rule also possesses a cut-free proof, that is, a proof that does not make use of the cut rule. The cut rule A sequent is a logical expression relating multiple formulas, in the form , which is to be read as proves , and (as glossed by Gentzen) should be understood as equivalent to the truth-function "If (A_1 and A_2 and A_3 …) then (B_1 or B_2 or B_3 …)." Note that the left-hand side (LHS) is a conjunction (and) and the right-hand side (RHS) is a disjunction (or). The LHS may have arbitrarily many or few formulae; when the L ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Theorems In The Foundations Of Mathematics

In mathematics, a theorem is a statement that has been proved, or can be proved. The ''proof'' of a theorem is a logical argument that uses the inference rules of a deductive system to establish that the theorem is a logical consequence of the axioms and previously proved theorems. In the mainstream of mathematics, the axioms and the inference rules are commonly left implicit, and, in this case, they are almost always those of Zermelo–Fraenkel set theory with the axiom of choice, or of a less powerful theory, such as Peano arithmetic. A notable exception is Wiles's proof of Fermat's Last Theorem, which involves the Grothendieck universes whose existence requires the addition of a new axiom to the set theory. Generally, an assertion that is explicitly called a theorem is a proved result that is not an immediate consequence of other known theorems. Moreover, many authors qualify as ''theorems'' only the most important results, and use the terms ''lemma'', ''proposition'' and ' ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Deduction Theorem

In mathematical logic, a deduction theorem is a metatheorem that justifies doing conditional proofs—to prove an implication ''A'' → ''B'', assume ''A'' as an hypothesis and then proceed to derive ''B''—in systems that do not have an explicit inference rule for this. Deduction theorems exist for both propositional logic and first-order logic. The deduction theorem is an important tool in Hilbert-style deduction systems because it permits one to write more comprehensible and usually much shorter proofs than would be possible without it. In certain other formal proof systems the same conveniency is provided by an explicit inference rule; for example natural deduction calls it implication introduction. In more detail, the propositional logic deduction theorem states that if a formula B is deducible from a set of assumptions \Delta \cup \ then the implication A \to B is deducible from \Delta ; in symbols, \Delta \cup \ \vdash B implies \Delta \vdash A \to B . In the sp ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Consistency Proof

In classical deductive logic, a consistent theory is one that does not lead to a logical contradiction. The lack of contradiction can be defined in either semantic or syntactic terms. The semantic definition states that a theory is consistent if it has a model, i.e., there exists an interpretation under which all formulas in the theory are true. This is the sense used in traditional Aristotelian logic, although in contemporary mathematical logic the term ''satisfiable'' is used instead. The syntactic definition states a theory T is consistent if there is no formula \varphi such that both \varphi and its negation \lnot\varphi are elements of the set of consequences of T. Let A be a set of closed sentences (informally "axioms") and \langle A\rangle the set of closed sentences provable from A under some (specified, possibly implicitly) formal deductive system. The set of axioms A is consistent when \varphi, \lnot \varphi \in \langle A \rangle for no formula \varphi. If there e ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Peano's Axioms

In mathematical logic, the Peano axioms, also known as the Dedekind–Peano axioms or the Peano postulates, are axioms for the natural numbers presented by the 19th century Italian mathematician Giuseppe Peano. These axioms have been used nearly unchanged in a number of metamathematical investigations, including research into fundamental questions of whether number theory is consistent and complete. The need to formalize arithmetic was not well appreciated until the work of Hermann Grassmann, who showed in the 1860s that many facts in arithmetic could be derived from more basic facts about the successor operation and induction. In 1881, Charles Sanders Peirce provided an axiomatization of natural-number arithmetic. In 1888, Richard Dedekind proposed another axiomatization of natural-number arithmetic, and in 1889, Peano published a simplified version of them as a collection of axioms in his book, ''The principles of arithmetic presented by a new method'' ( la, Arithm ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Gentzen's Consistency Proof

Gentzen's consistency proof is a result of proof theory in mathematical logic, published by Gerhard Gentzen in 1936. It shows that the Peano axioms of first-order arithmetic do not contain a contradiction (i.e. are "consistent"), as long as a certain other system used in the proof does not contain any contradictions either. This other system, today called "primitive recursive arithmetic with the additional principle of quantifier-free transfinite induction up to the ordinal ε0", is neither weaker nor stronger than the system of Peano axioms. Gentzen argued that it avoids the questionable modes of inference contained in Peano arithmetic and that its consistency is therefore less controversial. Gentzen's theorem Gentzen's theorem is concerned with first-order arithmetic: the theory of the natural numbers, including their addition and multiplication, axiomatized by the first-order Peano axioms. This is a "first-order" theory: the quantifiers extend over natural numbers, but ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Normal Form (term Rewriting)

In abstract rewriting, an object is in normal form if it cannot be rewritten any further, i.e. it is irreducible. Depending on the rewriting system, an object may rewrite to several normal forms or none at all. Many properties of rewriting systems relate to normal forms. Definitions Stated formally, if (''A'',→) is an abstract rewriting system, ''x''∈''A'' is in normal form if no ''y''∈''A'' exists such that ''x''→''y'', i.e. ''x'' is an irreducible term. An object ''a'' is weakly normalizing if there exists at least one particular sequence of rewrites starting from ''a'' that eventually yields a normal form. A rewriting system has the weak normalization property or is ''(weakly) normalizing'' (WN) if every object is weakly normalizing. An object ''a'' is strongly normalizing if every sequence of rewrites starting from ''a'' eventually terminates with a normal form. An abstract rewriting system is ''strongly normalizing'', ''terminating'', ''noetherian'', or has the (str ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Normalization Property (abstract Rewriting)

In abstract rewriting, an object is in normal form if it cannot be rewritten any further, i.e. it is irreducible. Depending on the rewriting system, an object may rewrite to several normal forms or none at all. Many properties of rewriting systems relate to normal forms. Definitions Stated formally, if (''A'',→) is an abstract rewriting system, ''x''∈''A'' is in normal form if no ''y''∈''A'' exists such that ''x''→''y'', i.e. ''x'' is an irreducible term. An object ''a'' is weakly normalizing if there exists at least one particular sequence of rewrites starting from ''a'' that eventually yields a normal form. A rewriting system has the weak normalization property or is ''(weakly) normalizing'' (WN) if every object is weakly normalizing. An object ''a'' is strongly normalizing if every sequence of rewrites starting from ''a'' eventually terminates with a normal form. An abstract rewriting system is ''strongly normalizing'', ''terminating'', ''noetherian'', or has the (str ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Prolog

Prolog is a logic programming language associated with artificial intelligence and computational linguistics. Prolog has its roots in first-order logic, a formal logic, and unlike many other programming languages, Prolog is intended primarily as a declarative programming language: the program logic is expressed in terms of relations, represented as facts and rules. A computation is initiated by running a ''query'' over these relations. The language was developed and implemented in Marseille, France, in 1972 by Alain Colmerauer with Philippe Roussel, based on Robert Kowalski's procedural interpretation of Horn clauses at University of Edinburgh. Prolog was one of the first logic programming languages and remains the most popular such language today, with several free and commercial implementations available. The language has been used for theorem proving, expert systems, term rewriting, type systems, and automated planning, as well as its original intended field of use, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

First-order Resolution

In mathematical logic and automated theorem proving, resolution is a rule of inference leading to a refutation complete theorem-proving technique for sentences in propositional logic and first-order logic. For propositional logic, systematically applying the resolution rule acts as a decision procedure for formula unsatisfiability, solving the (complement of the) Boolean satisfiability problem. For first-order logic, resolution can be used as the basis for a semi-algorithm for the unsatisfiability problem of first-order logic, providing a more practical method than one following from Gödel's completeness theorem. The resolution rule can be traced back to Davis and Putnam (1960); however, their algorithm required trying all ground instances of the given formula. This source of combinatorial explosion was eliminated in 1965 by John Alan Robinson's syntactical unification algorithm, which allowed one to instantiate the formula during the proof "on demand" just as far as needed ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Craig Interpolation

In mathematical logic, Craig's interpolation theorem is a result about the relationship between different logical theories. Roughly stated, the theorem says that if a formula φ implies a formula ψ, and the two have at least one atomic variable symbol in common, then there is a formula ρ, called an interpolant, such that every non-logical symbol in ρ occurs both in φ and ψ, φ implies ρ, and ρ implies ψ. The theorem was first proved for first-order logic by William Craig in 1957. Variants of the theorem hold for other logics, such as propositional logic. A stronger form of Craig's interpolation theorem for first-order logic was proved by Roger Lyndon in 1959; the overall result is sometimes called the Craig–Lyndon theorem. Example In propositional logic, let ::: \varphi = \lnot(P \land Q) \to (\lnot R \land Q) ::: \psi = (S \to P) \lor (S \to \lnot R) . Then \varphi tautologically implies \psi. This can be verified by writing \varphi in conjunctive normal fo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |