
Cross-spectrum

In time series analysis, the cross-spectrum is used as part of a frequency domain analysis of the cross-correlation or cross-covariance between two time series.

Definition. Let $(X_t, Y_t)$ represent a pair of stochastic processes that are jointly wide sense stationary with autocovariance functions $\gamma_{xx}$ and $\gamma_{yy}$ and cross-covariance function $\gamma_{xy}$. Then the cross-spectrum $\Gamma_{xy}$ is defined as the Fourier transform of $\gamma_{xy}$:

$$\Gamma_{xy}(f) = \mathcal{F}\{\gamma_{xy}\}(f) = \sum_{\tau=-\infty}^{\infty} \gamma_{xy}(\tau)\, e^{-2\pi i \tau f},$$

where

$$\gamma_{xy}(\tau) = \operatorname{E}[(x_t - \mu_x)(y_{t+\tau} - \mu_y)].$$

The cross-spectrum can be represented (i) as a decomposition into its real part, the co-spectrum $\Lambda_{xy}$, and its imaginary part, which defines the quadrature spectrum $\Psi_{xy}$:

$$\Gamma_{xy}(f) = \Lambda_{xy}(f) - i\,\Psi_{xy}(f),$$

and (ii) in polar coordinates:

$$\Gamma_{xy}(f) = A_{xy}(f)\, e^{-i\Phi_{xy}(f)}.$$

Here the amplitude spectrum $A_{xy}$ is given by

$$A_{xy}(f) = \left(\Lambda_{xy}(f)^2 + \Psi_{xy}(f)^2\right)^{1/2},$$

and the phase spectrum $\Phi_{xy}$ is given by $\tan^{-1}\!\left(\Psi_{xy}(f)/\Lambda_{xy}(f)\right)$ ...
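The definition can be turned into a small numerical sketch. The example below is pure Python and is an assumption, not a method from the text: it estimates $\gamma_{xy}(\tau)$ with the biased sample cross-covariance (dividing by $n$), truncates the infinite sum to the lags $|\tau| \le n-1$ that a length-$n$ sample supports, and splits the result into co-spectrum, quadrature spectrum, amplitude and phase using the convention $\Gamma_{xy} = \Lambda_{xy} - i\Psi_{xy} = A_{xy}e^{-i\Phi_{xy}}$. The function names are hypothetical, and this raw, unsmoothed estimate is a teaching aid rather than a practical spectral estimator.

```python
import cmath
import math


def cross_covariance(x, y, lag):
    """Biased sample estimate of gamma_xy(lag) = E[(x_t - mu_x)(y_{t+lag} - mu_y)]."""
    n = len(x)
    if len(y) != n:
        raise ValueError("x and y must have the same length")
    mx, my = sum(x) / n, sum(y) / n
    total = 0.0
    for t in range(n):
        s = t + lag
        if 0 <= s < n:  # keep only pairs where both samples exist
            total += (x[t] - mx) * (y[s] - my)
    return total / n


def cross_spectrum(x, y, f):
    """Gamma_xy(f) = sum over tau of gamma_xy(tau) * exp(-2*pi*i*tau*f).

    f is in cycles per sample; the sum runs over lags -(n-1) .. n-1.
    """
    n = len(x)
    return sum(
        cross_covariance(x, y, tau) * cmath.exp(-2j * math.pi * tau * f)
        for tau in range(-(n - 1), n)
    )


def decompose(gamma):
    """Split Gamma = Lambda - i*Psi = A * exp(-i*Phi) into its parts."""
    co = gamma.real               # co-spectrum Lambda
    quad = -gamma.imag            # quadrature spectrum Psi (minus sign from the convention)
    amplitude = abs(gamma)        # A = sqrt(Lambda^2 + Psi^2)
    phase = math.atan2(quad, co)  # Phi = four-quadrant arctan(Psi / Lambda)
    return co, quad, amplitude, phase


if __name__ == "__main__":
    x = [0.0, 1.0, 0.0, -1.0] * 8  # period-4 signal, n = 32
    y = x[-1:] + x[:-1]            # y_t = x_{t-1}: y lags x by one sample
    co, quad, amp, phase = decompose(cross_spectrum(x, y, 0.25))
    print(f"A = {amp:.3f}, Phi = {phase:.3f} rad")  # Phi = 2*pi*f*delay = pi/2
```

A one-sample delay at $f = 0.25$ cycles per sample appears as a phase of $2\pi f \cdot 1 = \pi/2$. Because the shift in this toy example is circular and $f$ is a Fourier frequency of the 32-sample record, the match holds up to rounding; for real data the phase estimate is noisy.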

Cross-correlation

In signal processing, cross-correlation is a measure of similarity of two series as a function of the displacement of one relative to the other. This is also known as a *sliding dot product* or *sliding inner product*. It is commonly used for searching a long signal for a shorter, known feature. It has applications in pattern recognition, single particle analysis, electron tomography, averaging, cryptanalysis, and neurophysiology. The cross-correlation is similar in nature to the convolution of two functions. In an autocorrelation, which is the cross-correlation of a signal with itself, there will always be a peak at a lag of zero, and its size will be the signal energy.

In probability and statistics, the term *cross-correlations* refers to the correlations between the entries of two random vectors $\mathbf{X}$ and $\mathbf{Y}$, while the *correlations* of a random vector $\mathbf{X}$ are the correlations between the entries of $\mathbf{X}$ itself, those forming the correlation matri ...
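The sliding dot product and its use for locating a short, known feature can be sketched in a few lines of pure Python. This is an illustrative sketch assuming real-valued sequences (complex data would need the template conjugated); the function names are hypothetical. Only the lags where the template lies entirely inside the signal are computed, and no normalization is applied. In practice a normalized cross-correlation is often preferred so that high-energy stretches of the signal do not dominate.

```python
def cross_correlate(signal, template):
    """Sliding dot product r[k] = sum_j signal[k + j] * template[j].

    Only 'valid' lags (template fully inside the signal) are computed,
    so the result has len(signal) - len(template) + 1 entries.
    """
    m = len(template)
    if m == 0 or m > len(signal):
        raise ValueError("template must be non-empty and no longer than signal")
    return [
        sum(signal[k + j] * template[j] for j in range(m))
        for k in range(len(signal) - m + 1)
    ]


def best_lag(signal, template):
    """Displacement at which the template best matches the signal."""
    r = cross_correlate(signal, template)
    return max(range(len(r)), key=r.__getitem__)


if __name__ == "__main__":
    template = [1, 2, 3, 2, 1]
    signal = [0] * 10 + template + [0] * 10  # feature hidden at offset 10
    print(best_lag(signal, template))        # 10
```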

Scaled Correlation

In statistics, scaled correlation is a form of a coefficient of correlation applicable to data that have a temporal component, such as time series. It is the average short-term correlation. If the signals have multiple components (slow and fast), the scaled coefficient of correlation can be computed only for the fast components of the signals, ignoring the contributions of the slow components (Nikolić D, Muresan RC, Feng W, Singer W (2012). Scaled correlation analysis: a better way to compute a cross-correlogram. *European Journal of Neuroscience*, pp. 1–21. doi:10.1111/j.1460-9568.2011.07987.x. http://www.danko-nikolic.com/wp-content/uploads/2012/03/Scaled-correlation-analysis.pdf). This filtering-like operation has the advantage of not requiring assumptions about the sinusoidal nature of the signals. For example, in studies of brain signals, researchers are often interested in the high-frequency components (beta and gamma ranges; 25–80 Hz) and may not be interested in ...
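A minimal sketch of "average short-term correlation", assuming scaled correlation at scale $s$ is the mean of ordinary Pearson correlations computed over consecutive, non-overlapping segments of $s$ samples. Segments in which either signal is constant are skipped here because their correlation is undefined; that handling, the function names, and the test signals are assumptions for illustration. In the example, a shared fast oscillation is buried under opposite slow trends: the ordinary correlation is negative, while the scaled correlation reflects the fast component.

```python
import math


def pearson(a, b):
    """Ordinary Pearson correlation of two equal-length sequences (None if undefined)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    va = sum((u - ma) ** 2 for u in a)
    vb = sum((v - mb) ** 2 for v in b)
    if va == 0 or vb == 0:
        return None  # a constant segment has no defined correlation
    return cov / math.sqrt(va * vb)


def scaled_correlation(x, y, s):
    """Average of the Pearson correlations over segments of length s.

    Trailing samples that do not fill a whole segment are ignored.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    rs = []
    for start in range(0, len(x) - s + 1, s):
        r = pearson(x[start:start + s], y[start:start + s])
        if r is not None:
            rs.append(r)
    if not rs:
        raise ValueError("no segment with a defined correlation")
    return sum(rs) / len(rs)


if __name__ == "__main__":
    fast = [1.0, -1.0] * 50                         # shared fast component
    x = [f + 0.1 * t for t, f in enumerate(fast)]  # plus a rising slow trend
    y = [f - 0.1 * t for t, f in enumerate(fast)]  # plus a falling slow trend
    print(pearson(x, y))                # negative: dominated by the opposite trends
    print(scaled_correlation(x, y, 4))  # close to 1: reflects the shared fast part
```

The segment length $s$ sets the boundary between "fast" and "slow": components that change little within $s$ samples are largely removed by the per-segment mean.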

Time Series Analysis

In mathematics, a time series is a series of data points indexed (or listed or graphed) in time order. Most commonly, a time series is a sequence taken at successive equally spaced points in time. Thus it is a sequence of discrete-time data. Examples of time series are heights of ocean tides, counts of sunspots, and the daily closing value of the Dow Jones Industrial Average. A time series is very frequently plotted via a run chart (which is a temporal line chart). Time series are used in statistics, signal processing, pattern recognition, econometrics, mathematical finance, weather forecasting, earthquake prediction, electroencephalography, control engineering, astronomy, communications engineering, and largely in any domain of a ...

Frequency Domain

In physics, electronics, control systems engineering, and statistics, the frequency domain refers to the analysis of mathematical functions or signals with respect to frequency, rather than time. Put simply, a time-domain graph shows how a signal changes over time, whereas a frequency-domain graph shows how much of the signal lies within each given frequency band over a range of frequencies. A frequency-domain representation can also include information on the phase shift that must be applied to each sinusoid in order to be able to recombine the frequency components to recover the original time signal. A given function or signal can be converted between the time and frequency domains with a pair of mathematical operators called transforms. An example is the Fourier transform, which converts a time function into a complex-valued sum or integral of sine waves of different frequencies, with amplitudes and phases, each of which represents a frequency component. The "spectr ...

Cross-covariance

In probability and statistics, given two stochastic processes $\left\{X_t\right\}$ and $\left\{Y_t\right\}$, the cross-covariance is a function that gives the covariance of one process with the other at pairs of time points. With the usual notation $\operatorname{E}$ for the expectation operator, if the processes have the mean functions $\mu_X(t) = \operatorname{E}[X_t]$ and $\mu_Y(t) = \operatorname{E}[Y_t]$, then the cross-covariance is given by

$$\operatorname{K}_{XY}(t_1,t_2) = \operatorname{cov}(X_{t_1}, Y_{t_2}) = \operatorname{E}[(X_{t_1} - \mu_X(t_1))(Y_{t_2} - \mu_Y(t_2))] = \operatorname{E}[X_{t_1} Y_{t_2}] - \mu_X(t_1)\,\mu_Y(t_2).$$

Cross-covariance is related to the more commonly used cross-correlation of the processes in question.

In the case of two random vectors $\mathbf{X} = (X_1, X_2, \ldots, X_p)^{\mathrm T}$ and $\mathbf{Y} = (Y_1, Y_2, \ldots, Y_q)^{\mathrm T}$, the cross-covariance is a $p \times q$ matrix $\operatorname{K}_{\mathbf{X}\mathbf{Y}}$ (often denoted $\operatorname{cov}(X,Y)$) with entries $\operatorname{K}_{\mathbf{X}\mathbf{Y}}(j,k) = \operatorname{cov}(X_j, Y_k)$. Thus the term *cross-covariance* ...
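For the random-vector case, the $p \times q$ matrix $\operatorname{K}_{\mathbf{X}\mathbf{Y}}$ can be estimated from $N$ paired observations by replacing each expectation with a sample average. The pure-Python sketch below uses the unbiased $1/(N-1)$ normalization, which is one common convention rather than the only one; the function name is hypothetical.

```python
def cross_covariance_matrix(xs, ys):
    """Sample estimate of K_XY, a p x q matrix with entries cov(X_j, Y_k).

    xs: N observations of the p-dimensional vector X (list of length-p lists)
    ys: N paired observations of the q-dimensional vector Y
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    p, q = len(xs[0]), len(ys[0])
    mean_x = [sum(obs[j] for obs in xs) / n for j in range(p)]
    mean_y = [sum(obs[k] for obs in ys) / n for k in range(q)]
    return [
        [
            sum((xo[j] - mean_x[j]) * (yo[k] - mean_y[k]) for xo, yo in zip(xs, ys))
            / (n - 1)
            for k in range(q)
        ]
        for j in range(p)
    ]


if __name__ == "__main__":
    xs = [[1.0, 0.0], [2.0, 0.0], [3.0, 0.0]]  # X_2 is constant
    ys = [[2.0], [4.0], [6.0]]                 # Y_1 = 2 * X_1
    print(cross_covariance_matrix(xs, ys))     # [[2.0], [0.0]]
```

Swapping the arguments gives $\operatorname{K}_{\mathbf{Y}\mathbf{X}} = \operatorname{K}_{\mathbf{X}\mathbf{Y}}^{\mathrm T}$, which is a quick sanity check on any implementation.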

Stochastic Process

In probability theory and related fields, a stochastic or random process is a mathematical object usually defined as a family of random variables. Stochastic processes are widely used as mathematical models of systems and phenomena that appear to vary in a random manner. Examples include the growth of a bacterial population, an electrical current fluctuating due to thermal noise, or the movement of a gas molecule. Stochastic processes have applications in many disciplines such as biology, chemistry, ecology, neuroscience, physics, image processing, signal processing, control theory, information theory, computer science, cryptography and telecommunications. Furthermore, seemingly random changes in financial markets have motivated the extensive use of stochastic processes in finance. Applications and the study of phenomena have in turn inspired the proposal of new stochastic processes. Examples of such stochastic processes include the Wiener process or Brownia ...

Wide Sense Stationary

In mathematics and statistics, a stationary process (also called a strict/strictly stationary process or strong/strongly stationary process) is a stochastic process whose unconditional joint probability distribution does not change when shifted in time. Consequently, parameters such as mean and variance also do not change over time. If you draw a line through the middle of a stationary process, it should be flat; it may have 'seasonal' cycles, but overall it trends neither up nor down. Since stationarity is an assumption underlying many statistical procedures used in time series analysis, non-stationary data are often transformed to become stationary. The most common cause of violation of stationarity is a trend in the mean, which can be due either to the presence of a unit root or to a deterministic trend. In the former case of a unit root, stochastic shocks have permanent effects, and the process is not mean-reverting. In the latter case of a deterministic trend, the process is called ...
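One common transformation toward stationarity is differencing. As a minimal pure-Python sketch (the function name is hypothetical), first differencing a random walk, the textbook unit-root process, recovers its shocks, while first differencing a purely linear deterministic trend leaves a constant. Whether differencing or explicit detrending is the better choice depends on which of the two cases applies, so this is only an illustration of the mechanics.

```python
from itertools import accumulate


def difference(series, lag=1):
    """Lag-k difference d_t = x_t - x_{t-lag}; the result is `lag` items shorter."""
    if lag < 1:
        raise ValueError("lag must be a positive integer")
    return [series[t] - series[t - lag] for t in range(lag, len(series))]


if __name__ == "__main__":
    shocks = [0.5, -1.0, 0.25, 2.0, -0.75]
    walk = list(accumulate(shocks))             # unit root: x_t = x_{t-1} + e_t
    print(difference(walk))                     # [-1.0, 0.25, 2.0, -0.75]: the shocks again
    trend = [3.0 + 0.5 * t for t in range(5)]   # deterministic linear trend
    print(difference(trend))                    # [0.5, 0.5, 0.5, 0.5]
```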

Autocovariance

In probability theory and statistics, given a stochastic process, the autocovariance is a function that gives the covariance of the process with itself at pairs of time points. Autocovariance is closely related to the autocorrelation of the process in question.

Definition. With the usual notation $\operatorname{E}$ for the expectation operator, if the stochastic process $\left\{X_t\right\}$ has the mean function $\mu_t = \operatorname{E}[X_t]$, then the autocovariance is given by

$$\operatorname{K}_{XX}(t_1,t_2) = \operatorname{cov}(X_{t_1}, X_{t_2}) = \operatorname{E}[(X_{t_1} - \mu_{t_1})(X_{t_2} - \mu_{t_2})] = \operatorname{E}[X_{t_1} X_{t_2}] - \mu_{t_1}\mu_{t_2},$$

where $t_1$ and $t_2$ are two moments in time.

For a weakly stationary process: if $\left\{X_t\right\}$ is a weakly stationary (WSS) process, then the following are true:

$$\mu_{t_1} = \mu_{t_2} \triangleq \mu \quad \text{for all } t_1, t_2$$

and ...
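For a weakly stationary process the autocovariance depends only on the lag $h = t_2 - t_1$, so it can be estimated from a single realization. The pure-Python sketch below assumes the common biased estimator $\hat\gamma(h) = \frac{1}{n}\sum_{t=1}^{n-|h|}(x_t - \bar x)(x_{t+|h|} - \bar x)$; the function name is hypothetical.

```python
def sample_autocovariance(x, h):
    """Biased estimate of gamma(h) for a weakly stationary series.

    Uses the overall sample mean and divides by n (not n - |h|), which keeps
    the estimated autocovariance sequence positive semi-definite.
    """
    n = len(x)
    h = abs(h)  # gamma(-h) = gamma(h) for a real stationary process
    if h >= n:
        raise ValueError("lag must be smaller than the series length")
    mean = sum(x) / n
    return sum((x[t] - mean) * (x[t + h] - mean) for t in range(n - h)) / n


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0]
    print(sample_autocovariance(x, 0))  # 1.25, the (biased) sample variance
    print(sample_autocovariance(x, 1))  # 0.3125
```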

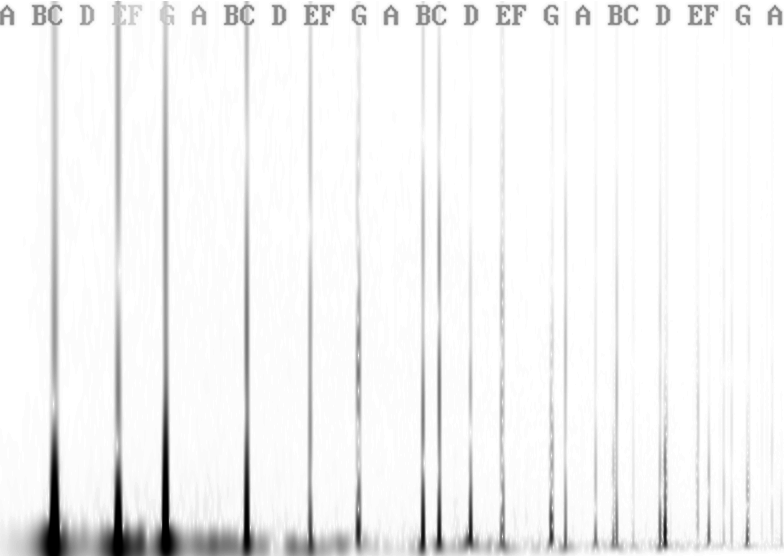

Fourier Transform

A Fourier transform (FT) is a mathematical transform that decomposes functions into frequency components, which are represented by the output of the transform as a function of frequency. Most commonly, functions of time or space are transformed, which outputs a function of temporal frequency or spatial frequency, respectively. That process is also called *analysis*. An example application would be decomposing the waveform of a musical chord into terms of the intensity of its constituent pitches. The term *Fourier transform* refers to both the frequency domain representation and the mathematical operation that associates the frequency domain representation to a function of space or time. The Fourier transform of a function is a complex-valued function representing the complex sinusoids that comprise the original function. For each frequency, the magnitude (absolute value) of the complex value represents the amplitude of a constituent complex sinusoid ...
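Numerically, the transform is usually computed in its discrete form, the discrete Fourier transform (DFT), $X_k = \sum_{t=0}^{n-1} x_t\, e^{-2\pi i k t / n}$. The sketch below is a naive $O(n^2)$ pure-Python DFT (in practice an FFT routine would be used) applied to a signal built from two sinusoids, in the spirit of the chord example: the magnitude $|X_k|$ is large only at the two constituent frequencies. The function name and test signal are illustrative.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform X[k] = sum_t x[t] * exp(-2*pi*i*k*t/n)."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


if __name__ == "__main__":
    n = 64
    # Two "pitches": 5 and 12 cycles per record, with amplitudes 1.0 and 0.5.
    x = [math.sin(2 * math.pi * 5 * t / n) + 0.5 * math.sin(2 * math.pi * 12 * t / n)
         for t in range(n)]
    X = dft(x)
    # A real sinusoid of amplitude a at bin k gives |X[k]| = a * n / 2.
    peaks = sorted(range(1, n // 2), key=lambda k: abs(X[k]), reverse=True)[:2]
    print(sorted(peaks))  # [5, 12]
```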

Coherence (Signal Processing)

In signal processing, the coherence is a statistic that can be used to examine the relation between two signals or data sets. It is commonly used to estimate the power transfer between input and output of a linear system. If the signals are ergodic and the system function is linear, it can be used to estimate the causality between the input and output.

Definition and formulation. The coherence (sometimes called magnitude-squared coherence) between two signals $x(t)$ and $y(t)$ is a real-valued function that is defined as

$$C_{xy}(f) = \frac{|G_{xy}(f)|^2}{G_{xx}(f)\, G_{yy}(f)},$$

where $G_{xy}(f)$ is the cross-spectral density between $x$ and $y$, and $G_{xx}(f)$ and $G_{yy}(f)$ are the auto spectral densities of $x$ and $y$, respectively. The magnitude of the spectral density is denoted as $|G|$. Given the restrictions noted above (ergodicity, linearity), the coherence function estimates the extent to which $y(t)$ may be predicted from $x(t)$ by an optimum linear least squares function. Values of coherence always satisfy $0 \le C_{xy}(f) \le 1$. For an idea ...
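Estimating coherence requires averaging: if $G_{xy}$, $G_{xx}$ and $G_{yy}$ are each computed from a single DFT of the whole record, the ratio is identically 1. The sketch below assumes a simplified Welch-style estimate: raw spectra are averaged over non-overlapping, mean-removed, unwindowed segments and evaluated at a single DFT bin $k$ (frequency $k/L$ cycles per sample for segment length $L$). Normalization constants cancel in the ratio, so they are omitted. The function names are hypothetical, and practical estimators typically add windowing and overlapping segments.

```python
import cmath
import math
import random


def dft_bin(seg, k):
    """DFT coefficient of one segment at integer bin k."""
    n = len(seg)
    return sum(v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(seg))


def coherence(x, y, seg_len, k):
    """Magnitude-squared coherence |G_xy|^2 / (G_xx * G_yy) at bin k."""
    gxx = gyy = 0.0
    gxy = 0j
    for start in range(0, min(len(x), len(y)) - seg_len + 1, seg_len):
        xs = x[start:start + seg_len]
        ys = y[start:start + seg_len]
        mx, my = sum(xs) / seg_len, sum(ys) / seg_len
        X = dft_bin([v - mx for v in xs], k)
        Y = dft_bin([v - my for v in ys], k)
        gxx += abs(X) ** 2        # accumulated auto-spectrum of x (unscaled)
        gyy += abs(Y) ** 2        # accumulated auto-spectrum of y
        gxy += X.conjugate() * Y  # accumulated cross-spectrum
    if gxx == 0 or gyy == 0:
        raise ValueError("no power at this bin; coherence is undefined")
    return abs(gxy) ** 2 / (gxx * gyy)


if __name__ == "__main__":
    rng = random.Random(0)
    x = [rng.gauss(0, 1) for _ in range(1024)]
    y = [3 * v for v in x]                      # y is a linear function of x
    z = [rng.gauss(0, 1) for _ in range(1024)]  # z is independent of x
    print(coherence(x, y, 32, 5))    # 1 up to rounding
    print(coherence(x, z, 32, 5))    # small, roughly 1 / (number of segments)
    print(coherence(x, z, 1024, 5))  # one segment: 1 up to rounding, uninformative
```

The bound $0 \le C_{xy} \le 1$ holds for this estimator by the Cauchy–Schwarz inequality applied to the accumulated sums.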

Spectral Density

The power spectrum $S_{xx}(f)$ of a time series $x(t)$ describes the distribution of power into frequency components composing that signal. According to Fourier analysis, any physical signal can be decomposed into a number of discrete frequencies, or a spectrum of frequencies over a continuous range. The statistical average of a certain signal or sort of signal (including noise) as analyzed in terms of its frequency content is called its spectrum. When the energy of the signal is concentrated around a finite time interval, especially if its total energy is finite, one may compute the energy spectral density. More commonly used is the power spectral density (or simply power spectrum), which applies to signals existing over *all* time, or over a time period large enough (especially in relation to the duration of a measurement) that it could as well have been over an infinite time interval. The power spectral density (PSD) then refers to the spectral energy distribution that would b ...
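The simplest estimate of the power spectral density of a sampled series is the periodogram, $P_k = |X_k|^2 / n$, where $X_k$ is the DFT of the $n$ samples. The pure-Python sketch below (hypothetical function name) also checks Parseval's theorem, under which the periodogram values average to the mean power $\frac{1}{n}\sum_t x_t^2$. The raw periodogram is noisy, so practical PSD estimators usually average or smooth it; this is only an illustration of how power is distributed across frequency bins.

```python
import cmath
import math


def periodogram(x):
    """Raw periodogram P[k] = |X[k]|^2 / n at frequencies k / n cycles per sample."""
    n = len(x)
    X = [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
         for k in range(n)]
    return [abs(c) ** 2 / n for c in X]


if __name__ == "__main__":
    n = 16
    x = [math.cos(2 * math.pi * 3 * t / n) for t in range(n)]  # 3 cycles per record
    p = periodogram(x)
    # Power sits at bins 3 and n - 3 (the positive and negative frequency).
    print([k for k, v in enumerate(p) if v > 1e-9])  # [3, 13]
    # Parseval: the mean of P[k] equals the mean power of x (0.5 for a unit cosine).
    print(sum(p) / n, sum(v * v for v in x) / n)
```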
