
Context-free Grammar

In formal language theory, a context-free grammar (CFG) is a formal grammar whose production rules are of the form A → α, with A a single nonterminal symbol and α a string of terminals and/or nonterminals (α can be empty). A formal grammar is "context-free" if its production rules can be applied regardless of the context of a nonterminal: no matter which symbols surround it, the single nonterminal on the left-hand side can always be replaced by the right-hand side. This is what distinguishes it from a context-sensitive grammar. A formal grammar is essentially a set of production rules that describe all possible strings in a given formal language. Production rules are simple replacements. For example, the first rule in the picture, ⟨Stmt⟩ → ⟨Id⟩ = ⟨Expr⟩ ; replaces ⟨Stmt⟩ with ⟨Id⟩ = ⟨Expr⟩ ;. There can be multiple replacement rules for a given nonterminal symbol. The ...
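The replacement idea above can be sketched in a few lines of Python. This is a minimal illustration, not a parser: the toy grammar S → a S b | (empty) and the names `GRAMMAR`, `apply_leftmost`, and `derive` are assumptions made up for this example and do not come from the text.

```python
from typing import Dict, List, Tuple

# Hypothetical toy grammar (not from the text): S -> a S b | (empty).
# Each nonterminal maps to its list of right-hand sides; a right-hand
# side is a tuple of symbols, and the empty tuple is the empty string.
GRAMMAR: Dict[str, List[Tuple[str, ...]]] = {
    "S": [("a", "S", "b"), ()],
}


def apply_leftmost(form: Tuple[str, ...], rule_index: int) -> Tuple[str, ...]:
    """Rewrite the leftmost nonterminal in `form` with its rule number `rule_index`."""
    for i, symbol in enumerate(form):
        if symbol in GRAMMAR:
            rhs = GRAMMAR[symbol][rule_index]
            # Context-free: only the nonterminal itself is inspected,
            # never the symbols to its left or right.
            return form[:i] + rhs + form[i + 1:]
    raise ValueError("no nonterminal left to rewrite")


def derive(start: str, rule_indices: List[int]) -> List[Tuple[str, ...]]:
    """Return every sentential form produced by applying the chosen rules in order."""
    forms = [(start,)]
    for index in rule_indices:
        forms.append(apply_leftmost(forms[-1], index))
    return forms


if __name__ == "__main__":
    for form in derive("S", [0, 0, 1]):
        print(" ".join(form) or "(empty)")
```

Applying rule 0 twice and then rule 1 rewrites S to a S b, then to a a S b b, and finally to a a b b.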

C Grammar Example Derivation Svg

C, or c, is the third letter in the Latin alphabet, used in the modern English alphabet, the alphabets of other western European languages and others worldwide. Its name in English is cee, plural cees. History: "C" comes from the same letter as "G". The Semites named it gimel. The sign is possibly adapted from an Egyptian hieroglyph for a staff sling, which may have been the meaning of the name gimel. Another possibility is that it depicted a camel, the Semitic name for which was gamal. Barry B. Powell, a specialist in the history of writing, states "It is hard to imagine how gimel = "camel" can be derived from the picture of a camel (it may show his hump, or his head and neck!)". In the Etruscan language, plosive consonants had no contrastive voicing, so the Greek Γ (Gamma) was adopted into the Etruscan alphabet to represent /k/. Already in the Western Greek alphabet, Gamma first took a '' form in Early Etruscan, then '' in Classical Etru ...

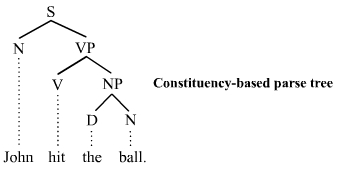

Phrase Structure Grammar

The term phrase structure grammar was originally introduced by Noam Chomsky as the term for grammar studied previously by Emil Post and Axel Thue (Post canonical systems). Some authors, however, reserve the term for more restricted grammars in the Chomsky hierarchy: context-sensitive grammars or context-free grammars. In a broader sense, phrase structure grammars are also known as constituency grammars. The defining trait of phrase structure grammars is thus their adherence to the constituency relation, as opposed to the dependency relation of dependency grammars. Constituency relation: In linguistics, phrase structure grammars are all those grammars that are based on the constituency relation, as opposed to the dependency relation associated with dependency grammars; hence, phrase structure grammars are also known as constituency grammars. Any of several related theories for the parsing of natural language qualify as constituency grammars, and most of them have been develope ...

ALGOL (Programming Language)

ALGOL (short for "Algorithmic Language") is a family of imperative computer programming languages originally developed in 1958. ALGOL heavily influenced many other languages and was the standard method for algorithm description used by the Association for Computing Machinery (ACM) in textbooks and academic sources for more than thirty years. In the sense that the syntax of most modern languages is "Algol-like", it was arguably more influential than three other high-level programming languages among which it was roughly contemporary: FORTRAN, Lisp, and COBOL. It was designed to avoid some of the perceived problems with FORTRAN and eventually gave rise to many other programming languages, including PL/I, Simula, BCPL, B, Pascal, and C. ALGOL introduced code blocks and the begin...end pairs for delimiting them. It was also the first language implementing nested function definitions with lexical scope. Moreover, it was the first programming language which gave detailed att ...

Generative Grammar

Generative grammar, or generativism, is a linguistic theory that regards linguistics as the study of a hypothesised innate grammatical structure. It is a biological or biologistic modification of earlier structuralist theories of linguistics, deriving ultimately from glossematics. Generative grammar considers grammar as a system of rules that generates exactly those combinations of words that form grammatical sentences in a given language. It is a system of explicit rules that may apply repeatedly to generate an indefinite number of sentences which can be as long as one wants them to be. The difference from structural and functional models is that the object is base-generated within the verb phrase in generative grammar. This purportedly cognitive structure is thought of as being a part of a universal grammar, a syntactic structure which is caused by a genetic mutation in humans. Generativists have created numerous theories to make the NP VP (NP) analysis work in natural la ...

Chomsky Hierarchy

In formal language theory, computer science and linguistics, the Chomsky hierarchy (also referred to as the Chomsky–Schützenberger hierarchy) is a containment hierarchy of classes of formal grammars. This hierarchy of grammars was described by Noam Chomsky in 1956. It is also named after Marcel-Paul Schützenberger, who played a crucial role in the development of the theory of formal languages. Formal grammars: A formal grammar of this type consists of a finite set of production rules (left-hand side → right-hand side), where each side consists of a finite sequence of the following symbols:
* a finite set of nonterminal symbols (indicating that some production rule can yet be applied)
* a finite set of terminal symbols (indicating that no production rule can be applied)
* a start symbol (a distinguished nonterminal symbol)

A formal grammar provides an axiom schema for (or generates) a formal language, which is a (usually infinite) s ...
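The components listed above map naturally onto a small data structure. The following Python sketch is illustrative only: the class name `FormalGrammar`, the method `is_well_formed`, and the requirement that terminals and nonterminals be disjoint are assumptions of this example (the disjointness condition is standard but is not stated in the text).

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

Symbol = str
# A production rule is a pair (left-hand side, right-hand side),
# each side being a finite sequence of symbols.
Rule = Tuple[Tuple[Symbol, ...], Tuple[Symbol, ...]]


@dataclass(frozen=True)
class FormalGrammar:
    nonterminals: FrozenSet[Symbol]
    terminals: FrozenSet[Symbol]
    start: Symbol
    rules: Tuple[Rule, ...]

    def is_well_formed(self) -> bool:
        """Check that the start symbol is a nonterminal, that the two symbol
        sets do not overlap (an assumption of this sketch), and that every
        rule uses only known symbols."""
        alphabet = self.nonterminals | self.terminals
        return (
            self.start in self.nonterminals
            and self.nonterminals.isdisjoint(self.terminals)
            and all(sym in alphabet for lhs, rhs in self.rules for sym in lhs + rhs)
        )


# Hypothetical example grammar: S -> a S b | (empty)
example = FormalGrammar(
    nonterminals=frozenset({"S"}),
    terminals=frozenset({"a", "b"}),
    start="S",
    rules=((("S",), ("a", "S", "b")), (("S",), ())),
)
```

Representing both sides of a rule as sequences keeps the structure general enough for every class in the hierarchy; restricting the left-hand side to a single nonterminal would narrow it to the context-free case.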

Axel Thue

Axel Thue (19 February 1863 – 7 March 1922) was a Norwegian mathematician, known for his original work in diophantine approximation and combinatorics. Work: Thue published his first important paper in 1909. He stated in 1914 the so-called word problem for semigroups or Thue problem, closely related to the halting problem (Ronald V. Book and Friedrich Otto, String-rewriting Systems, Springer, 1993, p. 36). His only known PhD student was Thoralf Skolem. The esoteric programming language Thue is named after him. Axel Thue's private archive exists at the NTNU University Library (Dorabiblioteket).

Semi-Thue System

In theoretical computer science and mathematical logic, a string rewriting system (SRS), historically called a semi-Thue system, is a rewriting system over strings from a (usually finite) alphabet. Given a binary relation R between fixed strings over the alphabet, called rewrite rules, denoted by s → t, an SRS extends the rewriting relation to all strings in which the left- and right-hand side of the rules appear as substrings, that is usv → utv, where s, t, u, and v are strings. The notion of a semi-Thue system essentially coincides with the presentation of a monoid. Thus they constitute a natural framework for solving the word problem for monoids and groups. An SRS can be defined directly as an abstract rewriting system. It can also be seen as a restricted kind of a term rewriting system. As a formalism, string rewriting systems are Turing complete. The semi-Thue name comes from the Norwegian mathematician Axel Thue, who introduced systematic treatment of strin ...
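The extension from a rule s → t to usv → utv can be made concrete with a short Python sketch that computes every string reachable in one rewriting step. The function name `one_step` and the sample rule ab → ba are assumptions chosen for illustration; they are not taken from the text.

```python
from typing import List, Set, Tuple


def one_step(word: str, rules: List[Tuple[str, str]]) -> Set[str]:
    """Return all strings u + t + v such that word == u + s + v for some rule (s, t)."""
    results: Set[str] = set()
    for s, t in rules:
        position = word.find(s)
        while position != -1:
            u, v = word[:position], word[position + len(s):]
            results.add(u + t + v)
            # Continue from the next index so overlapping occurrences are found too.
            position = word.find(s, position + 1)
    return results


if __name__ == "__main__":
    # Hypothetical rule set with a single rule: ab -> ba
    print(sorted(one_step("abab", [("ab", "ba")])))  # ['abba', 'baab']
```

Each occurrence of the left-hand side yields one successor, so a word may have several one-step rewrites even under a single rule.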

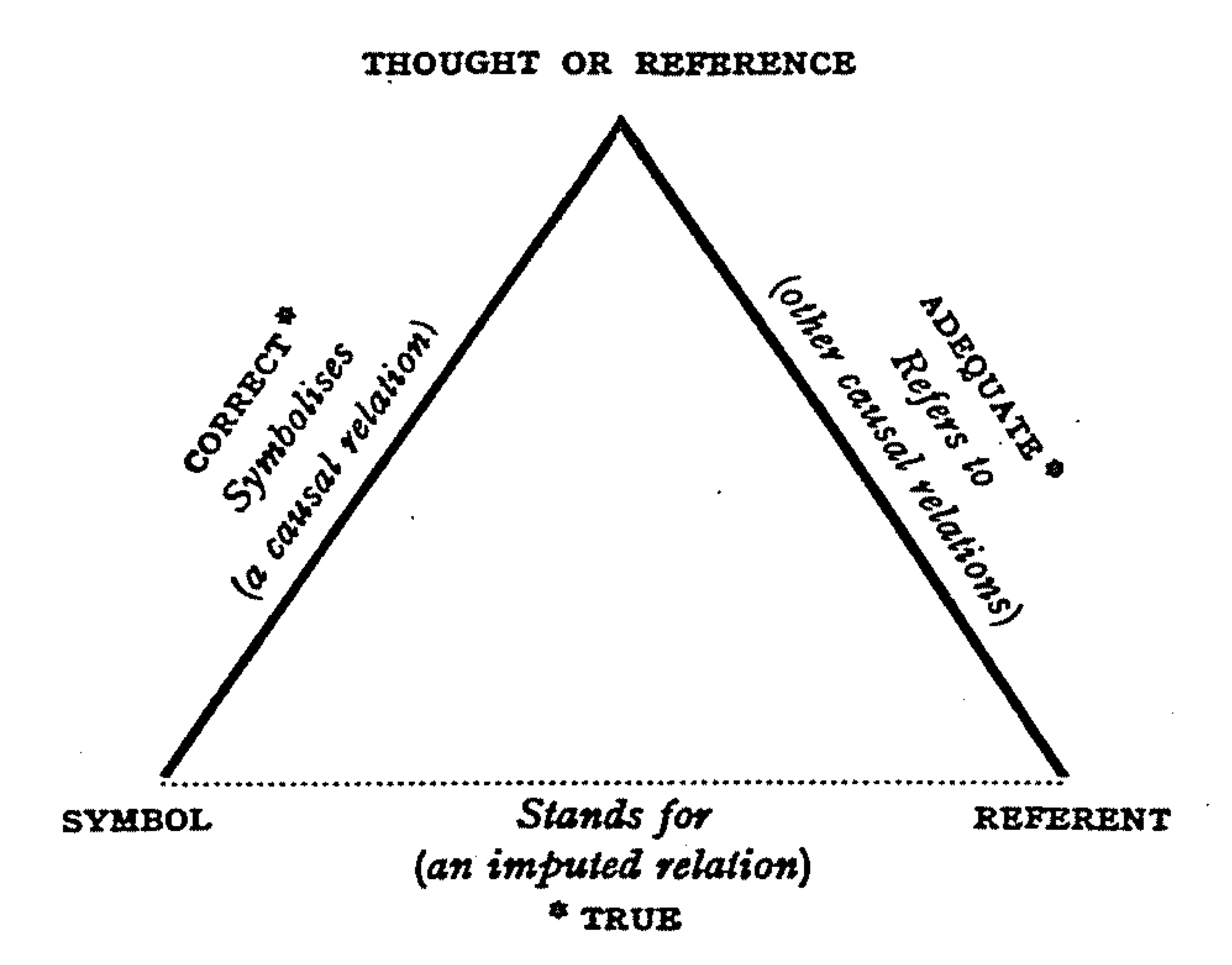

Reference

Reference is a relationship between objects in which one object designates, or acts as a means by which to connect to or link to, another object. The first object in this relation is said to refer to the second object. It is called a name for the second object. The second object, the one to which the first object refers, is called the referent of the first object. A name is usually a phrase or expression, or some other symbolic representation. Its referent may be anything – a material object, a person, an event, an activity, or an abstract concept. References can take on many forms, including: a thought, a sensory perception that is audible (onomatopoeia), visual (text), olfactory, or tactile, emotional state, relationship with other, spacetime coordinate, symbolic or alpha-numeric, a physical object or an energy projection. In some cases, methods are used that intentionally hide the reference from some observers, as in cryptography. References feature in many sph ...

Agreement (linguistics)

In linguistics, agreement or concord occurs when a word changes form depending on the other words to which it relates. It is an instance of inflection, and usually involves making the value of some grammatical category (such as gender or person) "agree" between varied words or parts of the sentence. For example, in Standard English, one may say "I am" or "he is", but not "I is" or "he am". This is because English grammar requires that the verb and its subject agree in person. The pronouns I and he are first and third person respectively, as are the verb forms am and is. The verb form must be selected so that it has the same person as the subject, in contrast to notional agreement, which is based on meaning. By category: Agreement generally involves matching the value of some grammatical category between different constituents of a sentence (or sometimes between sentences, as in some cases where a pronoun is required to agree with its ante ...

Recursion

Recursion (adjective: recursive) occurs when a thing is defined in terms of itself or of its type. Recursion is used in a variety of disciplines ranging from linguistics to logic. The most common application of recursion is in mathematics and computer science, where a function being defined is applied within its own definition. While this apparently defines an infinite number of instances (function values), it is often done in such a way that no infinite loop or infinite chain of references ("crock recursion") can occur. Formal definitions: In mathematics and computer science, a class of objects or methods exhibits recursive behavior when it can be defined by two properties:
* A simple base case (or cases): a terminating scenario that does not use recursion to produce an answer
* A recursive step: a set of rules that reduces all successive cases toward the base case.

For example, the following is a recursive definition of a person's ancestor. One's ances ...
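The two properties above, a base case and a recursive step, can be seen in a short Python sketch. The factorial function is used here purely as a familiar illustration; it is an assumption of this example and is not the ancestor definition that the truncated text goes on to give.

```python
def factorial(n: int) -> int:
    """Compute n! for a non-negative integer n by recursion."""
    if n < 0:
        raise ValueError("factorial is undefined for negative integers")
    if n == 0:
        # Base case: a terminating scenario that does not use recursion.
        return 1
    # Recursive step: reduce the problem toward the base case.
    return n * factorial(n - 1)


if __name__ == "__main__":
    print(factorial(5))  # 120
```

Because every call lowers n by one and the base case stops at zero, the chain of calls is finite for any valid input.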

Grammar

In linguistics, the grammar of a natural language is its set of structural constraints on speakers' or writers' composition of clauses, phrases, and words. The term can also refer to the study of such constraints, a field that includes domains such as phonology, morphology, and syntax, often complemented by phonetics, semantics, and pragmatics. There are currently two different approaches to the study of grammar: traditional grammar and theoretical grammar. Fluent speakers of a language variety or lect have effectively internalized these constraints, the vast majority of which – at least in the case of one's native language(s) – are acquired not by conscious study or instruction but by hearing other speakers. Much of this internalization occurs during early childhood; learning a language later ...
