|

Consistent Hashing

In computer science, consistent hashing is a special kind of hashing technique such that when a hash table is resized, only n/m keys need to be remapped on average where n is the number of keys and m is the number of slots. In contrast, in most traditional hash tables, a change in the number of array slots causes nearly all keys to be remapped because the mapping between the keys and the slots is defined by a modular operation. History The term "consistent hashing" was introduced by David Karger ''et al.'' at MIT for use in distributed caching, particularly for the web. This academic paper from 1997 in Symposium on Theory of Computing introduced the term "consistent hashing" as a way of distributing requests among a changing population of web servers. Each slot is then represented by a server in a distributed system or cluster. The addition of a server and the removal of a server (during scalability or outage) requires only num\_keys/num\_slots items to be re-shuffled when the nu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computer Science

Computer science is the study of computation, automation, and information. Computer science spans theoretical disciplines (such as algorithms, theory of computation, information theory, and automation) to Applied science, practical disciplines (including the design and implementation of Computer architecture, hardware and Computer programming, software). Computer science is generally considered an area of research, academic research and distinct from computer programming. Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of computational problem, problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and for preventing Vulnerability (computing), security vulnerabilities. Computer graphics (computer science), Computer graphics and computational geometry address the generation of images. Progr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Consistent Hashing Sample Illustration

In classical deductive logic, a consistent theory is one that does not lead to a logical contradiction. The lack of contradiction can be defined in either semantic or syntactic terms. The semantic definition states that a theory is consistent if it has a model, i.e., there exists an interpretation under which all formulas in the theory are true. This is the sense used in traditional Aristotelian logic, although in contemporary mathematical logic the term '' satisfiable'' is used instead. The syntactic definition states a theory T is consistent if there is no formula \varphi such that both \varphi and its negation \lnot\varphi are elements of the set of consequences of T. Let A be a set of closed sentences (informally "axioms") and \langle A\rangle the set of closed sentences provable from A under some (specified, possibly implicitly) formal deductive system. The set of axioms A is consistent when \varphi, \lnot \varphi \in \langle A \rangle for no formula \varphi. If there ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Tree Traversal

In computer science, tree traversal (also known as tree search and walking the tree) is a form of graph traversal and refers to the process of visiting (e.g. retrieving, updating, or deleting) each node in a tree data structure, exactly once. Such traversals are classified by the order in which the nodes are visited. The following algorithms are described for a binary tree, but they may be generalized to other trees as well. Types Unlike linked lists, one-dimensional arrays and other linear data structures, which are canonically traversed in linear order, trees may be traversed in multiple ways. They may be traversed in depth-first or breadth-first order. There are three common ways to traverse them in depth-first order: in-order, pre-order and post-order. Beyond these basic traversals, various more complex or hybrid schemes are possible, such as depth-limited searches like iterative deepening depth-first search. The latter, as well as breadth-first search, can also be used to ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Binary Search Tree

In computer science, a binary search tree (BST), also called an ordered or sorted binary tree, is a rooted binary tree data structure with the key of each internal node being greater than all the keys in the respective node's left subtree and less than the ones in its right subtree. The time complexity of operations on the binary search tree is directly proportional to the height of the tree. Binary search trees allow binary search for fast lookup, addition, and removal of data items. Since the nodes in a BST are laid out so that each comparison skips about half of the remaining tree, the lookup performance is proportional to that of binary logarithm. BSTs were devised in the 1960s for the problem of efficient storage of labeled data and are attributed to Conway Berners-Lee and David Wheeler. The performance of a binary search tree is dependent on the order of insertion of the nodes into the tree since arbitrary insertions may lead to degeneracy; several variations of the bi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

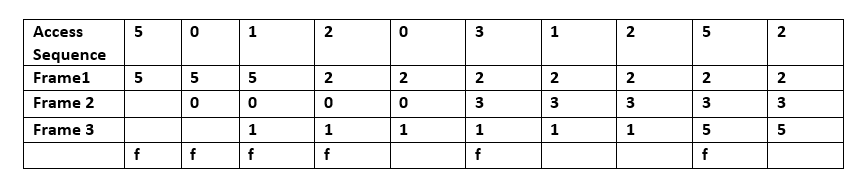

Cache Replacement Policies

In computing, cache algorithms (also frequently called cache replacement algorithms or cache replacement policies) are optimizing instructions, or algorithms, that a computer program or a hardware-maintained structure can utilize in order to manage a cache of information stored on the computer. Caching improves performance by keeping recent or often-used data items in memory locations that are faster or computationally cheaper to access than normal memory stores. When the cache is full, the algorithm must choose which items to discard to make room for the new ones. Overview The average memory reference time is : T = m \times T_m + T_h + E where : m = miss ratio = 1 - (hit ratio) : T_m = time to make a main memory access when there is a miss (or, with multi-level cache, average memory reference time for the next-lower cache) : T_h= the latency: the time to reference the cache (should be the same for hits and misses) : E = various secondary effects, such as queuing effects in mult ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Web Server

A web server is computer software and underlying hardware that accepts requests via HTTP (the network protocol created to distribute web content) or its secure variant HTTPS. A user agent, commonly a web browser or web crawler, initiates communication by making a request for a web page or other resource using HTTP, and the server responds with the content of that resource or an error message. A web server can also accept and store resources sent from the user agent if configured to do so. The hardware used to run a web server can vary according to the volume of requests that it needs to handle. At the low end of the range are embedded systems, such as a router that runs a small web server as its configuration interface. A high-traffic Internet website might handle requests with hundreds of servers that run on racks of high-speed computers. A resource sent from a web server can be a preexisting file (static content) available to the web server, or it can be generated ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cache (computing)

In computing, a cache ( ) is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A ''cache hit'' occurs when the requested data can be found in a cache, while a ''cache miss'' occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective and to enable efficient use of data, caches must be relatively small. Nevertheless, caches have proven themselves in many areas of computing, because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested already, and spatial locality, where d ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Linear Search

In computer science, a linear search or sequential search is a method for finding an element within a list. It sequentially checks each element of the list until a match is found or the whole list has been searched. A linear search runs in at worst linear time and makes at most comparisons, where is the length of the list. If each element is equally likely to be searched, then linear search has an average case of comparisons, but the average case can be affected if the search probabilities for each element vary. Linear search is rarely practical because other search algorithms and schemes, such as the binary search algorithm and hash tables, allow significantly faster searching for all but short lists. Algorithm A linear search sequentially checks each element of the list until it finds an element that matches the target value. If the algorithm reaches the end of the list, the search terminates unsuccessfully. Basic algorithm Given a list of elements with values or record ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Binary Search Algorithm

In computer science, binary search, also known as half-interval search, logarithmic search, or binary chop, is a search algorithm that finds the position of a target value within a sorted array. Binary search compares the target value to the middle element of the array. If they are not equal, the half in which the target cannot lie is eliminated and the search continues on the remaining half, again taking the middle element to compare to the target value, and repeating this until the target value is found. If the search ends with the remaining half being empty, the target is not in the array. Binary search runs in logarithmic time in the worst case, making O(\log n) comparisons, where n is the number of elements in the array. Binary search is faster than linear search except for small arrays. However, the array must be sorted first to be able to apply binary search. There are specialized data structures designed for fast searching, such as hash tables, that can be searched mor ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Universally Unique Identifier

A universally unique identifier (UUID) is a 128-bit nominal number, label used for information in computer systems. The term globally unique identifier (GUID) is also used. When generated according to the standard methods, UUIDs are, for practical purposes, unique. Their uniqueness does not depend on a central registration authority or coordination between the parties generating them, unlike most other numbering schemes. While the probability that a UUID will be duplicated is not zero, it is generally considered close enough to zero to be negligible. Thus, anyone can create a UUID and use it to identify something with near certainty that the identifier does not duplicate one that has already been, or will be, created to identify something else. Information labeled with UUIDs by independent parties can therefore be later combined into a single database or transmitted on the same channel, with a negligible probability of duplication. Adoption of UUIDs is widespread, with many comp ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

IP Address

An Internet Protocol address (IP address) is a numerical label such as that is connected to a computer network that uses the Internet Protocol for communication.. Updated by . An IP address serves two main functions: network interface identification and location addressing. Internet Protocol version 4 (IPv4) defines an IP address as a 32-bit number. However, because of the growth of the Internet and the depletion of available IPv4 addresses, a new version of IP (IPv6), using 128 bits for the IP address, was standardized in 1998. IPv6 deployment has been ongoing since the mid-2000s. IP addresses are written and displayed in human-readable notations, such as in IPv4, and in IPv6. The size of the routing prefix of the address is designated in CIDR notation by suffixing the address with the number of significant bits, e.g., , which is equivalent to the historically used subnet mask . The IP address space is managed globally by the Internet Assigned Numbers Authority (IA ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Hash Table

In computing, a hash table, also known as hash map, is a data structure that implements an associative array or dictionary. It is an abstract data type that maps keys to values. A hash table uses a hash function to compute an ''index'', also called a ''hash code'', into an array of ''buckets'' or ''slots'', from which the desired value can be found. During lookup, the key is hashed and the resulting hash indicates where the corresponding value is stored. Ideally, the hash function will assign each key to a unique bucket, but most hash table designs employ an imperfect hash function, which might cause hash ''collisions'' where the hash function generates the same index for more than one key. Such collisions are typically accommodated in some way. In a well-dimensioned hash table, the average time complexity for each lookup is independent of the number of elements stored in the table. Many hash table designs also allow arbitrary insertions and deletions of key–value pairs, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |