
Conditioning (probability)

Beliefs depend on the available information. This idea is formalized in probability theory by conditioning. Conditional probabilities, conditional expectations, and conditional probability distributions are treated on three levels: discrete probabilities, probability density functions, and measure theory. Conditioning leads to a non-random result if the condition is completely specified; otherwise, if the condition is left random, the result of conditioning is also random.

Conditioning on the discrete level

Example: A fair coin is tossed 10 times; the random variable X is the number of heads in these 10 tosses, and Y is the number of heads in the first 3 tosses. Although Y is determined before X, it may happen that someone knows X but not Y.

Conditional probability

Given that X = 1, the conditional probability of the event Y = 0 is

$$\mathbb{P}(Y=0 \mid X=1) = \frac{\mathbb{P}(Y=0,\ X=1)}{\mathbb{P}(X=1)} = \frac{7/2^{10}}{10/2^{10}} = 0.7,$$

since 7 of the 10 equally likely single-head sequences have their head outside the first 3 tosses. More generally,

$$\mathbb{P}(Y=0 \mid X=x) = \frac{\binom{7}{x}}{\binom{10}{x}} = \frac{7!\,(10-x)!}{(7-x)!\,10!}$$

...
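The conditional probability in the coin example can be checked by brute force. This minimal Python sketch (the function names are illustrative, not from the text) enumerates all 2^10 equally likely toss sequences, computes P(Y = 0 | X = x) by counting, and compares it with the closed form C(7, x)/C(10, x), which counts the placements of x heads among the last 7 tosses against placements among all 10.

```python
from fractions import Fraction
from itertools import product
from math import comb

# Every sequence of 10 fair tosses (1 = heads, 0 = tails); all 2**10 are equally likely.
OUTCOMES = list(product((0, 1), repeat=10))


def p_y0_given_x(x: int) -> Fraction:
    """P(Y = 0 | X = x) by counting: Y = heads in the first 3 tosses, X = heads in all 10."""
    given = [w for w in OUTCOMES if sum(w) == x]   # outcomes in the event X = x
    both = [w for w in given if sum(w[:3]) == 0]   # ...that also satisfy Y = 0
    return Fraction(len(both), len(given))         # equal weights cancel in the ratio


def p_y0_given_x_closed_form(x: int) -> Fraction:
    """Closed form C(7, x) / C(10, x); math.comb returns 0 when x > 7."""
    return Fraction(comb(7, x), comb(10, x))


print(p_y0_given_x(1))  # 7/10
for x in range(11):
    assert p_y0_given_x(x) == p_y0_given_x_closed_form(x)
```

Viewed as a function of x and then evaluated at the random X, this gives the random variable P(Y = 0 | X), which is the "condition left random" case mentioned at the start.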

Probability Theory

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is not possible to perfectly predict random events, much can be said about their behavior. Two major results in probability ...
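A finite probability space can be written down directly as a sample space, events as subsets of it, and a measure on those events. The sketch below assumes two fair six-sided dice (an example not in the text, with hypothetical helper names `P` and `event`) and checks that the measure gives the whole sample space probability 1, the empty event probability 0, and adds over disjoint events.

```python
from fractions import Fraction
from itertools import product
from typing import Callable, FrozenSet, Tuple

Outcome = Tuple[int, int]

# Sample space: ordered results of rolling two six-sided dice.
OMEGA: FrozenSet[Outcome] = frozenset(product(range(1, 7), repeat=2))

# Probability measure given by point masses; every outcome is assumed equally likely.
MASS = {w: Fraction(1, len(OMEGA)) for w in OMEGA}


def P(A: FrozenSet[Outcome]) -> Fraction:
    """Measure of an event A (a subset of OMEGA): the sum of its point masses."""
    return sum((MASS[w] for w in A), Fraction(0))


def event(predicate: Callable[[Outcome], bool]) -> FrozenSet[Outcome]:
    """The event (subset of the sample space) on which `predicate` holds."""
    return frozenset(w for w in OMEGA if predicate(w))


sum_is_7 = event(lambda w: w[0] + w[1] == 7)
doubles = event(lambda w: w[0] == w[1])

assert P(OMEGA) == 1 and P(frozenset()) == 0              # measure runs from 0 to 1
assert not (sum_is_7 & doubles)                           # the two events are disjoint...
assert P(sum_is_7 | doubles) == P(sum_is_7) + P(doubles)  # ...so their measures add
print(P(sum_is_7))  # 1/6
```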

Cumulative Distribution Function

In probability theory and statistics, the cumulative distribution function (CDF) of a real-valued random variable X, or just the distribution function of X, evaluated at x, is the probability that X will take a value less than or equal to x. Every probability distribution supported on the real numbers, discrete or "mixed" as well as continuous, is uniquely identified by a right-continuous, monotonically non-decreasing cumulative distribution function $F : \mathbb{R} \to [0,1]$ satisfying $\lim_{x \to -\infty} F(x) = 0$ and $\lim_{x \to +\infty} F(x) = 1$. In the case of a scalar continuous distribution, it gives the area under the probability density function from minus infinity to x. Cumulative distribution functions are also used to specify the distribution of multivariate random variables.

Definition

The cumulative distribution function of a real-valued random variable X is the function given by

$$F_X(x) = \operatorname{P}(X \le x),$$

where the right-hand side represents the probability that the random variable X takes on a value less than ...
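To illustrate the defining properties, the following sketch computes the CDF of the number of heads in 10 fair tosses, that is, a Binomial(10, 1/2) random variable (an example distribution chosen for illustration), exactly with fractions. It checks the two limits and shows that each jump of a discrete CDF is already included at the jump point, which is what right-continuity means here. The helper name `binomial_cdf` is hypothetical.

```python
import math
from fractions import Fraction


def binomial_cdf(x: float, n: int = 10) -> Fraction:
    """F(x) = P(X <= x) for X ~ Binomial(n, 1/2), i.e. heads in n fair tosses."""
    if x < 0:
        return Fraction(0)
    k_max = min(n, math.floor(x))  # F is a step function that only changes at integers
    return sum((Fraction(math.comb(n, k), 2 ** n) for k in range(k_max + 1)), Fraction(0))


print(binomial_cdf(5))                        # 319/512
print(binomial_cdf(-1.0), binomial_cdf(10.0))  # 0 1   (the two limiting values)
print(binomial_cdf(4.999) < binomial_cdf(5))   # True: the jump at 5 is counted at 5
print(binomial_cdf(5) == binomial_cdf(5.5))    # True: continuous from the right
```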

Riemann Integrability

In the branch of mathematics known as real analysis, the Riemann integral, created by Bernhard Riemann, was the first rigorous definition of the integral of a function on an interval. It was presented to the faculty at the University of Göttingen in 1854, but not published in a journal until 1868. For many functions and practical applications, the Riemann integral can be evaluated by the fundamental theorem of calculus or approximated by numerical integration.

Overview

Let f be a non-negative real-valued function on the interval [a, b], and let S be the region of the plane under the graph of f and above the interval [a, b]. This region can be expressed in set-builder notation as

$$S = \left\{ (x, y) \in \mathbb{R}^2 : a \le x \le b,\ 0 < y < f(x) \right\}.$$

We are interested in measuring the area of S. Once we have measured it, we will denote the area in the usual way by $\int_a^b f(x)\,dx$. The basic idea of the Riemann integral is to use very simple approximations for the area of S. By taking better and better ...
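The "very simple approximations" are sums of rectangle areas. A minimal sketch, with a hypothetical helper `riemann_sum` and the assumed example f(x) = x² on [0, 1] (exact area 1/3): because this f is increasing, left-endpoint sums under-estimate and right-endpoint sums over-estimate the area, and both close in on it as the partition is refined.

```python
from typing import Callable


def riemann_sum(f: Callable[[float], float], a: float, b: float, n: int,
                rule: str = "midpoint") -> float:
    """Sum of n equal-width rectangles over [a, b]; `rule` picks the sample point
    in each subinterval: "left", "right", or "midpoint"."""
    if n <= 0:
        raise ValueError("n must be positive")
    offset = {"left": 0.0, "right": 1.0, "midpoint": 0.5}[rule]
    dx = (b - a) / n
    return dx * sum(f(a + (i + offset) * dx) for i in range(n))


def square(x: float) -> float:
    """Non-negative and increasing on [0, 1]; the exact area under it is 1/3."""
    return x * x


for n in (10, 100, 1000):
    lower = riemann_sum(square, 0, 1, n, "left")   # below 1/3 for increasing f
    upper = riemann_sum(square, 0, 1, n, "right")  # above 1/3 for increasing f
    print(n, lower, upper, upper - lower)          # the gap shrinks like 1/n
```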

Galerkin Method

In mathematics, in the area of numerical analysis, Galerkin methods, named after the Russian mathematician Boris Galerkin, convert a continuous operator problem, such as a differential equation, commonly in a weak formulation, to a discrete problem by applying linear constraints determined by finite sets of basis functions. When referring to a Galerkin method, one often also gives the name along with the typical assumptions and approximation methods used:
* Ritz–Galerkin method (after Walther Ritz) typically assumes a symmetric and positive-definite bilinear form in the weak formulation, where the differential equation for a physical system can be formulated via minimization of a quadratic function representing the system energy, and the approximate solution is a linear combination of the given set of basis functions (A. Ern, J. L. Guermond, Theory and practice of finite elements, Springer, 2004).
* Bubnov–Galerkin method (after Ivan Bubnov) does not require the bilinear form ...
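To make the Ritz–Galerkin description concrete, here is a minimal sketch under stated assumptions: the model problem -u'' = f on (0, 1) with u(0) = u(1) = 0, and piecewise-linear "hat" basis functions on a uniform mesh (the standard lowest-order finite-element choice, not something the text specifies). The bilinear form a(u, v) = ∫ u'v' dx is symmetric and positive definite, so requiring the residual to vanish against every basis function yields a tridiagonal linear system. Function names are hypothetical.

```python
from typing import Callable, List, Tuple


def solve_tridiagonal(sub: List[float], diag: List[float], sup: List[float],
                      rhs: List[float]) -> List[float]:
    """Thomas algorithm; sub[i-1] = A[i][i-1], sup[i] = A[i][i+1]. No pivoting,
    which is adequate for the symmetric positive-definite matrix used below."""
    n = len(diag)
    c = [0.0] * n  # modified super-diagonal
    d = [0.0] * n  # modified right-hand side
    for i in range(n):
        denom = diag[i] - (sub[i - 1] * c[i - 1] if i > 0 else 0.0)
        c[i] = sup[i] / denom if i < n - 1 else 0.0
        d[i] = (rhs[i] - (sub[i - 1] * d[i - 1] if i > 0 else 0.0)) / denom
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        x[i] = d[i] - (c[i] * x[i + 1] if i < n - 1 else 0.0)
    return x


def ritz_galerkin_poisson(f: Callable[[float], float],
                          n_elements: int) -> Tuple[List[float], List[float]]:
    """Approximate -u'' = f on (0, 1) with u(0) = u(1) = 0 (needs n_elements >= 2).

    Weak form: find u such that integral(u' v') = integral(f v) for all test v.
    Trial and test functions are the hat functions phi_i on the interior nodes.
    """
    h = 1.0 / n_elements
    nodes = [i * h for i in range(n_elements + 1)]
    m = n_elements - 1            # one unknown coefficient per interior node
    diag = [2.0 / h] * m          # a(phi_i, phi_i)
    off = [-1.0 / h] * (m - 1)    # a(phi_i, phi_{i+1}); all other pairs give 0
    # Load integral(f phi_i) approximated by f(x_i) * h (exact when f is constant).
    rhs = [f(nodes[i]) * h for i in range(1, n_elements)]
    coeffs = solve_tridiagonal(off, diag, off, rhs)
    return nodes, [0.0] + coeffs + [0.0]  # boundary values are fixed at 0


nodes, u = ritz_galerkin_poisson(lambda x: 1.0, n_elements=8)
exact = [x * (1 - x) / 2 for x in nodes]  # exact solution for f = 1
print(max(abs(a - b) for a, b in zip(u, exact)))
```

For f = 1 the exact solution is x(1 - x)/2, and in this particular 1D setting the nodal values of the linear-element solution agree with it up to round-off, which makes a convenient check. For a general f, the nodal load approximation f(x_i)·h adds a quadrature error.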

Hilbert Space

In mathematics, Hilbert spaces (named after David Hilbert) allow generalizing the methods of linear algebra and calculus from (finite-dimensional) Euclidean vector spaces to spaces that may be infinite-dimensional. Hilbert spaces arise naturally and frequently in mathematics and physics, typically as function spaces. Formally, a Hilbert space is a vector space equipped with an inner product that defines a distance function for which the space is a complete metric space. The earliest Hilbert spaces were studied from this point of view in the first decade of the 20th century by David Hilbert, Erhard Schmidt, and Frigyes Riesz. They are indispensable tools in the theories of partial differential equations, quantum mechanics, Fourier analysis (which includes applications to signal processing and heat transfer), and ergodic theory (which forms the mathematical underpinning of thermodynamics). John von Neumann coined the term "Hilbert space" for the abstract concept that under ...
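The phrase "an inner product that defines a distance function" can be made concrete. This sketch (hypothetical helper names) uses the standard inner product on R^n, the simplest finite-dimensional Hilbert space, to define the distance d(x, y) = sqrt(<x - y, x - y>), and then approximates the inner product of the function space L^2(0, 1) with a midpoint rule, assuming sine functions as example elements.

```python
import math
from typing import Callable, Sequence


def inner(x: Sequence[float], y: Sequence[float]) -> float:
    """Standard inner product on R^n."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(x, y))


def distance(x: Sequence[float], y: Sequence[float]) -> float:
    """Distance induced by the inner product: d(x, y) = sqrt(<x - y, x - y>)."""
    diff = [a - b for a, b in zip(x, y)]
    return math.sqrt(inner(diff, diff))


def l2_inner(f: Callable[[float], float], g: Callable[[float], float], n: int = 1000) -> float:
    """Midpoint-rule approximation of the L^2 inner product: integral over [0, 1] of f*g."""
    xs = [(i + 0.5) / n for i in range(n)]
    return inner([f(x) for x in xs], [g(x) for x in xs]) / n


def s1(x: float) -> float:
    return math.sin(math.pi * x)


def s2(x: float) -> float:
    return math.sin(2 * math.pi * x)


print(distance([0.0, 0.0], [3.0, 4.0]))  # 5.0
print(l2_inner(s1, s2))  # approximately 0: the two sines are orthogonal in L^2(0, 1)
print(l2_inner(s1, s1))  # approximately 0.5, the squared L^2 norm of sin(pi x)
```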

Lp Space

In mathematics, the $L^p$ spaces are function spaces defined using a natural generalization of the p-norm for finite-dimensional vector spaces. They are sometimes called Lebesgue spaces, named after Henri Lebesgue, although according to the Bourbaki group they were first introduced by Frigyes Riesz. $L^p$ spaces form an important class of Banach spaces in functional analysis, and of topological vector spaces. Because of their key role in the mathematical analysis of measure and probability spaces, Lebesgue spaces are also used in the theoretical discussion of problems in physics, statistics, economics, finance, engineering, and other disciplines.

Applications

Statistics

In statistics, measures of central tendency and statistical dispersion, such as the mean, median, and standard deviation, are defined in terms of $L^p$ metrics, and measures of central tendency can be characterized as solutions to ...
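For finite-dimensional vectors the p-norm is ||x||_p = (Σ |x_i|^p)^(1/p). The sketch below computes it and, as an illustration of the statistics remark, uses a crude grid search (an assumption made for simplicity, not a recommended method) to find the centre c that minimizes ||data - c||_p: for p = 1 this lands on the median and for p = 2 on the mean, which are standard facts. The sample data and helper names are hypothetical.

```python
import math
from typing import Sequence


def p_norm(x: Sequence[float], p: float) -> float:
    """||x||_p = (sum |x_i|**p)**(1/p) for p >= 1; p = math.inf gives max |x_i|."""
    if p == math.inf:
        return max(abs(v) for v in x)
    if p < 1:
        raise ValueError("p must be >= 1 for this to be a norm")
    return sum(abs(v) ** p for v in x) ** (1.0 / p)


def lp_center(data: Sequence[float], p: float, step: float = 0.01) -> float:
    """Grid search for the c minimising ||data - c||_p over [min(data), max(data)]."""
    lo, hi = min(data), max(data)
    grid = [lo + i * step for i in range(int(round((hi - lo) / step)) + 1)]
    return min(grid, key=lambda c: p_norm([v - c for v in data], p))


print(p_norm([3.0, -4.0], 1), p_norm([3.0, -4.0], 2), p_norm([3.0, -4.0], math.inf))  # 7.0 5.0 4.0
data = [1.0, 2.0, 4.0, 10.0, 13.0]
print(lp_center(data, 1))  # close to 4, the median
print(lp_center(data, 2))  # close to 6, the mean
```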

Congruence (geometry)

In geometry, two figures or objects are congruent if they have the same shape and size, or if one has the same shape and size as the mirror image of the other. More formally, two sets of points are called congruent if, and only if, one can be transformed into the other by an isometry, i.e., a composition of translations, rotations, and reflections. This means that either object can be repositioned and reflected (but not resized) so as to coincide precisely with the other object. Therefore, two distinct plane figures on a piece of paper are congruent if they can be cut out and then matched up completely. Turning the paper over is permitted.

In elementary geometry the word congruent is often used as follows. The word equal is often used in place of congruent for these objects.
* Two line segments are congruent if they have the same length.
* Two angles are congruent if they have the same measure.
* Two circles are congruent if they have the ...
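For triangles, congruence can be tested without searching for the isometry: by the side-side-side (SSS) criterion, two triangles are congruent exactly when their three side lengths agree. This sketch (hypothetical helper names, floating-point tolerance assumed) applies a reflection, a rotation, and a translation to a 3-4-5 triangle and confirms the result is still congruent, while a scaled copy is not. General point sets would also need a matching of points, which this does not attempt.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Triangle = Tuple[Point, Point, Point]


def side_lengths(t: Triangle) -> List[float]:
    a, b, c = t
    return sorted([math.dist(a, b), math.dist(b, c), math.dist(c, a)])


def congruent_triangles(t1: Triangle, t2: Triangle, tol: float = 1e-9) -> bool:
    """SSS criterion: two triangles are congruent iff their side lengths agree."""
    return all(math.isclose(x, y, rel_tol=0.0, abs_tol=tol)
               for x, y in zip(side_lengths(t1), side_lengths(t2)))


def apply_isometry(t: Triangle, angle: float, shift: Point, reflect: bool = False) -> Triangle:
    """Optionally reflect across the x-axis, then rotate about the origin, then translate."""
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    out = []
    for x, y in t:
        if reflect:
            y = -y
        out.append((x * cos_a - y * sin_a + shift[0], x * sin_a + y * cos_a + shift[1]))
    return (out[0], out[1], out[2])


tri: Triangle = ((0.0, 0.0), (3.0, 0.0), (0.0, 4.0))
moved = apply_isometry(tri, angle=0.7, shift=(5.0, -2.0), reflect=True)
scaled: Triangle = ((0.0, 0.0), (6.0, 0.0), (0.0, 8.0))
print(congruent_triangles(tri, moved))   # True: isometries preserve all distances
print(congruent_triangles(tri, scaled))  # False: same shape, different size
```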

Weierstrass Function

In mathematics, the Weierstrass function is an example of a real-valued function that is continuous everywhere but differentiable nowhere. It is an example of a fractal curve. It is named after its discoverer Karl Weierstrass. The Weierstrass function has historically served the role of a pathological function, being the first published example (1872) specifically concocted to challenge the notion that every continuous function is differentiable except on a set of isolated points. Weierstrass's demonstration that continuity did not imply almost-everywhere differentiability upended mathematics, overturning several proofs that relied on geometric intuition and vague definitions of smoothness. These types of functions were denounced by contemporaries: Henri Poincaré famously described them as "monsters" and called Weierstrass's work "an outrage against common sense", while Charles Herm ...
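The snippet does not state the formula. Weierstrass's function is usually written as W(x) = Σ_{n≥0} a^n cos(b^n π x) with 0 < a < 1, b a positive odd integer, and ab > 1 + 3π/2. The sketch below assumes a = 0.5 and b = 13 and evaluates a truncated sum. It then prints difference quotients at x = 0 for h = 13^(-m), whose magnitudes grow by roughly a factor of ab per step instead of settling toward a derivative. This is only a numerical hint, since every partial sum is smooth; it is not a proof. Helper names are hypothetical.

```python
import math
from fractions import Fraction


def weierstrass_partial(x: Fraction, a: float = 0.5, b: int = 13, terms: int = 40) -> float:
    """Partial sum of W(x) = sum_{n>=0} a**n * cos(b**n * pi * x).

    b**n * x is reduced modulo 2 with exact rational arithmetic (cos(pi*t) has
    period 2 in t), so math.cos never sees a huge, precision-starved argument.
    """
    total = 0.0
    for n in range(terms):
        t = (Fraction(b) ** n * x) % 2
        total += a ** n * math.cos(math.pi * float(t))
    return total


def difference_quotient_at_zero(m: int, b: int = 13) -> float:
    """(W(h) - W(0)) / h for h = b**(-m), using the truncated sum above."""
    h = Fraction(1, b ** m)
    return (weierstrass_partial(h, b=b) - weierstrass_partial(Fraction(0), b=b)) / float(h)


# a = 0.5, b = 13 satisfies 0 < a < 1, b odd, and a*b = 6.5 > 1 + 3*pi/2 (about 5.71).
for m in range(1, 5):
    print(m, difference_quotient_at_zero(m))  # magnitudes grow roughly 6.5x per step
```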

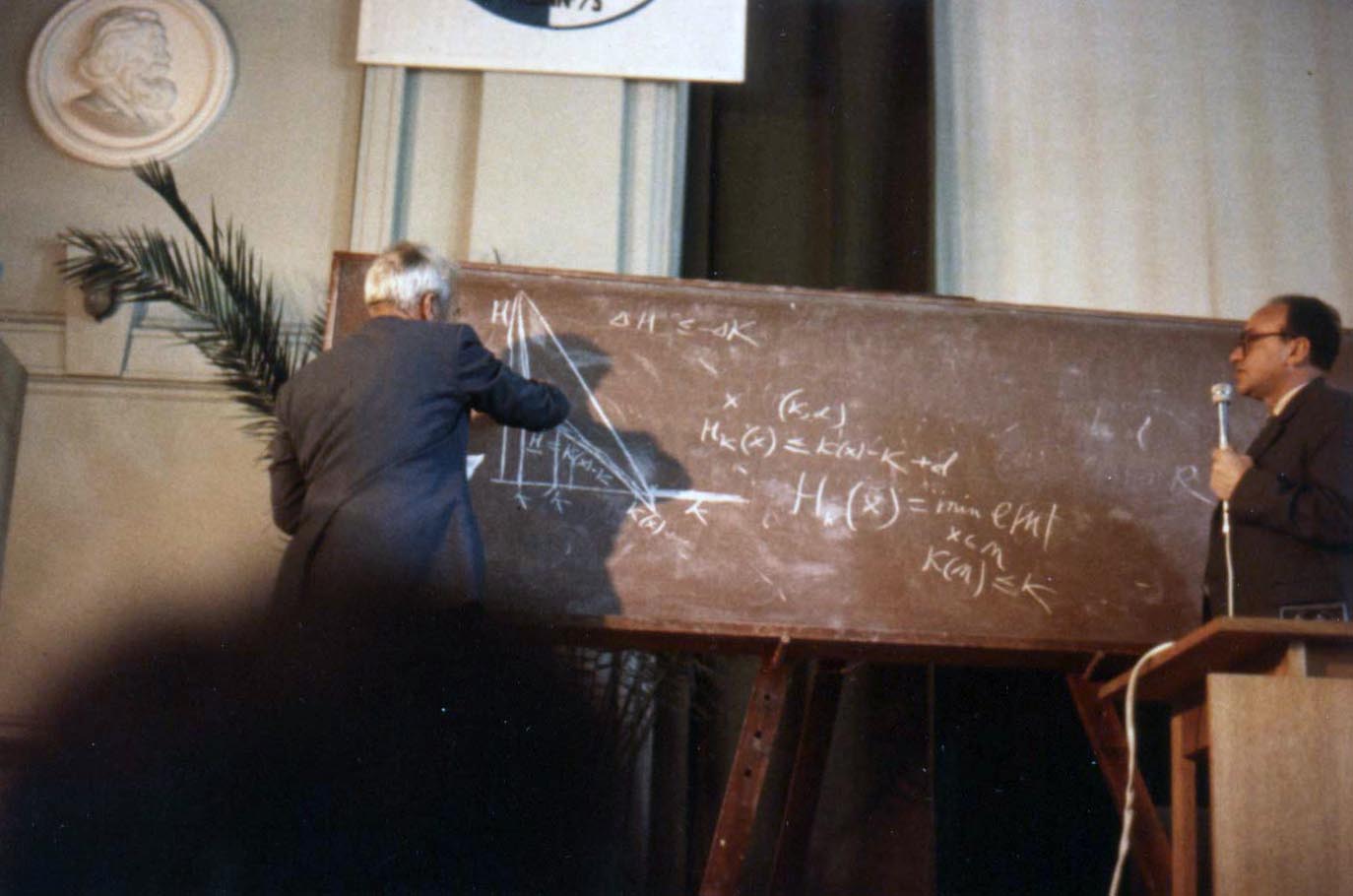

Andrey Kolmogorov

Andrey Nikolaevich Kolmogorov (Russian: Андре́й Никола́евич Колмого́ров, IPA: [ɐnˈdrʲej nʲɪkɐˈlajɪvʲɪtɕ kəlmɐˈɡorəf]; 25 April 1903 – 20 October 1987) was a Soviet mathematician who contributed to the mathematics of probability theory, topology, intuitionistic logic, turbulence, classical mechanics, algorithmic information theory and computational complexity.

Biography

Early life

Andrey Kolmogorov was born in Tambov, about 500 kilometers south-southeast of Moscow, in 1903. His unmarried mother, Maria Y. Kolmogorova, died giving birth to him. Andrey was raised by two of his aunts in Tunoshna (near Yaroslavl) at the estate of his grandfather, a well-to-do nobleman. Little is known about Andrey's father. He was supposedly named Nikolai Matveevich Kataev and had been an agronomist. Kataev had been exiled from St. Petersburg to the Yaroslavl province after his participation in the revolutionary movement ...