
Central Moment

In probability theory and statistics, a central moment is a moment of a probability distribution of a random variable about the random variable's mean; that is, it is the expected value of a specified integer power of the deviation of the random variable from the mean. The various moments form one set of values by which the properties of a probability distribution can be usefully characterized. Central moments, which are computed in terms of deviations from the mean instead of from zero, are used in preference to ordinary moments because the higher-order central moments relate only to the spread and shape of the distribution, rather than also to its location. Sets of central moments can be defined for both univariate and multivariate distributions. Univariate moments: The $n$th moment about the mean (or $n$th central moment) of a real-valued random variable $X$ is the quantity $\mu_n := \operatorname{E}\left[(X - \operatorname{E}[X])^n\right]$ ...
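
The definition above can be illustrated on a finite sample. The following is a minimal sketch, assuming the data points are treated as equally likely outcomes (so the expectation becomes a plain average); the function name `central_moment` and the sample values are chosen here for illustration only.

```python
from typing import Sequence


def central_moment(values: Sequence[float], n: int) -> float:
    """Average of (x - mean)^n over the sample (equal weights assumed)."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** n for v in values) / len(values)


data = [1.0, 2.0, 3.0, 4.0, 10.0]
shifted = [v + 100.0 for v in data]  # same shape, different location

first = central_moment(data, 1)    # always 0 up to rounding
second = central_moment(data, 2)   # the (population) variance
third_original = central_moment(data, 3)
third_shifted = central_moment(shifted, 3)
```

Shifting every value by a constant leaves `third_shifted` equal to `third_original`, which is the sense in which central moments describe spread and shape rather than location.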

Probability Theory

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is not possible to perfectly predict random events, much can be said about their behavior. Two major results in prob ...

Skewness

In probability theory and statistics, skewness is a measure of the asymmetry of the probability distribution of a real-valued random variable about its mean. The skewness value can be positive, zero, negative, or undefined. For a unimodal distribution, negative skew commonly indicates that the tail is on the left side of the distribution, and positive skew indicates that the tail is on the right. In cases where one tail is long but the other tail is fat, skewness does not obey a simple rule. For example, a zero value means that the tails on both sides of the mean balance out overall; this is the case for a symmetric distribution, but can also be true for an asymmetric distribution where one tail is long and thin, and the other is short but fat. Introduction: Consider the two distributions in the figure just below. Within each graph, the values on the right side of the distribution taper differently from the values on the left side. These tapering sides are called tai ...

Statistical Deviation And Dispersion

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006), The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An exp ...

Image Moment

In image processing, computer vision and related fields, an image moment is a particular weighted average (moment) of the image pixels' intensities, or a function of such moments, usually chosen to have some attractive property or interpretation. Image moments are useful to describe objects after segmentation. Simple properties of the image which are found via image moments include area (or total intensity), its centroid, and information about its orientation. Raw moments: For a 2D continuous function $f(x,y)$ the moment (sometimes called "raw moment") of order $(p + q)$ is defined as

$$M_{pq} = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} x^p y^q f(x,y) \,dx\,dy$$

for $p, q = 0, 1, 2, \ldots$ Adapting this to a scalar (greyscale) image with pixel intensities $I(x,y)$, raw image moments $M_{ij}$ are calculated by

$$M_{ij} = \sum_x \sum_y x^i y^j I(x,y)$$

In some cases, this may be calculated by considering the image as a probability density function, i.e., by di ...
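
The discrete sum for raw image moments can be sketched in a few lines. This is an illustrative example only: it assumes the image is a list of rows indexed as `image[y][x]`, and the helper name `raw_moment` and the tiny test image are hypothetical. The centroid formula used (first-order moments divided by the zeroth-order moment) is the standard one and is not spelled out in the text above.

```python
from typing import Sequence


def raw_moment(image: Sequence[Sequence[float]], i: int, j: int) -> float:
    """Sum of x^i * y^j * I(x, y) over all pixels, with image[y][x] indexing."""
    return sum(
        (x ** i) * (y ** j) * intensity
        for y, row in enumerate(image)
        for x, intensity in enumerate(row)
    )


# A 2x2 block of unit intensity inside a 4-wide, 3-tall image.
image = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]

area = raw_moment(image, 0, 0)            # total intensity
centroid_x = raw_moment(image, 1, 0) / area
centroid_y = raw_moment(image, 0, 1) / area
```

For a binary image such as this one, the zeroth-order moment counts the foreground pixels, and the centroid lands in the middle of the block.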

Standardized Moment

In probability theory and statistics, a standardized moment of a probability distribution is a moment (often a higher-degree central moment) that is normalized, typically by a power of the standard deviation, rendering the moment scale invariant. The shape of different probability distributions can be compared using standardized moments. Standard normalization: Let $X$ be a random variable with a probability distribution $P$ and mean value $\mu = \operatorname{E}[X]$ (i.e. the first raw moment or moment about zero), the operator $\operatorname{E}$ denoting the expected value of $X$. Then the standardized moment of degree $k$ is $\frac{\mu_k}{\sigma^k}$, that is, the ratio of the $k$th moment about the mean

$$\mu_k = \operatorname{E}\left[(X - \mu)^k\right] = \int_{-\infty}^{\infty} (x - \mu)^k P(x)\,dx,$$

to the $k$th power of the standard deviation,

$$\sigma^k = \left(\sqrt{\operatorname{E}\left[(X - \mu)^2\right]}\right)^k.$$

The power of $k$ is because moments scale as $x^k$, meaning that $\mu_k(\lambda X) = \lambda^k \mu_k(X)$: they are homogeneous functions of d ...
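
The scale invariance described above can be checked numerically. The sketch below assumes an equally weighted finite sample in place of the distribution $P$; the function name `standardized_moment` and the data are illustrative choices, not part of the source.

```python
from typing import Sequence


def standardized_moment(values: Sequence[float], k: int) -> float:
    """k-th central moment divided by the k-th power of the standard deviation."""
    n = len(values)
    mean = sum(values) / n
    mu_k = sum((v - mean) ** k for v in values) / n
    sigma = (sum((v - mean) ** 2 for v in values) / n) ** 0.5
    return mu_k / sigma ** k


data = [1.0, 2.0, 3.0, 4.0, 10.0]
scaled = [3.0 * v for v in data]  # multiply every value by lambda = 3

second = standardized_moment(data, 2)          # equals 1 by construction
third_original = standardized_moment(data, 3)  # positive: long right tail
third_scaled = standardized_moment(scaled, 3)
```

Because $\mu_k$ and $\sigma^k$ both pick up a factor of $\lambda^k$, the ratio is unchanged, so `third_scaled` matches `third_original` up to floating-point rounding.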

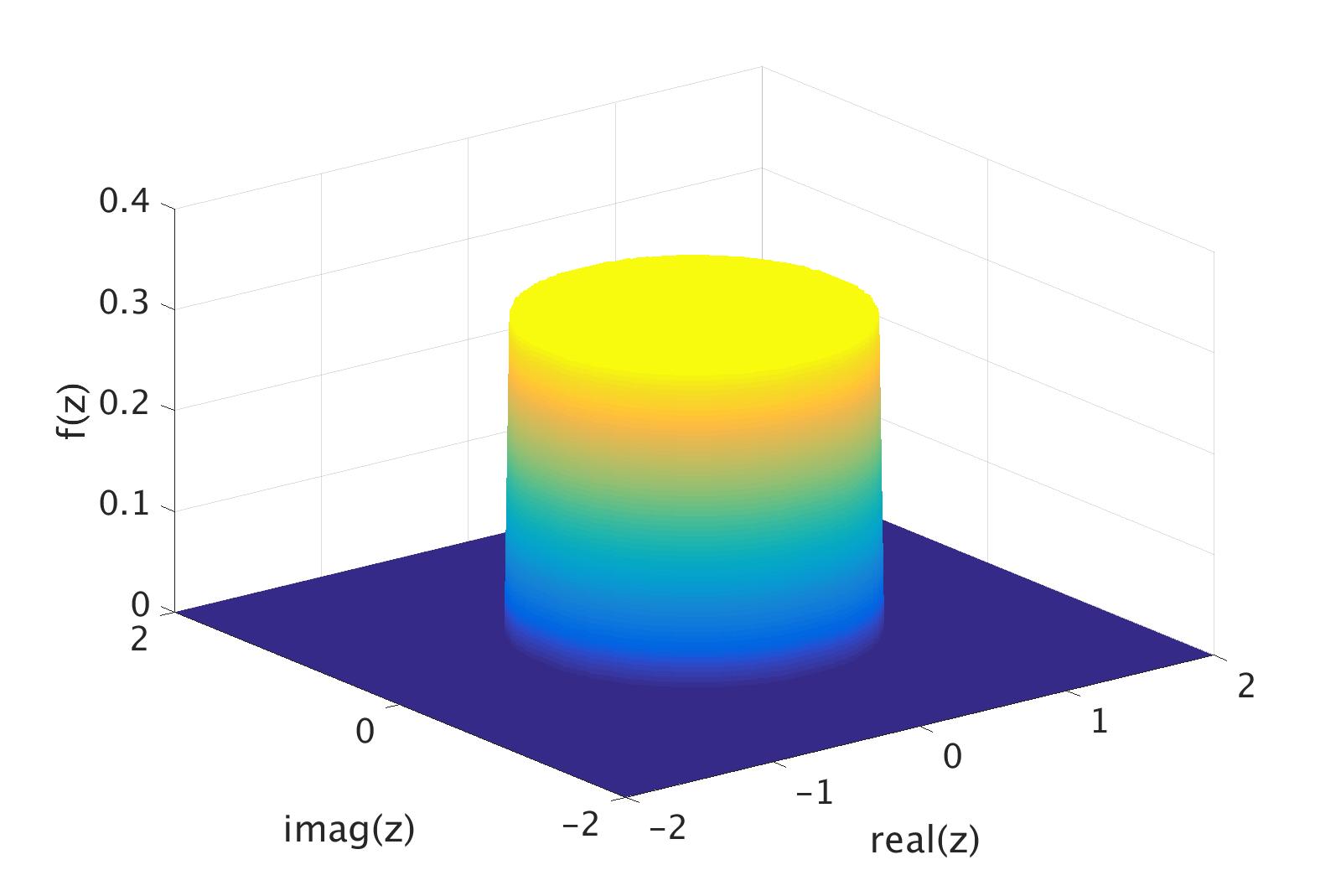

Complex Random Variable

In probability theory and statistics, complex random variables are a generalization of real-valued random variables to complex numbers, i.e. the possible values a complex random variable may take are complex numbers. Complex random variables can always be considered as pairs of real random variables: their real and imaginary parts. Therefore, the distribution of one complex random variable may be interpreted as the joint distribution of two real random variables. Some concepts of real random variables have a straightforward generalization to complex random variables, e.g., the definition of the mean of a complex random variable. Other concepts are unique to complex random variables. Applications of complex random variables are found in digital signal processing, quadrature amplitude modulation and information theory. Definition: A complex random variable $Z$ on the probability space $(\Omega,\mathcal{F},P)$ is a function $Z \colon \Omega \rightarrow \mathbb{C}$ such that both its real p ...

Joint Probability Distribution

Given two random variables that are defined on the same probability space, the joint probability distribution is the corresponding probability distribution on all possible pairs of outputs. The joint distribution can just as well be considered for any given number of random variables. The joint distribution encodes the marginal distributions, i.e. the distributions of each of the individual random variables. It also encodes the conditional probability distributions, which deal with how the outputs of one random variable are distributed when given information on the outputs of the other random variable(s). In the formal mathematical setup of measure theory, the joint distribution is given by the pushforward measure, by the map obtained by pairing together the given random variables, of the sample space's probability measure. In the case of real-valued random variables, the joint distribution, as a particular multivariate distribution, may be expressed by a multivariate cumulat ...

Reflection (mathematics)

In mathematics, a reflection (also spelled reflexion) is a mapping from a Euclidean space to itself that is an isometry with a hyperplane as a set of fixed points; this set is called the axis (in dimension 2) or plane (in dimension 3) of reflection. The image of a figure by a reflection is its mirror image in the axis or plane of reflection. For example, the mirror image of the small Latin letter p for a reflection with respect to a vertical axis would look like q. Its image by reflection in a horizontal axis would look like b. A reflection is an involution: when applied twice in succession, every point returns to its original location, and every geometrical object is restored to its original state. The term reflection is sometimes used for a larger class of mappings from a Euclidean space to itself, namely the non-identity isometries that are involutions. Such isometries have a set of fixed points (the "mirror") that is an affine subspace, but is possibly smaller th ...

Symmetric Distribution

In statistics, a symmetric probability distribution is a probability distribution (an assignment of probabilities to possible occurrences) which is unchanged when its probability density function (for a continuous probability distribution) or probability mass function (for a discrete random variable) is reflected around a vertical line at some value of the random variable represented by the distribution. This vertical line is the line of symmetry of the distribution. Thus the probability of being any given distance on one side of the value about which symmetry occurs is the same as the probability of being the same distance on the other side of that value. Formal definition: A probability distribution is said to be symmetric if and only if there exists a value $x_0$ such that

$$f(x_0 - \delta) = f(x_0 + \delta)$$

for all real numbers $\delta$, where $f$ is the probability density function if the distribution is continuous or the probability mass function if the distribution is ...
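
For a discrete distribution with finite support, the symmetry condition can be tested directly. The following is a small sketch under that assumption: the probability mass function is stored as a dictionary from values to exact `Fraction` probabilities, and the helper name `is_symmetric_about` is hypothetical. The example distribution is the number of heads in four fair coin flips.

```python
from fractions import Fraction
from typing import Dict


def is_symmetric_about(pmf: Dict[int, Fraction], x0: int) -> bool:
    """Check f(x0 - d) == f(x0 + d) by mirroring every support point in x0."""
    return all(pmf.get(2 * x0 - x, 0) == p for x, p in pmf.items())


# Number of heads in four fair coin flips.
heads_pmf = {
    0: Fraction(1, 16),
    1: Fraction(4, 16),
    2: Fraction(6, 16),
    3: Fraction(4, 16),
    4: Fraction(1, 16),
}

symmetric_about_two = is_symmetric_about(heads_pmf, 2)
symmetric_about_one = is_symmetric_about(heads_pmf, 1)
```

Mirroring each support point $x$ to $2x_0 - x$ is the same as comparing $f(x_0 - \delta)$ with $f(x_0 + \delta)$ for $\delta = x_0 - x$; points outside the support have probability zero on both sides and need no check.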

Pascal's Triangle

In mathematics, Pascal's triangle is a triangular array of the binomial coefficients that arises in probability theory, combinatorics, and algebra. In much of the Western world, it is named after the French mathematician Blaise Pascal, although other mathematicians studied it centuries before him in India, Persia, China, Germany, and Italy. The rows of Pascal's triangle are conventionally enumerated starting with row n = 0 at the top (the 0th row). The entries in each row are numbered from the left beginning with k = 0 and are usually staggered relative to the numbers in the adjacent rows. The triangle may be constructed in the following manner: In row 0 (the topmost row), there is a unique nonzero entry 1. Each entry of each subsequent row is constructed by adding the number above and to the left with the number above and to the right, treating blank entries as 0. For example, the initial number of row 1 (or any other row) is 1 (the sum of 0 and 1), whereas the numbers 1 and 3 i ...
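
The construction rule described above (add the entry above-left to the entry above-right, treating blanks as 0) can be sketched as follows. The function name `pascal_rows` is an illustrative choice; padding each row with a zero on both sides is one simple way to model the blank entries.

```python
from typing import List


def pascal_rows(count: int) -> List[List[int]]:
    """Return rows 0 .. count-1 of Pascal's triangle."""
    rows = []
    row = [1]  # row 0: the unique nonzero entry
    for _ in range(count):
        rows.append(row)
        padded = [0] + row + [0]  # blank entries on either side count as 0
        row = [padded[i] + padded[i + 1] for i in range(len(padded) - 1)]
    return rows


triangle = pascal_rows(5)
for r in triangle:
    print(r)
```

Each new row has one more entry than the previous one, and its first and last entries are always 1 because one of the two summands is a padded zero.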

Cumulant

In probability theory and statistics, the cumulants of a probability distribution are a set of quantities that provide an alternative to the moments of the distribution. Any two probability distributions whose moments are identical will have identical cumulants as well, and vice versa. The first cumulant is the mean, the second cumulant is the variance, and the third cumulant is the same as the third central moment. But fourth and higher-order cumulants are not equal to central moments. In some cases theoretical treatments of problems in terms of cumulants are simpler than those using moments. In particular, when two or more random variables are statistically independent, the $n$th-order cumulant of their sum is equal to the sum of their $n$th-order cumulants. As well, the third and higher-order cumulants of a normal distribution are zero, and it is the only distribution with this property. Just as for moments, where joint moments are used for collections of random variabl ...
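
The additivity property can be checked exactly on small discrete distributions. This is a sketch under stated assumptions: two independent variables with made-up probability mass functions, exact arithmetic via `Fraction`, and the standard identity $\kappa_4 = \mu_4 - 3\mu_2^2$ for the fourth cumulant (an identity brought in here, not stated in the text above). All function and variable names are illustrative.

```python
from fractions import Fraction
from itertools import product
from typing import Dict

Pmf = Dict[int, Fraction]


def central_moment(pmf: Pmf, n: int) -> Fraction:
    """E[(X - E[X])^n] for a finite discrete distribution."""
    mean = sum(x * p for x, p in pmf.items())
    return sum((x - mean) ** n * p for x, p in pmf.items())


def fourth_cumulant(pmf: Pmf) -> Fraction:
    """kappa_4 = mu_4 - 3 * mu_2^2 (standard identity, assumed here)."""
    return central_moment(pmf, 4) - 3 * central_moment(pmf, 2) ** 2


def sum_of_independent(pmf_x: Pmf, pmf_y: Pmf) -> Pmf:
    """Distribution of X + Y when X and Y are independent."""
    out: Pmf = {}
    for (x, p), (y, q) in product(pmf_x.items(), pmf_y.items()):
        out[x + y] = out.get(x + y, 0) + p * q
    return out


pmf_x = {0: Fraction(1, 2), 1: Fraction(1, 4), 4: Fraction(1, 4)}
pmf_y = {0: Fraction(2, 3), 3: Fraction(1, 3)}
pmf_sum = sum_of_independent(pmf_x, pmf_y)

# Second and third cumulants coincide with central moments and add up.
variance_adds = central_moment(pmf_sum, 2) == central_moment(pmf_x, 2) + central_moment(pmf_y, 2)
third_adds = central_moment(pmf_sum, 3) == central_moment(pmf_x, 3) + central_moment(pmf_y, 3)
# The fourth central moment does not add, but the fourth cumulant does.
fourth_moment_adds = central_moment(pmf_sum, 4) == central_moment(pmf_x, 4) + central_moment(pmf_y, 4)
fourth_cumulant_adds = fourth_cumulant(pmf_sum) == fourth_cumulant(pmf_x) + fourth_cumulant(pmf_y)
```

The fourth central moment of the sum carries an extra cross term proportional to the product of the two variances, which is exactly what the $-3\mu_2^2$ correction in the cumulant removes.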

Statistical Independence

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. Mutual independence implies pairwise independence ...
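
The difference between pairwise and mutual independence can be seen in a classic small example, sketched below under the assumption of two fair coin flips with four equally likely outcomes. It uses the product rule $P(A \cap B) = P(A)\,P(B)$ as the formal test for independence; the event names `A`, `B`, `C` and the helper `prob` are chosen for illustration.

```python
from fractions import Fraction
from itertools import combinations, product

# Sample space: two fair coin flips, all four outcomes equally likely.
outcomes = list(product("HT", repeat=2))


def prob(event) -> Fraction:
    """Probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))


def A(o):  # first flip is heads
    return o[0] == "H"


def B(o):  # second flip is heads
    return o[1] == "H"


def C(o):  # both flips agree
    return o[0] == o[1]


events = [A, B, C]

# Pairwise: every pair satisfies the product rule.
pairwise = all(
    prob(lambda o: e(o) and f(o)) == prob(e) * prob(f)
    for e, f in combinations(events, 2)
)

# Mutual (for three events): the pairs and the triple must all satisfy it.
triple_ok = prob(lambda o: A(o) and B(o) and C(o)) == prob(A) * prob(B) * prob(C)
mutual = pairwise and triple_ok
```

Here any two of the events are independent, yet knowing `A` and `B` together determines `C`, so the three events are pairwise independent without being mutually independent.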