|

Cache Placement Policies

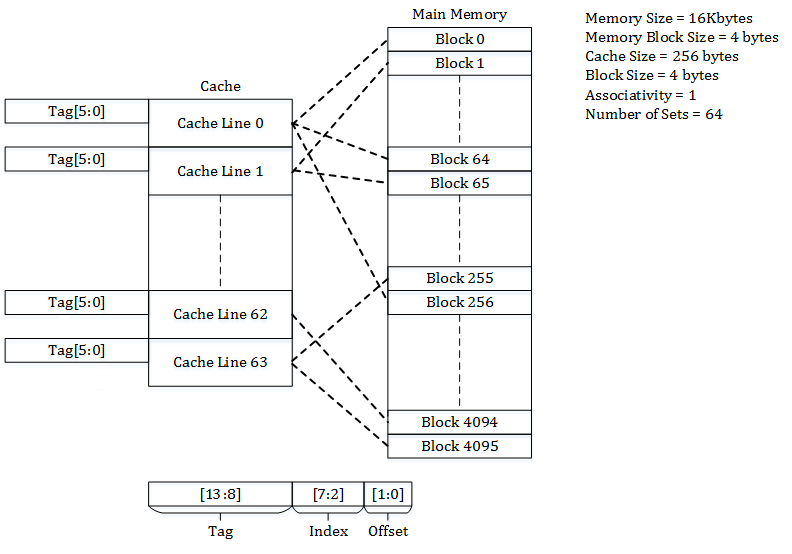

A CPU cache is a memory which holds the recently utilized data by the processor. A block of memory cannot necessarily be placed randomly in the cache and may be restricted to a single cache line or a set of cache lines by the cache placement policy. In other words, the cache placement policy determines where a particular memory block can be placed when it goes into the cache. There are three different policies available for placement of a memory block in the cache: direct-mapped, fully associative, and set-associative. Originally this space of cache organizations was described using the term "congruence mapping". Direct-mapped cache In a direct-mapped cache structure, the cache is organized into multiple sets with a single cache line per set. Based on the address of the memory block, it can only occupy a single cache line. The cache can be framed as a column matrix. To place a block in the cache * The set is determined by the index bits derived from the address of the mem ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

CPU Cache

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels (L1, L2, often L3, and rarely even L4), with different instruction-specific and data-specific caches at level 1. The cache memory is typically implemented with static random-access memory (SRAM), in modern CPUs by far the largest part of them by chip area, but SRAM is not always used for all levels (of I- or D-cache), or even any level, sometimes some latter or all levels are implemented with eDRAM. Other types of caches exist (that are not counted towards the "cache size" of the most important caches mentioned above), such as the translation lookaside buffer (TLB) which is part of the memory management unit ( ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computer Data Storage

Computer data storage is a technology consisting of computer components and recording media that are used to retain digital data. It is a core function and fundamental component of computers. The central processing unit (CPU) of a computer is what manipulates data by performing computations. In practice, almost all computers use a storage hierarchy, which puts fast but expensive and small storage options close to the CPU and slower but less expensive and larger options further away. Generally, the fast volatile technologies (which lose data when off power) are referred to as "memory", while slower persistent technologies are referred to as "storage". Even the first computer designs, Charles Babbage's Analytical Engine and Percy Ludgate's Analytical Machine, clearly distinguished between processing and memory (Babbage stored numbers as rotations of gears, while Ludgate stored numbers as displacements of rods in shuttles). This distinction was extended in the Von Neuman ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cache Performance Measurement And Metric

A CPU cache is a piece of hardware that reduces access time to data in memory by keeping some part of the frequently used data of the main memory in a 'cache' of smaller and faster memory. The performance of a computer system depends on the performance of all individual units—which include execution units like integer, branch and floating point, I/O units, bus, caches and memory systems. The gap between processor speed and main memory speed has grown exponentially. Until 2001–05, CPU speed, as measured by clock frequency, grew annually by 55%, whereas memory speed only grew by 7%. This problem is known as the memory wall. The motivation for a cache and its hierarchy is to bridge this speed gap and overcome the memory wall. The critical component in most high-performance computers is the cache. Since the cache exists to bridge the speed gap, its performance measurement and metrics are important in designing and choosing various parameters like cache size, associativity, replacem ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Hash Function

A hash function is any function that can be used to map data of arbitrary size to fixed-size values. The values returned by a hash function are called ''hash values'', ''hash codes'', ''digests'', or simply ''hashes''. The values are usually used to index a fixed-size table called a ''hash table''. Use of a hash function to index a hash table is called ''hashing'' or ''scatter storage addressing''. Hash functions and their associated hash tables are used in data storage and retrieval applications to access data in a small and nearly constant time per retrieval. They require an amount of storage space only fractionally greater than the total space required for the data or records themselves. Hashing is a computationally and storage space-efficient form of data access that avoids the non-constant access time of ordered and unordered lists and structured trees, and the often exponential storage requirements of direct access of state spaces of large or variable-length keys. Use o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cache Algorithms

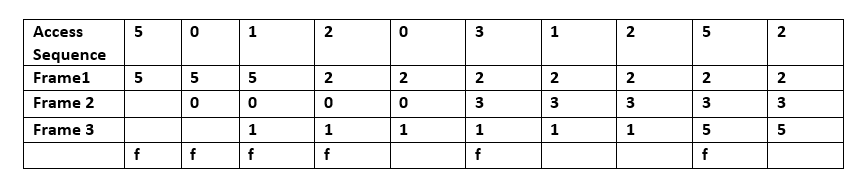

In computing, cache algorithms (also frequently called cache replacement algorithms or cache replacement policies) are optimizing instructions, or algorithms, that a computer program or a hardware-maintained structure can utilize in order to manage a cache of information stored on the computer. Caching improves performance by keeping recent or often-used data items in memory locations that are faster or computationally cheaper to access than normal memory stores. When the cache is full, the algorithm must choose which items to discard to make room for the new ones. Overview The average memory reference time is : T = m \times T_m + T_h + E where : m = miss ratio = 1 - (hit ratio) : T_m = time to make a main memory access when there is a miss (or, with multi-level cache, average memory reference time for the next-lower cache) : T_h= the latency: the time to reference the cache (should be the same for hits and misses) : E = various secondary effects, such as queuing effects in m ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Content-addressable Memory

Content-addressable memory (CAM) is a special type of computer memory used in certain very-high-speed searching applications. It is also known as associative memory or associative storage and compares input search data against a table of stored data, and returns the address of matching data. CAM is frequently used in networking devices where it speeds up forwarding information base and routing table operations. This kind of associative memory is also used in cache memory. In associative cache memory, both address and content is stored side by side. When the address matches, the corresponding content is fetched from cache memory. History Dudley Allen Buck invented the concept of content-addressable memory in 1955. Buck is credited with the idea of ''recognition unit''. Hardware associative array Unlike standard computer memory, random-access memory (RAM), in which the user supplies a memory address and the RAM returns the data word stored at that address, a CAM is designed suc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cache Hierarchy

Cache hierarchy, or multi-level caches, refers to a memory architecture that uses a hierarchy of memory stores based on varying access speeds to cache data. Highly requested data is cached in high-speed access memory stores, allowing swifter access by central processing unit (CPU) cores. Cache hierarchy is a form and part of memory hierarchy and can be considered a form of tiered storage. This design was intended to allow CPU cores to process faster despite the memory latency of main memory access. Accessing main memory can act as a bottleneck for CPU core performance as the CPU waits for data, while making all of main memory high-speed may be prohibitively expensive. High-speed caches are a compromise allowing high-speed access to the data most-used by the CPU, permitting a faster CPU clock. Background In the history of computer and electronic chip development, there was a period when increases in CPU speed outpaced the improvements in memory access speed. The gap between t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cache (computing)

In computing, a cache ( ) is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A ''cache hit'' occurs when the requested data can be found in a cache, while a ''cache miss'' occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective and to enable efficient use of data, caches must be relatively small. Nevertheless, caches have proven themselves in many areas of computing, because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested already, and spatial locality, where ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cache Coloring

In computer science, cache coloring (also known as page coloring) is the process of attempting to allocate free pages that are contiguous from the CPU cache's point of view, in order to maximize the total number of pages cached by the processor. Cache coloring is typically employed by low-level dynamic memory allocation code in the operating system, when mapping virtual memory to physical memory. A virtual memory subsystem that lacks cache coloring is less deterministic with regards to cache performance, as differences in page allocation from one program run to the next can lead to large differences in program performance. Details of operations A physically indexed CPU cache is designed such that addresses in adjacent physical memory blocks take different positions ("cache lines") in the cache, but this is not the case when it comes to virtual memory; when virtually adjacent but not physically adjacent memory blocks are allocated, they could potentially both take the same position ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |