
Convex Optimization

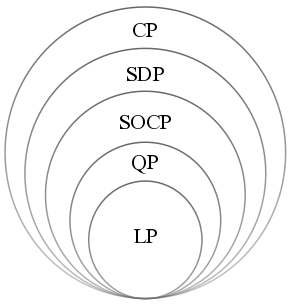

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard.

Definition (abstract form): a convex optimization problem is defined by two ingredients:

* The *objective function*, which is a real-valued convex function of *n* variables, $f : \mathcal{D} \subseteq \mathbb{R}^n \to \mathbb{R}$;
* The *feasible set*, which is a convex subset $C \subseteq \mathbb{R}^n$.

The goal of the problem is to find some $\mathbf{x}^* \in C$ attaining $\inf \{ f(\mathbf{x}) : \mathbf{x} \in C \}$. In general, there are three options regarding the existence of a solution:

* If such a point $\mathbf{x}^*$ exists, it is referred to as an *optimal point* or *solution*; the set of all optimal points is called the *optimal set*; and the problem is called *solvable*.
* If $f$ is unbou ...
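As a small illustration of the abstract form, the sketch below minimizes a convex function over a convex set using projected gradient descent. The objective $f(x) = (x - 3)^2$, the feasible interval $C = [0, 1]$, the step size, and all function names are assumptions chosen for the example; they do not come from the summary above.

```python
def project_onto_interval(x, lo, hi):
    """Euclidean projection of a scalar onto the convex set [lo, hi]."""
    return min(max(x, lo), hi)


def projected_gradient_descent(grad, x0, lo, hi, step=0.1, iters=200):
    """Take a gradient step, then project back onto [lo, hi], repeatedly."""
    x = project_onto_interval(x0, lo, hi)
    for _ in range(iters):
        x = project_onto_interval(x - step * grad(x), lo, hi)
    return x


def grad_f(x):
    """Gradient of the illustrative objective f(x) = (x - 3)^2."""
    return 2.0 * (x - 3.0)


# The unconstrained minimizer is x = 3, which lies outside C = [0, 1],
# so the constrained optimum sits on the boundary of the feasible set.
x_star = projected_gradient_descent(grad_f, x0=0.0, lo=0.0, hi=1.0)
print(x_star)
```

Because both the objective and the feasible set are convex, the point this iteration settles on is a global optimum rather than merely a local one.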

Mathematical Optimization

Mathematical optimization (alternatively spelled *optimisation*) or mathematical programming is the selection of a best element, with regard to some criteria, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. Optimization problems Opti ...

Second Order Cone Programming

A second-order cone program (SOCP) is a convex optimization problem of the form

$$
\begin{array}{ll}
\mbox{minimize} & f^T x \\
\mbox{subject to} & \lVert A_i x + b_i \rVert_2 \leq c_i^T x + d_i, \quad i = 1,\dots,m \\
& Fx = g
\end{array}
$$

where the problem parameters are $f \in \mathbb{R}^n$, $A_i \in \mathbb{R}^{n_i \times n}$, $b_i \in \mathbb{R}^{n_i}$, $c_i \in \mathbb{R}^n$, $d_i \in \mathbb{R}$, $F \in \mathbb{R}^{p \times n}$, and $g \in \mathbb{R}^p$. $x \in \mathbb{R}^n$ is the optimization variable, $\lVert x \rVert_2$ is the Euclidean norm, and $^T$ indicates transpose. The "second-order cone" in SOCP arises from the constraints, which are equivalent to requiring the affine function $(A x + b, c^T x + d)$ to lie in the second-order cone in $\mathbb{R}^{n_i + 1}$. SOCPs can be solved by interior point methods and, in general, can be solved more efficiently than semidefinite programming (SDP) problems. Some engineering applications of SOCP include filter design, antenna array weight design, truss design, and grasping force optimization in robotics. Applications in quantitative finance include ...
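To make the cone constraint concrete, here is a minimal sketch that checks whether a candidate point satisfies a single constraint $\lVert A x + b \rVert_2 \leq c^T x + d$. It only tests feasibility and is not a solver. The function name `soc_constraint_holds`, the tolerance, and the sample data (which reduce the constraint to the unit disk) are assumptions made for illustration.

```python
import math


def soc_constraint_holds(A, b, c, d, x, tol=1e-12):
    """Return True if ||A x + b||_2 <= c^T x + d, up to a small tolerance."""
    # Affine map A x + b, computed row by row.
    residual = [
        sum(a_ij * x_j for a_ij, x_j in zip(row, x)) + b_i
        for row, b_i in zip(A, b)
    ]
    lhs = math.sqrt(sum(r * r for r in residual))
    # Affine scalar c^T x + d.
    rhs = sum(c_j * x_j for c_j, x_j in zip(c, x)) + d
    return lhs <= rhs + tol


# Illustrative data: A = I, b = 0, c = 0, d = 1 gives ||x||_2 <= 1.
A = [[1.0, 0.0], [0.0, 1.0]]
b = [0.0, 0.0]
c = [0.0, 0.0]
d = 1.0

inside = soc_constraint_holds(A, b, c, d, [0.3, 0.4])   # norm is 0.5
outside = soc_constraint_holds(A, b, c, d, [1.0, 1.0])  # norm exceeds 1
print(inside, outside)
```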

Linear Algebra

Linear algebra is the branch of mathematics concerning linear equations such as $a_1 x_1 + \cdots + a_n x_n = b$, linear maps such as $(x_1, \ldots, x_n) \mapsto a_1 x_1 + \cdots + a_n x_n$, and their representations in vector spaces and through matrices. Linear algebra is central to almost all areas of mathematics. For instance, linear algebra is fundamental in modern presentations of geometry, including for defining basic objects such as lines, planes and rotations. Also, functional analysis, a branch of mathematical analysis, may be viewed as the application of linear algebra to function spaces. Linear algebra is also used in most sciences and fields of engineering because it allows modeling many natural phenomena, and computing efficiently with such models. For nonlinear systems, which cannot be modeled with linear algebra, it is often used for dealing with first-order a ...

Farkas' Lemma

In mathematics, Farkas' lemma is a solvability theorem for a finite system of linear inequalities. It was originally proven by the Hungarian mathematician Gyula Farkas. Farkas' lemma is the key result underpinning linear programming duality and has played a central role in the development of mathematical optimization (alternatively, mathematical programming). It is used, among other things, in the proof of the Karush–Kuhn–Tucker theorem in nonlinear programming. Remarkably, in the area of the foundations of quantum theory, the lemma also underlies the complete set of Bell inequalities in the form of necessary and sufficient conditions for the existence of a local hidden-variable theory, given data from any specific set of measurements. Generalizations of Farkas' lemma concern solvability theorems for convex inequalities, i.e., infinite systems of linear inequalities. Farkas' lemma belongs to a class of statements called "theorems of the alternative": a theo ...

Separating Hyperplane Theorem

In geometry, the hyperplane separation theorem is a theorem about disjoint convex sets in *n*-dimensional Euclidean space. There are several rather similar versions. In one version of the theorem, if both these sets are closed and at least one of them is compact, then there is a hyperplane in between them and even two parallel hyperplanes in between them separated by a gap. In another version, if both disjoint convex sets are open, then there is a hyperplane in between them, but not necessarily any gap. An axis which is orthogonal to a separating hyperplane is a separating axis, because the orthogonal projections of the convex bodies onto the axis are disjoint. The hyperplane separation theorem is due to Hermann Minkowski. The Hahn–Banach separation theorem generalizes the result to topological vector spaces. A related result is the supporting hyperplane theorem. In the context of support-vector machines, the *optimally separating hyperplane* or *maximum-margin hype ...

Hilbert Projection Theorem

In mathematics, the Hilbert projection theorem is a famous result of convex analysis that says that for every vector $x$ in a Hilbert space $H$ and every nonempty closed convex $C \subseteq H$, there exists a unique vector $m \in C$ for which $\| c - x \|$ is minimized over the vectors $c \in C$; that is, such that $\| m - x \| \leq \| c - x \|$ for every $c \in C$.

Finite dimensional case: some intuition for the theorem can be obtained by considering the first order condition of the optimization problem. Consider a finite dimensional real Hilbert space $H$ with a subspace $C$ and a point $x$. If $m \in C$ is a minimizer or minimum point of the function $N : C \to \mathbb{R}$ defined by $N(c) := \| c - x \|$ (which is the same as the minimum point of $c \mapsto \| c - x \|^2$), then deri ...
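The finite dimensional case can be checked numerically. The sketch below assumes the simplest possible setting, $H = \mathbb{R}^2$ with $C$ the line spanned by a vector $u$, where the closest point has the closed form $m = \frac{\langle x, u \rangle}{\langle u, u \rangle} u$. The vectors, the sample candidates, and the helper names are illustrative assumptions.

```python
def dot(u, v):
    """Standard inner product on R^n."""
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


def distance(p, q):
    """Euclidean distance induced by the inner product."""
    diff = [p_i - q_i for p_i, q_i in zip(p, q)]
    return dot(diff, diff) ** 0.5


def project_onto_line(x, u):
    """Closest point to x on the subspace C = span{u}."""
    scale = dot(x, u) / dot(u, u)
    return [scale * u_i for u_i in u]


x = [3.0, 4.0]
u = [1.0, 1.0]
m = project_onto_line(x, u)

# First order condition: the residual x - m is orthogonal to the subspace.
residual = [x_i - m_i for x_i, m_i in zip(x, m)]
orthogonality_gap = abs(dot(residual, u))

# No other sampled point t * u of the subspace is closer to x than m.
candidates = [[t * u_i for u_i in u] for t in (-1.0, 0.0, 2.0, 3.0, 5.0)]
is_closest = all(distance(m, x) <= distance(c, x) for c in candidates)
print(m, orthogonality_gap, is_closest)
```

The orthogonality check mirrors the first order condition mentioned above; the sampled comparison is only a sanity check, not a proof of minimality.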

Functional Analysis

Functional analysis is a branch of mathematical analysis, the core of which is formed by the study of vector spaces endowed with some kind of limit-related structure (for example, inner product, norm, or topology) and the linear functions defined on these spaces and suitably respecting these structures. The historical roots of functional analysis lie in the study of spaces of functions and the formulation of properties of transformations of functions such as the Fourier transform as transformations defining, for example, continuous or unitary operators between function spaces. This point of view turned out to be particularly useful for the study of differential and integral equations. The usage of the word *functional* as a noun goes back to the calculus of v ...

Global Minimum

In mathematical analysis, the maximum and minimum of a function are, respectively, the greatest and least value taken by the function. Known generically as extrema, they may be defined either within a given range (the *local* or *relative* extrema) or on the entire domain (the *global* or *absolute* extrema) of a function. Pierre de Fermat was one of the first mathematicians to propose a general technique, adequality, for finding the maxima and minima of functions. As defined in set theory, the maximum and minimum of a set are the greatest and least elements in the set, respectively. Unbounded infinite sets, such as the set of real numbers, have no minimum or maximum. In statistics, the corresponding concept is the sample maximum and minimum.

Definition: a real-valued function $f$ defined on a domain $X$ has a global (or absolute) maximum point at $x^*$ if $f(x^*) \geq f(x)$ for all $x$ in $X$. Similarly, the function has a global (or absolute) minimum point at $x^*$, ...

Entropy Maximization

The principle of maximum entropy states that the probability distribution which best represents the current state of knowledge about a system is the one with largest entropy, in the context of precisely stated prior data (such as a proposition that expresses testable information). Another way of stating this: take precisely stated prior data or testable information about a probability distribution function. Consider the set of all trial probability distributions that would encode the prior data. According to this principle, the distribution with maximal information entropy is the best choice.

History: the principle was first expounded by E. T. Jaynes in two papers in 1957, where he emphasized a natural correspondence between statistical mechanics and information theory. In particular, Jaynes argued that the Gibbsian method of statistical mechanics is sound by also arguing that the entropy of statistical mechanics and the information entropy of information theory are the same ...

Geometric Programming

A geometric program (GP) is an optimization problem of the form

$$
\begin{array}{ll}
\mbox{minimize} & f_0(x) \\
\mbox{subject to} & f_i(x) \leq 1, \quad i = 1, \ldots, m \\
& g_i(x) = 1, \quad i = 1, \ldots, p,
\end{array}
$$

where $f_0, \dots, f_m$ are posynomials and $g_1, \dots, g_p$ are monomials. In the context of geometric programming (unlike standard mathematics), a monomial is a function from $\mathbb{R}_{++}^n$ to $\mathbb{R}$ defined as

$$
x \mapsto c \, x_1^{a_1} x_2^{a_2} \cdots x_n^{a_n}
$$

where $c > 0$ and $a_i \in \mathbb{R}$. A posynomial is any sum of monomials (S. Boyd, S. J. Kim, L. Vandenberghe, and A. Hassibi, *A Tutorial on Geometric Programming*; retrieved 20 October 2019). Geometric programming is closely related to convex optimization: any GP can be made convex by means of a change of variables. GPs have numerous applications, including component sizing in IC design, aircraft design, maximum likelihood estimation for logistic regression in statistics, and parameter tuning of positive linear systems in control theory. Convex form Geometric program ...
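The change of variables mentioned above is usually taken to be $y_i = \log x_i$: the logarithm of a monomial then becomes the affine function $\log c + \sum_i a_i y_i$. The sketch below checks this identity numerically for one monomial. The coefficient, exponents, evaluation point, and function names are illustrative assumptions, and the snippet covers only the monomial case, not a full GP.

```python
import math


def monomial(c, a, x):
    """Evaluate c * x_1^{a_1} * ... * x_n^{a_n} for c > 0 and x > 0."""
    value = c
    for a_i, x_i in zip(a, x):
        value *= x_i ** a_i
    return value


def log_monomial_affine(c, a, y):
    """Affine function log(c) + a^T y, where y_i = log(x_i)."""
    return math.log(c) + sum(a_i * y_i for a_i, y_i in zip(a, y))


# Illustrative data: exponents may be any real numbers, including negatives.
c = 2.5
a = [1.0, -0.5, 2.0]
x = [1.2, 3.0, 0.7]
y = [math.log(x_i) for x_i in x]

# Both sides agree up to floating-point rounding.
gap = abs(math.log(monomial(c, a, x)) - log_monomial_affine(c, a, y))
print(gap)
```

Under the same substitution, the logarithm of a posynomial becomes a log-sum-exp of affine functions, which is convex; that is the sense in which a GP can be made convex.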