
Canny Edge Detection

The Canny edge detector is an edge detection operator that uses a multi-stage algorithm to detect a wide range of edges in images. It was developed by John F. Canny in 1986. Canny also produced a computational theory of edge detection explaining why the technique works.

Development

Canny edge detection is a technique to extract useful structural information from different vision objects and dramatically reduce the amount of data to be processed. It has been widely applied in various computer vision systems. Canny found that the requirements for applying edge detection in diverse vision systems are relatively similar. Thus, an edge detection solution that addresses these requirements can be implemented in a wide range of situations. The general criteria for edge detection include:

1. Detection of edges with a low error rate, which means that the detection should accurately catch as many of the edges shown in the image as possible.
2. The edge point detected from the operator s ...
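Implementations of the detector usually follow these stages: Gaussian smoothing, gradient estimation, non-maximum suppression, double thresholding, and edge tracking by hysteresis. The following is a minimal pure-Python sketch of that pipeline for a grayscale image stored as a list of lists. Several choices are illustrative assumptions and are not taken from the text: the 3x3 binomial blur standing in for a Gaussian, the Sobel kernels, the four-sector direction quantization, and the `low`/`high` thresholds. The helper names are hypothetical.

```python
import math
from collections import deque


def correlate3x3(img, k):
    """Apply a 3x3 kernel (cross-correlation) to a 2D list, clamping at the border."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for i in range(3):
                for j in range(3):
                    rr = min(max(r + i - 1, 0), h - 1)
                    cc = min(max(c + j - 1, 0), w - 1)
                    acc += k[i][j] * img[rr][cc]
            out[r][c] = acc
    return out


def canny(img, low, high):
    """Return a 2D list of booleans marking edge pixels (a sketch, not a reference implementation)."""
    h, w = len(img), len(img[0])
    # 1. Smooth with a 3x3 binomial kernel (a small approximation of a Gaussian).
    blur = [[1 / 16, 2 / 16, 1 / 16],
            [2 / 16, 4 / 16, 2 / 16],
            [1 / 16, 2 / 16, 1 / 16]]
    s = correlate3x3(img, blur)
    # 2. Sobel gradients; gy grows downward because row indices grow downward.
    gx = correlate3x3(s, [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]])
    gy = correlate3x3(s, [[-1, -2, -1], [0, 0, 0], [1, 2, 1]])
    mag = [[math.hypot(gx[r][c], gy[r][c]) for c in range(w)] for r in range(h)]
    # 3. Non-maximum suppression: keep a pixel only if it is a local maximum
    #    along the gradient direction, quantized to 0, 45, 90 or 135 degrees.
    thin = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            angle = math.degrees(math.atan2(gy[r][c], gx[r][c])) % 180
            if angle < 22.5 or angle >= 157.5:
                dr, dc = 0, 1
            elif angle < 67.5:
                dr, dc = 1, 1
            elif angle < 112.5:
                dr, dc = 1, 0
            else:
                dr, dc = 1, -1
            m = mag[r][c]
            # Strict ">" on one side breaks ties, so a two-pixel ridge is thinned to one.
            if m > mag[r - dr][c - dc] and m >= mag[r + dr][c + dc]:
                thin[r][c] = m
    # 4. Double threshold: pixels >= high are strong edges and seed the search.
    edges = [[False] * w for _ in range(h)]
    queue = deque((r, c) for r in range(h) for c in range(w) if thin[r][c] >= high)
    for r, c in queue:
        edges[r][c] = True
    # 5. Hysteresis: grow from strong pixels into 8-connected pixels >= low.
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < h and 0 <= nc < w and not edges[nr][nc]
                        and thin[nr][nc] >= low):
                    edges[nr][nc] = True
                    queue.append((nr, nc))
    return edges
```

Consider an 8x8 image whose left four columns are 0 and right four columns are 100. Calling `canny(img, 50, 200)` on it marks a single column of edge pixels at the step: column 3, rows 1 to 6. The border rows are skipped by the suppression loop.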

Edge Detection

Edge or EDGE may refer to:

Technology

Computing

* Edge computing, a network load-balancing system
* Edge device, an entry point to a computer network
* Adobe Edge, a graphical development application
* Microsoft Edge, a web browser developed by Microsoft
* Microsoft Edge Legacy, a discontinued web browser developed by Microsoft
* EdgeHTML, the layout engine used in Microsoft Edge Legacy
* ThinkPad Edge, a Lenovo laptop computer series marketed from 2010
* Silhouette edge, in computer graphics, a feature of a 3D body projected onto a 2D plane
* Explicit data graph execution, a computer instruction set architecture

Telecommunication(s)

* EDGE (telecommunication), a 2G digital cellular communications technology
* Edge Wireless, an American mobile phone provider
* Motorola Edge series, a series of smartphones made by Motorola
* Samsung Galaxy Note Edge, a phablet made by Samsung
* Samsung Galaxy S7 Edge or Samsung Galaxy S6 Edge, smartphones made by Samsung
* Ubuntu Edg ...

Prewitt Operator

The Prewitt operator is used in image processing, particularly within edge detection algorithms. Technically, it is a discrete differentiation operator, computing an approximation of the gradient of the image intensity function. At each point in the image, the result of the Prewitt operator is either the corresponding gradient vector or the norm of this vector. The Prewitt operator is based on convolving the image with a small, separable, integer-valued filter in the horizontal and vertical directions. Like the Sobel and Kayyali operators, it is therefore relatively inexpensive to compute. On the other hand, the gradient approximation it produces is relatively crude, in particular for high-frequency variations in the image. The Prewitt operator was developed by Judith M. S. Prewitt.

Simplified description

In simple terms, the operator calculates the gradient of the image intensity at each point, giving the direction of the largest possible increase from li ...
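Below is a minimal sketch of the two 3x3 Prewitt masks applied to a grayscale image stored as a list of lists. Three details are assumptions made for illustration: the mask orientation (a positive Gx means intensity increases to the right), leaving border pixels at zero, and the helper names.

```python
import math

# Prewitt masks written for cross-correlation. Positive gx means intensity rises
# to the right and positive gy means it rises downward (an assumed sign convention;
# true convolution flips the masks and therefore the signs).
PREWITT_X = [[-1, 0, 1],
             [-1, 0, 1],
             [-1, 0, 1]]
PREWITT_Y = [[-1, -1, -1],
             [0, 0, 0],
             [1, 1, 1]]


def correlate3x3(image, kernel, r, c):
    """Weighted sum of the 3x3 neighbourhood centred on (r, c)."""
    return sum(kernel[i][j] * image[r + i - 1][c + j - 1]
               for i in range(3) for j in range(3))


def prewitt(image):
    """Return (magnitude, direction) grids; border pixels are left at 0."""
    rows, cols = len(image), len(image[0])
    mag = [[0.0] * cols for _ in range(rows)]
    ang = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = correlate3x3(image, PREWITT_X, r, c)
            gy = correlate3x3(image, PREWITT_Y, r, c)
            mag[r][c] = math.hypot(gx, gy)   # norm of the gradient vector
            ang[r][c] = math.atan2(gy, gx)   # direction in radians
    return mag, ang
```

Take a 3x4 image whose rows are all `[0, 0, 10, 10]`. Both interior pixels get magnitude 30 and direction 0, meaning the gradient points to the right. Each mask is an outer product of `[1, 1, 1]` and `[-1, 0, 1]`, which is the separability the text mentions.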

Infinite Impulse Response

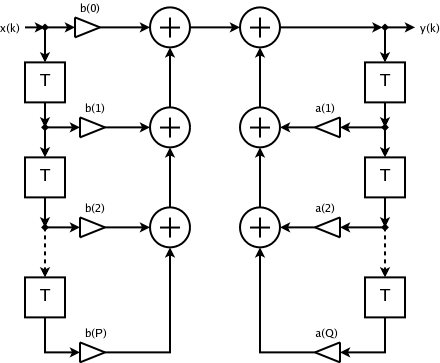

Infinite impulse response (IIR) is a property of many linear time-invariant systems: their impulse response h(t) does not become exactly zero past a certain point but continues indefinitely. This is in contrast to a finite impulse response (FIR) system, in which the impulse response does become exactly zero at times t > T for some finite T, thus being of finite duration. Common examples of linear time-invariant systems are most electronic and digital filters. Systems with this property are known as IIR systems or IIR filters.

In practice, the impulse response, even of IIR systems, usually approaches zero and can be neglected past a certain point. However, the physical systems that give rise to IIR or FIR responses are dissimilar, and therein lies the importance of the distinction. For instance, analog electronic filters composed of resistors, capacitors, and/or inductors (and perhaps linear amplifiers) are generally IIR filte ...
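As a hedged illustration, consider the one-pole recursive filter y[n] = x[n] + a·y[n-1]. The filter and its coefficient are chosen only for demonstration. Its impulse response is h[n] = a^n, which for 0 < |a| < 1 decays toward zero but never becomes exactly zero. Exact rational arithmetic via `fractions.Fraction` makes this visible. With floating point the values would eventually underflow to zero, which echoes the remark that the tail can be neglected in practice.

```python
from fractions import Fraction


def first_order_iir(x, a):
    """Hypothetical one-pole filter y[n] = x[n] + a * y[n-1], with y[-1] = 0.

    The feedback term a * y[n-1] is what makes the impulse response infinite.
    """
    y, prev = [], 0
    for sample in x:
        prev = sample + a * prev
        y.append(prev)
    return y


# Impulse response: feed a unit impulse followed by zeros.
impulse = [1] + [0] * 9
h = first_order_iir(impulse, Fraction(1, 2))
```

Each output is half the previous one: 1, 1/2, 1/4, and so on. No finite T exists after which the response is exactly zero.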

Finite Impulse Response

In signal processing, a finite impulse response (FIR) filter is a filter whose impulse response (or response to any finite-length input) is of finite duration, because it settles to zero in finite time. This is in contrast to infinite impulse response (IIR) filters, which may have internal feedback and may continue to respond indefinitely (usually decaying). The impulse response of an Nth-order discrete-time FIR filter is its output in response to a Kronecker delta input. It lasts exactly N + 1 samples (from the first nonzero element through the last nonzero element) and then settles to zero. FIR filters can be discrete-time or continuous-time, and digital or analog.

Definition

For a causal discrete-time FIR filter of order N, each value of the output sequence is a weighted sum of the most recent input values:

$$y[n] = b_0\,x[n] + b_1\,x[n-1] + \cdots + b_N\,x[n-N] = \sum_{i=0}^{N} b_i\,x[n-i]$$

where:

* x[n] is the input signal,
* y[n] is the outpu ...
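The weighted sum can be computed directly. The sketch below is a straightforward, unoptimized Python rendering of the definition. It assumes x[k] = 0 for k < 0 and returns an output the same length as the input. `fir_filter` is a hypothetical name.

```python
def fir_filter(x, b):
    """Causal FIR filter: y[n] = sum_{i=0}^{N} b[i] * x[n - i], with N = len(b) - 1.

    Inputs before the start of x are treated as zero.
    """
    N = len(b) - 1
    y = []
    for n in range(len(x)):
        acc = 0
        for i in range(N + 1):
            if n - i >= 0:          # skip samples before the signal starts
                acc += b[i] * x[n - i]
        y.append(acc)
    return y
```

Feeding a unit impulse `[1, 0, 0, 0, 0, 0]` to the order-2 filter `b = [1, 2, 3]` returns `[1, 2, 3, 0, 0, 0]`. The response lasts exactly N + 1 = 3 samples, and its values are the coefficients themselves.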

Scale Space

Scale-space theory is a framework for multi-scale signal representation developed by the computer vision, image processing and signal processing communities, with complementary motivations from physics and biological vision. It is a formal theory for handling image structures at different scales. An image is represented as a one-parameter family of smoothed images, the scale-space representation, parametrized by the size of the smoothing kernel used for suppressing fine-scale structures. The parameter t in this family is referred to as the scale parameter. The interpretation is that image structures of spatial size smaller than about \sqrt{t} have largely been smoothed away in the scale-space level at scale t. The main type of scale space is the linear (Gaussian) scale space. It has wide applicability, and it has the attractive property of being derivable from a small set of scale-space axioms. The corresponding scale-space framework encompasses a th ...
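Here is a 1D sketch of a Gaussian scale-space level. It assumes the kernel is the sampled continuous Gaussian with variance t, truncated at about four standard deviations and renormalized, with border samples replicated. The sampled Gaussian is a common approximation; Lindeberg's discrete analogue uses modified Bessel functions instead. Function names are hypothetical.

```python
import math


def gaussian_kernel(t):
    """Sampled Gaussian with variance t (t > 0), truncated near 4 standard deviations.

    Returns (weights, radius); the weights are renormalised to sum to 1.
    """
    radius = max(1, math.ceil(4 * math.sqrt(t)))
    weights = [math.exp(-(k * k) / (2 * t)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights], radius


def scale_space_level(signal, t):
    """Smooth a 1D signal to scale t, replicating the end samples at the borders."""
    kernel, radius = gaussian_kernel(t)
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp index at the ends
            acc += w * signal[j]
        out.append(acc)
    return out
```

Smoothing an alternating signal such as `[1, -1, 1, -1, ...]` illustrates the scale interpretation. At t = 0.5 the one-sample oscillation is attenuated but still visible. At t = 4 it is essentially removed, consistent with structures smaller than about \sqrt{t} being smoothed away.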

Curvelet

Curvelets are a non-adaptive technique for multi-scale object representation. As an extension of the wavelet concept, they are becoming popular in similar fields, namely image processing and scientific computing.

Wavelets generalize the Fourier transform by using a basis that represents both location and spatial frequency. For 2D or 3D signals, directional wavelet transforms go further by using basis functions that are also localized in orientation. A curvelet transform differs from other directional wavelet transforms in that the degree of localisation in orientation varies with scale. In particular, fine-scale basis functions are long ridges. The shape of the basis functions at scale j is 2^{-j} by 2^{-j/2}, so the fine-scale bases are skinny ridges with a precisely determined orientation.

Curvelets are an appropriate basis for representing images (or other functions) which are smooth apart from singularities along smoo ...

Histogram

A histogram is a visual representation of the distribution of quantitative data. To construct a histogram, the first step is to "bin" (or "bucket") the range of values. That is, the entire range of values is divided into a series of intervals, and then the number of values falling into each interval is counted. The bins are usually specified as consecutive, non-overlapping intervals of a variable. The bins (intervals) are adjacent and are typically (but not required to be) of equal size.

Histograms give a rough sense of the density of the underlying distribution of the data. They are often used for density estimation: estimating the probability density function of the underlying variable. The total area of a histogram used for probability density is always normalized to 1. If the lengths of the intervals on the x-axis are all 1, then a histogram is identical to a relative frequency plot. Histograms are sometimes confused with bar charts. In a his ...
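Below is a minimal sketch of equal-width binning and of the density normalization that makes the total area equal to 1. The bin edge convention is an assumption, and libraries differ on this detail: bins are half-open, and the last bin also includes the upper limit. Function names are hypothetical.

```python
def histogram(values, lo, hi, num_bins):
    """Count values falling into num_bins equal-width bins spanning [lo, hi].

    Each bin is half-open [left, left + width) except the last, which also
    includes hi (an assumed convention). Values outside [lo, hi] are ignored.
    Returns (counts, width).
    """
    width = (hi - lo) / num_bins
    counts = [0] * num_bins
    for v in values:
        if lo <= v <= hi:
            # Floor into a bin index; clamp hi itself into the last bin.
            idx = min(int((v - lo) / width), num_bins - 1)
            counts[idx] += 1
    return counts, width


def density(counts, width):
    """Rescale counts so the total area (sum of height * width) equals 1."""
    total = sum(counts)
    return [c / (total * width) for c in counts]
```

As an example, binning `[0.5, 1.5, 1.7, 3.9, 4.0]` into four unit-width bins over [0, 4] gives counts `[1, 2, 0, 2]`. With unit widths, the density heights are simply the relative frequencies 0.2, 0.4, 0 and 0.4.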

Otsu's Method

In computer vision and image processing, Otsu's method, named after Nobuyuki Otsu, is used to perform automatic image thresholding. In the simplest form, the algorithm returns a single intensity threshold that separates pixels into two classes: foreground and background. This threshold is determined by minimizing intra-class intensity variance, or equivalently, by maximizing inter-class variance. Otsu's method is a one-dimensional discrete analogue of Fisher's discriminant analysis and is related to the Jenks optimization method. It is also equivalent to a globally optimal k-means performed on the intensity histogram. The extension to multi-level thresholding was described in the original paper, and computationally efficient implementations have since been proposed.

Otsu's method

The algorithm exhaustively searches for the threshold that minimizes the intra-class variance, defined as a weigh ...
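The sketch below performs the exhaustive search over an 8-bit intensity histogram. It maximizes the between-class variance w0·w1·(mu0 - mu1)², which is equivalent to minimizing the weighted intra-class variance. Several details are assumptions: pixels are integers in [0, levels), intensities <= t form class 0, and ties resolve to the lowest threshold. `otsu_threshold` is a hypothetical name.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold t maximising between-class variance.

    Pixels with intensity <= t form class 0 and the rest form class 1.
    Ties keep the lowest t because the comparison below is strict.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * count for i, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0      # pixel count in class 0
    sum0 = 0    # intensity sum in class 0
    for t in range(levels - 1):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue                        # one class is empty: no valid split
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between = (w0 / total) * (w1 / total) * (mu0 - mu1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t
```

For an image with half its pixels at 10 and half at 200, every threshold from 10 to 199 yields the same perfect split. The search therefore returns 10, and pixels above it are classed as foreground.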

Finite Difference

A finite difference is a mathematical expression of the form f(x + b) - f(x + a). Finite differences (or the associated difference quotients) are often used as approximations of derivatives, such as in numerical differentiation. The difference operator, commonly denoted \Delta, is the operator that maps a function f to the function \Delta[f] defined by \Delta[f](x) = f(x+1) - f(x). A difference equation is a functional equation that involves the finite difference operator in the same way as a differential equation involves derivatives. There are many similarities between difference equations and differential equations. Certain recurrence relations can be written as difference equations by replacing iteration notation with finite differences. In numerical analysis, finite differences are widely used for approximating derivatives, and the term "finite difference" is often used a ...
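This short sketch implements the unit-step forward difference operator \Delta. It also implements two common difference quotients used to approximate f'(x). The step size h and the function names are illustrative assumptions.

```python
import math


def delta(f):
    """Forward difference operator with unit step: Delta[f](x) = f(x + 1) - f(x)."""
    return lambda x: f(x + 1) - f(x)


def forward_diff(f, x, h):
    """(f(x + h) - f(x)) / h: first-order accurate approximation of f'(x)."""
    return (f(x + h) - f(x)) / h


def central_diff(f, x, h):
    """(f(x + h) - f(x - h)) / (2h): second-order accurate approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


# Usage: compare approximation errors for f = sin at x = 1, where f' = cos.
err_forward = abs(forward_diff(math.sin, 1.0, 1e-3) - math.cos(1.0))
err_central = abs(central_diff(math.sin, 1.0, 1e-3) - math.cos(1.0))
```

For f(x) = x², `delta(f)(3)` is 16 - 9 = 7, matching \Delta[x^2](x) = 2x + 1. The central quotient's error shrinks like h² rather than h, so `err_central` comes out much smaller than `err_forward`.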

Connected-component Labeling

Connected-component labeling (CCL) is also known as connected-component analysis (CCA), blob extraction, region labeling, blob discovery, or region extraction. It is an algorithmic application of graph theory, where subsets of connected components are uniquely labeled based on a given heuristic. Connected-component labeling is not to be confused with segmentation.

Connected-component labeling is used in computer vision to detect connected regions in binary digital images, although color images and data with higher dimensionality can also be processed. When integrated into an image recognition system or human-computer interaction interface, connected-component labeling can operate on a variety of information. Blob extraction is generally performed on the binary image produced by a thresholding step, but it can be applied to gray-scale and color images as well. Blobs may be counted, filtered, and tracked. Blob extraction is related to but distinct from blob detection.

Overview

A graph, con ...
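Here is a sketch of labeling a binary image stored as a list of lists, using breadth-first flood fill. The choice of 4-connectivity is an assumption, so diagonal neighbours are not connected. Two-pass union-find algorithms are a common alternative. `label_components` is a hypothetical name.

```python
from collections import deque


def label_components(grid):
    """Label 4-connected regions of nonzero pixels in a binary 2D list.

    Returns (labels, count): labels[r][c] is 0 for background and 1..count
    for foreground pixels, assigned in row-major scan order.
    """
    rows, cols = len(grid), len(grid[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] and not labels[r][c]:
                count += 1                      # new, unlabeled blob found
                labels[r][c] = count
                queue = deque([(r, c)])
                while queue:                    # flood-fill the whole blob
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and grid[ny][nx] and not labels[ny][nx]):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count
```

Two diagonally touching pixels, as in `[[1, 0], [0, 1]]`, form two separate blobs under this 4-connectivity assumption. With 8-connectivity they would form one.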

Atan2

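In computing and mathematics, the function atan2 is the 2-argument arctangent. By definition, \theta = \operatorname{atan2}(y, x) is the angle measure between the positive x-axis and the ray from the origin to the point (x, y) in the Cartesian plane. It is given in radians, with -\pi < \theta \le \pi. Piecewise:

$$\operatorname{atan2}(y, x) = \begin{cases} \arctan\left(\frac{y}{x}\right) & \text{if } x > 0, \\ \arctan\left(\frac{y}{x}\right) + \pi & \text{if } x < 0 \text{ and } y \ge 0, \\ \arctan\left(\frac{y}{x}\right) - \pi & \text{if } x < 0 \text{ and } y < 0, \\ +\frac{\pi}{2} & \text{if } x = 0 \text{ and } y > 0, \\ -\frac{\pi}{2} & \text{if } x = 0 \text{ and } y < 0, \\ \text{undefined} & \text{if } x = 0 \text{ and } y = 0. \end{cases}$$

Instead of the tangent, it can be convenient to use the half-tangent t = \tan(\theta/2) as a representation of an angle, partly because the angle has a unique half-tangent:

$$t = \tan\left(\tfrac{1}{2}\operatorname{atan2}(y, x)\right) = \frac{y}{\sqrt{x^2 + y^2} + x} = \frac{\sqrt{x^2 + y^2} - x}{y}$$

(See tangent half-angle formula.) The expression with y in the denominator should be used when x < 0 and y \ne 0. This avoids possible loss of significance in computing \sqrt{x^2 + y^2} + x. When an atan2 function is unavailable, it can be computed as twice the arctangent of the half-tangent, \theta = 2\arctan t. That is,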