|

BrookGPU

The Brook programming language and its implementation BrookGPU were early and influential attempts to enable general-purpose computing on graphics processing units. Brook, developed at Stanford University graphics group, was a compiler and runtime implementation of a stream programming language targeting modern, highly parallel GPUs such as those found on ATI or Nvidia graphics cards. BrookGPU compiled programs written using the Brook stream programming language, which is a variant of ANSI C. It could target OpenGL v1.3+, DirectX v9+ or AMD's Close to Metal for the computational backend and ran on both Microsoft Windows and Linux. For debugging, BrookGPU could also simulate a virtual graphics card on the CPU. Status The last major beta release (v0.4) was in October 2004 but renewed development began and stopped again in November 2007 with a v0.5 beta 1 release. The new features of v0.5 include a much upgraded and faster OpenGL backend which uses framebuffer objects instead ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Stream Processing

In computer science, stream processing (also known as event stream processing, data stream processing, or distributed stream processing) is a programming paradigm which views data streams, or sequences of events in time, as the central input and output objects of computation. Stream processing encompasses dataflow programming, reactive programming, and distributed data processing. Stream processing systems aim to expose parallel processing for data streams and rely on streaming algorithms for efficient implementation. The software stack for these systems includes components such as programming models and query languages, for expressing computation; stream management systems, for distribution and scheduling; and hardware components for acceleration including floating-point units, graphics processing units, and field-programmable gate arrays. The stream processing paradigm simplifies parallel software and hardware by restricting the parallel computation that can be performed. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Lib Sh

Sh was an early metaprogramming language for programmable GPUs. It offered a general-purpose programming language, following a stream-processing model. Programs written in Sh could either run on CPUs or GPUs, obviating the need to write programs in a mix of two programming languages as was the case with earlier GPU programming systems such as Cg or HLSL. As of August 2006, it is no longer maintained. RapidMind Inc. was formed to commercialize the research behind Sh. RapidMind was then bought by Intel and ceased Sh development as well. See also *BrookGPU *CUDA * Close to Metal *OpenCL OpenCL (Open Computing Language) is a framework for writing programs that execute across heterogeneous platforms consisting of central processing units (CPUs), graphics processing units (GPUs), digital signal processors (DSPs), field-prog ... * RapidMind References External links * GPGPU GPGPU libraries {{Compu-graphics-stub ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

OpenCL

OpenCL (Open Computing Language) is a framework for writing programs that execute across heterogeneous platforms consisting of central processing units (CPUs), graphics processing units (GPUs), digital signal processors (DSPs), field-programmable gate arrays (FPGAs) and other processors or hardware accelerators. OpenCL specifies programming languages (based on C99, C++14 and C++17) for programming these devices and application programming interfaces (APIs) to control the platform and execute programs on the compute devices. OpenCL provides a standard interface for parallel computing using task- and data-based parallelism. OpenCL is an open standard maintained by the non-profit technology consortium Khronos Group. Conformant implementations are available from Altera, AMD, ARM, Creative, IBM, Imagination, Intel, Nvidia, Qualcomm, Samsung, Vivante, Xilinx, and ZiiLABS. Overview OpenCL views a computing system as consisting of a number of ''compute devices' ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

General-purpose Computing On Graphics Processing Units

General-purpose computing on graphics processing units (GPGPU, or less often GPGP) is the use of a graphics processing unit (GPU), which typically handles computation only for computer graphics, to perform computation in applications traditionally handled by the central processing unit (CPU). The use of multiple video cards in one computer, or large numbers of graphics chips, further parallelizes the already parallel nature of graphics processing. Essentially, a GPGPU pipeline is a kind of parallel processing between one or more GPUs and CPUs that analyzes data as if it were in image or other graphic form. While GPUs operate at lower frequencies, they typically have many times the number of cores. Thus, GPUs can process far more pictures and graphical data per second than a traditional CPU. Migrating data into graphical form and then using the GPU to scan and analyze it can create a large speedup. GPGPU pipelines were developed at the beginning of the 21st century for grap ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

CUDA

CUDA (or Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) that allows software to use certain types of graphics processing units (GPUs) for general purpose processing, an approach called general-purpose computing on GPUs ( GPGPU). CUDA is a software layer that gives direct access to the GPU's virtual instruction set and parallel computational elements, for the execution of compute kernels. CUDA is designed to work with programming languages such as C, C++, and Fortran. This accessibility makes it easier for specialists in parallel programming to use GPU resources, in contrast to prior APIs like Direct3D and OpenGL, which required advanced skills in graphics programming. CUDA-powered GPUs also support programming frameworks such as OpenMP, OpenACC and OpenCL; and HIP by compiling such code to CUDA. CUDA was created by Nvidia. When it was first introduced, the name was an acronym for Compute Unified Device A ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

GPGPU

General-purpose computing on graphics processing units (GPGPU, or less often GPGP) is the use of a graphics processing unit (GPU), which typically handles computation only for computer graphics, to perform computation in applications traditionally handled by the central processing unit (CPU). The use of multiple video cards in one computer, or large numbers of graphics chips, further parallelizes the already parallel nature of graphics processing. Essentially, a GPGPU pipeline is a kind of parallel processing between one or more GPUs and CPUs that analyzes data as if it were in image or other graphic form. While GPUs operate at lower frequencies, they typically have many times the number of cores. Thus, GPUs can process far more pictures and graphical data per second than a traditional CPU. Migrating data into graphical form and then using the GPU to scan and analyze it can create a large speedup. GPGPU pipelines were developed at the beginning of the 21st century for grap ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Close To Metal

In computing, Close To Metal ("CTM" in short, originally called ''Close-to-the-Metal'') is the name of a beta version of a low-level programming interface developed by ATI, now the AMD Graphics Product Group, aimed at enabling GPGPU computing. CTM was short-lived, and the first production version of AMD's GPGPU technology is now called AMD Stream SDK, or rather the current AMD APP SDK for Windows and Linux 32-bit and 64-bit. APP stands for "Accelerated Parallel Processing" and also targets Heterogeneous System Architecture. Overview Close To Metal, originally called THIN (Thin Hardware INterface) and Data Parallel Virtual Machine, gave developers direct access to the native instruction set and memory of the massively parallel computational elements in modern AMD video cards. CTM bypassed the graphics-centric DirectX and OpenGL APIs for the GPGPU programmer to expose previously unavailable low-level functionality, including direct control of the stream processors/ALUs and the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Linux

Linux ( or ) is a family of open-source Unix-like operating systems based on the Linux kernel, an operating system kernel first released on September 17, 1991, by Linus Torvalds. Linux is typically packaged as a Linux distribution, which includes the kernel and supporting system software and libraries, many of which are provided by the GNU Project. Many Linux distributions use the word "Linux" in their name, but the Free Software Foundation uses the name "GNU/Linux" to emphasize the importance of GNU software, causing some controversy. Popular Linux distributions include Debian, Fedora Linux, and Ubuntu, the latter of which itself consists of many different distributions and modifications, including Lubuntu and Xubuntu. Commercial distributions include Red Hat Enterprise Linux and SUSE Linux Enterprise. Desktop Linux distributions include a windowing system such as X11 or Wayland, and a desktop environment such as GNOME or KDE Plasma. Distributions intended for ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

OpenMP

OpenMP (Open Multi-Processing) is an application programming interface (API) that supports multi-platform shared-memory multiprocessing programming in C, C++, and Fortran, on many platforms, instruction-set architectures and operating systems, including Solaris, AIX, FreeBSD, HP-UX, Linux, macOS, and Windows. It consists of a set of compiler directives, library routines, and environment variables that influence run-time behavior. OpenMP is managed by the nonprofit technology consortium ''OpenMP Architecture Review Board'' (or ''OpenMP ARB''), jointly defined by a broad swath of leading computer hardware and software vendors, including Arm, AMD, IBM, Intel, Cray, HP, Fujitsu, Nvidia, NEC, Red Hat, Texas Instruments, and Oracle Corporation. OpenMP uses a portable, scalable model that gives programmers a simple and flexible interface for developing parallel applications for platforms ranging from the standard desktop computer to the supercomputer. An app ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Folding@home

Folding@home (FAH or F@h) is a volunteer computing project aimed to help scientists develop new therapeutics for a variety of diseases by the means of simulating protein dynamics. This includes the process of protein folding and the movements of proteins, and is reliant on simulations run on volunteers' personal computers. Folding@home is currently based at the University of Pennsylvania and led by Greg Bowman, a former student of Vijay Pande. The project utilizes graphics processing units (GPUs), central processing units (CPUs), and ARM processors like those on the Raspberry Pi for volunteer computing and scientific research. The project uses statistical simulation methodology that is a paradigm shift from traditional computing methods. As part of the client–server model network architecture, the volunteered machines each receive pieces of a simulation (work units), complete them, and return them to the project's database servers, where the units are compiled into an ov ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

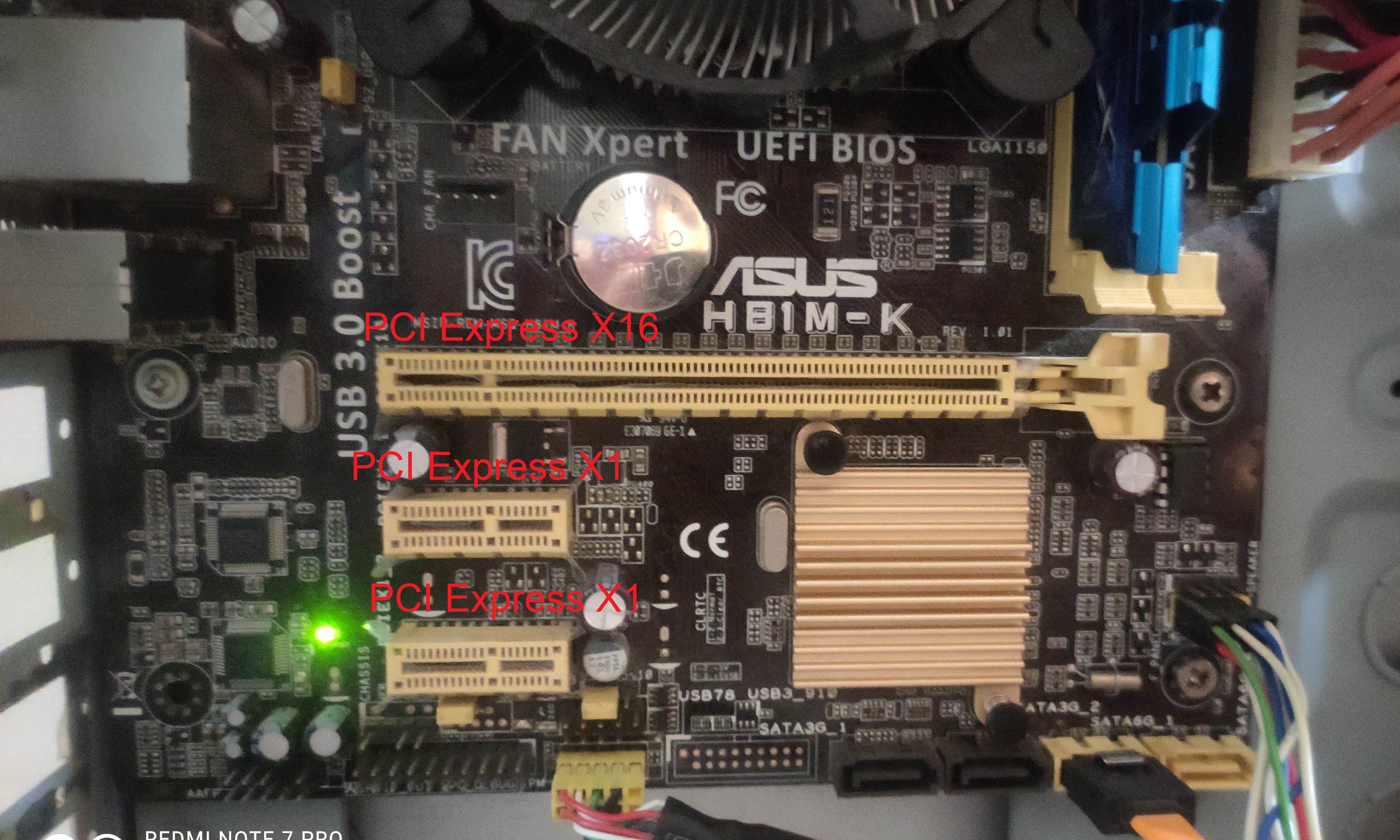

PCI Express

PCI Express (Peripheral Component Interconnect Express), officially abbreviated as PCIe or PCI-e, is a high-speed serial computer expansion bus standard, designed to replace the older PCI, PCI-X and AGP bus standards. It is the common motherboard interface for personal computers' graphics cards, hard disk drive host adapters, SSDs, Wi-Fi and Ethernet hardware connections. PCIe has numerous improvements over the older standards, including higher maximum system bus throughput, lower I/O pin count and smaller physical footprint, better performance scaling for bus devices, a more detailed error detection and reporting mechanism (Advanced Error Reporting, AER), and native hot-swap functionality. More recent revisions of the PCIe standard provide hardware support for I/O virtualization. The PCI Express electrical interface is measured by the number of simultaneous lanes. (A lane is a single send/receive line of data. The analogy is a highway with traffic in both direc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cg (programming Language)

Cg (short for C for Graphics) and High-Level Shader Language (HLSL) are two names given to a high-level shading language developed by Nvidia and Microsoft for programming shaders. Cg/HLSL is based on the C programming language and although they share the same core syntax, some features of C were modified and new data types were added to make Cg/HLSL more suitable for programming graphics processing units. Two main branches of the Cg/HLSL language exist: the Nvidia Cg compiler (cgc) which outputs DirectX or OpenGL and the Microsoft HLSL which outputs DirectX shaders in bytecode format. Nvidia's cgc was deprecated in 2012, with no additional development or support available. HLSL shaders can enable many special effects in both 2D and 3D computer graphics. The Cg/HLSL language originally only included support for vertex shaders and pixel shaders, but other types of shaders were introduced gradually as well: * DirectX 10 (Shader Model 4) and Cg 2.0 introduced geometry shaders. * D ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

.jpg)