|

Boyer–Moore Majority Vote Algorithm

The Boyer–Moore majority vote algorithm is an algorithm for finding the majority of a sequence of elements using linear time and constant space. It is named after Robert S. Boyer and J Strother Moore, who published it in 1981,. Originally published as a technical report in 1981. and is a prototypical example of a streaming algorithm. In its simplest form, the algorithm finds a majority element, if there is one: that is, an element that occurs repeatedly for more than half of the elements of the input. A version of the algorithm that makes a second pass through the data can be used to verify that the element found in the first pass really is a majority. If a second pass is not performed and there is no majority the algorithm will not detect that no majority exists. In the case that no strict majority exists, the returned element can be arbitrary; it is not guaranteed to be the element that occurs most often (the mode of the sequence). It is not possible for a streaming algorithm ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Machine Word

In computing, a word is the natural unit of data used by a particular processor design. A word is a fixed-sized datum handled as a unit by the instruction set or the hardware of the processor. The number of bits or digits in a word (the ''word size'', ''word width'', or ''word length'') is an important characteristic of any specific processor design or computer architecture. The size of a word is reflected in many aspects of a computer's structure and operation; the majority of the registers in a processor are usually word-sized and the largest datum that can be transferred to and from the working memory in a single operation is a word in many (not all) architectures. The largest possible address size, used to designate a location in memory, is typically a hardware word (here, "hardware word" means the full-sized natural word of the processor, as opposed to any other definition used). Documentation for older computers with fixed word size commonly states memory sizes in words r ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Misra–Gries Heavy Hitters Algorithm

Misra and Gries defined the ''heavy-hitters problem'' (though they did not introduce the term ''heavy-hitters'') and described the first algorithm for it in the paper ''Finding repeated elements''. Their algorithm extends the Boyer-Moore majority finding algorithm in a significant way. One version of the heavy-hitters problem is as follows: Given is a bag of elements and an integer . Find the values that occur more than times in . The Misra-Gries algorithm solves the problem by making two passes over the values in , while storing at most values from and their number of occurrences during the course of the algorithm. Misra-Gries is one of the earliest streaming algorithms, and it is described below in those terms in section #Summaries. Misra–Gries algorithm A bag is a like a set in which the same value may occur multiple times. Assume that a bag is available as an array of elements. In the abstract description of the algorithm, we treat and its segments also as bags ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cellular Automaton

A cellular automaton (pl. cellular automata, abbrev. CA) is a discrete model of computation studied in automata theory. Cellular automata are also called cellular spaces, tessellation automata, homogeneous structures, cellular structures, tessellation structures, and iterative arrays. Cellular automata have found application in various areas, including physics, theoretical biology and microstructure modeling. A cellular automaton consists of a regular grid of ''cells'', each in one of a finite number of '' states'', such as ''on'' and ''off'' (in contrast to a coupled map lattice). The grid can be in any finite number of dimensions. For each cell, a set of cells called its ''neighborhood'' is defined relative to the specified cell. An initial state (time ''t'' = 0) is selected by assigning a state for each cell. A new ''generation'' is created (advancing ''t'' by 1), according to some fixed ''rule'' (generally, a mathematical function) that determines the new state of e ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Majority Problem (cellular Automaton)

The majority problem, or density classification task, is the problem of finding one-dimensional cellular automaton rules that accurately perform majority voting. Using local transition rules, cells cannot know the total count of all the ones in system. In order to count the number of ones (or, by symmetry, the number of zeros), the system requires a logarithmic number of bits in the total size of the system. It also requires the system send messages over a distance linear in the size of the system and for the system to recognize a non-regular language. Thus, this problem is an important test case in measuring the computational power of cellular automaton systems. Problem statement Given a configuration of a two-state cellular automaton with ''i'' + ''j'' cells total, ''i'' of which are in the zero state and ''j'' of which are in the one state, a correct solution to the voting problem must eventually set all cells to zero if ''i'' > ''j'' and must eventually set all ce ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Boolean Value

In mathematics and mathematical logic, Boolean algebra is a branch of algebra. It differs from elementary algebra in two ways. First, the values of the variables are the truth values ''true'' and ''false'', usually denoted 1 and 0, whereas in elementary algebra the values of the variables are numbers. Second, Boolean algebra uses logical operators such as conjunction (''and'') denoted as ∧, disjunction (''or'') denoted as ∨, and the negation (''not'') denoted as ¬. Elementary algebra, on the other hand, uses arithmetic operators such as addition, multiplication, subtraction and division. So Boolean algebra is a formal way of describing logical operations, in the same way that elementary algebra describes numerical operations. Boolean algebra was introduced by George Boole in his first book ''The Mathematical Analysis of Logic'' (1847), and set forth more fully in his '' An Investigation of the Laws of Thought'' (1854). According to Huntington, the term "Boolean algebra" wa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Majority Function

In Boolean logic, the majority function (also called the median operator) is the Boolean function that evaluates to false when half or more arguments are false and true otherwise, i.e. the value of the function equals the value of the majority of the inputs. Representing true values as 1 and false values as 0, we may use the (real-valued) formula: :\langle p_1,\dots,p_n \rangle = \operatorname \left ( p_1,\dots,p_n \right ) = \left \lfloor \frac + \frac \right \rfloor. The "−1/2" in the formula serves to break ties in favor of zeros when the number of arguments ''n'' is even. If the term "−1/2" is omitted, the formula can be used for a function that breaks ties in favor of ones. Most applications deliberately force an odd number of inputs so they don't have to deal with the question of what happens when exactly half the inputs are 0 and exactly half the inputs are 1. The few systems that calculate the majority function on an even number of inputs are often biased to ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Element Distinctness Problem

In computational complexity theory, the element distinctness problem or element uniqueness problem is the problem of determining whether all the elements of a list are distinct. It is a well studied problem in many different models of computation. The problem may be solved by sorting the list and then checking if there are any consecutive equal elements; it may also be solved in linear expected time by a randomized algorithm that inserts each item into a hash table and compares only those elements that are placed in the same hash table cell. Several lower bounds in computational complexity are proved by reducing the element distinctness problem to the problem in question, i.e., by demonstrating that the solution of the element uniqueness problem may be quickly found after solving the problem in question. Decision tree complexity The number of comparisons needed to solve the problem of size n, in a comparison-based model of computation such as a decision tree or algebraic decisio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Binary Logarithm

In mathematics, the binary logarithm () is the power to which the number must be raised to obtain the value . That is, for any real number , :x=\log_2 n \quad\Longleftrightarrow\quad 2^x=n. For example, the binary logarithm of is , the binary logarithm of is , the binary logarithm of is , and the binary logarithm of is . The binary logarithm is the logarithm to the base and is the inverse function of the power of two function. As well as , an alternative notation for the binary logarithm is (the notation preferred by ISO 31-11 and ISO 80000-2). Historically, the first application of binary logarithms was in music theory, by Leonhard Euler: the binary logarithm of a frequency ratio of two musical tones gives the number of octaves by which the tones differ. Binary logarithms can be used to calculate the length of the representation of a number in the binary numeral system, or the number of bits needed to encode a message in information theory. In computer ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

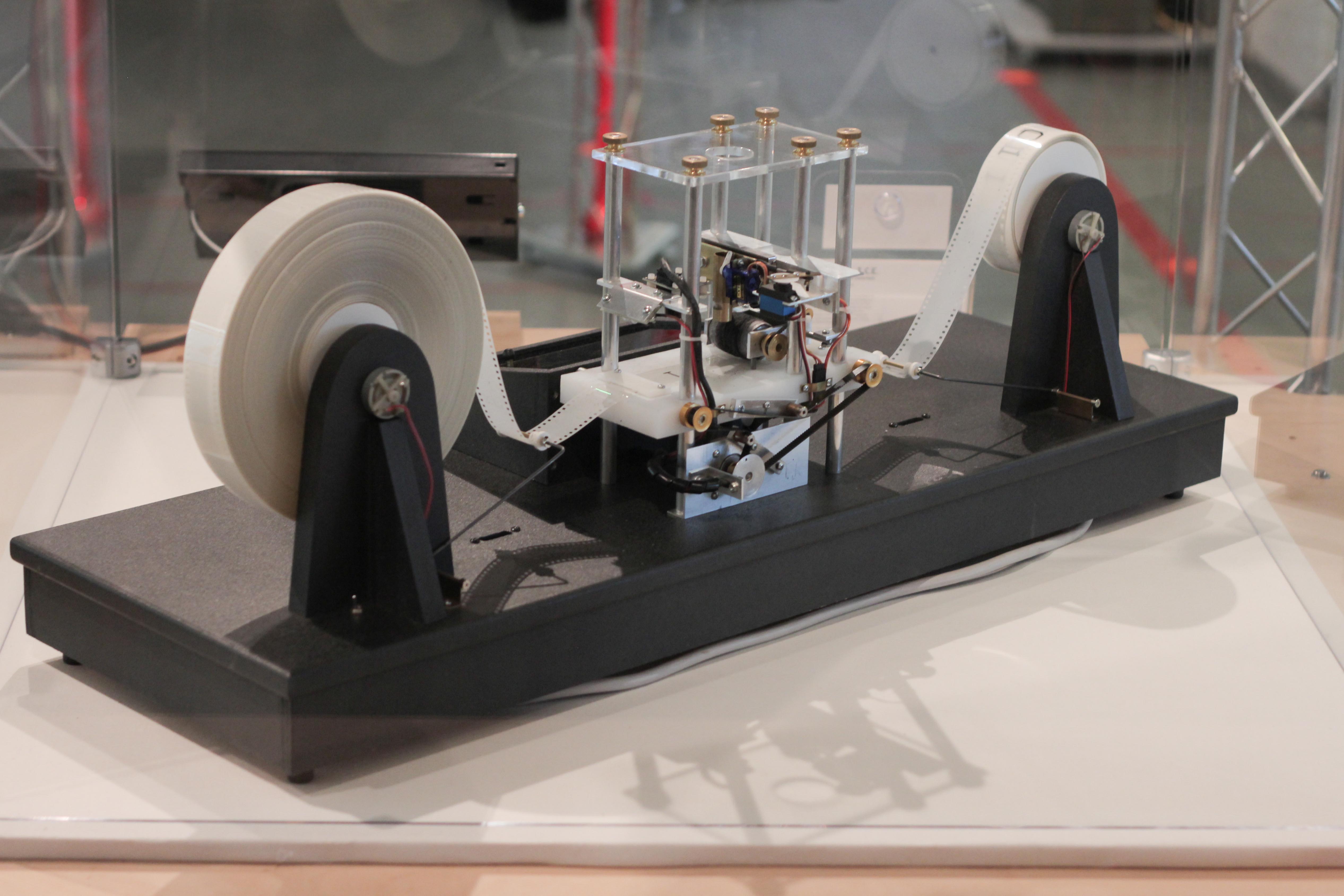

Turing Machine

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write and which direction to move is based on a finite table that specifies what to do for each comb ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bit Complexity

In computer science, the computational complexity or simply complexity of an algorithm is the amount of resources required to run it. Particular focus is given to time complexity, computation time (generally measured by the number of needed elementary operations) and space complexity, memory storage requirements. The complexity of a computational problem, problem is the complexity of the best algorithms that allow solving the problem. The study of the complexity of explicitly given algorithms is called analysis of algorithms, while the study of the complexity of problems is called computational complexity theory. Both areas are highly related, as the complexity of an algorithm is always an upper bound on the complexity of the problem solved by this algorithm. Moreover, for designing efficient algorithms, it is often fundamental to compare the complexity of a specific algorithm to the complexity of the problem to be solved. Also, in most cases, the only thing that is known about the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Analysis Of Algorithms

In computer science, the analysis of algorithms is the process of finding the computational complexity of algorithms—the amount of time, storage, or other resources needed to execute them. Usually, this involves determining a function that relates the size of an algorithm's input to the number of steps it takes (its time complexity) or the number of storage locations it uses (its space complexity). An algorithm is said to be efficient when this function's values are small, or grow slowly compared to a growth in the size of the input. Different inputs of the same size may cause the algorithm to have different behavior, so best, worst and average case descriptions might all be of practical interest. When not otherwise specified, the function describing the performance of an algorithm is usually an upper bound, determined from the worst case inputs to the algorithm. The term "analysis of algorithms" was coined by Donald Knuth. Algorithm analysis is an important part of a broader ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |