Blum Blum Shub

Blum Blum Shub (B.B.S.) is a pseudorandom number generator proposed in 1986 by Lenore Blum, Manuel Blum and Michael Shub that is derived from Michael O. Rabin's one-way function. Blum Blum Shub takes the form :x_{n+1} = x_n^2 \bmod M, where ''M'' = ''pq'' is the product of two large primes ''p'' and ''q''. At each step of the algorithm, some output is derived from x_{n+1}; the output is commonly either the bit parity of x_{n+1} or one or more of the least significant bits of x_{n+1}. The seed x_0 should be an integer that is co-prime to ''M'' (i.e. ''p'' and ''q'' are not factors of x_0) and not 1 or 0. The two primes, ''p'' and ''q'', should both be congruent to 3 (mod 4) (this guarantees that each quadratic residue has one square root which is also a quadratic residue), and should be safe primes with a small gcd((''p'' - 3)/2, (''q'' - 3)/2) (this makes the cycle length large). An interesting characteristic of the Blum Blum Shub generator is th ...
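The recurrence above can be sketched in a few lines of Python. This is a toy illustration only, not a secure implementation: the function name `bbs_bits` and the tiny parameters ''p'' = 11, ''q'' = 23, seed 3 are assumptions chosen for readability, and the output is taken to be the least significant bit of each state.

```python
from math import gcd


def bbs_bits(seed, p, q, count):
    """Yield `count` output bits from a toy Blum Blum Shub generator.

    Hypothetical helper for illustration; p and q are assumed to be
    primes (primality is not checked here).
    """
    if p % 4 != 3 or q % 4 != 3:
        raise ValueError("p and q must both be congruent to 3 (mod 4)")
    m = p * q
    if seed in (0, 1) or gcd(seed, m) != 1:
        raise ValueError("seed must be coprime to M and not 0 or 1")
    x = seed
    for _ in range(count):
        x = (x * x) % m   # x_{n+1} = x_n^2 mod M
        yield x & 1       # output: least significant bit of x_{n+1}


# Toy parameters (assumed): far too small for any real use.
bits = list(bbs_bits(seed=3, p=11, q=23, count=6))
print(bits)
```

With these parameters the internal states are 9, 81, 236, 36, 31, 202, so the printed bits are their parities. Realistic use would need primes of cryptographic size.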

Pseudorandom Number Generator

A pseudorandom number generator (PRNG), also known as a deterministic random bit generator (DRBG), is an algorithm for generating a sequence of numbers whose properties approximate the properties of sequences of random numbers. The PRNG-generated sequence is not truly random, because it is completely determined by an initial value, called the PRNG's ''seed'' (which may include truly random values). Although sequences that are closer to truly random can be generated using hardware random number generators, ''pseudorandom number generators'' are important in practice for their speed in number generation and their reproducibility. PRNGs are central in applications such as simulations (e.g. for the Monte Carlo method), electronic games (e.g. for procedural generation), and cryptography. Cryptographic applications require the output not to be predictable from earlier outputs, and more elaborate algorithms, which do not inherit the linearity of simpler PRNGs, are needed. Good statist ...

Greatest Common Divisor

In mathematics, the greatest common divisor (GCD) of two or more integers, which are not all zero, is the largest positive integer that divides each of the integers. For two integers ''x'', ''y'', the greatest common divisor of ''x'' and ''y'' is denoted \gcd (x,y). For example, the GCD of 8 and 12 is 4, that is, \gcd (8, 12) = 4. In the name "greatest common divisor", the adjective "greatest" may be replaced by "highest", and the word "divisor" may be replaced by "factor", so that other names include highest common factor (hcf), etc. Historically, other names for the same concept have included greatest common measure. This notion can be extended to polynomials (see Polynomial greatest common divisor) and other commutative rings (see below). Overview Definition The ''greatest common divisor'' (GCD) of two nonzero integers ''a'' and ''b'' is the greatest positive integer ''d'' such that ''d'' is a divisor of both ''a'' and ''b''; that is, there are integers ''e'' and ''f'' such that ''a'' = ''de'' and ''b'' = ''df'', and ''d'' is the largest s ...
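As a small illustration of the definition, the GCD can be computed with Euclid's algorithm. The excerpt above does not describe that algorithm, so it is brought in here as an assumption, and the function name `gcd` is illustrative (Python's standard library already offers `math.gcd`).

```python
def gcd(a, b):
    """Greatest common divisor of two integers, not both zero (Euclid's algorithm)."""
    a, b = abs(a), abs(b)
    while b:
        # Any common divisor of a and b also divides a % b, and vice versa.
        a, b = b, a % b
    return a


print(gcd(8, 12))  # the example from the text: gcd(8, 12) = 4
```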

Common Lisp

Common Lisp (CL) is a dialect of the Lisp programming language, published in ANSI standard document ''ANSI INCITS 226-1994 (S20018)'' (formerly ''X3.226-1994 (R1999)''). The Common Lisp HyperSpec, a hyperlinked HTML version, has been derived from the ANSI Common Lisp standard. The Common Lisp language was developed as a standardized and improved successor of Maclisp. By the early 1980s several groups were already at work on diverse successors to MacLisp: Lisp Machine Lisp (aka ZetaLisp), Spice Lisp, NIL and S-1 Lisp. Common Lisp sought to unify, standardise, and extend the features of these MacLisp dialects. Common Lisp is not an implementation, but rather a language specification. Several implementations of the Common Lisp standard are available, including free and open-source software and proprietary products. Common Lisp is a general-purpose, multi-paradigm programming language. It supports a combination of procedural, functional, and object-oriented programming paradigms ...

Least Significant Bit

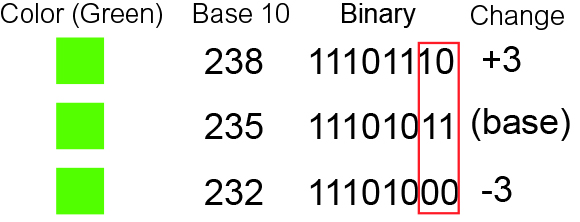

In computing, bit numbering is the convention used to identify the bit positions in a binary number. Bit significance and indexing In computing, the least significant bit (LSB) is the bit position in a binary integer representing the binary 1s place of the integer. Similarly, the most significant bit (MSB) represents the highest-order place of the binary integer. The LSB is sometimes referred to as the ''low-order bit'' or ''right-most bit'', due to the convention in positional notation of writing less significant digits further to the right. The MSB is similarly referred to as the ''high-order bit'' or ''left-most bit''. In both cases, the LSB and MSB correlate directly to the least significant digit and most significant digit of a decimal integer. Bit indexing correlates to the positional notation of the value in base 2. For this reason, bit index is not affected by how the value is stored on the device, such as the value's byte order. Rather, it is a property of the numeri ...

Parity Bit

A parity bit, or check bit, is a bit added to a string of binary code. Parity bits are a simple form of error detecting code. Parity bits are generally applied to the smallest units of a communication protocol, typically 8-bit octets (bytes), although they can also be applied separately to an entire message string of bits. The parity bit ensures that the total number of 1-bits in the string is even or odd. Accordingly, there are two variants of parity bits: even parity bit and odd parity bit. In the case of even parity, for a given set of bits, the bits whose value is 1 are counted. If that count is odd, the parity bit value is set to 1, making the total count of occurrences of 1s in the whole set (including the parity bit) an even number. If the count of 1s in a given set of bits is already even, the parity bit's value is 0. In the case of odd parity, the coding is reversed. For a given set of bits, if the count of bits with a value of 1 is even, the parity bit value is se ...
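The even and odd parity rules described above can be sketched as follows. The function names are illustrative, and the input is assumed to be a sequence of 0/1 integers.

```python
def even_parity_bit(bits):
    """Parity bit that makes the total number of 1s (data plus parity) even."""
    return sum(bits) % 2


def odd_parity_bit(bits):
    """Parity bit that makes the total number of 1s (data plus parity) odd."""
    return 1 - sum(bits) % 2


data = [1, 0, 1, 1]           # three 1-bits, an odd count
print(even_parity_bit(data))  # 1, so the total count of 1s becomes four
print(odd_parity_bit(data))   # 0, so the total count of 1s stays three
```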

Quadratic Residuosity Problem

The quadratic residuosity problem (QRP) in computational number theory is to decide, given integers a and N, whether a is a quadratic residue modulo N or not. Here N = p_1 p_2 for two unknown primes p_1 and p_2, and a is among the numbers which are not obviously quadratic non-residues (see below). The problem was first described by Gauss in his ''Disquisitiones Arithmeticae'' in 1801. This problem is believed to be computationally difficult. Several cryptographic methods rely on its hardness. An efficient algorithm for the quadratic residuosity problem immediately implies efficient algorithms for other number theoretic problems, such as deciding whether a composite N of unknown factorization is the product of 2 or 3 primes. Precise formulation Given integers a and T, a is said to be a ''quadratic residue modulo T'' if there exists an integer b such that :a \equiv b^2 \pmod T. Otherwise we say it is a quadratic non-residue. When T = p is a prime, it is customary to us ...
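The definition of a quadratic residue can be checked directly by brute force for small moduli. The sketch below (with the illustrative name `is_quadratic_residue`) tries every candidate ''b''. Its running time grows linearly in ''T'', that is, exponentially in the bit length of ''T'', so it says nothing about how to attack the problem for cryptographic sizes.

```python
def is_quadratic_residue(a, t):
    """Return True if some integer b satisfies b^2 = a (mod t).

    Brute-force check straight from the definition; only practical
    for small positive moduli t.
    """
    target = a % t
    return any((b * b) % t == target for b in range(t))


print(is_quadratic_residue(2, 7))  # True, since 3^2 = 9 = 2 (mod 7)
print(is_quadratic_residue(3, 7))  # False, the squares mod 7 are 0, 1, 2, 4
```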

Logarithm

In mathematics, the logarithm is the inverse function to exponentiation. That means the logarithm of a number ''x'' to the base ''b'' is the exponent to which ''b'' must be raised to produce ''x''. For example, since 1000 = 10^3, the ''logarithm base'' 10 of 1000 is 3, or \log_{10} (1000) = 3. The logarithm of ''x'' to ''base'' ''b'' is denoted as \log_b (x), or without parentheses, \log_b x, or even without the explicit base, \log x, when no confusion is possible, or when the base does not matter such as in big O notation. The logarithm base 10 is called the decimal or common logarithm and is commonly used in science and engineering. The natural logarithm has the number ''e'' as its base; its use is widespread in mathematics and physics, because of its very simple derivative. The binary logarithm uses base 2 and is frequently used in computer science. Logarithms were introduced by John Napier in 1614 as a means of simplifying calculations. They were rapidly adopted by navigators, scientists, engineers, surveyors and others to perform high-a ...

Big O Notation

Big ''O'' notation is a mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity. Big O is a member of a family of notations invented by Paul Bachmann, Edmund Landau, and others, collectively called Bachmann–Landau notation or asymptotic notation. The letter O was chosen by Bachmann to stand for ''Ordnung'', meaning the order of approximation. In computer science, big O notation is used to classify algorithms according to how their run time or space requirements grow as the input size grows. In analytic number theory, big O notation is often used to express a bound on the difference between an arithmetical function and a better understood approximation; a famous example of such a difference is the remainder term in the prime number theorem. Big O notation is also used in many other fields to provide similar estimates. Big O notation characterizes functions according to their growth rates: d ...

Computational Complexity Theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and relating these classes to each other. A computational problem is a task solved by a computer. A computational problem is solvable by mechanical application of mathematical steps, such as an algorithm. A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition, by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of gates in a circuit (used in circuit complexity) and the number of processors (used in parallel computing). One of the roles of computationa ...

Carmichael Function

In number theory, a branch of mathematics, the Carmichael function \lambda(n) of a positive integer ''n'' is the smallest positive integer ''m'' such that :a^m \equiv 1 \pmod{n} holds for every integer ''a'' coprime to ''n''. In algebraic terms, \lambda(n) is the exponent of the multiplicative group of integers modulo ''n''. The Carmichael function is named after the American mathematician Robert Carmichael who defined it in 1910. It is also known as Carmichael's λ function, the reduced totient function, and the least universal exponent function. The following table compares the first 36 values of \lambda(n) with Euler's totient function \varphi (in bold if they are different). Numerical examples # Carmichael's function at 5 is 4, \lambda(5) = 4, because for any number 0 ...
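The definition translates directly into a brute-force search for the smallest exponent that sends every unit to 1. This is a minimal sketch for small ''n'' only; the name `carmichael` is illustrative, and practical implementations would work from the prime factorization of ''n'' instead.

```python
from math import gcd


def carmichael(n):
    """Smallest m >= 1 with a^m = 1 (mod n) for every a coprime to n.

    Brute-force search taken straight from the definition; intended
    only for small n.
    """
    units = [a for a in range(1, n + 1) if gcd(a, n) == 1]
    m = 1
    # 1 % n handles the degenerate modulus n = 1, where every residue is 0.
    while any(pow(a, m, n) != 1 % n for a in units):
        m += 1
    return m


print(carmichael(5))  # 4, matching the numerical example in the text
print(carmichael(8))  # 2, whereas Euler's totient of 8 is 4
```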

Euler's Theorem

In number theory, Euler's theorem (also known as the Fermat–Euler theorem or Euler's totient theorem) states that, if ''n'' and ''a'' are coprime positive integers, and \varphi(n) is Euler's totient function, then ''a'' raised to the power \varphi(n) is congruent to 1 modulo ''n''; that is :a^{\varphi(n)} \equiv 1 \pmod{n}. In 1736, Leonhard Euler published a proof of Fermat's little theorem (stated by Fermat without proof), which is the restriction of Euler's theorem to the case where ''n'' is a prime number. Subsequently, Euler presented other proofs of the theorem, culminating with his paper of 1763, in which he proved a generalization to the case where ''n'' is not prime. The converse of Euler's theorem is also true: if the above congruence is true, then a and n must be coprime. The theorem is further generalized by Carmichael's theorem. The theorem may be used to easily reduce large powers modulo n. For example, consider finding the ones place decimal digit of 7^, i.e. 7^ \pmod. The integers 7 and 10 are coprime, ...
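The use of the theorem to reduce large powers can be sketched as follows. The helper names `totient` and `power_mod_via_euler` are illustrative, and the exponent 222 in the usage line is an assumed value picked for the demonstration, since the exponent in the excerpt above is not legible.

```python
from math import gcd


def totient(n):
    """Euler's totient: how many k in 1..n are coprime to n (naive count)."""
    return sum(1 for k in range(1, n + 1) if gcd(k, n) == 1)


def power_mod_via_euler(a, e, n):
    """Compute a^e mod n by first reducing the exponent modulo phi(n).

    Valid only when a and n are coprime, as Euler's theorem requires.
    """
    if gcd(a, n) != 1:
        raise ValueError("a and n must be coprime")
    return pow(a, e % totient(n), n)


# Assumed exponent 222: phi(10) = 4 and 222 = 4 * 55 + 2,
# so 7^222 = 7^2 = 49 = 9 (mod 10).
print(power_mod_via_euler(7, 222, 10))
```

Python's built-in three-argument `pow` already computes modular powers efficiently, so the exponent reduction here serves only to make the theorem visible.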

Safe Prime

In number theory, a prime number ''p'' is a Sophie Germain prime if 2''p'' + 1 is also prime. The number 2''p'' + 1 associated with a Sophie Germain prime is called a safe prime. For example, 11 is a Sophie Germain prime and 2 × 11 + 1 = 23 is its associated safe prime. Sophie Germain primes are named after French mathematician Sophie Germain, who used them in her investigations of Fermat's Last Theorem. One attempt by Germain to prove Fermat’s Last Theorem was to let ''p'' be a prime number of the form 8''k'' + 7 and to let ''n'' = ''p'' – 1. In this case, x^n + y^n = z^n is unsolvable. Germain’s proof, however, remained unfinished. Through her attempts to solve Fermat's Last Theorem, Germain developed a result now known as Germain's Theorem which states that if ''p'' is an odd prime and 2''p'' + 1 is also prime, then ''p'' must divide ''x'', ''y'', or ''z.'' Otherwise, x^n + y^n \neq z^n. This case where ''p'' does not divide ''x'', ''y'', or ''z'' ...
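The two definitions can be checked for small numbers with simple trial division. All function names below are illustrative, and the primality test is deliberately naive, so it is not suitable for primes of cryptographic size.

```python
def is_prime(n):
    """Naive trial-division primality test for small integers."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def is_sophie_germain_prime(p):
    """p is a Sophie Germain prime if both p and 2p + 1 are prime."""
    return is_prime(p) and is_prime(2 * p + 1)


def is_safe_prime(q):
    """q is a safe prime if q = 2p + 1 for some prime p."""
    return q > 2 and is_prime(q) and is_prime((q - 1) // 2)


print(is_sophie_germain_prime(11))  # True: 2 * 11 + 1 = 23 is prime
print(is_safe_prime(23))            # True: (23 - 1) / 2 = 11 is prime
```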