
Bisimilarity

In theoretical computer science, a bisimulation is a binary relation between state transition systems that associates systems behaving in the same way, in the sense that one system simulates the other and vice versa. Intuitively, two systems are bisimilar if, viewed as players in a "game" with fixed rules, they can match each other's moves. In this sense, an observer cannot distinguish one system from the other. Formal definition: given a labelled state transition system (S, \Lambda, →), where S is a set of states, \Lambda is a set of labels and → is a set of labelled transitions (i.e., a subset of S \times \Lambda \times S), a bisimulation is a binary relation R \subseteq S \times S such that both R and its converse R^T are simulations. It follows that the symmetric closure of a bisimulation is a bisimulation, and that each symmetric simulation is a bisimulation. Thus some aut ...
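
The definition above can be checked mechanically on a finite system. The following is a minimal sketch, not a standard library routine: the names `successors` and `is_bisimulation`, the encoding of transitions as `(source, label, target)` triples, and the example states `s0`, `s1`, `t0` are all assumptions made for illustration.

```python
def successors(transitions):
    """Index transitions as {(source, label): set of targets}."""
    succ = {}
    for source, label, target in transitions:
        succ.setdefault((source, label), set()).add(target)
    return succ


def is_bisimulation(relation, transitions):
    """Check that both `relation` and its converse are simulations."""
    succ = successors(transitions)
    labels = {label for _, label, _ in transitions}
    converse = {(q, p) for (p, q) in relation}
    for rel in (relation, converse):
        for p, q in rel:
            for label in labels:
                for p_next in succ.get((p, label), ()):
                    # q must answer the move with the same label,
                    # landing in a state related to p_next.
                    answers = succ.get((q, label), ())
                    if not any((p_next, q_next) in rel for q_next in answers):
                        return False
    return True


# Hypothetical example: a two-state "a"-cycle versus a single "a"-loop.
T = {("s0", "a", "s1"), ("s1", "a", "s0"), ("t0", "a", "t0")}
R = {("s0", "t0"), ("s1", "t0")}
print(is_bisimulation(R, T))
print(is_bisimulation({("s0", "t0")}, T))
```

The first relation pairs every state of the cycle with the loop state, so each move can be matched in both directions. The second relation fails because the pair reached after one move, `("s1", "t0")`, is missing from it.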

Simulation Preorder

In theoretical computer science, a simulation is a relation between state transition systems that associates systems behaving in the same way, in the sense that one system simulates the other. Intuitively, a system simulates another system if it can match all of its moves. The basic definition relates states within one transition system, but this is easily adapted to relate two separate transition systems by building a system consisting of the disjoint union of the corresponding components. Formal definition: given a labelled state transition system (S, \Lambda, →), where S is a set of states, \Lambda is a set of labels and → is a set of labelled transitions (i.e., a subset of S \times \Lambda \times S), a relation R \subseteq S \times S is a simulation if and only if for every pair of states (p,q) in R and all labels α in \Lambda: if p \overset{\alpha}{\rightarrow} p', then there is q \overset{\alpha}{\rightarrow} q' such that (p',q') \in R. Equivalently, ...
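
For a finite system, the simulation condition can be tested pair by pair. This is a sketch under stated assumptions: the function name `is_simulation`, the triple encoding `(source, label, target)`, and the example states are hypothetical and chosen only to illustrate the definition.

```python
def is_simulation(relation, transitions):
    """Return True if every move of p can be matched by q for all (p, q) in relation."""
    for p, q in relation:
        for source, label, p_next in transitions:
            if source != p:
                continue
            # Look for a move of q with the same label into a related state.
            matched = any(
                s == q and l == label and (p_next, q_next) in relation
                for s, l, q_next in transitions
            )
            if not matched:
                return False
    return True


# Hypothetical example: q0 offers both "a" and "b", p0 only offers "a".
transitions = {("p0", "a", "p1"), ("q0", "a", "q1"), ("q0", "b", "q2")}
forward = {("p0", "q0"), ("p1", "q1")}
backward = {(q, p) for (p, q) in forward}

print(is_simulation(forward, transitions))
print(is_simulation(backward, transitions))
```

Here `q0` simulates `p0`, but not the other way round, because `p0` has no answer to the `b` move of `q0`.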

Theoretical Computer Science

Theoretical computer science (TCS) is a subset of general computer science and mathematics that focuses on mathematical aspects of computer science such as the theory of computation, lambda calculus, and type theory. It is difficult to circumscribe the theoretical areas precisely. The ACM's Special Interest Group on Algorithms and Computation Theory (SIGACT) offers its own description of the field. History: while logical inference and mathematical proof had existed previously, in 1931 Kurt Gödel proved with his incompleteness theorem that there are fundamental limitations on what statements could be proved or disproved. Information theory was added to the field with a 1948 mathematical theory of communication by Claude Shannon. In the same decade, Donald Hebb introduced a mathematical model of learning in the brain. With mounting biological data supporting this hypothesis with some modification, the fields of n ...

Product (category Theory)

In category theory, the product of two (or more) objects in a category is a notion designed to capture the essence behind constructions in other areas of mathematics such as the Cartesian product of sets, the direct product of groups or rings, and the product of topological spaces. Essentially, the product of a family of objects is the "most general" object which admits a morphism to each of the given objects. Definition (product of two objects): fix a category C. Let X_1 and X_2 be objects of C. A product of X_1 and X_2 is an object X, typically denoted X_1 \times X_2, equipped with a pair of morphisms \pi_1 : X \to X_1, \pi_2 : X \to X_2 satisfying the following universal property: for every object Y and every pair of morphisms f_1 : Y \to X_1, f_2 : Y \to X_2, there exists a unique morphism f : Y \to X_1 \times X_2 such that \pi_1 \circ f = f_1 and \pi_2 \circ f = f_2 (that is, the corresponding diagram commutes). Whether a product exists may depend on C or on X_1 and X_2. If it does exist, it is unique up to canonical ...
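
In the category of sets the product is the Cartesian product, and the universal property can be illustrated with ordinary functions. The sketch below assumes that setting. The names `pair`, `pi1`, `pi2` and the sample maps `f1`, `f2` are hypothetical, and Python tuples stand in for elements of X_1 \times X_2.

```python
def pi1(x):
    """Projection onto the first component."""
    return x[0]


def pi2(x):
    """Projection onto the second component."""
    return x[1]


def pair(f1, f2):
    """Mediating map f : Y -> X1 x X2 induced by f1 : Y -> X1 and f2 : Y -> X2."""
    return lambda y: (f1(y), f2(y))


# Hypothetical maps out of Y = strings.
f1 = len
f2 = str.upper
f = pair(f1, f2)

for y in ["ab", "xyz"]:
    # The defining equations: pi1 . f = f1 and pi2 . f = f2.
    assert pi1(f(y)) == f1(y)
    assert pi2(f(y)) == f2(y)
```

Any other map satisfying the same two equations must agree with `f` on every input, since a tuple is determined by its components. That is the uniqueness half of the universal property.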

Quasilinear Time

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor. Since an algorithm's running time may vary among different inputs of the same size, one commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size. Less common, and usually specified explicitly, is the average-case complexity, which is the average of the time taken on inputs of a given size (this makes sense because there are only a finite number of possible inputs of a given size). In both cases, the time complexity is generally expressed ...

Polynomial Time

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor. Since an algorithm's running time may vary among different inputs of the same size, one commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size. Less common, and usually specified explicitly, is the average-case complexity, which is the average of the time taken on inputs of a given size (this makes sense because there are only a finite number of possible inputs of a given size). In both cases, the time complexity is generally express ...

Johan Van Benthem (logician)

Johannes Franciscus Abraham Karel (Johan) van Benthem (born 12 June 1949 in Rijswijk) is a University Professor of logic at the University of Amsterdam at the Institute for Logic, Language and Computation and professor of philosophy at Stanford University (at CSLI). He was awarded the Spinozapremie in 1996 and elected a Foreign Fellow of the American Academy of Arts & Sciences in 2015. Biography: Van Benthem studied physics (B.Sc. 1969), philosophy (M.A. 1972) and mathematics (M.Sc. 1973) at the University of Amsterdam and received a PhD from the same university under the supervision of Martin Löb in 1977. Before becoming University Professor in 2003, he held appointments at the University of Amsterdam (1973–1977), at the University of Groningen (1977–1986), and as a professor at the University of Amsterdam (1986–2003). In 1992 he was elected a member of the Royal Netherlands Academy of Arts and Sciences. Van Benthem is known for his research in the area of mod ...

First-order Logic

First-order logic, also known as predicate logic, quantificational logic, and first-order predicate calculus, is a collection of formal systems used in mathematics, philosophy, linguistics, and computer science. First-order logic uses quantified variables over non-logical objects, and allows the use of sentences that contain variables, so that rather than propositions such as "Socrates is a man", one can have expressions in the form "there exists x such that x is Socrates and x is a man", where "there exists" is a quantifier, while x is a variable. This distinguishes it from propositional logic, which does not use quantifiers or relations; in this sense, propositional logic is the foundation of first-order logic. A theory about a topic is usually a first-order logic together with a specified domain of discourse (over which the quantified variables range), finitely many functions from that domain to itself, finitely many predicates defined on that domain, and a set ...

Modal Logic

Modal logic is a collection of formal systems developed to represent statements about necessity and possibility. It plays a major role in philosophy of language, epistemology, metaphysics, and natural language semantics. Modal logics extend other systems by adding unary operators \Diamond and \Box, representing possibility and necessity respectively. For instance, the modal formula \Diamond P can be read as "possibly P" while \Box P can be read as "necessarily P". Modal logics can be used to represent different phenomena depending on what kind of necessity and possibility is under consideration. When \Box is used to represent epistemic necessity, \Box P states that P is epistemically necessary, or in other words that it is known. When \Box is used to represent deontic necessity, \Box P states that P is a moral or legal obligation. In the standard relational semantics for modal logic, formulas are assigned truth values relative to a possible world. A formula's truth value ...

Kripke Semantics

Kripke semantics (also known as relational semantics or frame semantics, and often confused with possible world semantics) is a formal semantics for non-classical logic systems created in the late 1950s and early 1960s by Saul Kripke and André Joyal. It was first conceived for modal logics, and later adapted to intuitionistic logic and other non-classical systems. The development of Kripke semantics was a breakthrough in the theory of non-classical logics, because the model theory of such logics was almost non-existent before Kripke (algebraic semantics existed, but were considered 'syntax in disguise'). Semantics of modal logic: the language of propositional modal logic consists of a countably infinite set of propositional variables, a set of truth-functional connectives (in this article \to and \neg), and the modal operator \Box ("necessarily"). The modal operator \Diamond ("possibly") is (classically) the dual of \Box and may be defined in terms of necessity like so: \Di ...
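
To make the relational reading of \Box and \Diamond concrete, here is a minimal evaluator over a finite model. It is only a sketch. The tuple encoding of formulas, the function name `holds`, and the example worlds `w1`, `w2` are assumptions for illustration. The truth conditions used are the usual relational ones: \Box holds at a world when its argument holds at every accessible world, and \Diamond when it holds at some accessible world.

```python
def holds(formula, world, access, valuation):
    """Evaluate a modal formula at `world`.

    formula   : nested tuples, e.g. ("box", ("var", "p"))
    access    : set of (u, v) pairs, the accessibility relation
    valuation : dict mapping variable names to the set of worlds where they hold
    """
    kind = formula[0]
    if kind == "var":
        return world in valuation.get(formula[1], set())
    if kind == "not":
        return not holds(formula[1], world, access, valuation)
    if kind == "implies":
        return (not holds(formula[1], world, access, valuation)
                or holds(formula[2], world, access, valuation))
    reachable = [v for (u, v) in access if u == world]
    if kind == "box":
        return all(holds(formula[1], v, access, valuation) for v in reachable)
    if kind == "diamond":
        return any(holds(formula[1], v, access, valuation) for v in reachable)
    raise ValueError(f"unknown connective: {kind}")


# Hypothetical two-world model: w1 sees w2, and p holds only at w2.
access = {("w1", "w2")}
valuation = {"p": {"w2"}}
p = ("var", "p")

print(holds(("box", p), "w1", access, valuation))      # p holds at every world w1 sees
print(holds(("diamond", p), "w2", access, valuation))  # w2 sees no world at all
```

At a world with no accessible worlds, such as `w2` here, every \Box formula holds vacuously and every \Diamond formula fails. In this sketch the two operators also agree with the classical duality, since `("diamond", p)` and `("not", ("box", ("not", p)))` evaluate identically at both worlds.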

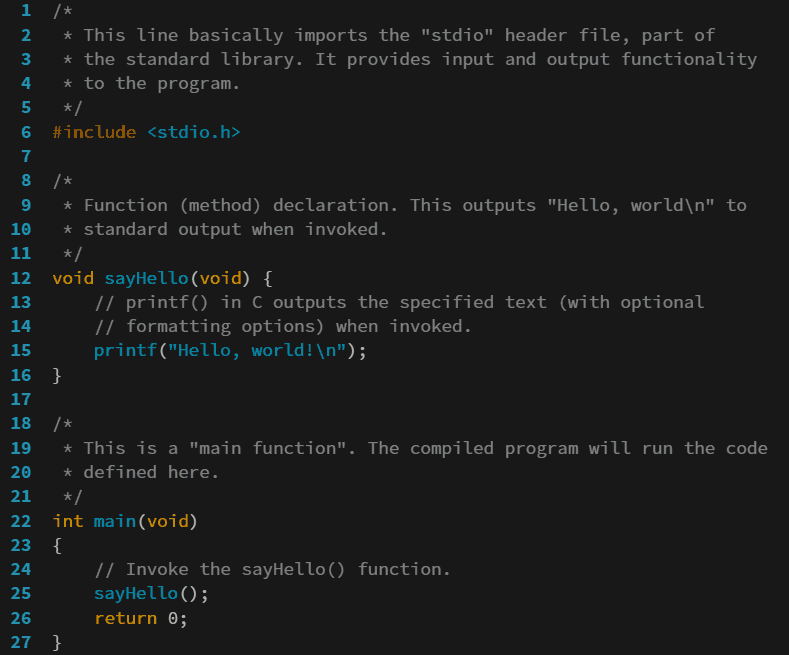

Programming Language

A programming language is a system of notation for writing computer programs. Most programming languages are text-based formal languages, but they may also be graphical. They are a kind of computer language. The description of a programming language is usually split into the two components of syntax (form) and semantics (meaning), which are usually defined by a formal language. Some languages are defined by a specification document (for example, the C programming language is specified by an ISO Standard) while other languages (such as Perl) have a dominant implementation that is treated as a reference. Some languages have both, with the basic language defined by a standard and extensions taken from the dominant implementation being common. Programming language theory is the subfield of computer science that studies the design, implementation, analysis, characterization, and classification of programming languages. Definitions: There are many considerations when defining ...

Operational Semantics

Operational semantics is a category of formal programming language semantics in which certain desired properties of a program, such as correctness, safety or security, are verified by constructing proofs from logical statements about its execution and procedures, rather than by attaching mathematical meanings to its terms (denotational semantics). Operational semantics are classified in two categories: structural operational semantics (or small-step semantics) formally describe how the individual steps of a computation take place in a computer-based system; by contrast, natural semantics (or big-step semantics) describe how the overall results of the executions are obtained. Other approaches to providing a formal semantics of programming languages include axiomatic semantics and denotational semantics. The operational semantics for a programming language describes how a valid program is interpreted as sequences of computational steps. These sequences then are t ...

Up To

Two mathematical objects a and b are called equal up to an equivalence relation R if a and b are related by R, that is, if aRb holds, that is, if the equivalence classes of a and b with respect to R are equal. This figure of speech is mostly used in connection with expressions derived from equality, such as uniqueness or count. For example, "x is unique up to R" means that all objects x under consideration are in the same equivalence class with respect to the relation R. Moreover, the equivalence relation R is often designated rather implicitly by a generating condition or transformation. For example, the statement "an integer's prime factorization is unique up to ordering" is a concise way to say that any two lists of prime factors of a given integer are equivalent with respect to the relation R that relates two lists if one can be obtained by reordering (permutation) from the other. As another example, the stat ...
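
The "unique up to ordering" example can be phrased as a small check. In this sketch the equivalence relation is "one list is a permutation of the other", tested by comparing multisets. The function name `equal_up_to_ordering` and the sample factor lists are hypothetical.

```python
from collections import Counter


def equal_up_to_ordering(xs, ys):
    """True if ys is a reordering (permutation) of xs."""
    return Counter(xs) == Counter(ys)


# Two ways of listing the prime factors of 12 = 2 * 2 * 3.
print(equal_up_to_ordering([2, 2, 3], [3, 2, 2]))
# A list that is not a factorization of 12 falls in a different class.
print(equal_up_to_ordering([2, 2, 3], [2, 3]))
```

Comparing `Counter` objects respects multiplicity, so `[2, 2, 3]` and `[2, 3]` are correctly kept apart even though they contain the same distinct primes.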