|

Big Memory

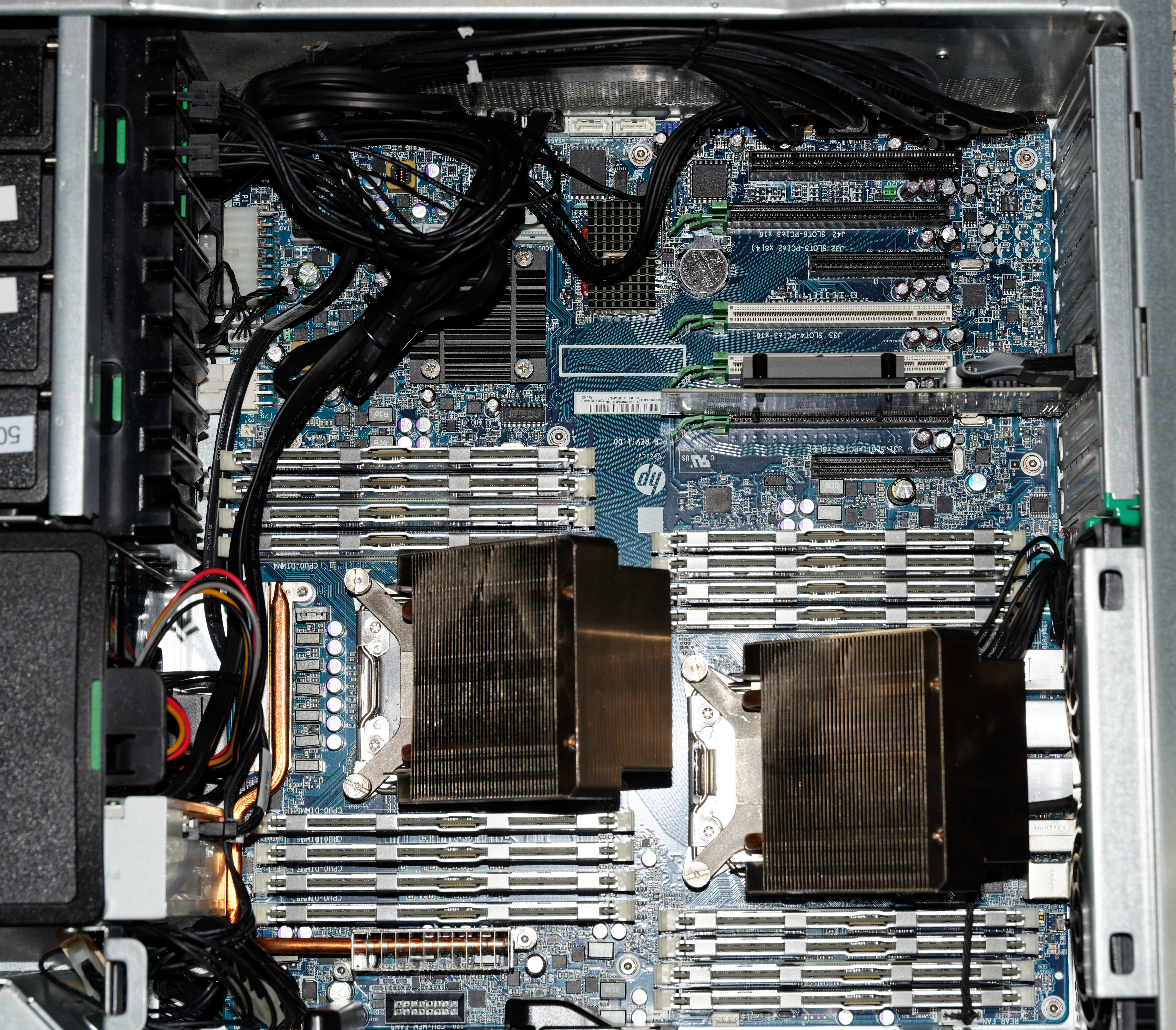

Big memory computers are machines with a large amount of random-access memory (RAM). The computers are required for databases, graph analytics, or more generally, high-performance computing, data science and big data. Some database systems called in-memory databases are designed to run mostly in memory, rarely if ever retrieving data from disk or flash memory. See list of in-memory databases. Details The performance of big memory systems depends on how the central processing units (CPUs) access the memory, via a conventional memory controller or via non-uniform memory access (NUMA). Performance also depends on the size and design of the CPU cache. Performance also depends on operating system (OS) design. The huge pages feature in Linux and other OSes can improve the efficiency of virtual memory In computing, virtual memory, or virtual storage, is a memory management technique that provides an "idealized abstraction of the storage resources that are actually available on a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Random-access Memory

Random-access memory (RAM; ) is a form of Computer memory, electronic computer memory that can be read and changed in any order, typically used to store working Data (computing), data and machine code. A random-access memory device allows data items to be read (computer), read or written in almost the same amount of time irrespective of the physical location of data inside the memory, in contrast with other direct-access data storage media (such as hard disks and Magnetic tape data storage, magnetic tape), where the time required to read and write data items varies significantly depending on their physical locations on the recording medium, due to mechanical limitations such as media rotation speeds and arm movement. In today's technology, random-access memory takes the form of integrated circuit (IC) chips with MOSFET, MOS (metal–oxide–semiconductor) Memory cell (computing), memory cells. RAM is normally associated with Volatile memory, volatile types of memory where s ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Operating System

An operating system (OS) is system software that manages computer hardware and software resources, and provides common daemon (computing), services for computer programs. Time-sharing operating systems scheduler (computing), schedule tasks for efficient use of the system and may also include accounting software for cost allocation of Scheduling (computing), processor time, mass storage, peripherals, and other resources. For hardware functions such as input and output and memory allocation, the operating system acts as an intermediary between programs and the computer hardware, although the application code is usually executed directly by the hardware and frequently makes system calls to an OS function or is interrupted by it. Operating systems are found on many devices that contain a computerfrom cellular phones and video game consoles to web servers and supercomputers. , Android (operating system), Android is the most popular operating system with a 46% market share, followed ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Distributed Computing Problems

Distribution may refer to: Mathematics *Distribution (mathematics), generalized functions used to formulate solutions of partial differential equations *Probability distribution, the probability of a particular value or value range of a variable **Cumulative distribution function, in which the probability of being no greater than a particular value is a function of that value *Frequency distribution, a list of the values recorded in a sample * Inner distribution, and outer distribution, in coding theory *Distribution (differential geometry), a subset of the tangent bundle of a manifold * Distributed parameter system, systems that have an infinite-dimensional state-space * Distribution of terms, a situation in which all members of a category are accounted for *Distributivity, a property of binary operations that generalises the distributive law from elementary algebra *Distribution (number theory) *Distribution problems, a common type of problems in combinatorics where the goa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Management

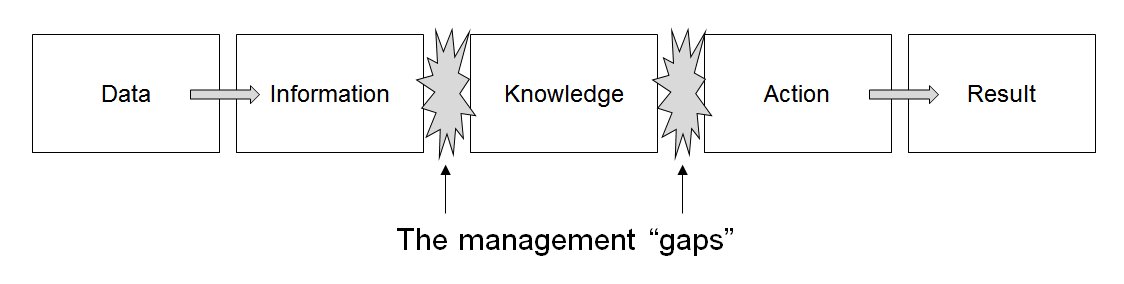

Data management comprises all disciplines related to handling data as a valuable resource, it is the practice of managing an organization's data so it can be analyzed for decision making. Concept The concept of data management emerged alongside the evolution of computing technology. In the 1950s, as computers became more prevalent, organizations began to grapple with the challenge of organizing and storing data efficiently. Early methods relied on punch cards and manual sorting, which were labor-intensive and prone to errors. The introduction of database management systems in the 1970s marked a significant milestone, enabling structured storage and retrieval of data. By the 1980s, relational database models revolutionized data management, emphasizing the importance of data as an asset and fostering a data-centric mindset in business. This era also saw the rise of data governance practices, which prioritized the organization and regulation of data to ensure quality and complian ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Big Data

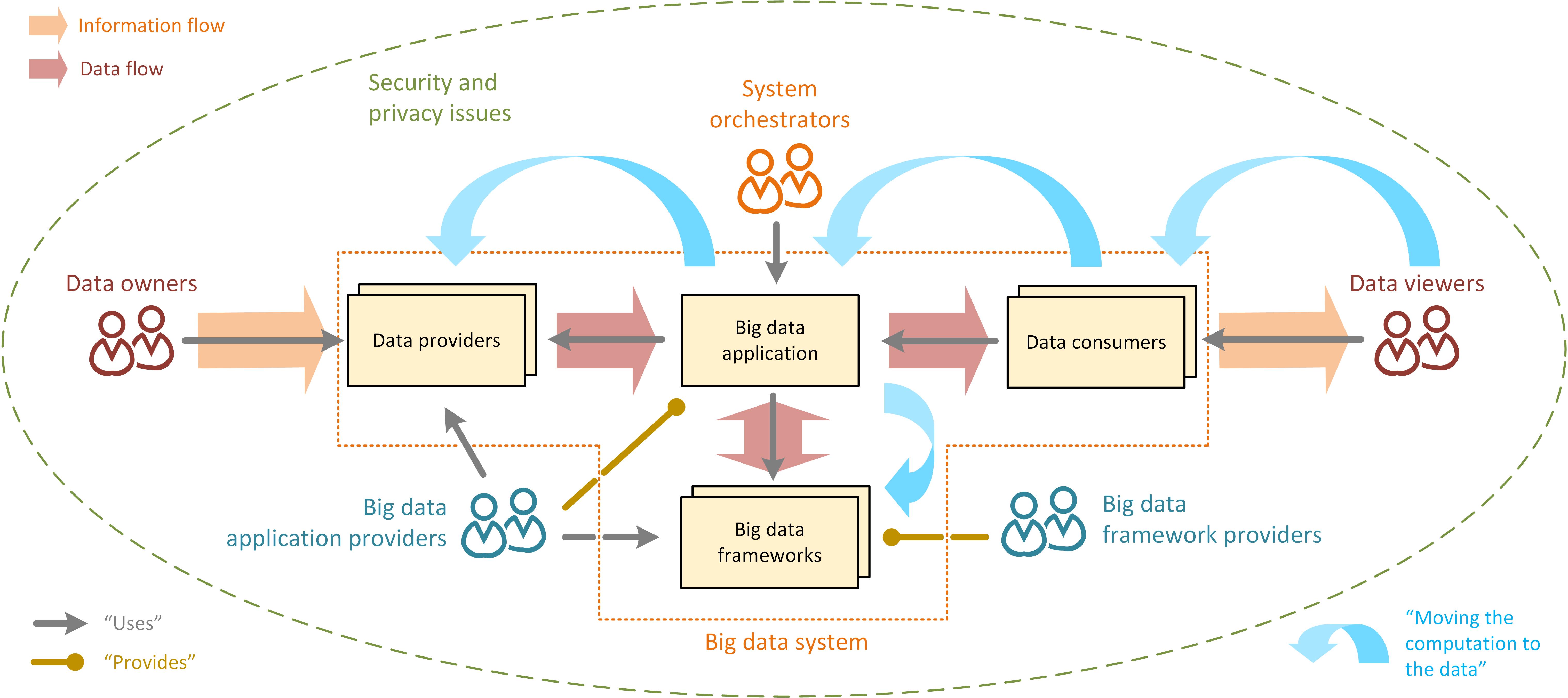

Big data primarily refers to data sets that are too large or complex to be dealt with by traditional data processing, data-processing application software, software. Data with many entries (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data analysis challenges include Automatic identification and data capture, capturing data, Computer data storage, data storage, data analysis, search, Data sharing, sharing, Data transmission, transfer, Data visualization, visualization, Query language, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: ''volume'', ''variety'', and ''velocity''. The analysis of big data presents challenges in sampling, and thus previously allowing for only observations and sampling. Thus a fourth concept, ''veracity,'' refers to the quality or insightfulness of the data. Without sufficient investm ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Transparent Huge Pages

A page, memory page, or virtual page is a fixed-length contiguous block of virtual memory, described by a single entry in a page table. It is the smallest unit of data for memory management in an operating system that uses virtual memory. Similarly, a page frame is the smallest fixed-length contiguous block of physical memory into which memory pages are mapped by the operating system. A transfer of pages between main memory and an auxiliary store, such as a hard disk drive, is referred to as paging or swapping. Explanation Computer memory is divided into pages so that information can be found more quickly. The concept is named by analogy to the pages of a printed book. If a reader wanted to find, for example, the 5,000th word in the book, they could count from the first word. This would be time-consuming. It would be much faster if the reader had a listing of how many words are on each page. From this listing they could determine which page the 5,000th word appears on, and how ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Virtual Memory

In computing, virtual memory, or virtual storage, is a memory management technique that provides an "idealized abstraction of the storage resources that are actually available on a given machine" which "creates the illusion to users of a very large (main) memory". The computer's operating system, using a combination of hardware and software, maps memory addresses used by a program, called '' virtual addresses'', into ''physical addresses'' in computer memory. Main storage, as seen by a process or task, appears as a contiguous address space or collection of contiguous segments. The operating system manages virtual address spaces and the assignment of real memory to virtual memory. Address translation hardware in the CPU, often referred to as a memory management unit (MMU), automatically translates virtual addresses to physical addresses. Software within the operating system may extend these capabilities, utilizing, e.g., disk storage, to provide a virtual address space ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Huge Pages

A page, memory page, or virtual page is a fixed-length contiguous block of virtual memory, described by a single entry in a page table. It is the smallest unit of data for memory management in an operating system that uses virtual memory. Similarly, a page frame is the smallest fixed-length contiguous block of physical memory into which memory pages are mapped by the operating system. A transfer of pages between main memory and an auxiliary store, such as a hard disk drive, is referred to as paging or swapping. Explanation Computer memory is divided into pages so that information can be found more quickly. The concept is named by analogy to the pages of a printed book. If a reader wanted to find, for example, the 5,000th word in the book, they could count from the first word. This would be time-consuming. It would be much faster if the reader had a listing of how many words are on each page. From this listing they could determine which page the 5,000th word appears on, and how ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

CPU Cache

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels (L1, L2, often L3, and rarely even L4), with different instruction-specific and data-specific caches at level 1. The cache memory is typically implemented with static random-access memory (SRAM), in modern CPUs by far the largest part of them by chip area, but SRAM is not always used for all levels (of I- or D-cache), or even any level, sometimes some latter or all levels are implemented with eDRAM. Other types of caches exist (that are not counted towards the "cache size" of the most important caches mentioned above), such as the translation lookaside buffer (TLB) which is part of the memory management unit (M ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

High-performance Computing

High-performance computing (HPC) is the use of supercomputers and computer clusters to solve advanced computation problems. Overview HPC integrates systems administration (including network and security knowledge) and parallel programming into a multidisciplinary field that combines digital electronics, computer architecture, system software, programming languages, algorithms and computational techniques. HPC technologies are the tools and systems used to implement and create high performance computing systems. Recently, HPC systems have shifted from supercomputing to computing clusters and grids. Because of the need of networking in clusters and grids, High Performance Computing Technologies are being promoted by the use of a collapsed network backbone, because the collapsed backbone architecture is simple to troubleshoot and upgrades can be applied to a single router as opposed to multiple ones. HPC integrates with data analytics in AI engineering workflows to generate ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Non-uniform Memory Access

Non-uniform memory access (NUMA) is a computer storage, computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor or memory shared between processors). NUMA is beneficial for workloads with high memory locality of reference and low lock contention, because a processor may operate on a subset of memory mostly or entirely within its own cache node, reducing traffic on the memory bus. NUMA architectures logically follow in scaling from symmetric multiprocessing (SMP) architectures. They were developed commercially during the 1990s by Unisys, Convex Computer (later Hewlett-Packard), Honeywell Information Systems Italy (HISI) (later Groupe Bull), Silicon Graphics (later Silicon Graphics International), Sequent Computer Systems (later IBM), Data General (later EMC Corporation, EMC, now Dell Te ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Memory Controller

A memory controller, also known as memory chip controller (MCC) or a memory controller unit (MCU), is a digital circuit that manages the flow of data going to and from a computer's main memory. When a memory controller is integrated into another chip, such as an integral part of a microprocessor, it is usually called an integrated memory controller (IMC). Memory controllers contain the logic necessary to read and write to dynamic random-access memory (DRAM), and to provide the critical memory refresh and other functions. Reading and writing to DRAM is performed by selecting the row and column data addresses of the DRAM as the inputs to the multiplexer circuit, where the demultiplexer on the DRAM uses the converted inputs to select the correct memory location and return the data, which is then passed back through a multiplexer to consolidate the data in order to reduce the required bus width for the operation. Memory controllers' bus widths range from 8-bit in earlier systems ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |