
Bidirectional Reflectance Distribution Function

The bidirectional reflectance distribution function (BRDF; $f_\text{r}(\omega_\text{i},\, \omega_\text{r})$) is a function of four real variables that defines how light is reflected at an opaque surface. It is employed in the optics of real-world light, in computer graphics algorithms, and in computer vision algorithms. The function takes an incoming light direction, $\omega_\text{i}$, and an outgoing direction, $\omega_\text{r}$ (taken in a coordinate system where the surface normal $\mathbf n$ lies along the *z*-axis), and returns the ratio of reflected radiance exiting along $\omega_\text{r}$ to the irradiance incident on the surface from direction $\omega_\text{i}$. Each direction $\omega$ is itself parameterized by an azimuth angle $\phi$ and a zenith angle $\theta$, so the BRDF as a whole is a function of four variables. The BRDF has units of sr$^{-1}$, where the steradian (sr) is the unit of solid angle.

The BRDF was first defined by Fred Nicodemus around 1965. The definition is:

$$f_\text{r}(\omega_\text{i},\, \omega_\text{r}) \,=\, \frac{\mathrm dL_\text{r}(\omega_\text{r})}{\mathrm dE_\text{i}(\omega_\text{i})} \,=\, \frac{1}{L_\text{i}(\omega_\text{i}) \cos\theta_\text{i}} \frac{\mathrm dL_\text{r}(\omega_\text{r})}{\mathrm d\omega_\text{i}}$$

where ...

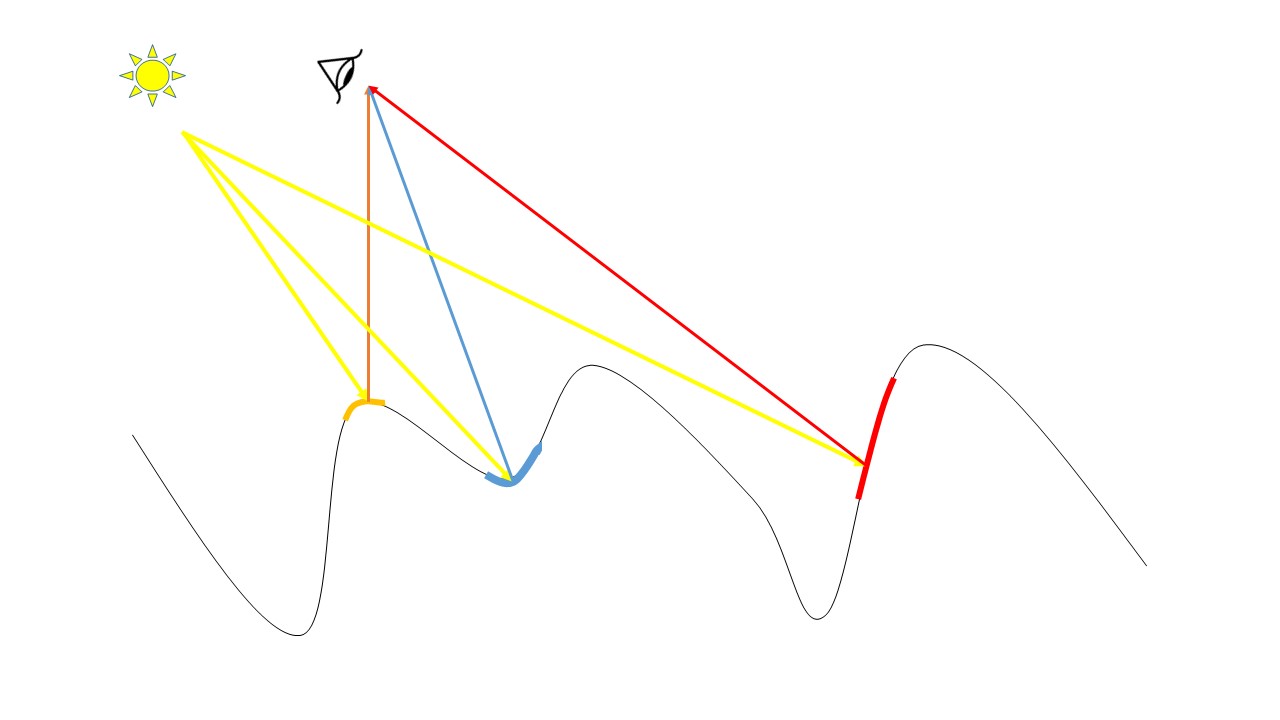

BRDF Diagram
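As a minimal sketch of the definition (written in Python, which is an assumption since the entry contains no code), the simplest BRDF is the ideal diffuse, or Lambertian, surface: its value is the constant albedo/π sr⁻¹ for every combination of the four angles. The helper names `direction`, `lambertian_brdf`, and `reflected_radiance_increment` are hypothetical; the last one rearranges the definition as $\mathrm dL_\text{r} = f_\text{r}\, L_\text{i} \cos\theta_\text{i}\, \mathrm d\omega_\text{i}$ for a small but finite solid angle.

```python
import math
from functools import partial


def direction(theta: float, phi: float) -> tuple[float, float, float]:
    """Unit vector for zenith angle theta and azimuth phi, with the surface normal on +z."""
    s = math.sin(theta)
    return (s * math.cos(phi), s * math.sin(phi), math.cos(theta))


def lambertian_brdf(theta_i, phi_i, theta_r, phi_r, albedo):
    """Ideal diffuse BRDF: albedo / pi in sr^-1, independent of all four angles."""
    return albedo / math.pi


def reflected_radiance_increment(brdf, theta_i, phi_i, theta_r, phi_r,
                                 incident_radiance, solid_angle):
    """dL_r = f_r * dE_i, where dE_i = L_i * cos(theta_i) * d(omega_i)."""
    cos_theta_i = direction(theta_i, phi_i)[2]  # z-component = cosine to the normal
    irradiance = incident_radiance * cos_theta_i * solid_angle
    return brdf(theta_i, phi_i, theta_r, phi_r) * irradiance


# Hypothetical example surface reflecting half of the incident light diffusely.
example_brdf = partial(lambertian_brdf, albedo=0.5)
```

Because the Lambertian value ignores the angles, it is a useful baseline; any measured or analytic BRDF with the same four-angle signature could be passed in place of `example_brdf`.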

The bidirectional reflectance distribution function (BRDF; f_(\omega_,\, \omega_) ) is a function of four real variables that defines how light is reflected at an opaque surface. It is employed in the optics of real-world light, in computer graphics algorithms, and in computer vision algorithms. The function takes an incoming light direction, \omega_, and outgoing direction, \omega_ (taken in a coordinate system where the surface normal \mathbf n lies along the ''z''-axis), and returns the ratio of reflected radiance exiting along \omega_ to the irradiance incident on the surface from direction \omega_. Each direction \omega is itself parameterized by azimuth angle \phi and zenith angle \theta, therefore the BRDF as a whole is a function of 4 variables. The BRDF has units sr−1, with steradians (sr) being a unit of solid angle. Definition The BRDF was first defined by Fred Nicodemus around 1965. The definition is: f_(\omega_,\, \omega_) \,=\, \frac \,=\, \frac\frac wher ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bidirectional Texture Function

Bidirectional texture function (BTF) is a 6-dimensional function depending on planar texture coordinates (x, y) as well as on the view and illumination spherical angles. In practice, this function is obtained as a set of several thousand color images of a material sample taken under different camera and light positions. The BTF is a representation of the appearance of texture as a function of viewing and illumination direction. It is an image-based representation, since the geometry of the surface is unknown and not measured. The BTF is typically captured by imaging the surface at a sampling of the hemisphere of possible viewing and illumination directions, so BTF measurements are collections of images. The term BTF was first introduced in …, and similar terms have since been introduced, including BSSRDF and SBRDF (spatial BRDF). SBRDF has a very similar definition to BTF; that is, BTF is also a spatially varying BRDF. To cope with massive BTF data with high redundancy, many compression methods were ...
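A minimal sketch of how a measured BTF can be stored and evaluated, assuming one image per measured (view, light) direction pair and nearest-neighbor lookup in direction space. The class `SampledBTF` and its methods are hypothetical; practical systems interpolate between samples and compress the data rather than keeping raw images.

```python
import math
from dataclasses import dataclass, field

Direction = tuple[float, float]  # (zenith theta, azimuth phi) in radians


def _unit(d: Direction) -> tuple[float, float, float]:
    """Convert spherical angles to a unit vector (normal along +z)."""
    theta, phi = d
    s = math.sin(theta)
    return (s * math.cos(phi), s * math.sin(phi), math.cos(theta))


def _angle_between(a: Direction, b: Direction) -> float:
    """Great-circle angle between two directions, clamped for rounding safety."""
    dot = sum(x * y for x, y in zip(_unit(a), _unit(b)))
    return math.acos(max(-1.0, min(1.0, dot)))


@dataclass
class SampledBTF:
    """Hypothetical container: one image (rows of RGB tuples) per (view, light) pair."""
    images: dict = field(default_factory=dict)

    def add_image(self, view: Direction, light: Direction, image) -> None:
        self.images[(view, light)] = image

    def lookup(self, x: int, y: int, view: Direction, light: Direction):
        """Approximate BTF(x, y, theta_v, phi_v, theta_l, phi_l) by the closest measured pair."""
        best = min(
            self.images,
            key=lambda k: _angle_between(k[0], view) + _angle_between(k[1], light),
        )
        return self.images[best][y][x]
```

The six arguments of `lookup` mirror the six dimensions of the BTF: two texture coordinates plus two angles each for viewing and illumination.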

Solar Cell

A solar cell, or photovoltaic cell, is an electronic device that converts the energy of light directly into electricity by the photovoltaic effect, which is a physical and chemical phenomenon (Solar Cells, chemistryexplained.com). It is a form of photoelectric cell, defined as a device whose electrical characteristics, such as current, voltage, or resistance, vary when exposed to light. Individual solar cell devices are often the electrical ...

Object Recognition

Object recognition is technology in the field of computer vision for finding and identifying objects in an image or video sequence. Humans recognize a multitude of objects in images with little effort, even though the image of an object may vary with viewpoint, size, and scale, or when the object is translated or rotated. Objects can even be recognized when they are partially obstructed from view. This task is still a challenge for computer vision systems, and many approaches to it have been implemented over multiple decades.

Approaches based on CAD-like object models
* Edge detection
* Primal sketch
* Marr, Mohan and Nevatia
* Lowe
* Olivier Faugeras

Recognition by parts
* Generalized cylinders (Thomas Binford)
* Geons (Irving Biederman)
* Dickinson, Forsyth and Ponce

Appearance-based methods
* Use example images (called templates or exemplars) of the objects to perform recognition
* Objects ...
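The appearance-based idea of recognizing objects from example images can be sketched with plain template matching. This is a minimal illustration, assuming grayscale images as lists of rows and using the sum of squared differences (SSD) as the similarity score; `match_template`, `recognize`, and the `max_score` threshold are hypothetical names. Unlike the methods listed above, this sketch does not cope with changes in scale, rotation, or viewpoint.

```python
def ssd(image, template, top, left):
    """Sum of squared differences between the template and the image patch at (top, left)."""
    return sum(
        (image[top + r][left + c] - template[r][c]) ** 2
        for r in range(len(template))
        for c in range(len(template[0]))
    )


def match_template(image, template):
    """Slide the template over every valid position; return ((row, col), best_score)."""
    th, tw = len(template), len(template[0])
    ih, iw = len(image), len(image[0])
    best_pos, best_score = None, float("inf")
    for top in range(ih - th + 1):
        for left in range(iw - tw + 1):
            score = ssd(image, template, top, left)
            if score < best_score:
                best_pos, best_score = (top, left), score
    return best_pos, best_score


def recognize(image, exemplars, max_score):
    """Return (label, position) of the best-matching exemplar, or None if none is close enough."""
    best = None
    for label, template in exemplars.items():
        pos, score = match_template(image, template)
        if score <= max_score and (best is None or score < best[2]):
            best = (label, pos, score)
    return None if best is None else best[:2]
```

Lower SSD means a closer match, so finding the object reduces to a search for the minimum score over positions and exemplars.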

Inverse Problem

An inverse problem in science is the process of calculating, from a set of observations, the causal factors that produced them: for example, calculating an image in X-ray computed tomography, reconstructing a source in acoustics, or calculating the density of the Earth from measurements of its gravity field. It is called an inverse problem because it starts with the effects and then calculates the causes. It is the inverse of a forward problem, which starts with the causes and then calculates the effects. Inverse problems are some of the most important mathematical problems in science and mathematics because they tell us about parameters that we cannot directly observe. They have wide application in system identification, optics, radar, acoustics, communication theory, signal processing, medical imaging, computer vision, geophysics, oceanography, astronomy, remote sensing, natural language processing, machine learning, nondestructive testing, slope stability analysis and man ...
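The forward/inverse distinction can be made concrete with a linear model. This is a minimal sketch, assuming the effects are related to the causes by a known square matrix, y = A x, with noise-free data; `forward` and `solve_inverse` are hypothetical names. Real inverse problems are often ill-posed or noisy and typically need regularization, which this sketch does not attempt.

```python
def forward(model, x):
    """Forward problem: from causes x, compute effects y = A x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in model]


def solve_inverse(model, y):
    """Inverse problem: recover x from y = A x by Gaussian elimination with partial pivoting."""
    n = len(model)
    aug = [list(map(float, row)) + [float(yi)] for row, yi in zip(model, y)]
    for col in range(n):
        # Choose the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("forward model is singular: causes cannot be uniquely recovered")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - s) / aug[r][r]
    return x
```

When the forward model collapses distinct causes onto the same effects (a singular matrix), the causes cannot be uniquely recovered, which is why the sketch raises an error in that case.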

Rendering Equation

In computer graphics, the rendering equation is an integral equation in which the equilibrium radiance leaving a point is given as the sum of emitted plus reflected radiance under a geometric optics approximation. It was simultaneously introduced into computer graphics by David Immel et al. and James Kajiya in 1986. The various realistic rendering techniques in computer graphics attempt to solve this equation. The physical basis for the rendering equation is the law of conservation of energy. Assuming that *L* denotes radiance, at each particular position and direction the outgoing light ($L_\text{o}$) is the sum of the emitted light ($L_\text{e}$) and the reflected light. The reflected light itself is the sum, over all incoming directions, of the incoming light ($L_\text{i}$) multiplied by the surface reflection and the cosine of the incident angle.

The rendering equation may be written in the form

$$L_\text{o}(\mathbf x, \omega_\text{o}, \lambda, t) = L_\text{e}(\mathbf x, \omega_\text{o}, \lambda, t) + \int_\Omega f_\text{r}(\mathbf x, \omega_\text{i}, \omega_\text{o}, \lambda, t)\, L_\text{i}(\mathbf x, \omega_\text{i}, \lambda, t)\, (\omega_\text{i} \cdot \mathbf n)\, \mathrm d\omega_\text{i}$$
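Many rendering techniques estimate the integral with Monte Carlo sampling. The sketch below is a minimal illustration, assuming a single point with normal +z, a single wavelength and a static scene (so λ and t are dropped), and uniform hemisphere sampling with pdf 1/(2π); `outgoing_radiance` and its parameters are hypothetical names.

```python
import math
import random


def sample_uniform_hemisphere(rng):
    """Uniformly sample a unit direction on the hemisphere around +z (pdf = 1 / (2*pi))."""
    u1, u2 = rng.random(), rng.random()
    cos_theta = u1  # z uniform in [0, 1) gives uniform solid-angle density
    sin_theta = math.sqrt(max(0.0, 1.0 - cos_theta * cos_theta))
    phi = 2.0 * math.pi * u2
    return (sin_theta * math.cos(phi), sin_theta * math.sin(phi), cos_theta)


def outgoing_radiance(emitted, brdf, incoming_radiance, wo, n_samples=100_000, seed=0):
    """Estimate L_o = L_e + integral of f_r * L_i * (w_i . n) d(w_i), with n = (0, 0, 1)."""
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(n_samples):
        wi = sample_uniform_hemisphere(rng)
        cos_term = wi[2]  # w_i . n for n = +z
        total += brdf(wi, wo) * incoming_radiance(wi) * cos_term / pdf
    return emitted + total / n_samples
```

For a Lambertian BRDF ρ/π under constant incoming radiance L_i, the integral evaluates exactly to ρ L_i, so the estimate for ρ = 0.5, L_i = 1 and L_e = 0.1 should land near 0.6, which gives a quick correctness check.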

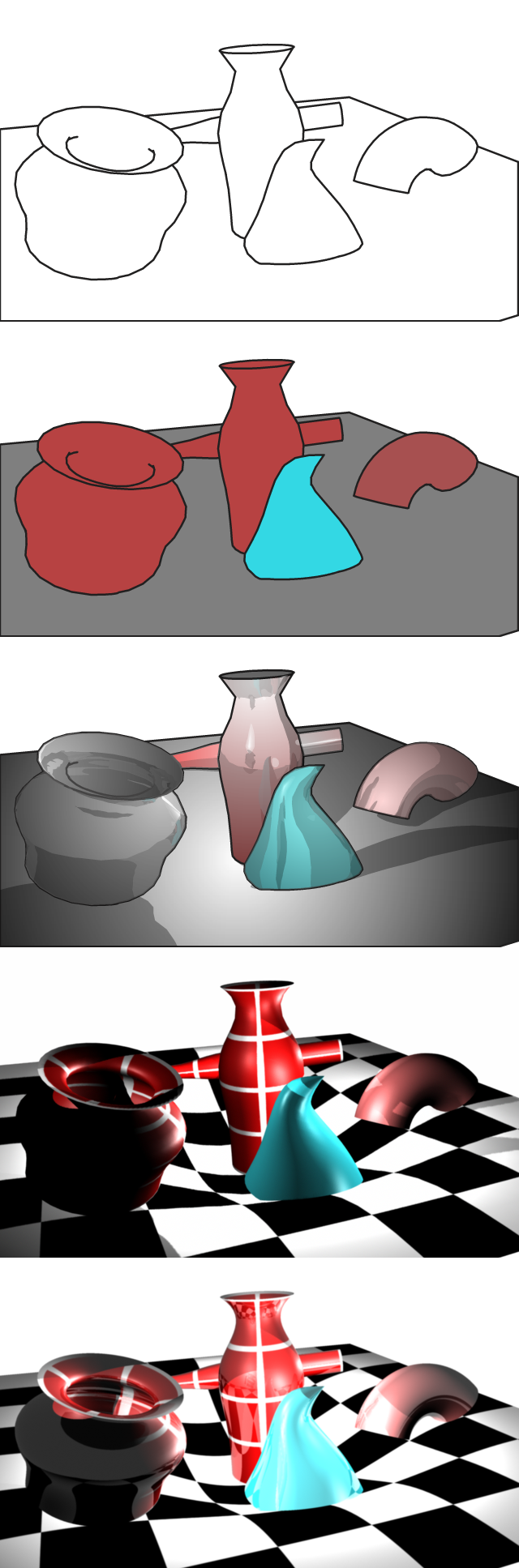

Rendering (Computer Graphics)

Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a *scene file* containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, texture, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output. Rendering is one of the major sub-topics of 3D computer graphics, and in practice it is always connected to the others. It is the last major step in the gr ...
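The scene-file-to-raster pipeline can be sketched with a tiny ray caster. This is a minimal illustration under stated assumptions: JSON stands in for a scene description language (real renderers use their own formats), the scene holds one sphere and one directional light, shading is Lambertian with no shadows or textures, and the camera is outside the sphere looking along -z. The names `SCENE_JSON`, `render`, and `to_pgm` are hypothetical.

```python
import json
import math

SCENE_JSON = """
{
  "camera": {"position": [0, 0, 0], "width": 16, "height": 12, "fov_deg": 60},
  "sphere": {"center": [0, 0, -3], "radius": 1.0, "albedo": 0.8},
  "light":  {"direction": [0, 0, 1], "intensity": 1.0}
}
"""


def _sub(a, b):
    return [x - y for x, y in zip(a, b)]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _normalize(v):
    length = math.sqrt(_dot(v, v))
    return [x / length for x in v]


def intersect_sphere(origin, direction, center, radius):
    """Nearest positive t with |origin + t*direction - center| = radius (direction is unit)."""
    oc = _sub(origin, center)
    b = _dot(oc, direction)
    c = _dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 0 else None


def render(scene):
    """Render the scene into a grayscale raster (rows of floats in [0, 1])."""
    cam, sph, light = scene["camera"], scene["sphere"], scene["light"]
    w, h = cam["width"], cam["height"]
    scale = math.tan(math.radians(cam["fov_deg"]) / 2)
    aspect = w / h
    to_light = _normalize(light["direction"])
    image = []
    for j in range(h):
        row = []
        for i in range(w):
            # Map the pixel center onto an image plane at z = -1.
            x = (2 * (i + 0.5) / w - 1) * scale * aspect
            y = (1 - 2 * (j + 0.5) / h) * scale
            d = _normalize([x, y, -1.0])
            t = intersect_sphere(cam["position"], d, sph["center"], sph["radius"])
            if t is None:
                row.append(0.0)  # background
                continue
            hit = [o + t * di for o, di in zip(cam["position"], d)]
            normal = _normalize(_sub(hit, sph["center"]))
            shade = sph["albedo"] * light["intensity"] * max(0.0, _dot(normal, to_light))
            row.append(min(1.0, shade))
        image.append(row)
    return image


def to_pgm(image):
    """Serialize the raster as a plain-text PGM (P2) image file."""
    h, w = len(image), len(image[0])
    lines = ["P2", f"{w} {h}", "255"]
    lines += [" ".join(str(round(v * 255)) for v in row) for row in image]
    return "\n".join(lines) + "\n"
```

Usage: `to_pgm(render(json.loads(SCENE_JSON)))` yields the contents of a small raster image file, mirroring the scene file, rendering program, and image file stages described in the text.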

Radiometric

Radiometry is a set of techniques for measuring electromagnetic radiation, including visible light. Radiometric techniques in optics characterize the distribution of the radiation's power in space, as opposed to photometric techniques, which characterize the light's interaction with the human eye. The fundamental difference between radiometry and photometry is that radiometry covers the entire optical radiation spectrum, while photometry is limited to the visible spectrum. Radiometry is distinct from quantum techniques such as photon counting. The use of radiometers to determine the temperature of objects and gases by measuring radiation flux is called pyrometry. Handheld pyrometer devices are often marketed as infrared thermometers. Radiometry is important in astronomy, especially radio astronomy, and plays a significant role in Earth remote sensing. The measurement techniques categorized as *radiometry* in optics are called *photometry* in some astronomical applications, ...
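Pyrometry infers temperature from measured radiation. A minimal sketch, assuming a gray body of known emissivity and a measurement of total radiant exitance, inverts the Stefan–Boltzmann law M = εσT⁴. The function names are hypothetical, and practical pyrometers often measure only a limited spectral band rather than the total exitance used here.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W m^-2 K^-4 (CODATA 2018)


def radiant_exitance(temperature_k, emissivity=1.0):
    """Total radiant exitance M = emissivity * sigma * T^4 of a gray body, in W/m^2."""
    return emissivity * STEFAN_BOLTZMANN * temperature_k ** 4


def temperature_from_exitance(exitance_w_m2, emissivity=1.0):
    """Invert the Stefan-Boltzmann law to estimate temperature (K) from measured exitance."""
    if exitance_w_m2 < 0 or not 0 < emissivity <= 1:
        raise ValueError("need non-negative exitance and 0 < emissivity <= 1")
    return (exitance_w_m2 / (emissivity * STEFAN_BOLTZMANN)) ** 0.25
```

The emissivity argument matters: assuming a perfect black body (ε = 1) for a surface that actually emits less makes the estimated temperature too low.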

Helmholtz Reciprocity

The Helmholtz reciprocity principle describes how a ray of light and its reverse ray encounter matched optical adventures, such as reflections, refractions, and absorptions in a passive medium or at an interface. It does not apply to moving, non-linear, or magnetic media. For example, incoming and outgoing light can be considered as reversals of each other without affecting the bidirectional reflectance distribution function (BRDF) outcome (Hapke, B. (1993). *Theory of Reflectance and Emittance Spectroscopy*, Cambridge University Press, Cambridge UK, Section 10C, pages 263–264). If light reflected from a material whose BRDF obeys the Helmholtz reciprocity principle is measured with a sensor, the sensor and the light source can be swapped and the measured flux remains the same. In the computer graphics scheme of global illumination, the Helmholtz reciprocity principle is important if the global illumination algorithm reverses light paths (...

Linear Optics

Linear optics is a sub-field of optics, consisting of linear systems, and is the opposite of nonlinear optics. Linear optics includes most applications of lenses, mirrors, waveplates, diffraction gratings, and many other common optical components and systems. If an optical system is linear, it has the following properties (among others):

* If monochromatic light enters an unchanging linear-optical system, the output will be at the same frequency. For example, if red light enters a lens, it will still be red when it exits the lens.
* The superposition principle is valid for linear-optical systems. For example, if a mirror transforms light input A into output B, and input C into output D, then an input consisting of A and C simultaneously gives an output of B and D simultaneously.
* Relatedly, if the input light is made more intense, then the output light is made more intense but otherwise unchanged.

These properties are violated in nonlinear optics, which frequently involves high-pow ...
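The superposition and scaling properties listed above can be tested numerically. This is a minimal sketch, assuming that polarization-preserving optics are described by 2×2 Jones matrices acting on complex field amplitudes (Ex, Ey), with an ideal linear polarizer as the example element. `square_law` is a hypothetical toy stand-in for a nonlinear response, not a physical model, and all function names are illustrative.

```python
import math


def polarizer(angle_rad):
    """Jones matrix of an ideal linear polarizer with its transmission axis at angle_rad."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return ((c * c, c * s), (c * s, s * s))


def apply(matrix, field):
    """A linear optical element maps the input Jones vector (Ex, Ey) to M @ field."""
    return (matrix[0][0] * field[0] + matrix[0][1] * field[1],
            matrix[1][0] * field[0] + matrix[1][1] * field[1])


def square_law(field):
    """Toy nonlinear response (output ~ field squared); for contrast only."""
    return (field[0] ** 2, field[1] ** 2)


def add(u, v):
    return (u[0] + v[0], u[1] + v[1])


def scale(k, v):
    return (k * v[0], k * v[1])


def obeys_superposition(system, a, c, k=2.5, tol=1e-12):
    """Check system(a + c) == system(a) + system(c) and system(k * a) == k * system(a)."""
    def close(u, v):
        return all(abs(x - y) <= tol for x, y in zip(u, v))
    additive = close(system(add(a, c)), add(system(a), system(c)))
    homogeneous = close(system(scale(k, a)), scale(k, system(a)))
    return additive and homogeneous
```

Any matrix passes this check, which is why Jones-matrix optics is linear; the squared response fails because (A + C)² contains a cross term 2AC that neither input produces alone.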

Luminescence

Luminescence is the spontaneous emission of light by a substance that does not result from heat, sometimes called "cold light". It is thus a form of cold-body radiation. It can be caused by chemical reactions, electrical energy, subatomic motions, or stress on a crystal. This distinguishes luminescence from incandescence, which is light emitted by a substance as a result of heating. Historically, radioactivity was thought of as a form of "radio-luminescence", although it is today considered to be separate since it involves more than electromagnetic radiation. The dials, hands, scales, and signs of aviation and navigational instruments and markings are often coated with luminescent materials in a process known as "luminising".

The following are types of luminescence:

* Chemiluminescence, the emission of light as a result of a chemical reaction
  * Bioluminescence, a result of biochemical reactions in a living organism
  * Electrochemiluminescence, a result of an electrochemical reaction
  * Lyolumine ...

Iridescence

Iridescence (also known as goniochromism) is the phenomenon of certain surfaces that appear to gradually change color as the angle of view or the angle of illumination changes. Examples of iridescence include soap bubbles, feathers, butterfly wings, and seashell nacre, as well as minerals such as opal. It is a kind of structural coloration caused by wave interference of light in microstructures or thin films. Pearlescence is a related effect where some or most of the reflected light is white. The term pearlescent is used to describe certain paint finishes, usually in the automotive industry, which actually produce iridescent effects.

The word *iridescence* is derived in part from the Greek word ἶρις *îris* (gen. ἴριδος *íridos*), meaning *rainbow*, and is combined with the Latin suffix *-escent*, meaning "having a tendency toward". Iris in turn derives from the goddess Iris of Greek mythology, who is the personification of the rainbow and ac ...
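Thin-film interference shows why the color depends on angle. This is a minimal sketch, assuming a single film (such as a soap film) surrounded by air, so that only the top-surface reflection picks up a half-wave phase shift. Reflection is then strongest when 2 n d cos θ_t = (m + ½) λ, where θ_t is the refraction angle inside the film. The function name and the example values (n = 1.33, d = 500 nm) are illustrative assumptions.

```python
import math


def reflected_peaks_nm(thickness_nm, film_index, incidence_deg, visible=(400.0, 700.0)):
    """Wavelengths (nm) of constructive interference reflected from a thin film in air.

    Uses 2 * n * d * cos(theta_t) = (m + 1/2) * wavelength, m = 0, 1, 2, ...
    """
    sin_t = math.sin(math.radians(incidence_deg)) / film_index  # Snell's law from air (n = 1)
    cos_t = math.sqrt(1.0 - sin_t * sin_t)
    path = 2.0 * film_index * thickness_nm * cos_t  # optical path difference
    peaks = []
    m = 0
    while True:
        wavelength = path / (m + 0.5)
        if wavelength < visible[0]:
            break  # higher orders only get shorter
        if wavelength <= visible[1]:
            peaks.append(wavelength)
        m += 1
    return peaks
```

For the example film, the visible reflection peak sits near 532 nm (green) at normal incidence and moves to about 451 nm (blue) at 45°. The path difference shrinks as cos θ_t decreases, so the reflected color shifts as the viewing angle changes.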