Backpropagation Through Time

Backpropagation through time (BPTT) is a gradient-based technique for training certain types of recurrent neural networks, such as Elman networks. The algorithm was independently derived by numerous researchers.

Algorithm: The training data for a recurrent neural network is an ordered sequence of $k$ input-output pairs, $\langle \mathbf{a}_0, \mathbf{y}_0 \rangle, \langle \mathbf{a}_1, \mathbf{y}_1 \rangle, \langle \mathbf{a}_2, \mathbf{y}_2 \rangle, \ldots, \langle \mathbf{a}_{k-1}, \mathbf{y}_{k-1} \rangle$. An initial value must be specified for the hidden state $\mathbf{x}_0$, typically chosen to be a zero vector. BPTT begins by unfolding a recurrent neural network in time. The unfolded network contains $k$ inputs and outputs, but every copy of the network shares the same parameters. Then, the backpropagation algorithm is used to find the gradient of the loss function with respect to all the network parameters. Consider an example of a neural network that contains a recurrent layer $f$ and a feedforward layer $g$. There are diff ...
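The unfold-then-backpropagate idea can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and not taken from the entry above: a scalar hidden state with a tanh recurrence, a linear readout, a squared-error loss, and the hypothetical names `forward`, `loss`, and `bptt`.

```python
import math

def forward(params, inputs, h0=0.0):
    """Unfold the recurrence over the sequence; every step shares the same params."""
    w, u, v = params                     # recurrent, input, and readout weights (assumed)
    hs = [h0]                            # hidden state starts at zero
    for a in inputs:
        hs.append(math.tanh(w * hs[-1] + u * a))
    preds = [v * h for h in hs[1:]]      # one output per time step
    return hs, preds

def loss(params, inputs, targets):
    """Sum of squared errors over all time steps (an assumed loss)."""
    _, preds = forward(params, inputs)
    return sum(0.5 * (p - y) ** 2 for p, y in zip(preds, targets))

def bptt(params, inputs, targets):
    """Return (dL/dw, dL/du, dL/dv) by walking the unfolded steps backward."""
    w, u, v = params
    hs, preds = forward(params, inputs)
    gw = gu = gv = 0.0
    dh_next = 0.0                        # gradient reaching h_t from later steps
    for t in reversed(range(len(inputs))):
        err = preds[t] - targets[t]
        h_t, h_prev = hs[t + 1], hs[t]
        gv += err * h_t                  # readout weight is shared, so gradients add up
        dh = err * v + dh_next           # total gradient with respect to h_t
        dz = dh * (1.0 - h_t ** 2)       # back through tanh
        gw += dz * h_prev
        gu += dz * inputs[t]
        dh_next = dz * w                 # hand the gradient to h_{t-1}
    return gw, gu, gv

params = (0.5, -0.3, 0.8)
inputs = [1.0, 0.5, -1.0, 2.0]
targets = [0.2, -0.1, 0.4, 0.0]
grads = bptt(params, inputs, targets)
```

Because the same three weights appear in every unfolded copy, each weight's gradient is the sum of its per-step contributions, which is why the loop accumulates into `gw`, `gu`, and `gv`.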

Gradient Method

In optimization, a gradient method is an algorithm to solve problems of the form $\min_{x \in \mathbb{R}^n} f(x)$ with the search directions defined by the gradient of the function at the current point. Examples of gradient methods are gradient descent and the conjugate gradient method.

See also:
* Gradient descent
* Stochastic gradient descent
* Coordinate descent
* Frank–Wolfe algorithm
* Landweber iteration
* Random coordinate descent
* Conjugate gradient method
* Derivation of the conjugate gradient method
* Nonlinear conjugate gradient method
* Biconjugate gradient method
* Biconjugate gradient stabilized method
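As a small illustration of a search direction defined by the gradient, the sketch below runs plain gradient descent on a two-variable quadratic. The objective, the fixed step size, the iteration count, and the names `gradient_descent` and `grad_f` are all assumptions chosen for the example.

```python
def gradient_descent(grad, x0, step=0.1, iters=200):
    """Repeatedly move against the gradient with a fixed step size."""
    x = list(x0)
    for _ in range(iters):
        g = grad(x)
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x

def grad_f(x):
    """Gradient of the assumed objective f(x) = (x0 - 3)^2 + 2 * (x1 + 1)^2."""
    return [2.0 * (x[0] - 3.0), 4.0 * (x[1] + 1.0)]

x_min = gradient_descent(grad_f, [0.0, 0.0])
```

For this objective the iterates approach the minimizer at (3, -1). A step size that is too large for the curvature of the function would make the iteration diverge instead.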

Recurrent Neural Network

Recurrent neural networks (RNNs) are a class of artificial neural networks designed for processing sequential data, such as text, speech, and time series, where the order of elements is important. Unlike feedforward neural networks, which process inputs independently, RNNs utilize recurrent connections, where the output of a neuron at one time step is fed back as input to the network at the next time step. This enables RNNs to capture temporal dependencies and patterns within sequences. The fundamental building block of RNNs is the *recurrent unit*, which maintains a *hidden state*: a form of memory that is updated at each time step based on the current input and the previous hidden state. This feedback mechanism allows the network to learn from past inputs and incorporate that knowledge into its current processing. RNNs have been successfully applied to tasks such as unsegmented, connected handwriting recognition, speech recognition, natural language processing, and neural ...
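The hidden-state update can be sketched as follows. This is a minimal illustration under assumptions not stated in the entry: a tanh activation, small hand-picked weight matrices, and the hypothetical names `rnn_step` and `run_rnn`.

```python
import math

def rnn_step(h_prev, x, W_h, W_x, b):
    """New hidden state from the previous hidden state and the current input."""
    n = len(h_prev)
    return [
        math.tanh(
            sum(W_h[i][j] * h_prev[j] for j in range(n))
            + sum(W_x[i][k] * x[k] for k in range(len(x)))
            + b[i]
        )
        for i in range(n)
    ]

def run_rnn(xs, W_h, W_x, b):
    """Feed a sequence through the recurrent unit, starting from a zero hidden state."""
    h = [0.0] * len(b)
    for x in xs:
        h = rnn_step(h, x, W_h, W_x, b)
    return h

W_h = [[0.5, -0.2], [0.1, 0.3]]   # hidden-to-hidden weights (assumed values)
W_x = [[1.0], [-1.0]]             # input-to-hidden weights (assumed values)
b = [0.0, 0.0]
sequence = [[1.0], [0.0], [-1.0]]

h_forward = run_rnn(sequence, W_h, W_x, b)
h_reversed = run_rnn(list(reversed(sequence)), W_h, W_x, b)
```

Running the same inputs in reverse order yields a different final hidden state, which is one way to see that the network is sensitive to the order of the sequence.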

Unfold Through Time

Unfold may refer to:

Science
* Unfoldable cardinal, in mathematics
* Unfold (higher-order function), in computer science, a family of anamorphism functions
* Unfoldment (disambiguation), in spirituality and physics
* Unfolded protein response, in biochemistry
* Equilibrium unfolding, in biochemistry
* Unfolded state (denatured protein), in biochemistry
* Maximum variance unfolding (semidefinite embedding), in computer science

Music
* *Unfold* (Marié Digby album), 2008
* *Unfold* (John O'Callaghan album), 2011
* *Unfold* (Almah album), 2013
* *Unfold* (The Necks album), 2017
* "Unfold" (Porter Robinson song), 2021
* "Unfold", a song by De La Soul from the 2016 album *And the Anonymous Nobody...*

Technology
* Un ...

Zero Vector

In mathematics, a zero element is one of several generalizations of the number zero to other algebraic structures. These alternate meanings may or may not reduce to the same thing, depending on the context.

Additive identities: An *additive identity* is the identity element in an additive group or monoid. It corresponds to the element 0 such that for all $x$ in the group, $0 + x = x + 0 = x$. Some examples of additive identity include:
* The zero vector under vector addition: the vector whose components are all 0; in a normed vector space its norm (length) is also 0. Often denoted as $\mathbf{0}$ or $\vec{0}$.
* The zero function or zero map defined by $z(x) = 0$, under pointwise addition
* The *empty set* under set union
* An *empty sum* or *empty coproduct*
* An *initial object* in a category (an empty coproduct, and so an identity under coproducts)

Absorbing elements: An *absorbing element* in a multiplicative semigroup or semiring generalises the property $0 \cdot x = 0$. Examples include:
* The *empty set*, ...

Backpropagation

In machine learning, backpropagation is a gradient computation method commonly used when training a neural network to compute its parameter updates. It is an efficient application of the chain rule to neural networks. Backpropagation computes the gradient of a loss function with respect to the weights of the network for a single input–output example. It does so efficiently, computing the gradient one layer at a time and iterating backward from the last layer to avoid redundant calculations of intermediate terms in the chain rule; this can be derived through dynamic programming. Strictly speaking, the term *backpropagation* refers only to an algorithm for efficiently computing the gradient, not how the gradient is used. The term is often used loosely, however, to refer to the entire learning algorithm, including how the gradient is used, such as by stochastic gradient descent, or as an intermediate step in a more complicated optimizer, such as Adaptive Moment Estimation. The ...
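The layer-by-layer use of the chain rule can be sketched on a tiny two-layer network. The architecture (a scalar tanh hidden unit followed by a linear output), the squared-error loss, and the names `two_layer_forward`, `two_layer_loss`, and `two_layer_backward` are assumptions made for this illustration.

```python
import math

def two_layer_forward(w1, w2, x):
    """Hidden activation h = tanh(w1 * x), output y = w2 * h."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y

def two_layer_loss(w1, w2, x, target):
    """Squared error for a single input-output example (an assumed loss)."""
    _, y = two_layer_forward(w1, w2, x)
    return 0.5 * (y - target) ** 2

def two_layer_backward(w1, w2, x, target):
    """Gradients (dL/dw1, dL/dw2), computed from the last layer backward."""
    h, y = two_layer_forward(w1, w2, x)
    dy = y - target                 # dL/dy at the output
    dw2 = dy * h                    # last layer first
    dh = dy * w2                    # reuse dy instead of recomputing it
    dw1 = dh * (1.0 - h * h) * x    # then the earlier layer, through tanh
    return dw1, dw2

dw1, dw2 = two_layer_backward(0.7, -1.2, 0.5, 0.3)
```

The value `dy` is computed once and reused for both weights, which is the kind of shared intermediate term that working backward avoids recalculating.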

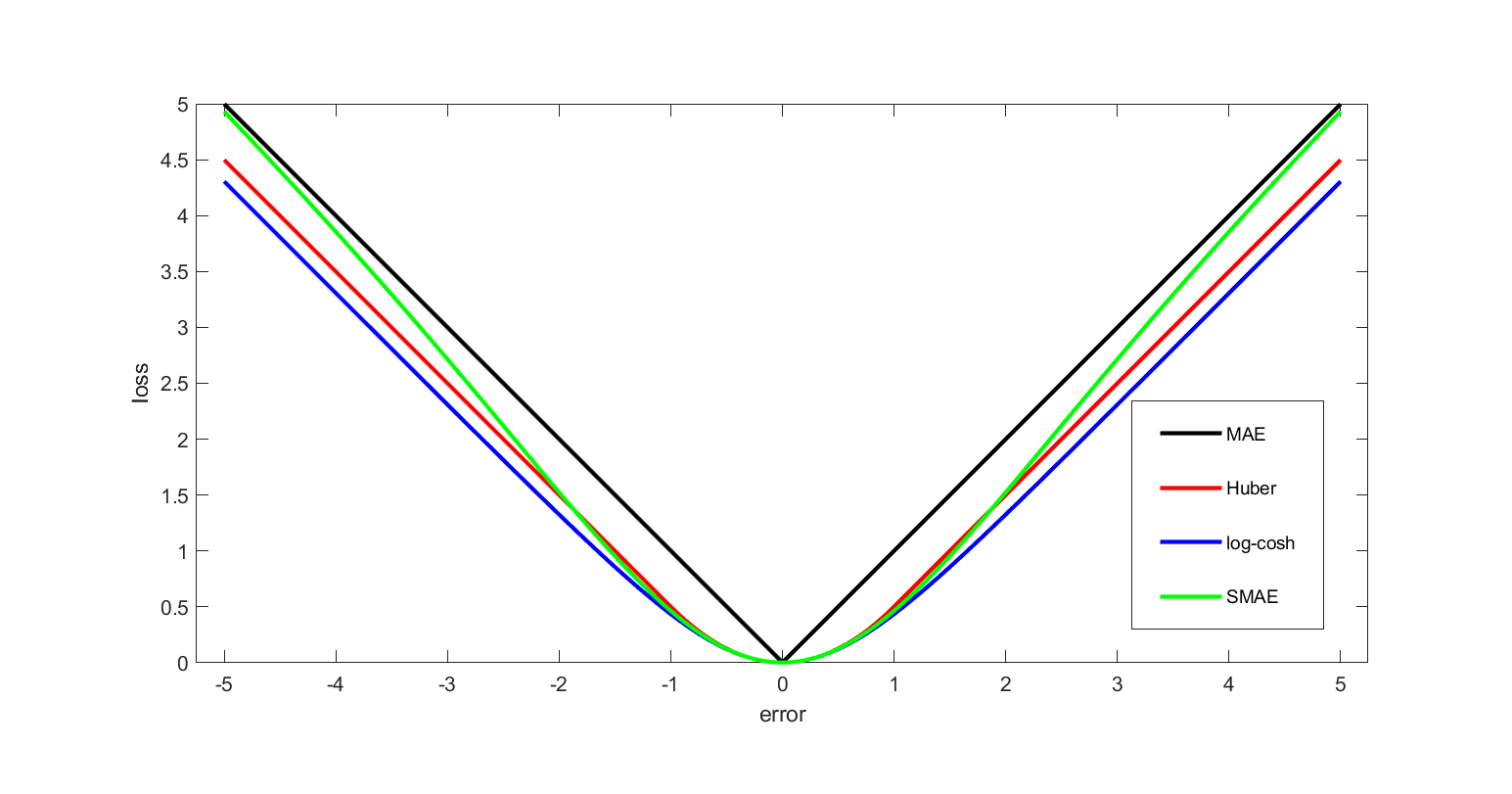

Loss Function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economi ...
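As a small illustration of a loss defined on the difference between estimated and true values, the sketch below shows two common choices. The function names `squared_error`, `absolute_error`, and `mean_loss` are hypothetical and chosen only for this example.

```python
def squared_error(estimate, truth):
    """Penalizes large differences more heavily than small ones."""
    return (estimate - truth) ** 2

def absolute_error(estimate, truth):
    """Penalizes differences in proportion to their size."""
    return abs(estimate - truth)

def mean_loss(loss, estimates, truths):
    """Average a per-example loss over a data set."""
    return sum(loss(e, t) for e, t in zip(estimates, truths)) / len(truths)

mse = mean_loss(squared_error, [1.0, 2.0], [1.0, 4.0])
mae = mean_loss(absolute_error, [1.0, 2.0], [1.0, 4.0])
```

Both functions map a pair of values to a single real number, so either can be passed to an optimizer as the quantity to minimize.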

Feedforward Neural Network

Feedforward refers to the recognition-inference architecture of neural networks. Artificial neural network architectures are based on inputs multiplied by weights to obtain outputs (inputs-to-output): feedforward. Recurrent neural networks, or neural networks with loops, allow information from later processing stages to feed back to earlier stages for sequence processing. However, at every stage of inference a feedforward multiplication remains the core, essential for backpropagation (Rumelhart, David E., Geoffrey E. Hinton, and R. J. Williams, "Learning Internal Representations by Error Propagation", in David E. Rumelhart, James L. McClelland, and the PDP Research Group (editors), Parallel Distributed Processing: Explorations in the Microstructure of Cognition, Volume 1: Foundations, MIT Press, 1986) or backpropagation through time. Thus neural networks cannot contain feedback like negative feedback or positive feedback where the outputs feed back to the *very same* inputs and modify them, ...

Evolutionary Programming

Evolutionary programming is an evolutionary algorithm in which a share of the new population is created by mutating the previous population, without crossover. Evolutionary programming differs from the evolution strategy $ES(\mu+\lambda)$ in one detail: all individuals are selected for the new population, while in $ES(\mu+\lambda)$ every individual has the same probability of being selected. It is one of the four major evolutionary algorithm paradigms.

History: It was first used by Lawrence J. Fogel in the US in 1960 in order to use simulated evolution as a learning process aiming to generate artificial intelligence. It was used to evolve finite-state machines as predictors.

See also:
* Artificial intelligence
* Genetic algorithm
* Genetic opera ...
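A mutation-only search loop of this general kind can be sketched as follows. This is an illustrative toy and not a faithful reproduction of any published evolutionary programming variant: the Gaussian mutation, the rule of keeping the best individuals from parents plus offspring, the real-valued individuals, and the name `evolve` are all assumptions.

```python
import random

def evolve(fitness, population, generations=50, sigma=0.3, seed=0):
    """Minimize `fitness` using mutation only (no crossover between individuals)."""
    rng = random.Random(seed)
    pop = list(population)
    for _ in range(generations):
        # Each parent produces one offspring by adding Gaussian noise to itself.
        offspring = [x + rng.gauss(0.0, sigma) for x in pop]
        # Assumed survivor rule: keep the best individuals from parents and offspring.
        pop = sorted(pop + offspring, key=fitness)[:len(population)]
    return pop

def sphere(x):
    """Toy objective with its minimum at x = 0."""
    return x * x

initial = [5.0, -4.0, 3.0, 2.5]
final = evolve(sphere, initial)
```

No step combines two individuals, so all variation comes from mutation. Because parents compete with their offspring under this survivor rule, the best fitness in the population can never get worse from one generation to the next.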

Backpropagation Through Structure

Backpropagation through structure (BPTS) is a gradient-based technique for training recursive neural networks, proposed in a 1996 paper written by Christoph Goller and Andreas Küchler.