
BLOOM (language model)

BigScience Large Open-science Open-access Multilingual Language Model (BLOOM) is a transformer-based large language model (LLM). It is a free LLM available to the public. It was trained on approximately 366 billion tokens from March to July 2022. Initiated by a co-founder of HuggingFace, the BLOOM project involved six main groups: HuggingFace's BigScience team, the Microsoft DeepSpeed team, the NVIDIA Megatron-LM team, the IDRIS/GENCI team, the PyTorch team, and volunteers in the BigScience Engineering workgroup. The training data encompasses 46 natural languages and 13 programming languages, amounting to 1.6 terabytes of pre-processed text that was converted into 350 billion unique tokens for BLOOM's training datasets.

Transformer (machine learning model)

A transformer is a deep learning model that adopts the mechanism of self-attention, differentially weighting the significance of each part of the input data. It is used primarily in the fields of natural language processing (NLP) and computer vision (CV). Like recurrent neural networks (RNNs), transformers are designed to process sequential input data, such as natural language, with applications towards tasks such as translation and text summarization. However, unlike RNNs, transformers process the entire input all at once. The attention mechanism provides context for any position in the input sequence. For example, if the input data is a natural language sentence, the transformer does not have to process one word at a time. This allows for more parallelization than RNNs and therefore reduces training times. Transformers were introduced in 2017 by a team at Google Brain and are increasingly the model of choice for NLP problems, replacing RNN models such as long short-term memor ...
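The self-attention idea described above can be illustrated with a minimal sketch. It assumes the scaled dot-product form of attention and, as a simplification, uses the input vectors directly as queries, keys, and values (a real transformer applies learned projections first). The function names and the toy vectors are hypothetical, chosen only for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention over a list of equal-length vectors.

    Assumption: queries, keys, and values are the inputs themselves
    (no learned projection matrices), which keeps the sketch short.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this position against every position in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # The output is the weighted average of all value vectors.
        output = [
            sum(w * value[j] for w, value in zip(weights, vectors))
            for j in range(dim)
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy "token" vectors (hypothetical data).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
```

Each position is scored against every other position independently of the rest, so nothing forces the loop to run in order. That independence is what makes the computation parallelizable, in contrast to the step-by-step processing of an RNN.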

Large Language Model

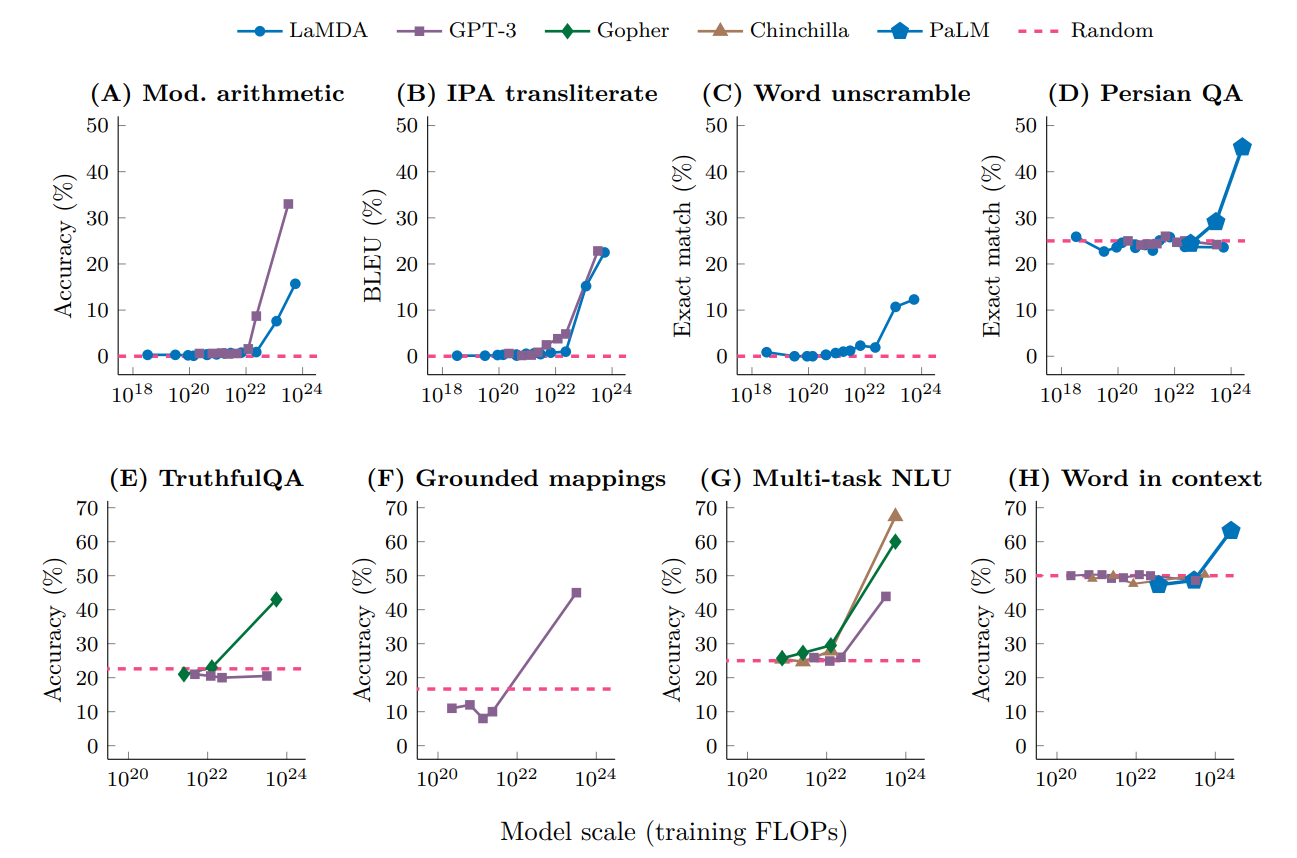

A large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of natural language processing research away from the previous paradigm of training specialized supervised models for specific tasks. Though the term "large language model" has no formal definition, it often refers to deep learning models having a parameter count on the order of billions or more. LLMs are general-purpose models that excel at a wide range of tasks, as opposed to being trained for one specific task (such as sentiment analysis, named entity recognition, or mathematical reasoning). The skill with which they accomplish tasks, and the range of tasks at which they are capable, seems to be a function of the amount of resources (data, parameter-siz ...
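To make "self-supervised learning on unlabelled text" concrete, the sketch below shows one common form of it, next-token prediction, in which the training targets come from the text itself rather than from human labels. Whitespace splitting stands in for a real tokenizer, and the function name and `context_size` parameter are hypothetical; this is an illustration, not a description of how any particular LLM builds its training data.

```python
def next_token_pairs(text, context_size=3):
    """Build (context, target) training pairs from raw text.

    Assumption: tokens are whitespace-separated words. Real LLMs
    typically use subword tokenizers instead.
    """
    tokens = text.split()
    pairs = []
    for i in range(1, len(tokens)):
        # The context is up to `context_size` tokens before position i.
        context = tokens[max(0, i - context_size):i]
        # The "label" is simply the next token in the text.
        pairs.append((context, tokens[i]))
    return pairs


pairs = next_token_pairs("the cat sat on the mat")
for context, target in pairs:
    print(context, "->", target)
```

No annotation step is needed: every position in the text yields a training example, which is why very large unlabelled corpora can be used directly.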

MIT Technology Review

"MIT Technology Review" is a bimonthly magazine wholly owned by the Massachusetts Institute of Technology, and editorially independent of the university. It was founded in 1899 as "The Technology Review", and was re-launched without "The" in its name on April 23, 1998 under then publisher R. Bruce Journey. In September 2005, it was changed, under its then editor-in-chief and publisher, Jason Pontin, to a form resembling the historical magazine. Before the 1998 re-launch, the editor stated that "nothing will be left of the old magazine except the name." It was therefore necessary to distinguish between the modern and the historical "Technology Review". The historical magazine had been published by the MIT Alumni Association, was more closely aligned with the interests of MIT alumni, and had a more intellectual tone and much smaller public circulation. The magazine, billed from 1998 to 2005 as "MIT's Magazine of Innovation," and from 2005 onwards as simply "published by MIT", ...

French National Centre for Scientific Research

The French National Centre for Scientific Research (French: Centre national de la recherche scientifique, CNRS) is the French state research organisation and is the largest fundamental science agency in Europe. In 2016, it employed 31,637 staff, including 11,137 tenured researchers, 13,415 engineers and technical staff, and 7,085 contractual workers. It is headquartered in Paris and has administrative offices in Brussels, Beijing, Tokyo, Singapore, Washington, D.C., Bonn, Moscow, Tunis, Johannesburg, Santiago de Chile, Israel, and New Delhi. From 2009 to 2016, the CNRS was ranked No. 1 worldwide by the SCImago Institutions Rankings (SIR), an international ranking of research-focused institutions, including universities, national research centers, and companies such as Facebook or Google. The CNRS ranked No. 2 between 2017 and 2021, then No. 3 in 2022 in the same SIR, after the Chinese Academy of Sciences and before universities such as Harvard University, MIT, or Stanford ...

HuggingFace

Hugging Face, Inc. is an American company that develops tools for building applications using machine learning. It is most notable for its Transformers library built for natural language processing applications and its platform that allows users to share machine learning models and datasets. The company was founded in 2016 by Clément Delangue, Julien Chaumond, and Thomas Wolf, originally as a company that developed a chatbot app targeted at teenagers. After open-sourcing the model behind the chatbot, the company pivoted to focus on being a platform for democratizing machine learning. In March 2021, Hugging Face raised $40 million in a Series B funding round. On April 28, 2021, the company launched the BigScience Research Workshop in collaboration with several other research groups to release an open large language model. In 2022, the workshop concluded with the announcement of BLOOM, a multilingual large language model with 176 billion parameters. On December 21, 202 ...

