Average Variance Extracted

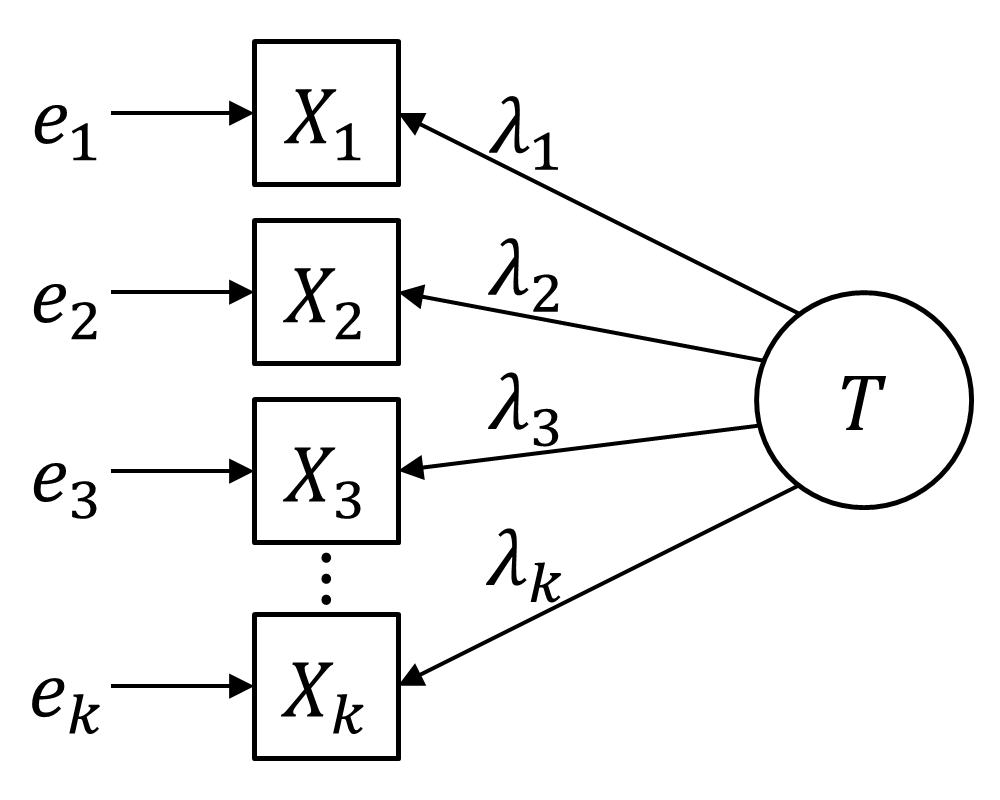

In statistics (classical test theory), average variance extracted (AVE) is a measure of the amount of variance that is captured by a construct in relation to the amount of variance due to measurement error (Fornell & Larcker, 1981, https://www.jstor.org/stable/3151312).

History: The average variance extracted was first proposed by Fornell & Larcker (1981).

Calculation: The average variance extracted can be calculated as follows:

: \text{AVE} = \frac{\sum_{i=1}^{k} \lambda_i^2}{\sum_{i=1}^{k} \lambda_i^2 + \sum_{i=1}^{k} \operatorname{Var}(e_i)}

Here, k is the number of items, \lambda_i the factor loading of item i and \operatorname{Var}(e_i) the variance of the error of item i.

Role for assessing discriminant validity: The average variance extracted has often been used to assess discriminant validity based on the following "rule of thumb": the positive square root of the AVE for each of the latent variables should be higher than the highest correlation with any other latent variable. If that is the case, discriminant validity is established at the construct level. This rule ...
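The calculation and the rule of thumb above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names, the construct names "A" and "B", and all loadings and correlations are hypothetical, the error variances assume standardized items (so each equals 1 minus the squared loading), and comparing against the absolute value of a correlation is an assumption made here.

```python
from math import sqrt


def average_variance_extracted(loadings, error_variances):
    """AVE = sum(lambda_i^2) / (sum(lambda_i^2) + sum(Var(e_i)))."""
    if len(loadings) != len(error_variances):
        raise ValueError("need one error variance per loading")
    captured = sum(l * l for l in loadings)
    error = sum(error_variances)
    return captured / (captured + error)


def passes_rule_of_thumb(ave_by_construct, correlations):
    """Check that sqrt(AVE) of every construct exceeds its highest
    correlation (absolute value, an assumption) with any other construct.

    `correlations` maps a pair of construct names to their correlation.
    """
    for name, ave in ave_by_construct.items():
        highest = max(
            (abs(r) for pair, r in correlations.items() if name in pair),
            default=0.0,
        )
        if sqrt(ave) <= highest:
            return False
    return True


# Hypothetical standardized loadings for two constructs.
loadings_a = [0.8, 0.7, 0.9]
loadings_b = [0.6, 0.7, 0.65]
ave_a = average_variance_extracted(loadings_a, [1 - l * l for l in loadings_a])
ave_b = average_variance_extracted(loadings_b, [1 - l * l for l in loadings_b])

aves = {"A": ave_a, "B": ave_b}
weakly_related = passes_rule_of_thumb(aves, {("A", "B"): 0.5})
strongly_related = passes_rule_of_thumb(aves, {("A", "B"): 0.7})
```

With these made-up numbers the square root of the AVE of construct B is about 0.65, so the check holds for a correlation of 0.5 and fails for a correlation of 0.7.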

Congeneric Measurement Model

Congener may refer to:
* A thing or person of the same kind as another, or of the same group.
* Congener (biology), organisms within the same genus.
* Congener (chemistry), related chemicals, e.g., elements in the same group of the periodic table.
* Congener (beverages), a substance other than ethanol produced during the fermentation of alcoholic beverages.

Species
* Agabus congener, a beetle in the family Dytiscidae.
* Amata congener, a moth in the family Erebidae.
* Amyema congener, a flowering plant in the family Loranthaceae.
* Arthroplea congener, a mayfly in the family Arthropleidae.
* Elaphropus congener, a ground beetle in the family Carabidae.
* Gemmula congener, a sea snail in the family Turridae.
* Heterachthes congener, a beetle in the family Cerambycidae.
* Lestes congener, a damselfly in the family Lestidae.
* Megacyllene congener, a beetle in the family Cerambycidae.
* Potamarcha congener, a dragonfly in the family Libellulid ...

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006). The Oxford Dictionary of Statistical Terms. Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An ...

Classical Test Theory

Classical test theory (CTT) is a body of related psychometric theory that predicts outcomes of psychological testing such as the difficulty of items or the ability of test-takers. It is a theory of testing based on the idea that a person's observed or obtained score on a test is the sum of a true score (error-free score) and an error score. Generally speaking, the aim of classical test theory is to understand and improve the reliability of psychological tests. Classical test theory may be regarded as roughly synonymous with true score theory. The term "classical" refers not only to the chronology of these models but also contrasts with the more recent psychometric theories, generally referred to collectively as item response theory, which sometimes bear the appellation "modern" as in "modern latent trait theory". Classical test theory as we know it today was codified by Novick (1966) and described in classic texts such as Lord & Novick (1968) and Allen & Yen (1979/2002). ...
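The decomposition of an observed score into a true score plus an error score can be illustrated with a small simulation. This is only a sketch under stated assumptions: the normal distributions, their means and standard deviations, the sample size, and the seed are all made up for illustration, and reliability is taken in its usual classical sense as the ratio of true-score variance to observed-score variance.

```python
import random
from statistics import pvariance

random.seed(0)  # fixed seed so the run is repeatable
n = 20000       # hypothetical number of test-takers

# Assumed distributions: true scores ~ N(50, 10^2), errors ~ N(0, 5^2).
true_scores = [random.gauss(50, 10) for _ in range(n)]
errors = [random.gauss(0, 5) for _ in range(n)]

# Observed score = true score + error score.
observed = [t + e for t, e in zip(true_scores, errors)]

# Reliability as the share of observed variance due to true scores.
# With the variances assumed above, the theoretical value is 100 / 125 = 0.8.
reliability = pvariance(true_scores) / pvariance(observed)
```

Increasing the error standard deviation in this sketch lowers the ratio, which is one way to see why reducing measurement error improves reliability.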

Factor Loading

Factor analysis is a statistical method used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. For example, it is possible that variations in six observed variables mainly reflect the variations in two unobserved (underlying) variables. Factor analysis searches for such joint variations in response to unobserved latent variables. The observed variables are modelled as linear combinations of the potential factors plus "error" terms, hence factor analysis can be thought of as a special case of errors-in-variables models. Simply put, the factor loading of a variable quantifies the extent to which the variable is related to a given factor. A common rationale behind factor analytic methods is that the information gained about the interdependencies between observed variables can be used later to reduce the set of variables in a dataset. Factor analysis is commonly used in psychometrics, persona ...

Variance

In probability theory and statistics, variance is the expectation of the squared deviation of a random variable from its population mean or sample mean. Variance is a measure of dispersion, meaning it is a measure of how far a set of numbers is spread out from their average value. Variance has a central role in statistics, where some ideas that use it include descriptive statistics, statistical inference, hypothesis testing, goodness of fit, and Monte Carlo sampling. Variance is an important tool in the sciences, where statistical analysis of data is common. The variance is the square of the standard deviation, the second central moment of a distribution, and the covariance of the random variable with itself, and it is often represented by \sigma^2, s^2, \operatorname{Var}(X), V(X), or \mathbb{V}(X). An advantage of variance as a measure of dispersion is that it is more amenable to algebraic manipulation than other measures of dispersion such as the expected absolute deviatio ...
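The equivalent characterizations of variance mentioned above can be checked numerically. The sketch below uses a small made-up data set and treats it as a whole population (so it divides by n, not n - 1); the variable names are illustrative only.

```python
from statistics import mean, pstdev, pvariance

# Hypothetical data, treated as a complete population.
data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
mu = mean(data)

# Definition: expectation of the squared deviation from the mean.
var_definition = mean([(x - mu) ** 2 for x in data])

# Square of the (population) standard deviation.
var_from_stdev = pstdev(data) ** 2

# Algebraic shortcut: E[X^2] - (E[X])^2.
var_shortcut = mean([x * x for x in data]) - mu ** 2

# Library routine for the population variance.
var_library = pvariance(data)
```

All four quantities agree for this data set, which illustrates why variance is convenient to manipulate algebraically.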

Discriminant Validity

In psychology, discriminant validity tests whether concepts or measurements that are not supposed to be related are actually unrelated. Campbell and Fiske (1959) introduced the concept of discriminant validity within their discussion on evaluating test validity. They stressed the importance of using both discriminant and convergent validation techniques when assessing new tests. A successful evaluation of discriminant validity shows that a test of a concept is not highly correlated with other tests designed to measure theoretically different concepts. In showing that two scales do not correlate, it is necessary to correct for attenuation in the correlation due to measurement error. It is possible to calculate the extent to which the two scales overlap by using the following formula where r_{xy} is correlation between x and y, r_{xx} is the reliability of x, and r_{yy} is the reliability of y:

: \cfrac{r_{xy}}{\sqrt{r_{xx} \cdot r_{yy}}}

Although there is no standard value for discriminant validity, a result less than 0.70 sugge ...
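The correction for attenuation described above divides the observed correlation by the square root of the product of the two reliabilities. A minimal Python sketch follows; the function name and the example values are hypothetical, and the input check is an added assumption rather than part of the formula.

```python
from math import sqrt


def disattenuated_correlation(r_xy, r_xx, r_yy):
    """Return r_xy / sqrt(r_xx * r_yy).

    r_xy: observed correlation between scales x and y
    r_xx: reliability of x
    r_yy: reliability of y
    """
    # Assumption: reliabilities lie in (0, 1]; zero would divide by zero.
    if not (0 < r_xx <= 1 and 0 < r_yy <= 1):
        raise ValueError("reliabilities must be in (0, 1]")
    return r_xy / sqrt(r_xx * r_yy)


# Hypothetical values: observed correlation 0.30, reliabilities 0.80 and 0.90.
overlap = disattenuated_correlation(0.30, 0.80, 0.90)
```

Because the reliabilities are at most 1, the corrected value is never smaller in magnitude than the observed correlation.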

Structural Equation Model

Structural equation modeling (SEM) is a label for a diverse set of methods used by scientists in both experimental and observational research across the sciences, business, and other fields. It is used most in the social and behavioral sciences. A definition of SEM is difficult without reference to highly technical language, but a good starting place is the name itself. SEM involves the construction of a model, to represent how various aspects of an observable or theoretical phenomenon are thought to be causally structurally related to one another. The structural aspect of the model implies theoretical associations between variables that represent the phenomenon under investigation. The postulated causal structuring is often depicted with arrows representing causal connections between variables (as in Figures 1 and 2) but these causal connections can be equivalently represented as equations. The causal structures imply that specific patterns of co ...

Tau-equivalent Reliability

Cronbach's alpha (Cronbach's \alpha), also known as tau-equivalent reliability (\rho_T) or coefficient alpha (coefficient \alpha), is a reliability coefficient that provides a method of measuring internal consistency of tests and measures. Numerous studies warn against using it unconditionally, and note that reliability coefficients based on structural equation modeling (SEM) are in many cases a suitable alternative.

References: Sijtsma, K. (2009). On the use, the misuse, and the very limited usefulness of Cronbach's alpha. Psychometrika, 74(1), 107–120. Green, S. B., & Yang, Y. (2009). Commentary on coefficient alpha: A cautionary tale. Psychometrika, 74(1), 121–135. Revelle, W., & Zinbarg, R. E. (2009). Coefficients alpha, beta, omega, and the glb: Comments on Sijtsma. Psychometrika, 74(1), 145–154. Cho, E., & Kim, S. (2015). Cronbach's coefficient alpha: Well known but poorly understood. Organizational Research Methods, 18(2), 207–230. Raykov, T., & Marcoulides, G. A. (2017). Thanks ...

Congeneric Reliability

In statistical models applied to psychometrics, congeneric reliability \rho_C ("rho C") is a single-administration test score reliability coefficient (i.e., the reliability of persons over items holding occasion fixed), commonly referred to as composite reliability, construct reliability, and coefficient omega (Cho, E. (2016). Making reliability reliable: A systematic approach to reliability coefficients. Organizational Research Methods, 19(4), 651–682. https://doi.org/10.1177/1094428116656239). \rho_C is a structural equation model (SEM)-based reliability coefficient and is obtained from a unidimensional model. \rho_C is the second most commonly used reliability factor after tau-equivalent reliability (\rho_T), and is often recommended as its alternative.

Formula and calculation

Systematic and conventional formula: Let X_i denote the observed score of item i and X (= X_1 + X_2 + \cdots + X_k) denote the sum of all items in a test consisting of k items. It is assumed that ea ...

Measurement Model

Measurement is the quantification of attributes of an object or event, which can be used to compare with other objects or events. In other words, measurement is a process of determining how large or small a physical quantity is as compared to a basic reference quantity of the same kind. The scope and application of measurement are dependent on the context and discipline. In natural sciences and engineering, measurements do not apply to nominal properties of objects or events, which is consistent with the guidelines of the International vocabulary of metrology published by the International Bureau of Weights and Measures. However, in other fields such as statistics as well as the social and behavioural sciences, measurements can have multiple levels, which would include nominal, ordinal, interval and ratio scales. Measurement is a cornerstone of trade, science, technology and quantitative research in many disciplines. Historically, many measurement systems existed f ...