|

Automatic Mutual Exclusion

Automatic mutual exclusion is a parallel computing programming paradigm in which threads are divided into atomic chunks, and the atomic execution of the chunks automatically parallelized using transactional memory. References See also * Bulk synchronous parallel The bulk synchronous parallel (BSP) abstract computer is a bridging model for designing parallel algorithms. It is similar to the parallel random access machine (PRAM) model, but unlike PRAM, BSP does not take communication and synchronization ... Parallel computing Programming paradigms {{compsci-stub ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Parallel Computing

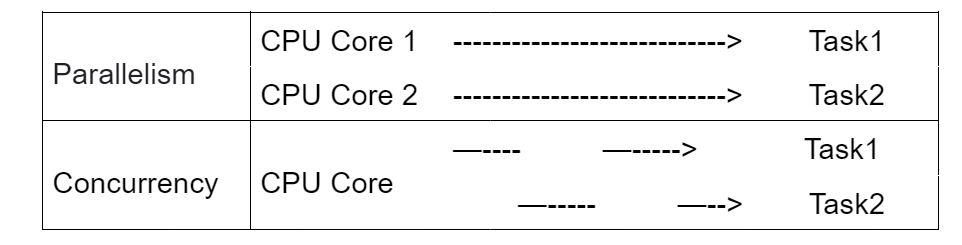

Parallel computing is a type of computing, computation in which many calculations or Process (computing), processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: Bit-level parallelism, bit-level, Instruction-level parallelism, instruction-level, Data parallelism, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Programming Paradigm

A programming paradigm is a relatively high-level way to conceptualize and structure the implementation of a computer program. A programming language can be classified as supporting one or more paradigms. Paradigms are separated along and described by different dimensions of programming. Some paradigms are about implications of the execution model, such as allowing Side effect (computer science), side effects, or whether the sequence of operations is defined by the execution model. Other paradigms are about the way code is organized, such as grouping into units that include both state and behavior. Yet others are about Syntax (programming languages), syntax and Formal grammar, grammar. Some common programming paradigms include (shown in hierarchical relationship): * imperative programming, Imperative code directly controls Control flow, execution flow and state change, explicit statements that change a program state ** procedural programming, procedural organized as function (c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Atomicity (programming)

Atomicity may refer to: Chemistry * Atomicity (chemistry), the total number of atoms present in 1 molecule of a substance * Valence (chemistry) In chemistry, the valence (US spelling) or valency (British spelling) of an atom is a measure of its combining capacity with other atoms when it forms chemical compounds or molecules. Valence is generally understood to be the number of chemica ..., sometimes referred to as atomicity Computing * Atomicity (database systems), a property of database transactions which are guaranteed to either completely occur, or have no effects * Atomicity (programming), an operation appears to occur at a single instant between its invocation and its response * Atomicity, a property of an S-expression, in a symbolic language like Lisp Mathematics * Atomicity, an element of orthogonality in a component-based system * Atomicity, in order theory; see Atom (order theory) See also * Atom (other) {{Disambiguation ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Transactional Memory

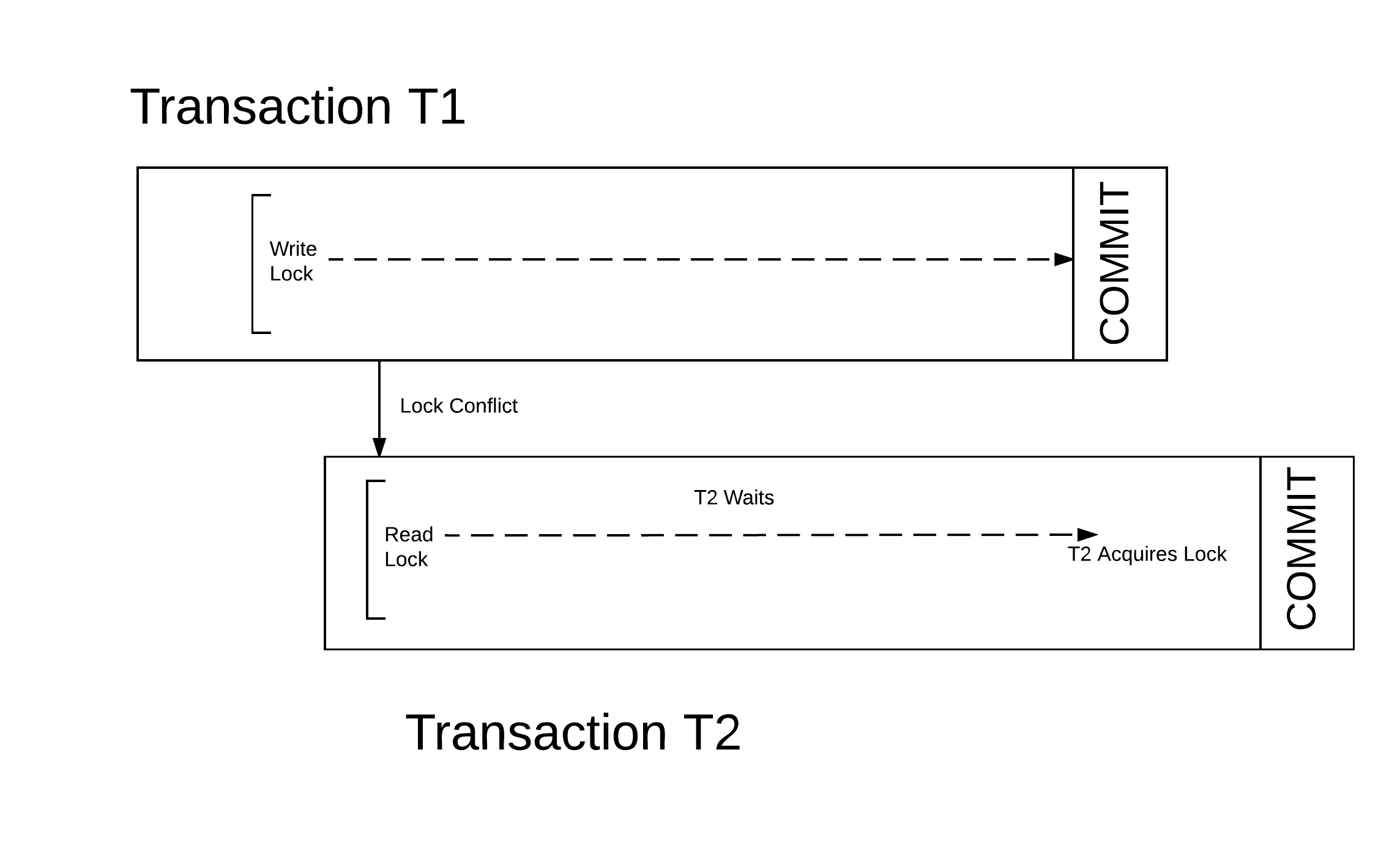

In computer science and computer engineering, engineering, transactional memory attempts to simplify concurrent programming by allowing a group of load and store instructions to execute in an linearizability, atomic way. It is a concurrency control mechanism analogous to database transactions for controlling access to Shared memory (interprocess communication), shared memory in concurrent computing. Transactional memory systems provide high-level abstraction as an alternative to low-level thread synchronization. This abstraction allows for coordination between concurrent reads and writes of shared data in parallel systems. Motivation In concurrent programming, synchronization is required when parallel threads attempt to access a shared resource. Low-level thread synchronization constructs such as locks are pessimistic and prohibit threads that are outside a critical section from running the code protected by the critical section. The process of applying and releasing locks often ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Bulk Synchronous Parallel

The bulk synchronous parallel (BSP) abstract computer is a bridging model for designing parallel algorithms. It is similar to the parallel random access machine (PRAM) model, but unlike PRAM, BSP does not take communication and synchronization for granted. In fact, quantifying the requisite synchronization and communication is an important part of analyzing a BSP algorithm. History The BSP model was developed by Leslie Valiant of Harvard University during the 1980s. The definitive article was published in 1990.Leslie G. Valiant, A bridging model for parallel computation, Communications of the ACM, Volume 33 Issue 8, Aug. 199/ref> Between 1990 and 1992, Leslie Valiant and Bill McColl of Oxford University worked on ideas for a distributed memory BSP programming model, in Princeton and at Harvard. Between 1992 and 1997, McColl led a large research team at Oxford that developed various BSP programming libraries, languages and tools, and also numerous massively parallel BSP algorit ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Parallel Computing

Parallel computing is a type of computing, computation in which many calculations or Process (computing), processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: Bit-level parallelism, bit-level, Instruction-level parallelism, instruction-level, Data parallelism, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |