|

AutoML

Automated machine learning (AutoML) is the process of automating the tasks of applying machine learning to real-world problems. It is the combination of automation and ML. AutoML potentially includes every stage from beginning with a raw dataset to building a machine learning model ready for deployment. AutoML was proposed as an artificial intelligence-based solution to the growing challenge of applying machine learning. The high degree of automation in AutoML aims to allow non-experts to make use of machine learning models and techniques without requiring them to become experts in machine learning. Automating the process of applying machine learning end-to-end additionally offers the advantages of producing simpler solutions, faster creation of those solutions, and models that often outperform hand-designed models. Common techniques used in AutoML include hyperparameter optimization, meta-learning and neural architecture search. Comparison to the standard approach In a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Neural Architecture Search

Neural architecture search (NAS) is a technique for automating the design of artificial neural networks (ANN), a widely used model in the field of machine learning. NAS has been used to design networks that are on par with or outperform hand-designed architectures. Methods for NAS can be categorized according to the search space, search strategy and performance estimation strategy used: * The ''search space'' defines the type(s) of ANN that can be designed and optimized. * The ''search strategy'' defines the approach used to explore the search space. * The ''performance estimation strategy'' evaluates the performance of a possible ANN from its design (without constructing and training it). NAS is closely related to hyperparameter optimization and meta-learning and is a subfield of automated machine learning (AutoML). Reinforcement learning Reinforcement learning (RL) can underpin a NAS search strategy. Barret Zoph and Quoc Viet Le applied NAS with RL targeting the CIFAR-10 datas ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Meta-learning (computer Science)

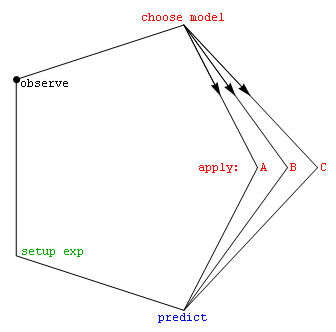

Meta-learning is a subfield of machine learning where automatic learning algorithms are applied to metadata about machine learning experiments. As of 2017, the term had not found a standard interpretation, however the main goal is to use such metadata to understand how automatic learning can become flexible in solving learning problems, hence to improve the performance of existing learning algorithms or to learn (induce) the learning algorithm itself, hence the alternative term learning to learn. Flexibility is important because each learning algorithm is based on a set of assumptions about the data, its inductive bias. This means that it will only learn well if the bias matches the learning problem. A learning algorithm may perform very well in one domain, but not on the next. This poses strong restrictions on the use of machine learning or data mining techniques, since the relationship between the learning problem (often some kind of database) and the effectiveness of differen ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Model Selection

Model selection is the task of selecting a model from among various candidates on the basis of performance criterion to choose the best one. In the context of machine learning and more generally statistical analysis, this may be the selection of a statistical model from a set of candidate models, given data. In the simplest cases, a pre-existing set of data is considered. However, the task can also involve the design of experiments such that the data collected is well-suited to the problem of model selection. Given candidate models of similar predictive or explanatory power, the simplest model is most likely to be the best choice (Occam's razor). state, "The majority of the problems in statistical inference can be considered to be problems related to statistical modeling". Relatedly, has said, "How hetranslation from subject-matter problem to statistical model is done is often the most critical part of an analysis". Model selection may also refer to the problem of selecting ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Hyperparameter Optimization

In machine learning, hyperparameter optimization or tuning is the problem of choosing a set of optimal hyperparameters for a learning algorithm. A hyperparameter is a parameter whose value is used to control the learning process, which must be configured before the process starts. Hyperparameter optimization determines the set of hyperparameters that yields an optimal model which minimizes a predefined loss function on a given data set. The objective function takes a set of hyperparameters and returns the associated loss. Cross-validation is often used to estimate this generalization performance, and therefore choose the set of values for hyperparameters that maximize it. Approaches Grid search The traditional method for hyperparameter optimization has been ''grid search'', or a ''parameter sweep'', which is simply an exhaustive searching through a manually specified subset of the hyperparameter space of a learning algorithm. A grid search algorithm must be guided by so ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Leakage (machine Learning)

In statistics and machine learning, leakage (also known as data leakage or target leakage) is the use of information in the model training process which would not be expected to be available at prediction time, causing the predictive scores (metrics) to overestimate the model's utility when run in a production environment. Leakage is often subtle and indirect, making it hard to detect and eliminate. Leakage can cause a statistician or modeler to select a suboptimal model, which could be outperformed by a leakage-free model. Leakage modes Leakage can occur in many steps in the machine learning process. The leakage causes can be sub-classified into two possible sources of leakage for a model: features and training examples. Feature leakage Feature or column-wise leakage is caused by the inclusion of columns which are one of the following: a duplicate label, a proxy for the label, or the label itself. These features, known as anachronisms, will not be available when the model is u ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Hyperparameter Optimization

In machine learning, hyperparameter optimization or tuning is the problem of choosing a set of optimal hyperparameters for a learning algorithm. A hyperparameter is a parameter whose value is used to control the learning process, which must be configured before the process starts. Hyperparameter optimization determines the set of hyperparameters that yields an optimal model which minimizes a predefined loss function on a given data set. The objective function takes a set of hyperparameters and returns the associated loss. Cross-validation is often used to estimate this generalization performance, and therefore choose the set of values for hyperparameters that maximize it. Approaches Grid search The traditional method for hyperparameter optimization has been ''grid search'', or a ''parameter sweep'', which is simply an exhaustive searching through a manually specified subset of the hyperparameter space of a learning algorithm. A grid search algorithm must be guided by so ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Feature Selection

In machine learning, feature selection is the process of selecting a subset of relevant Feature (machine learning), features (variables, predictors) for use in model construction. Feature selection techniques are used for several reasons: * simplification of models to make them easier to interpret, * shorter training times, * to avoid the curse of dimensionality, * improve the compatibility of the data with a certain learning model class, * to encode inherent Symmetric space, symmetries present in the input space. The central premise when using feature selection is that data sometimes contains features that are ''redundant'' or ''irrelevant'', and can thus be removed without incurring much loss of information. Redundancy and irrelevance are two distinct notions, since one relevant feature may be redundant in the presence of another relevant feature with which it is strongly correlated. Feature extraction creates new features from functions of the original features, whereas feat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Feature Extraction

Feature may refer to: Computing * Feature recognition, could be a hole, pocket, or notch * Feature (computer vision), could be an edge, corner or blob * Feature (machine learning), in statistics: individual measurable properties of the phenomena being observed * Software feature, a distinguishing characteristic of a software program Science and analysis * Feature data, in geographic information systems, comprise information about an entity with a geographic location * Features, in audio signal processing, an aim to capture specific aspects of audio signals in a numeric way * Feature (archaeology), any dug, built, or dumped evidence of human activity Media * Feature film, a film with a running time long enough to be considered the principal or sole film to fill a program ** Feature length, the standardized length of such films * Feature story, a piece of non-fiction writing about news * Radio documentary (feature), a radio program devoted to covering a particular topic in so ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Transfer Learning

Transfer learning (TL) is a technique in machine learning (ML) in which knowledge learned from a task is re-used in order to boost performance on a related task. For example, for image classification, knowledge gained while learning to recognize cars could be applied when trying to recognize trucks. This topic is related to the psychological literature on transfer of learning, although practical ties between the two fields are limited. Reusing/transferring information from previously learned tasks to new tasks has the potential to significantly improve learning efficiency. Since transfer learning makes use of training with multiple objective functions it is related to cost-sensitive machine learning and multi-objective optimization. History In 1976, Bozinovski and Fulgosi published a paper addressing transfer learning in neural network training. The paper gives a mathematical and geometrical model of the topic. In 1981, a report considered the application of transfer learni ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Automation

Automation describes a wide range of technologies that reduce human intervention in processes, mainly by predetermining decision criteria, subprocess relationships, and related actions, as well as embodying those predeterminations in machines. Automation has been achieved by various means including Mechanical system, mechanical, hydraulic, pneumatic, electrical, electronic devices, and computers, usually in combination. Complicated systems, such as modern Factory, factories, airplanes, and ships typically use combinations of all of these techniques. The benefit of automation includes labor savings, reducing waste, savings in electricity costs, savings in material costs, and improvements to quality, accuracy, and precision. Automation includes the use of various equipment and control systems such as machinery, processes in factories, boilers, and heat-treating ovens, switching on telephone networks, steering, Stabilizer (ship), stabilization of ships, aircraft and other applic ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |