|

Advanced Synchronization Facility

Advanced Synchronization Facility (ASF) is a proposed extension to the x86-64 instruction set architecture that adds hardware transactional memory support. It was introduced by AMD; the latest specification was dated March 2009. , it was still in the proposal stage. No released microprocessors implement the extension. Features ASF provides the capability to start, end and abort transactional execution and to mark CPU cache lines for protected memory access in transactional code regions. It contains four new instructions—SPECULATE, COMMIT, ABORT and RELEASE—and turns the otherwise invalid LOCK-prefixed MOVx, PREFETCH and PREFETCHW instructions into valid ones inside transactional code regions. Up to 256 levels of nested transactional code regions is supported. The SPECULATE and COMMIT instructions mark the start and end of a transactional code region. Inside transactional code regions, the LOCK-prefixed MOVx reg/xmm, mem, PREFETCH and PREFETCHW instructions can mark up to fo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

X86-64

x86-64 (also known as x64, x86_64, AMD64, and Intel 64) is a 64-bit extension of the x86 instruction set architecture, instruction set. It was announced in 1999 and first available in the AMD Opteron family in 2003. It introduces two new operating modes: 64-bit mode and compatibility mode, along with a new four-level paging mechanism. In 64-bit mode, x86-64 supports significantly larger amounts of virtual memory and physical memory compared to its 32-bit computing, 32-bit predecessors, allowing programs to utilize more memory for data storage. The architecture expands the number of general-purpose registers from 8 to 16, all fully general-purpose, and extends their width to 64 bits. Floating-point arithmetic is supported through mandatory SSE2 instructions in 64-bit mode. While the older x87 FPU and MMX registers are still available, they are generally superseded by a set of sixteen 128-bit Processor register, vector registers (XMM registers). Each of these vector registers ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Instruction Set Architecture

In computer science, an instruction set architecture (ISA) is an abstract model that generally defines how software controls the CPU in a computer or a family of computers. A device or program that executes instructions described by that ISA, such as a central processing unit (CPU), is called an ''implementation'' of that ISA. In general, an ISA defines the supported instructions, data types, registers, the hardware support for managing main memory, fundamental features (such as the memory consistency, addressing modes, virtual memory), and the input/output model of implementations of the ISA. An ISA specifies the behavior of machine code running on implementations of that ISA in a fashion that does not depend on the characteristics of that implementation, providing binary compatibility between implementations. This enables multiple implementations of an ISA that differ in characteristics such as performance, physical size, and monetary cost (among other things), but t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Transactional Memory

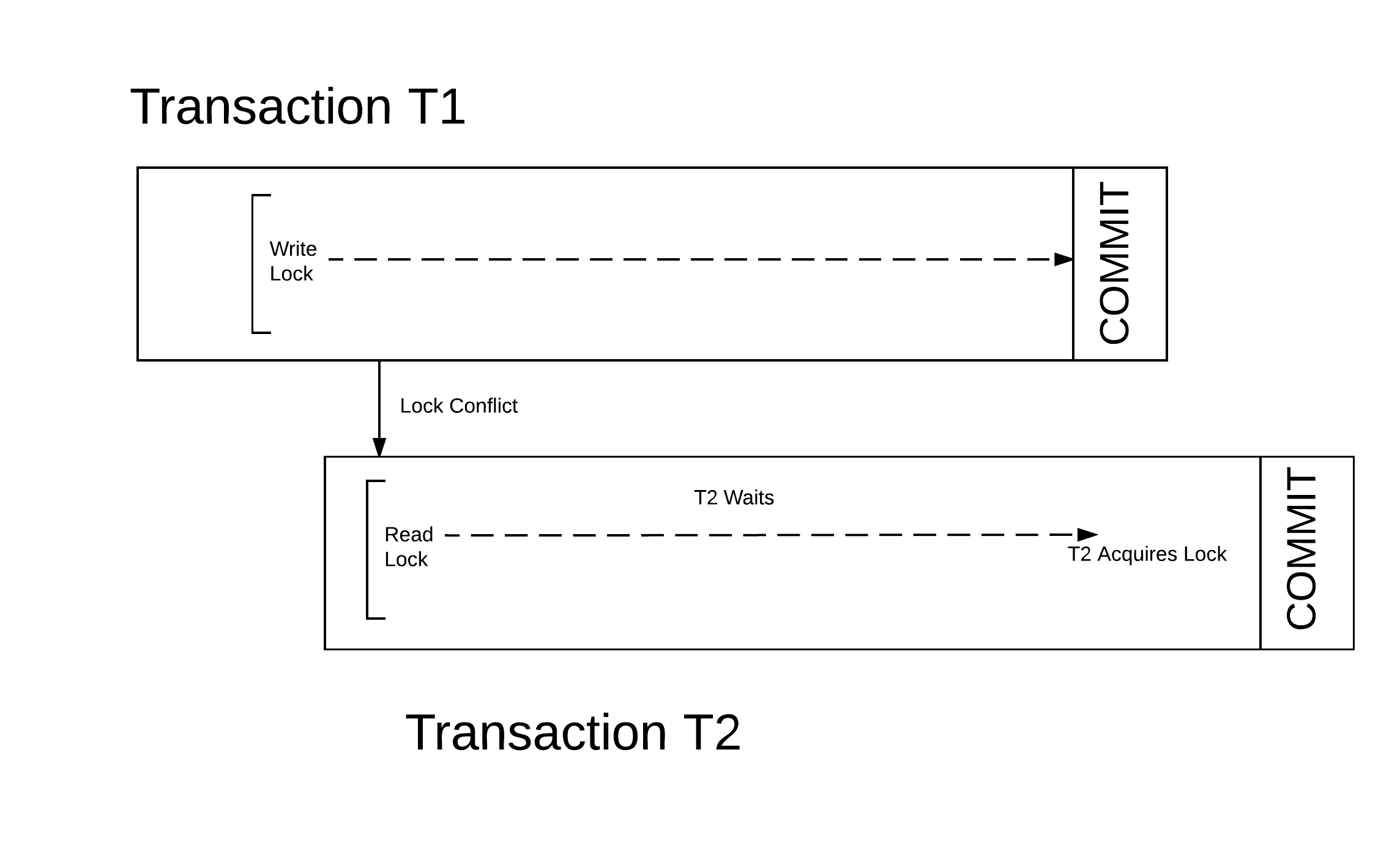

In computer science and computer engineering, engineering, transactional memory attempts to simplify concurrent programming by allowing a group of load and store instructions to execute in an linearizability, atomic way. It is a concurrency control mechanism analogous to database transactions for controlling access to Shared memory (interprocess communication), shared memory in concurrent computing. Transactional memory systems provide high-level abstraction as an alternative to low-level thread synchronization. This abstraction allows for coordination between concurrent reads and writes of shared data in parallel systems. Motivation In concurrent programming, synchronization is required when parallel threads attempt to access a shared resource. Low-level thread synchronization constructs such as locks are pessimistic and prohibit threads that are outside a critical section from running the code protected by the critical section. The process of applying and releasing locks often ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Microprocessor

A microprocessor is a computer processor (computing), processor for which the data processing logic and control is included on a single integrated circuit (IC), or a small number of ICs. The microprocessor contains the arithmetic, logic, and control circuitry required to perform the functions of a computer's central processing unit (CPU). The IC is capable of interpreting and executing program instructions and performing arithmetic operations. The microprocessor is a multipurpose, Clock signal, clock-driven, Processor register, register-based, digital integrated circuit that accepts binary code, binary data as input, processes it according to instruction (computing), instructions stored in its computer memory, memory, and provides results (also in binary form) as output. Microprocessors contain both combinational logic and sequential logic, sequential digital logic, and operate on numbers and symbols represented in the binary number system. The integration of a whole CPU on ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cache Lines

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels (L1, L2, often L3, and rarely even L4), with different instruction-specific and data-specific caches at level 1. The cache memory is typically implemented with static random-access memory (SRAM), in modern CPUs by far the largest part of them by chip area, but SRAM is not always used for all levels (of I- or D-cache), or even any level, sometimes some latter or all levels are implemented with eDRAM. Other types of caches exist (that are not counted towards the "cache size" of the most important caches mentioned above), such as the translation lookaside buffer (TLB) which is part of the memory management unit (MMU) whic ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Transactional Synchronization Extensions

Transactional Synchronization Extensions (TSX), also called Transactional Synchronization Extensions New Instructions (TSX-NI), is an extension to the x86 instruction set architecture (ISA) that adds hardware transactional memory support, speeding up execution of multi-threaded software through lock elision. According to different benchmarks, TSX/TSX-NI can provide around 40% faster applications execution in specific workloads, and 4–5 times more database transactions per second (TPS). TSX/TSX-NI was documented by Intel in February 2012, and debuted in June 2013 on selected Intel microprocessors based on the Haswell microarchitecture. Haswell processors below 45xx as well as R-series and K-series (with unlocked multiplier) SKUs do not support TSX/TSX-NI. In August 2014, Intel announced a bug in the TSX/TSX-NI implementation on current steppings of Haswell, Haswell-E, Haswell-EP and early Broadwell CPUs, which resulted in disabling the TSX/TSX-NI feature on affected CPUs ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

X86 Instructions

The x86 instruction set refers to the set of instructions that x86-compatible microprocessors support. The instructions are usually part of an executable program, often stored as a computer file and executed on the processor. The x86 instruction set has been extended several times, introducing wider registers and datatypes as well as new functionality. x86 integer instructions Below is the full 8086/ 8088 instruction set of Intel (81 instructions total). These instructions are also available in 32-bit mode, in which they operate on 32-bit registers (eax, ebx, etc.) and values instead of their 16-bit (ax, bx, etc.) counterparts. The updated instruction set is grouped according to architecture ( i186, i286, i386, i486, i586/ i686) and is referred to as (32-bit) x86 and (64-bit) x86-64 (also known as AMD64). Original 8086/8088 instructions This is the original instruction set. In the 'Notes' column, ''r'' means ''register'', ''m'' means ''memory address'' and ''imm'' mean ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Parallel Computing

Parallel computing is a type of computing, computation in which many calculations or Process (computing), processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: Bit-level parallelism, bit-level, Instruction-level parallelism, instruction-level, Data parallelism, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Transactional Memory

In computer science and computer engineering, engineering, transactional memory attempts to simplify concurrent programming by allowing a group of load and store instructions to execute in an linearizability, atomic way. It is a concurrency control mechanism analogous to database transactions for controlling access to Shared memory (interprocess communication), shared memory in concurrent computing. Transactional memory systems provide high-level abstraction as an alternative to low-level thread synchronization. This abstraction allows for coordination between concurrent reads and writes of shared data in parallel systems. Motivation In concurrent programming, synchronization is required when parallel threads attempt to access a shared resource. Low-level thread synchronization constructs such as locks are pessimistic and prohibit threads that are outside a critical section from running the code protected by the critical section. The process of applying and releasing locks often ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Transaction Processing

In computer science, transaction processing is information processing that is divided into individual, indivisible operations called ''transactions''. Each transaction must succeed or fail as a complete unit; it can never be only partially complete. For example, when you purchase a book from an online bookstore, you exchange money (in the form of credit) for a book. If your credit is good, a series of related operations ensures that you get the book and the bookstore gets your money. However, if a single operation in the series fails during the exchange, the entire exchange fails. You do not get the book and the bookstore does not get your money. The technology responsible for making the exchange balanced and predictable is called ''transaction processing''. Transactions ensure that data-oriented resources are not permanently updated unless all operations within the transactional unit complete successfully. By combining a set of related operations into a unit that either com ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Concurrency Control

In information technology and computer science, especially in the fields of computer programming, operating systems, multiprocessors, and databases, concurrency control ensures that correct results for concurrent operations are generated, while getting those results as quickly as possible. Computer systems, both software and hardware, consist of modules, or components. Each component is designed to operate correctly, i.e., to obey or to meet certain consistency rules. When components that operate concurrently interact by messaging or by sharing accessed data (in memory or storage), a certain component's consistency may be violated by another component. The general area of concurrency control provides rules, methods, design methodologies, and theories to maintain the consistency of components operating concurrently while interacting, and thus the consistency and correctness of the whole system. Introducing concurrency control into a system means applying operation constraints ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |