
Arellano–Bond Estimator

In econometrics, the Arellano–Bond estimator is a generalized method of moments estimator used to estimate dynamic models of panel data. It was proposed in 1991 by Manuel Arellano and Stephen Bond, based on the earlier work by Alok Bhargava and John Denis Sargan in 1983, for addressing certain endogeneity problems. The GMM-SYS estimator is a system that contains both the levels and the first difference equations. It provides an alternative to the standard first difference GMM estimator.

Qualitative description

Unlike static panel data models, dynamic panel data models include lagged levels of the dependent variable as regressors. Including a lagged dependent variable as a regressor violates strict exogeneity, because the lagged dependent variable is likely to be correlated with the random effects and/or the general errors. The Bhargava–Sargan article developed optimal linear combinations of predetermined variables from different time periods, provided sufficient conditions ...
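The endogeneity problem described above can be illustrated with a small simulation. The sketch below is not the Arellano–Bond GMM estimator itself; it assumes a simple AR(1) panel with unit effects and uses the simpler Anderson–Hsiao instrumental-variable idea (the level two periods back instruments the lagged difference), which the GMM approach generalizes. All function names, sample sizes, and parameter values are hypothetical choices made for illustration.

```python
import numpy as np

def simulate_dynamic_panel(n_units, n_periods, rho, seed=0):
    """Simulate y_it = rho * y_i,t-1 + alpha_i + e_it (illustrative assumption)."""
    rng = np.random.default_rng(seed)
    alpha = rng.normal(size=n_units)  # unit-specific effect
    y = np.empty((n_units, n_periods))
    # Start each unit from its stationary distribution.
    y[:, 0] = alpha / (1 - rho) + rng.normal(size=n_units) / np.sqrt(1 - rho**2)
    for t in range(1, n_periods):
        y[:, t] = rho * y[:, t - 1] + alpha + rng.normal(size=n_units)
    return y

def first_difference_estimates(y):
    """Return (OLS, IV) estimates of rho from the first-differenced equation."""
    dy = np.diff(y, axis=1)        # dy[:, k] = y[:, k+1] - y[:, k]
    dep = dy[:, 1:].ravel()        # differenced outcome at time t
    lag = dy[:, :-1].ravel()       # differenced outcome at time t-1 (endogenous)
    inst = y[:, :-2].ravel()       # level at time t-2 (instrument)
    ols = (lag @ dep) / (lag @ lag)    # biased: lag is correlated with the differenced error
    iv = (inst @ dep) / (inst @ lag)   # simple just-identified IV estimate
    return ols, iv

true_rho = 0.5
panel = simulate_dynamic_panel(n_units=10_000, n_periods=6, rho=true_rho)
ols_rho, iv_rho = first_difference_estimates(panel)
```

Under these assumptions, differencing removes the unit effect, but OLS on the differenced equation remains badly biased, while the instrumented estimate should land near the true coefficient.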

Econometrics

Econometrics is the application of statistical methods to economic data in order to give empirical content to economic relationships (M. Hashem Pesaran (1987), "Econometrics," ''The New Palgrave: A Dictionary of Economics'', v. 2, p. 8 [pp. 8–22]; reprinted in J. Eatwell ''et al.'', eds. (1990), ''Econometrics: The New Palgrave'', p. 1 [pp. 1–34]; 2008 revision in ''The New Palgrave Dictionary of Economics'' by J. Geweke, J. Horowitz, and H. P. Pesaran). More precisely, it is "the quantitative analysis of actual economic phenomena based on the concurrent development of theory and observation, related by appropriate methods of inference". An introductory economics textbook describes econometrics as allowing economists "to sift through mountains of data to extract simple relationships". Jan Tinbergen is one of the two founding fathers of econometrics. The other, Ragnar Frisch, also coined the term in the sense in which it is used toda ...

Journal of the American Statistical Association

The ''Journal of the American Statistical Association (JASA)'' is the primary journal published by the American Statistical Association, the main professional body for statisticians in the United States. It is published four times a year in March, June, September and December by Taylor & Francis, Ltd on behalf of the American Statistical Association. As a statistics journal it publishes articles primarily focused on the application of statistics, statistical theory and methods in economic, social, physical, engineering, and health sciences. The journal also includes reviews of academic books which are important to the advancement of the field. It had an impact factor of 2.063 in 2010, tenth highest in the "Statistics and Probability" category of ''Journal Citation Reports''. In a 2003 survey of statisticians, the ''Journal of the American Statistical Association'' was ranked first, among all journals, for "Applications of Statistics" and second (after ''Annals of Statistics'') f ...

Estimator

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule (the estimator), the quantity of interest (the estimand) and its result (the estimate) are distinguished. For example, the sample mean is a commonly used estimator of the population mean. There are point and interval estimators. The point estimators yield single-valued results. This is in contrast to an interval estimator, where the result would be a range of plausible values. "Single value" does not necessarily mean "single number", but includes vector valued or function valued estimators. ''Estimation theory'' is concerned with the properties of estimators; that is, with defining properties that can be used to compare different estimators (different rules for creating estimates) for the same quantity, based on the same data. Such properties can be used to determine the best rules to use under given circumstances. However, in robust statistics, statistica ...
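The distinction between a point estimator and an interval estimator can be sketched in a few lines. The example below assumes the sample mean as the point estimator and a normal-approximation interval (critical value 1.96) as the interval estimator; the function name and the data are hypothetical.

```python
import math
import statistics

def sample_mean_estimate(data, z=1.96):
    """Return a point estimate of the mean and an approximate 95% interval.

    The interval uses a normal approximation, an assumption made for
    simplicity; small samples would usually call for a t critical value.
    """
    n = len(data)
    point = statistics.fmean(data)                       # point estimate
    std_error = statistics.stdev(data) / math.sqrt(n)    # estimated standard error
    return point, (point - z * std_error, point + z * std_error)

observations = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
point, interval = sample_mean_estimate(observations)
```

Here the function is the estimator (the rule), the unknown population mean is the estimand, and `point` and `interval` are the resulting estimates.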

Mixed Model

A mixed model, mixed-effects model or mixed error-component model is a statistical model containing both fixed effects and random effects. These models are useful in a wide variety of disciplines in the physical, biological and social sciences. They are particularly useful in settings where repeated measurements are made on the same statistical units (longitudinal study), or where measurements are made on clusters of related statistical units. Because of their advantage in dealing with missing values, mixed effects models are often preferred over more traditional approaches such as repeated measures analysis of variance. This page will discuss mainly linear mixed-effects models (LMEM) rather than generalized linear mixed models or nonlinear mixed-effects models.

History and current status

Ronald Fisher introduced random effects models to study the correlations of trait values between relatives. In the 1950s, Charles Roy Henderson provided best linear unbiased estimates of ...

Random Effects Model

In statistics, a random effects model, also called a variance components model, is a statistical model where the model parameters are random variables. It is a kind of hierarchical linear model, which assumes that the data being analysed are drawn from a hierarchy of different populations whose differences relate to that hierarchy. A random effects model is a special case of a mixed model. Contrast this to the biostatistics definitions, as biostatisticians use "fixed" and "random" effects to respectively refer to the population-average and subject-specific effects (and where the latter are generally assumed to be unknown, latent variables).

Qualitative description

Random effect models assist in controlling for unobserved heterogeneity when the heterogeneity is constant over time and not correlated with independent variables. This constant can be removed from longitudinal data through differencing, since taking a first difference will remove any time invariant components of the m ...
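The claim that first differencing removes time-invariant components can be checked numerically. The sketch below assumes a noise-free linear panel, y_it = a_i + beta * x_it, purely to make the cancellation exact; the variable names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_units, n_periods, beta = 4, 5, 2.0

unit_effect = rng.normal(size=(n_units, 1))   # constant over time within each unit
x = rng.normal(size=(n_units, n_periods))     # time-varying regressor
y = unit_effect + beta * x                    # noise-free outcome (assumption)

# First differences along the time axis: the unit effect cancels out.
dy = np.diff(y, axis=1)
dx = np.diff(x, axis=1)

# Regressing differenced y on differenced x recovers beta without knowing the unit effects.
beta_hat = (dx.ravel() @ dy.ravel()) / (dx.ravel() @ dx.ravel())
```

Because the unit effect appears identically in consecutive periods, it drops out of `dy`, which leaves only `beta * dx`.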

Stata

Stata (occasionally stylized as STATA) is a general-purpose statistical software package developed by StataCorp for data manipulation, visualization, statistics, and automated reporting. It is used by researchers in many fields, including biomedicine, epidemiology, sociology and science. Stata was initially developed by Computing Resource Center in California and the first version was released in 1985. In 1993, the company moved to College Station, TX and was renamed Stata Corporation, now known as StataCorp. A major release in 2003 included a new graphics system and dialog boxes for all commands. Since then, a new version has been released once every two years. The current version is Stata 17, released in April 2021.

Technical overview and terminology

User interface

From its creation, Stata has always employed an integrated command-line interface. Starting with version 8.0, Stata has included a graphical user interface based on the Qt framework which uses m ...

Journal of Statistical Software

The ''Journal of Statistical Software'' is a peer-reviewed open-access scientific journal that publishes papers related to statistical software. The ''Journal of Statistical Software'' was founded in 1996 by Jan de Leeuw of the Department of Statistics at the University of California, Los Angeles. Its current editors-in-chief are Achim Zeileis, Bettina Grün, Edzer Pebesma, and Torsten Hothorn. It is published by the Foundation for Open Access Statistics. The journal charges no author fees or subscription fees. The journal publishes peer-reviewed articles about statistical software, together with the source code. It also publishes reviews of statistical software and books (by invitation only). Articles are licensed under the Creative Commons Attribution License, while the source codes distributed with articles are licensed under the GNU General Public License. Articles are often about free statistical software and coverage includes packages for the R programming language. Abs ...

R (Programming Language)

R is a programming language for statistical computing and graphics supported by the R Core Team and the R Foundation for Statistical Computing. Created by statisticians Ross Ihaka and Robert Gentleman, R is used among data miners, bioinformaticians and statisticians for data analysis and developing statistical software. Users have created packages to augment the functions of the R language. According to user surveys and studies of scholarly literature databases, R is one of the most commonly used programming languages in data mining. R ranks 12th in the TIOBE index, a measure of programming language popularity, in which the language peaked in 8th place in August 2020. The official R software environment is an open-source free software environment within the GNU package, available under the GNU General Public License. It is written primarily in C, Fortran, and R itself (partially self-hosting). Precompiled executables are provided for various operating systems. R ...

Random Walk

In mathematics, a random walk is a random process that describes a path that consists of a succession of random steps on some mathematical space. An elementary example of a random walk is the random walk on the integer number line $\mathbb{Z}$ which starts at 0, and at each step moves +1 or −1 with equal probability. Other examples include the path traced by a molecule as it travels in a liquid or a gas (see Brownian motion), the search path of a foraging animal, the price of a fluctuating stock, and the financial status of a gambler. Random walks have applications to engineering and many scientific fields including ecology, psychology, computer science, physics, chemistry, biology, economics, and sociology. The term ''random walk'' was first introduced by Karl Pearson in 1905.

Lattice random walk

A popular random walk model is that of a random walk on a regular lattice, where at each step the location jumps to another site according to some probability distribution. In a ...
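The elementary walk on the integers described above can be simulated directly. This is a minimal sketch using NumPy's random generator; the function name, step count, and seed are hypothetical choices.

```python
import numpy as np

def simple_random_walk(n_steps, seed=None):
    """Simulate a walk on the integers that starts at 0 and moves +1 or -1
    with equal probability at each step."""
    rng = np.random.default_rng(seed)
    steps = rng.choice([-1, 1], size=n_steps)        # independent, equally likely steps
    return np.concatenate(([0], np.cumsum(steps)))   # positions, including the start

path = simple_random_walk(1000, seed=42)
```

The returned array holds the position after each step, so consecutive entries always differ by exactly one.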

Heteroskedasticity-consistent Standard Errors

The topic of heteroskedasticity-consistent (HC) standard errors arises in statistics and econometrics in the context of linear regression and time series analysis. These are also known as heteroskedasticity-robust standard errors (or simply robust standard errors), Eicker–Huber–White standard errors (also Huber–White standard errors or White standard errors), to recognize the contributions of Friedhelm Eicker, Peter J. Huber, and Halbert White. In regression and time-series modelling, basic forms of models make use of the assumption that the errors or disturbances $u_i$ have the same variance across all observation points. When this is not the case, the errors are said to be heteroskedastic, or to have heteroskedasticity, and this behaviour will be reflected in the residuals $\widehat{u}_i$ estimated from a fitted model. Heteroskedasticity-consistent standard errors are used to allow the fitting of a model that does contain heteroskedastic residuals. The first such appro ...
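As a rough illustration, the sketch below computes one common variant, the White (HC0) sandwich covariance, next to the classical OLS covariance. The snippet above is truncated before it names a specific estimator, so the choice of HC0 here is an assumption, as are the function name and the simulated data, in which the error spread grows with the regressor.

```python
import numpy as np

def ols_with_hc0(X, y):
    """Fit OLS and return coefficients, classical standard errors, and HC0 standard errors."""
    n, k = X.shape
    xtx_inv = np.linalg.inv(X.T @ X)
    beta = xtx_inv @ X.T @ y
    resid = y - X @ beta

    # Classical covariance: assumes one common error variance.
    sigma2 = resid @ resid / (n - k)
    cov_classical = sigma2 * xtx_inv

    # HC0 sandwich: each observation contributes its own squared residual.
    meat = X.T @ (X * resid[:, None] ** 2)
    cov_hc0 = xtx_inv @ meat @ xtx_inv

    return beta, np.sqrt(np.diag(cov_classical)), np.sqrt(np.diag(cov_hc0))

rng = np.random.default_rng(0)
n_obs = 500
x = rng.uniform(1.0, 5.0, size=n_obs)
noise = rng.normal(scale=0.5 * x)        # heteroskedastic: spread grows with x
y = 1.0 + 2.0 * x + noise
X = np.column_stack([np.ones(n_obs), x])

beta_hat, se_classical, se_hc0 = ols_with_hc0(X, y)
```

The coefficient estimates are the same under both approaches; only the estimated standard errors differ.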

Errors and Residuals in Statistics

In statistics and optimization, errors and residuals are two closely related and easily confused measures of the deviation of an observed value of an element of a statistical sample from its "true value" (not necessarily observable). The error of an observation is the deviation of the observed value from the true value of a quantity of interest (for example, a population mean). The residual is the difference between the observed value and the ''estimated'' value of the quantity of interest (for example, a sample mean). The distinction is most important in regression analysis, where the concepts are sometimes called the regression errors and regression residuals and where they lead to the concept of studentized residuals. In econometrics, "errors" are also called disturbances.

Introduction

Suppose there is a series of observations from a univariate distribution and we want to estimate the mean of that distribution (the so-called location model). In this case, the errors are th ...
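For the location model, the difference between errors and residuals can be shown with a simulated sample where the population mean is known by construction. The population mean, scale, sample size, and seed below are hypothetical values chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
population_mean = 10.0   # known here only because the data are simulated
sample = rng.normal(loc=population_mean, scale=2.0, size=20)

# Errors: deviations from the true (usually unobservable) population mean.
errors = sample - population_mean

# Residuals: deviations from the estimated mean (the sample mean).
residuals = sample - sample.mean()

# Every error differs from its residual by the same amount:
# the gap between the sample mean and the population mean.
offset = sample.mean() - population_mean
```

The residuals sum to zero by construction, whereas the errors generally do not.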

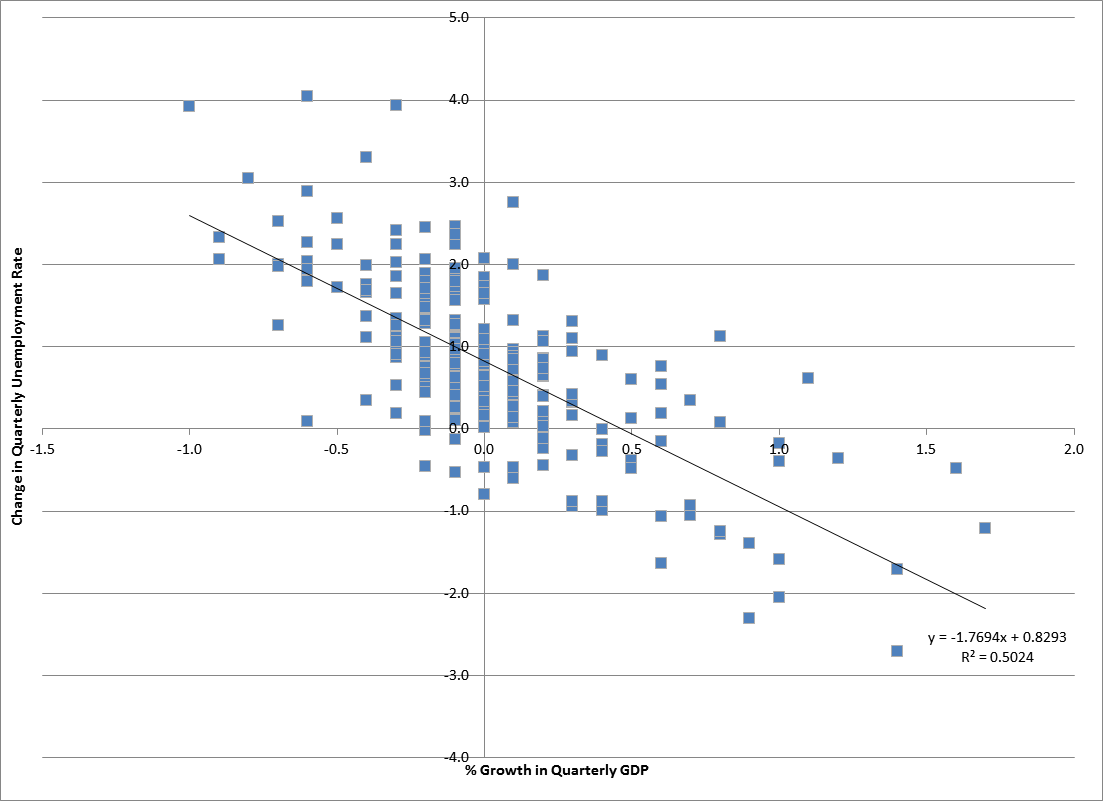

Regression Equation

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships between a dependent variable (often called the 'outcome' or 'response' variable, or a 'label' in machine learning parlance) and one or more independent variables (often called 'predictors', 'covariates', 'explanatory variables' or 'features'). The most common form of regression analysis is linear regression, in which one finds the line (or a more complex linear combination) that most closely fits the data according to a specific mathematical criterion. For example, the method of ordinary least squares computes the unique line (or hyperplane) that minimizes the sum of squared differences between the true data and that line (or hyperplane). For specific mathematical reasons (see linear regression), this allows the researcher to estimate the conditional expectation (or population average value) of the dependent variable when the independent variables take on a given set of ...
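Ordinary least squares for a single predictor can be sketched with NumPy's least-squares solver. The function name and the toy data are hypothetical; the data are noise-free so that the fitted line coincides with the line that generated them.

```python
import numpy as np

def fit_line(x, y):
    """Return (intercept, slope) minimizing the sum of squared differences
    between y and the fitted line."""
    design = np.column_stack([np.ones_like(x), x])   # intercept column plus predictor
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef

x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 1.0 + 2.0 * x                  # generated from a known line (assumption)
intercept, slope = fit_line(x, y)
```

With noisy data the same call returns the least-squares line rather than an exact fit.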