
Analytica (software)

Analytica is a visual software package developed by Lumina Decision Systems for creating, analyzing, and communicating quantitative decision models. It combines hierarchical influence diagrams for visually creating and viewing models, intelligent arrays for working with multidimensional data, Monte Carlo simulation for analyzing risk and uncertainty, and optimization, including linear and nonlinear programming. Its design is based on ideas from the field of decision analysis. As a computer language, it combines a declarative (non-procedural) structure for referential transparency, array abstraction, and automatic dependency maintenance for efficient sequencing of computation.

Hierarchical influence diagrams

Analytica models are organized as influence diagrams. Variables (and other objects) appear as nodes of various shapes on a diagram, connected by arrows that provide a visual representation of dependencies. Analytica influence diagrams may be hierarchical, in which a single *module* n ...

Decision Analysis

Decision analysis (DA) is the discipline comprising the philosophy, methodology, and professional practice necessary to address important decisions in a formal manner. Decision analysis includes many procedures, methods, and tools for identifying, clearly representing, and formally assessing important aspects of a decision; for prescribing a recommended course of action by applying the maximum expected-utility action axiom to a well-formed representation of the decision; and for translating the formal representation of a decision and its corresponding recommendation into insight for the decision maker and other corporate and non-corporate stakeholders.

History

In 1931, mathematical philosopher Frank Ramsey pioneered the idea of subjective probability as a ...
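The maximum expected-utility rule mentioned above can be illustrated with a minimal sketch. The function names, the action labels, and the probability and utility figures are all hypothetical and chosen only for illustration; a real decision analysis would elicit them from the decision maker.

```python
from typing import Mapping, Sequence, Tuple

# Each outcome is a (probability, utility) pair; both are illustrative inputs.
Outcome = Tuple[float, float]


def expected_utility(outcomes: Sequence[Outcome]) -> float:
    """Probability-weighted sum of utilities for one action."""
    total_probability = sum(p for p, _ in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    return sum(p * u for p, u in outcomes)


def best_action(actions: Mapping[str, Sequence[Outcome]]) -> str:
    """Return the action whose expected utility is largest."""
    return max(actions, key=lambda name: expected_utility(actions[name]))


# Hypothetical decision: a risky launch versus a safe delay.
example_actions = {
    "launch": [(0.6, 100.0), (0.4, -50.0)],
    "wait": [(1.0, 30.0)],
}
recommended = best_action(example_actions)
```

Here the risky action is recommended because its expected utility exceeds the certain payoff of waiting; a different utility scale (for example, one reflecting risk aversion) could reverse that choice.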

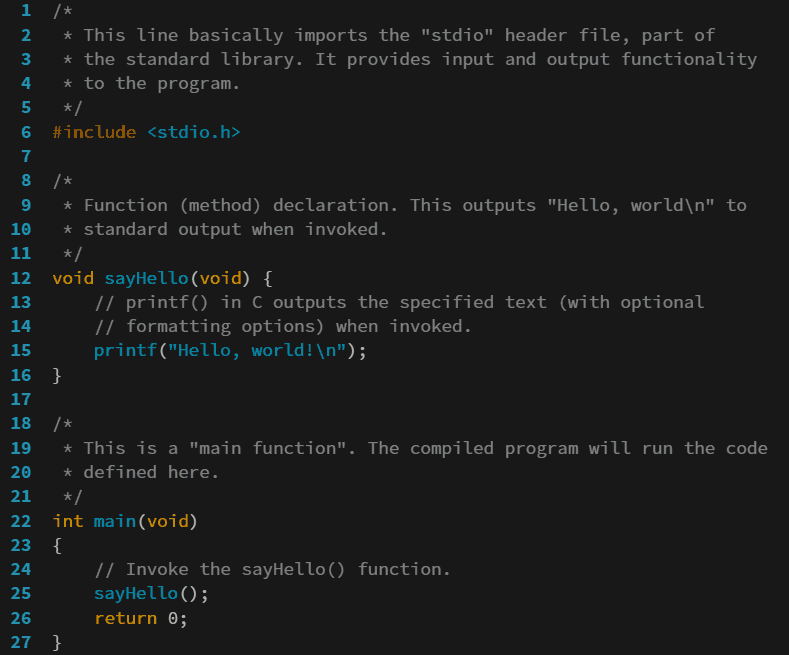

C++ (programming Language)

C (pronounced like the letter c) is a general-purpose programming language. It was created in the 1970s by Dennis Ritchie and remains very widely used and influential. By design, C's features cleanly reflect the capabilities of the targeted CPUs. It has found lasting use in operating systems code (especially in kernels), device drivers, and protocol stacks, but its use in application software has been decreasing. C is commonly used on computer architectures that range from the largest supercomputers to the smallest microcontrollers and embedded systems. A successor to the programming language B, C was originally developed at Bell Labs by Ritchie between 1972 and 1973 to construct utilities running on Unix. It was applied to re-implementing the kernel of the Unix operating system. During the 1980s, C gradually gained popularity. It has become one of the most widely used programming langu ...

Net Present Value

The net present value (NPV) or net present worth (NPW) is a way of measuring the value of an asset that has cash flows by adding up the present value of all the future cash flows that asset will generate. The present value of a cash flow depends on the interval of time between now and the cash flow because of the time value of money (which includes the annual effective discount rate). It provides a method for evaluating and comparing capital projects or financial products with cash flows spread over time, as in loans, investments, payouts from insurance contracts, and many other applications. Time value of money dictates that time affects the value of cash flows. For example, a lender may offer 99 cents for the promise of receiving $1.00 a month from now, but the promise to receive that same dollar 20 years in the future would be worth much less today to that same lender, even if the payback in both cases was equally certain. This decrease in the current value of future c ...
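The discounting described above can be sketched in a few lines. This is a minimal illustration that assumes a constant per-period discount rate and cash flows arriving at the end of evenly spaced periods, with the first entry at time 0; the function names are hypothetical.

```python
from typing import Sequence


def present_value(cash_flow: float, rate: float, periods: float) -> float:
    """Discount a single cash flow received `periods` periods from now."""
    return cash_flow / (1.0 + rate) ** periods


def net_present_value(rate: float, cash_flows: Sequence[float]) -> float:
    """Sum the present values of cash flows at times 0, 1, 2, ...

    cash_flows[0] occurs now (typically a negative initial outlay),
    cash_flows[1] one period from now, and so on.
    """
    return sum(present_value(cf, rate, t) for t, cf in enumerate(cash_flows))


# Paying 100 now to receive 110 in one period breaks even at a 10% rate.
break_even = net_present_value(0.10, [-100.0, 110.0])
```

At a discount rate below 10% the same cash flows have a positive NPV, and above 10% a negative one, which is how NPV is used to compare projects against a required rate of return.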

Influence Diagrams

An influence diagram (ID) (also called a relevance diagram, decision diagram, or decision network) is a compact graphical and mathematical representation of a decision situation. It is a generalization of a Bayesian network, in which not only probabilistic inference problems but also decision-making problems (following the maximum expected utility criterion) can be modeled and solved. The ID was first developed in the mid-1970s by decision analysts, with intuitive semantics that are easy to understand. It is now widely adopted and is becoming an alternative to the decision tree, which typically suffers from exponential growth in the number of branches with each variable modeled. The ID is directly applicable in team decision analysis, since it allows incomplete sharing of information among team members to be modeled and solved explicitly. Extensions of the ID also find use in game theory as an alternative representation of the game tree.

Semantics

An ID is a directed acyclic graph with ...

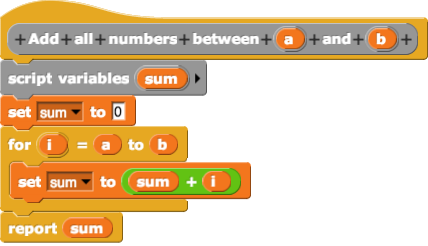

Visual Programming Languages

In computing, a visual programming language (visual programming system, VPL, or VPS), also known as diagrammatic programming, graphical programming, or block coding, is a programming language that lets users create programs by manipulating program elements graphically rather than by specifying them textually. A VPL allows programming with visual expressions, spatial arrangements of text and graphic symbols, used either as elements of syntax or secondary notation. For example, many VPLs are based on the idea of "boxes and arrows", where boxes or other screen objects are treated as entities, connected by arrows, lines, or arcs which represent relations. VPLs are generally the basis of low-code development platforms.

Definition

VPLs may be further classified, according to the type and extent of visual expression used, into icon-based languages, form-based languages, and diagram languages. Visual programming environments provide graphical or iconic elements which can be manipulated by users in an inter ...

Programming Language

A programming language is a system of notation for writing computer programs. Programming languages are described in terms of their syntax (form) and semantics (meaning), usually defined by a formal language. Languages usually provide features such as a type system, variables, and mechanisms for error handling. An implementation of a programming language is required in order to execute programs, namely an interpreter or a compiler. An interpreter directly executes the source code, while a compiler produces an executable program. Computer architecture has strongly influenced the design of programming languages, with the most common type (imperative languages, which implement operations in a specified order) developed to perform well on the popular von Neumann architecture. ...

System Dynamics

System dynamics (SD) is an approach to understanding the nonlinear behaviour of complex systems over time using stocks, flows, internal feedback loops, table functions, and time delays.

Overview

System dynamics is a methodology and mathematical modeling technique to frame, understand, and discuss complex issues and problems. Originally developed in the 1950s to help corporate managers improve their understanding of industrial processes, SD is currently being used throughout the public and private sector for policy analysis and design. System dynamics software with a convenient graphical user interface (GUI) developed into user-friendly versions by the 1990s and has been applied to diverse systems. SD models solve the problem of simultaneity (mutual causation) by updating all variables in small time increments, with positive and negative feedbacks and time delays structuring the interactions and control. The best known SD model is probably the 1972 *The Limits to Growth*. This model fo ...
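The idea of updating a stock in small time increments under positive and negative feedback can be sketched as follows. This is a minimal illustration, not the method of any particular SD package: the single-stock structure, the logistic form of the balancing loop, the simple Euler update, and all parameter values are assumptions made for the example.

```python
from typing import List


def simulate_stock(
    initial_stock: float,
    growth_rate: float,
    capacity: float,
    dt: float,
    steps: int,
) -> List[float]:
    """Integrate one stock with Euler steps of size dt.

    The inflow is a reinforcing (positive) feedback proportional to the stock;
    the outflow is a balancing (negative) feedback that grows as the stock
    approaches the assumed carrying capacity.
    """
    stock = initial_stock
    history = [stock]
    for _ in range(steps):
        inflow = growth_rate * stock
        outflow = growth_rate * stock * stock / capacity
        stock += (inflow - outflow) * dt  # net flow accumulated over one step
        history.append(stock)
    return history


# Hypothetical run: the stock grows slowly, accelerates, then levels off.
trajectory = simulate_stock(
    initial_stock=10.0, growth_rate=0.5, capacity=1000.0, dt=0.1, steps=500
)
```

The resulting S-shaped trajectory comes entirely from the interaction of the two loops; making `dt` smaller gives a closer approximation to the underlying continuous-time behaviour.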

Probability Management

The discipline of probability management communicates and calculates uncertainties as data structures that obey both the laws of arithmetic and probability, while preserving statistical coherence. Such data is referred to as coherent stochastic data. The simplest approach is to use vector arrays of simulated or historical realizations and metadata called Stochastic Information Packets (SIPs). A set of SIPs, which preserve statistical relationships between variables, is said to be coherent and is referred to as a Stochastic Library Unit with Relationships Preserved (SLURP). SIPs and SLURPs allow stochastic simulations to communicate with one another. For example, see Analytica (Wikipedia), the Analytica SIP page, and Autobox. The first ...
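The way SIPs obey ordinary arithmetic while keeping relationships between variables intact can be sketched with plain vectors of trials. The `SIP` class below is a hypothetical illustration, not the published SIP file format, and the demand and price figures are made up.

```python
from dataclasses import dataclass
from operator import mul
from statistics import fmean
from typing import Callable, Tuple


@dataclass(frozen=True)
class SIP:
    """A named vector of realizations; index i is the same trial in every SIP."""

    name: str
    trials: Tuple[float, ...]

    def combine(
        self, other: "SIP", op: Callable[[float, float], float], name: str
    ) -> "SIP":
        """Apply op trial by trial, so dependence between inputs is preserved."""
        if len(self.trials) != len(other.trials):
            raise ValueError("SIPs must share the same number of trials")
        return SIP(name, tuple(op(a, b) for a, b in zip(self.trials, other.trials)))

    def mean(self) -> float:
        return fmean(self.trials)


# Hypothetical coherent pair: price falls in the trials where demand is high.
demand = SIP("demand", (90.0, 100.0, 110.0))
price = SIP("price", (12.0, 10.0, 8.0))
revenue = demand.combine(price, mul, "revenue")
```

Because the two input SIPs are negatively related across trials, the mean of `revenue` is lower than the product of the two input means; multiplying averages would miss that, which is the kind of error coherent trial-by-trial arithmetic avoids.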

Cumulative Distribution Function

In probability theory and statistics, the cumulative distribution function (CDF) of a real-valued random variable $X$, or just distribution function of $X$, evaluated at $x$, is the probability that $X$ will take a value less than or equal to $x$. Every probability distribution supported on the real numbers, discrete or "mixed" as well as continuous, is uniquely identified by a right-continuous monotone increasing function (a càdlàg function) $F \colon \mathbb{R} \rightarrow [0,1]$ satisfying $\lim_{x \to -\infty} F(x) = 0$ and $\lim_{x \to \infty} F(x) = 1$. In the case of a scalar continuous distribution, it gives the area under the probability density function from negative infinity to $x$. Cumulative distribution functions are also used to specify the distribution of multivariate random variables.

Definition

The cumulative distribution function of a real-valued random variable $X$ is the function given by $F_X(x) = \operatorname{P}(X \leq x)$, where the right-hand side represents the probability ...
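For a finite sample, the definition above has a direct analogue: the empirical CDF, which reports the fraction of observations less than or equal to $x$. The sketch below is an illustration using only the standard library; the function name is hypothetical.

```python
from bisect import bisect_right
from typing import Callable, Iterable


def empirical_cdf(sample: Iterable[float]) -> Callable[[float], float]:
    """Return F(x) = (number of observations <= x) / (sample size)."""
    ordered = sorted(sample)
    n = len(ordered)
    if n == 0:
        raise ValueError("sample must not be empty")

    def cdf(x: float) -> float:
        # bisect_right counts how many sorted values are <= x.
        return bisect_right(ordered, x) / n

    return cdf


F = empirical_cdf([3.0, 1.0, 2.0, 2.0])
```

The resulting step function is right-continuous and non-decreasing, equals 0 below the smallest observation, and equals 1 at and above the largest, mirroring the limiting properties of a CDF.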

Probability Density Function

In probability theory, a probability density function (PDF), density function, or density of an absolutely continuous random variable, is a function whose value at any given sample (or point) in the sample space (the set of possible values taken by the random variable) can be interpreted as providing a *relative likelihood* that the value of the random variable would be equal to that sample. Probability density is the probability per unit length. In other words, while the *absolute likelihood* for a continuous random variable to take on any particular value is 0 (since there is an infinite set of possible values to begin with), the value of the PDF at two different samples can be used to infer, in any particular draw of the random variable, how much more likely it is that the random variable would be close to one sample compared to the other sample. More precisely, the PDF is used to specify the probability of the random variable falling within ...

Quantile

In statistics and probability, quantiles are cut points dividing the range of a probability distribution into continuous intervals with equal probabilities, or dividing the observations in a sample in the same way. There is one fewer quantile than the number of groups created. Common quantiles have special names, such as *quartiles* (four groups), *deciles* (ten groups), and *percentiles* (100 groups). The groups created are termed halves, thirds, quarters, etc., though sometimes the terms for the quantile are used for the groups created, rather than for the cut points. $q$-quantiles are values that partition a finite set of values into $q$ subsets of (nearly) equal sizes. There are $q - 1$ partitions of the $q$-quantiles, one for each integer $k$ satisfying $0 < k < q$. In some cases the value of a quantile may not be uniquely determined, as can be the case for the median (2-quantile) of a uniform probability distribution on a set of even size. Quantiles can also be applied to continuous di ...
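The statement that there is one fewer cut point than groups can be checked with Python's standard `statistics.quantiles` function. The wrapper name and the sample data below are illustrative; note that several interpolation conventions exist, and this sketch uses the library's default ("exclusive") method.

```python
from statistics import quantiles
from typing import List, Sequence


def cut_points(data: Sequence[float], groups: int) -> List[float]:
    """Return the groups - 1 cut points that split data into equal-sized groups."""
    return quantiles(data, n=groups)


sample = list(range(1, 12))  # the integers 1 through 11

quartiles = cut_points(sample, 4)   # three cut points, four groups
deciles = cut_points(sample, 10)    # nine cut points, ten groups
median = cut_points(sample, 2)      # one cut point, two halves
```

Other tools may return slightly different values for the same data because they interpolate between observations differently, which is one practical face of the non-uniqueness mentioned above.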

Mean

A mean is a quantity representing the "center" of a collection of numbers and is intermediate to the extreme values of the set of numbers. There are several kinds of means (or "measures of central tendency") in mathematics, especially in statistics. Each attempts to summarize or typify a given group of data, illustrating the magnitude and sign of the data set. Which of these measures is most illuminating depends on what is being measured, and on context and purpose. The *arithmetic mean*, also known as "arithmetic average", is the sum of the values divided by the number of values. The arithmetic mean of a set of numbers $x_1, x_2, \ldots, x_n$ is typically denoted using an overhead bar, $\bar{x}$. If the numbers are from observing a sample of a larger group, the arithmetic mean is termed the *sample mean* ($\bar{x}$) to distinguish it from the group mean (or expected value) of the underlying distribution, denoted $\mu$ or $\mu_x$. Outside probability and statistics, a wide rang ...
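As a small worked illustration of the arithmetic mean defined above (the sum of the values divided by their count), the sketch below uses a hypothetical helper name and made-up data.

```python
from typing import Iterable


def arithmetic_mean(values: Iterable[float]) -> float:
    """Sum of the values divided by the number of values."""
    items = list(values)
    if not items:
        raise ValueError("the mean of an empty collection is undefined")
    return sum(items) / len(items)


# (2 + 4 + 9) / 3 = 5, which lies between the smallest and largest value.
sample_mean = arithmetic_mean([2.0, 4.0, 9.0])
```

The result always lies between the minimum and maximum of the data, consistent with the description of a mean as intermediate to the extreme values.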