
3D Pose Estimation

3D pose estimation is the process of predicting the transformation of an object from a user-defined reference pose, given an image or a 3D scan. It arises in computer vision and robotics, where the pose or transformation of an object can be used for alignment of computer-aided design models, identification, grasping, or manipulation of the object. The image data from which the pose of an object is determined can be a single image, a stereo image pair, or an image sequence where, typically, the camera is moving with a known velocity. The objects considered can be rather general, including a living being or body parts, e.g., a head or hands. The methods used for determining the pose of an object, however, are usually specific to a class of objects and cannot generally be expected to work well for other types of objects. From an uncalibrated 2D camera: It is possible to estimate the 3D rotation and translation of a 3D object from a single 2D photo, if a ...
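As a minimal sketch of what "the transformation of an object from a reference pose" means, a rigid pose can be written as a rotation `R` and a translation `t` applied to points given in the reference pose. The function names, the choice of a rotation about the z-axis, and the numeric values below are illustrative assumptions, not part of the summary above.

```python
import math

def rotation_z(theta):
    """Return the 3x3 rotation matrix for an angle theta (radians) about the z-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]

def apply_pose(R, t, point):
    """Map a point from the reference pose to the observed pose: R * point + t."""
    return [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]

# Hypothetical pose: quarter turn about z, then a shift of (1, 0, 2).
R = rotation_z(math.pi / 2)
t = [1.0, 0.0, 2.0]
moved = apply_pose(R, t, [1.0, 0.0, 0.0])
```

Estimating a pose is the inverse problem: given observations of the moved points (or their image projections), recover `R` and `t`.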

C (Programming Language)

C (pronounced like the letter c) is a general-purpose programming language. It was created in the 1970s by Dennis Ritchie and remains very widely used and influential. By design, C's features cleanly reflect the capabilities of the targeted CPUs. It has found lasting use in operating systems code (especially in kernels), device drivers, and protocol stacks, but its use in application software has been decreasing. C is commonly used on computer architectures that range from the largest supercomputers to the smallest microcontrollers and embedded systems. A successor to the programming language B, C was originally developed at Bell Labs by Ritchie between 1972 and 1973 to construct utilities running on Unix. It was applied to re-implementing the kernel of the Unix operating system. During the 1980s, C gradually gained popularity. It has become one of the most widely used programming langu ...

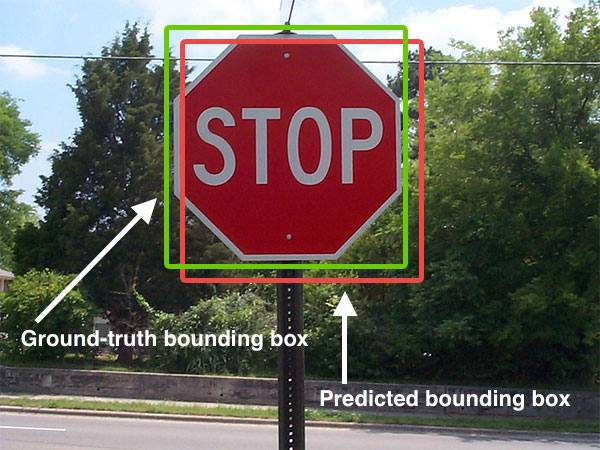

Computer Vision

Computer vision tasks include methods for acquiring, processing, analyzing, and understanding digital images, and for extracting high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. "Understanding" in this context signifies the transformation of visual images (the input to the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scientific discipline of computer vision is concerned with the theory behind artificial systems that extract information from images. Image data can take many forms, such as video sequences, views from multiple cameras, multi-dimensional data from a 3D scanner, 3D point clouds ...

Pose Estimation

3D pose estimation is the process of predicting the transformation of an object from a user-defined reference pose, given an image or a 3D scan. It arises in computer vision and robotics, where the pose or transformation of an object can be used for alignment of computer-aided design models, identification, grasping, or manipulation of the object. The image data from which the pose of an object is determined can be a single image, a stereo image pair, or an image sequence where, typically, the camera is moving with a known velocity. The objects considered can be rather general, including a living being or body parts, e.g., a head or hands. The methods used for determining the pose of an object, however, are usually specific to a class of objects and cannot generally be expected to work well for other types of objects. From an uncalibrated 2D camera: It is possible to estimate the 3D rotation and translation of a 3D object from a single 2D photo, if a ...

Trifocal Tensor

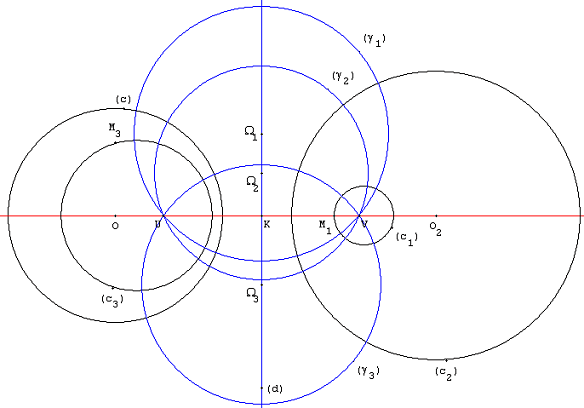

In computer vision, the trifocal tensor (also tritensor) is a 3×3×3 array of numbers (i.e., a tensor) that incorporates all projective geometric relationships among three views. It relates the coordinates of corresponding points or lines in three views, being independent of the scene structure and depending only on the relative motion (i.e., pose) among the three views and their intrinsic calibration parameters. Hence, the trifocal tensor can be considered the generalization of the fundamental matrix to three views. Although the tensor is made up of 27 elements, only 18 of them are actually independent. There is also a so-called calibrated trifocal tensor, which relates the coordinates of points and lines in three views given their intrinsic parameters and encodes the relative pose of the cameras up to global scale, totalling 11 independent elements or degrees of freedom. The reduced degrees of freedom allow for fewer correspondences to fit the model, at t ...
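One common way to account for the counts of 18 and 11 independent elements, offered here as a sketch and not as part of the summary above: an uncalibrated view is a 3×4 projection matrix with 11 degrees of freedom (12 entries up to scale), and three such views are defined only up to a 3D projective transformation with 15 degrees of freedom. In the calibrated case, the second and third views each have a 6-degree-of-freedom pose relative to the first, and one degree is lost to the unknown global scale.

```latex
\begin{align*}
\text{uncalibrated:} \quad & 3 \times 11 - 15 = 18 \\
\text{calibrated:}   \quad & 2 \times 6 - 1 = 11
\end{align*}
```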

Homography (Computer Vision)

In projective geometry, a homography is an isomorphism of projective spaces, induced by an isomorphism of the vector spaces from which the projective spaces derive. It is a bijection that maps lines to lines, and thus a collineation. In general, some collineations are not homographies, but the fundamental theorem of projective geometry asserts that this is not so in the case of real projective spaces of dimension at least two. Synonyms include projectivity, projective transformation, and projective collineation. Historically, homographies (and projective spaces) were introduced to study perspective and projections in Euclidean geometry, and the term "homography", which, etymologically, roughly means "similar drawing", dates from this time. At the end of the 19th century, formal definitions of projective spaces were introduced, which extended Euclidean and affine spaces by the addition of new points called points at infinity. The term "projective transformation" originated in ...
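In the computer vision setting, a homography of the plane is usually represented by an invertible 3×3 matrix acting on homogeneous coordinates. The following is a minimal sketch under that assumption; the function name and the example matrices are hypothetical and chosen only for illustration.

```python
def apply_homography(H, point):
    """Apply a 3x3 homography H to a 2D point using homogeneous coordinates."""
    x, y = point
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if w == 0:
        # The image is a point at infinity and has no finite 2D coordinates.
        raise ValueError("point maps to a point at infinity")
    return (xh / w, yh / w)

# A translation by (2, 3) written as a homography.
H_shift = [[1.0, 0.0, 2.0],
           [0.0, 1.0, 3.0],
           [0.0, 0.0, 1.0]]
shifted = apply_homography(H_shift, (1.0, 1.0))

# Multiplying H by a nonzero scalar gives the same mapping,
# because homogeneous coordinates are defined only up to scale.
H_scaled = [[2.0, 0.0, 0.0],
            [0.0, 2.0, 0.0],
            [0.0, 0.0, 2.0]]
unchanged = apply_homography(H_scaled, (1.0, 1.0))
```

The division by `w` is what distinguishes a general homography from an affine map, for which the last row is `[0, 0, 1]` and `w` is always 1.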

Camera Resectioning

Camera resectioning is the process of estimating the parameters of a pinhole camera model approximating the camera that produced a given photograph or video; it determines which incoming light ray is associated with each pixel on the resulting image. Basically, the process determines the pose of the pinhole camera. Usually, the camera parameters are represented in a 3 × 4 projection matrix called the camera matrix. The extrinsic parameters define the camera pose (position and orientation), while the intrinsic parameters specify the camera image format (focal length, pixel size, and image origin). This process is often called geometric camera calibration or simply camera calibration, although that term may also refer to photometric camera calibration or be restricted to the estimation of the intrinsic parameters only. Exterior orientation and interior orientation refer to the determination of only the extrinsic and intrinsic parameters, respectively. The ...
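To make the 3 × 4 camera matrix concrete, the sketch below builds it as P = K [R | t] from intrinsic parameters K and extrinsic parameters (R, t), then projects a 3D point to pixel coordinates. The focal length, image origin, and point are made-up values, and the helper names are assumptions for illustration; resectioning itself is the inverse task of recovering P from known point correspondences.

```python
def camera_matrix(K, R, t):
    """Build the 3x4 projection matrix P = K [R | t]."""
    Rt = [R[i] + [t[i]] for i in range(3)]  # 3x4 extrinsic matrix
    return [[sum(K[i][k] * Rt[k][j] for k in range(3)) for j in range(4)]
            for i in range(3)]

def project(P, X):
    """Project a 3D point X to pixel coordinates with camera matrix P."""
    Xh = list(X) + [1.0]  # homogeneous 3D point
    u, v, w = (sum(P[i][j] * Xh[j] for j in range(4)) for i in range(3))
    return (u / w, v / w)

# Hypothetical intrinsics: focal length 800 px, image origin at (320, 240).
K = [[800.0, 0.0, 320.0],
     [0.0, 800.0, 240.0],
     [0.0, 0.0, 1.0]]
# Hypothetical extrinsics: camera at the world origin, axes aligned with the world.
R = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.0]

P = camera_matrix(K, R, t)
pixel = project(P, (0.5, -0.25, 2.0))
```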

Articulated Body Pose Estimation

An articulated vehicle is a vehicle which has a permanent or semi-permanent coupling in its construction. This coupling works as a large pivot joint, allowing it to bend and turn more sharply. There are many kinds, from heavy equipment to buses, trams and trains. Steam locomotives were sometimes articulated so the driving wheels could pivot around corners. In a broader sense, any vehicle towing a trailer (including a semi-trailer) could be described as articulated (which comes from the Latin word "articulus", "small joint"). In the UK, an "articulated lorry" is the combination of a tractor and a trailer, abbreviated to "artic". In the US, it is called a semi-trailer truck, tractor-trailer or semi-truck and is not necessarily considered articulated. Types. Buses: Buses are articulated to allow for a much longer bus that can still navigate within the turning radius of a normal bus. Most buses have one articulation, but some have two. Trucks: In the UK, tractor unit an ...

3D Object Recognition

In computer vision, 3D object recognition involves recognizing and determining 3D information, such as the pose, volume, or shape, of user-chosen 3D objects in a photograph or range scan. Typically, an example of the object to be recognized is presented to a vision system in a controlled environment, and then, for an arbitrary input such as a video stream, the system locates the previously presented object. This can be done either off-line or in real time. The algorithms for solving this problem are specialized for locating a single pre-identified object, and can be contrasted with algorithms which operate on general classes of objects, such as face recognition systems or 3D generic object recognition. Due to the low cost and ease of acquiring photographs, a significant amount of research has been devoted to 3D object recognition in photographs. 3D single-object recognition in photographs: The method of recognizing a 3D object depends on t ...

Gesture Recognition

Gesture recognition is an area of research and development in computer science and language technology concerned with the recognition and interpretation of human gestures. A subdiscipline of computer vision, it employs mathematical algorithms to interpret gestures. Gesture recognition offers a path for computers to begin to better understand and interpret human body language, previously not possible through text or unenhanced graphical user interfaces (GUIs). Gestures can originate from any bodily motion or state, but commonly originate from the face or hand. One area of the field is emotion recognition derived from facial expressions and hand gestures. Users can make simple gestures to control or interact with devices without physically touching them. Many approaches have been made using cameras and computer vision algorithms to interpret sign language; however, the identification and recognition of posture, gait, pro ...

Scale-invariant Feature Transform

The scale-invariant feature transform (SIFT) is a computer vision algorithm to detect, describe, and match local features in images, invented by David Lowe in 1999. Applications include object recognition, robotic mapping and navigation, image stitching, 3D modeling, gesture recognition, video tracking, individual identification of wildlife, and match moving. SIFT keypoints of objects are first extracted from a set of reference images and stored in a database. An object is recognized in a new image by individually comparing each feature from the new image to this database and finding candidate matching features based on the Euclidean distance of their feature vectors. From the full set of matches, subsets of keypoints that agree on the object and its location, scale, and orientation in the new image are identified to isolate the good matches. The determination of consistent clusters is performed rapidly by using an efficient hash table implementation of the generalised Hough t ...
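The matching step described above, comparing each new feature to a database by the Euclidean distance of feature vectors, can be sketched as a brute-force nearest-neighbour search. The ratio check against the second-nearest neighbour and its 0.8 threshold are assumptions added for illustration and are not stated in the summary; the toy 3-dimensional vectors stand in for real SIFT descriptors, and the database is assumed to hold at least two entries.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def match_features(query, database, max_ratio=0.8):
    """Return (query_index, database_index) pairs for unambiguous nearest neighbours.

    A candidate is kept only if its distance is below max_ratio times the
    distance to the second-nearest database entry (an assumed filter).
    """
    matches = []
    for qi, q in enumerate(query):
        ranked = sorted((euclidean(q, d), di) for di, d in enumerate(database))
        best, second = ranked[0], ranked[1]
        if best[0] < max_ratio * second[0]:
            matches.append((qi, best[1]))
    return matches

# Toy descriptors (hypothetical values).
database = [[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 10.0, 0.0]]
query = [[0.5, 0.0, 0.0],   # clearly closest to database entry 0
         [5.0, 0.0, 0.0]]   # equidistant from entries 0 and 1, so rejected
matches = match_features(query, database)
```

A production system would replace the linear scan with an approximate nearest-neighbour index, since the database may hold many thousands of descriptors.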