Rendering (computer graphics)

Rendering is the process of generating a photorealistic or non-photorealistic image from input data such as 3D models. The word "rendering" (in one of its senses) originally meant the task performed by an artist when depicting a real or imaginary thing (the finished artwork is also called a "rendering"). Today, to "render" commonly means to generate an image or video from a precise description (often created by an artist) using a computer program.

Ray marching is a family of algorithms, used by ray casting, for finding intersections between a ray and a complex object, such as a volumetric dataset or a surface defined by a signed distance function. It is not, by itself, a rendering method, but it can be incorporated into ray tracing and path tracing, and is used by rasterization to implement screen-space reflection and other effects.

A technique called photon mapping traces paths of photons from a light source to an object, accumulating data about irradiance which is then used during conventional ray tracing or path tracing. Rendering a scene using only rays traced from the light source to the camera is impractical, even though it corresponds more closely to reality, because a huge number of photons would need to be simulated, only a tiny fraction of which actually hit the camera.

Some authors call conventional ray tracing "backward" ray tracing because it traces the paths of photons backwards from the camera to the light source, and call following paths from the light source (as in photon mapping) "forward" ray tracing. However, sometimes the meaning of these terms is reversed. Tracing rays starting at the light source can also be called ''particle tracing'' or ''light tracing'', which avoids this ambiguity.

Real-time rendering, including video game graphics, typically uses rasterization, but increasingly combines it with ray tracing and path tracing. To enable realistic

The term ''rasterization'' (in a broad sense) encompasses many techniques used for 2D rendering and real-time 3D rendering. 3D animated films were rendered by rasterization before ray tracing and

When rendering scenes containing many objects, testing the intersection of a ray with every object becomes very expensive. Special data structures are used to speed up this process by allowing large numbers of objects to be excluded quickly (such as objects behind the camera). These structures are analogous to database indexes for finding the relevant objects. The most common are the ''bounding volume hierarchy'' (BVH), which stores a pre-computed bounding box or sphere for each branch of a tree of objects, and the ''k-d tree'', which recursively divides space into two parts. Recent GPUs include hardware acceleration for BVH intersection tests. K-d trees are a special case of ''binary space partitioning'', which was frequently used in early computer graphics (it can also generate a rasterization order for the painter's algorithm). ''Octrees'', another historically popular technique, are still often used for volumetric data.

Geometric formulas are sufficient for finding the intersection of a ray with shapes like spheres,

Generalization of Lambert's Reflectance Model". SIGGRAPH. pp. 239-246, Jul, 1994

* 1993 – Tone mapping

* 1993 – Subsurface scattering

* 1993 – Bidirectional path tracing (Lafortune & Willems formulation)

* 1994 – Ambient occlusion

* 1995 – Photon mapping

* 1995 – Multiple importance sampling

* 1997 – Bidirectional path tracing (Veach & Guibas formulation)

* 1997 – Metropolis light transport

* 1997 – Instant Radiosity

* 2002 – Precomputed Radiance Transfer

* 2002 – Primary sample space Metropolis light transport

* 2003 – MERL BRDF database

* 2005 – Lightcuts

* 2005 – Radiance caching

* 2009 – Stochastic progressive photon mapping (SPPM)

* 2012 – Vertex connection and merging (VCM) (also called unified path sampling)

* 2012 – Manifold exploration

* 2013 – Gradient-domain rendering

* 2014 – Multiplexed Metropolis light transport

* 2014 – Differentiable rendering

* 2015 – Manifold next event estimation (MNEE)

* 2017 – Path guiding (using adaptive SD-tree)

* 2020 – Spatiotemporal reservoir resampling (ReSTIR)

* 2020 – Neural radiance fields

* 2023 – 3D Gaussian splatting

* SIGGRAPH: the ACM's special interest group in graphics, the largest academic and professional association and conference

* vintage3d.org "The way to home 3d": extensive history of computer graphics hardware, including research, commercialization, and video games and consoles

A software application or component that performs rendering is called a rendering engine, render engine, rendering system, graphics engine, or simply a renderer.

A distinction is made between real-time rendering, in which images are generated and displayed immediately (ideally fast enough to give the impression of motion or animation), and offline rendering (sometimes called pre-rendering), in which images, or film or video frames, are generated for later viewing. Offline rendering can use a slower and higher-quality renderer. Interactive applications such as games must primarily use real-time rendering, although they may incorporate pre-rendered content.

Rendering can produce images of scenes or objects defined using coordinates in 3D space, seen from a particular viewpoint. Such 3D rendering uses knowledge and ideas from optics, the study of visual perception, mathematics, and software engineering, and it has applications such as video games, simulators, visual effects for films and television, design visualization, and medical diagnosis. Realistic 3D rendering requires modeling the propagation of light in an environment, e.g. by applying the rendering equation.
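For reference, the rendering equation is commonly written in the following hemispherical form. The notation here is an assumption (texts differ in symbols and parameterization): $L_o$ is the radiance leaving point $\mathbf{x}$ in direction $\omega_o$, $L_e$ is emitted radiance, $f_r$ is the surface's reflectance function, $L_i$ is the radiance arriving from direction $\omega_i$, $\mathbf{n}$ is the surface normal, and $\Omega$ is the hemisphere above the surface.

```latex
L_o(\mathbf{x}, \omega_o)
  = L_e(\mathbf{x}, \omega_o)
  + \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\,
      L_i(\mathbf{x}, \omega_i)\,
      (\omega_i \cdot \mathbf{n})\, \mathrm{d}\omega_i
```

The first term is the emitted light and the integral is the reflected light. Because $L_i$ at one point is the $L_o$ of some other point in the scene, the equation is recursive, which is why it generally has to be solved approximately.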

Real-time rendering uses high-performance ''rasterization'' algorithms that process a list of shapes and determine which pixels are covered by each shape. When more realism is required (e.g. for architectural visualization or visual effects), slower pixel-by-pixel algorithms such as ''ray tracing'' are used instead. (Ray tracing can also be used selectively during rasterized rendering to improve the realism of lighting and reflections.) A type of ray tracing called ''path tracing'' is currently the most common technique for photorealistic rendering. Path tracing is also popular for generating high-quality non-photorealistic images, such as frames for 3D animated films. Both rasterization and ray tracing can be sped up ("accelerated") by specially designed microprocessors called GPUs.
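The idea of determining which pixels a shape covers can be sketched for a single 2D triangle as follows. This is a minimal illustration, not how any particular renderer or GPU is implemented; the function names `edge` and `covered_pixels` are hypothetical, and real rasterizers add tie-breaking rules for pixels on shared edges, which are omitted here.

```python
import math


def edge(a, b, p):
    """Twice the signed area of triangle (a, b, p).

    The sign tells which side of the directed edge a->b the point p lies on.
    """
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def covered_pixels(v0, v1, v2, width, height):
    """Return the set of (x, y) pixels whose centers lie inside the triangle."""
    # Only pixels inside the triangle's bounding box (clamped to the image)
    # need to be tested.
    min_x = max(math.floor(min(v0[0], v1[0], v2[0])), 0)
    max_x = min(math.floor(max(v0[0], v1[0], v2[0])), width - 1)
    min_y = max(math.floor(min(v0[1], v1[1], v2[1])), 0)
    max_y = min(math.floor(max(v0[1], v1[1], v2[1])), height - 1)

    covered = set()
    for y in range(min_y, max_y + 1):
        for x in range(min_x, max_x + 1):
            center = (x + 0.5, y + 0.5)
            w0 = edge(v1, v2, center)
            w1 = edge(v2, v0, center)
            w2 = edge(v0, v1, center)
            # Inside if the center is on the same side of all three edges
            # (accepting either vertex winding order).
            all_non_negative = w0 >= 0 and w1 >= 0 and w2 >= 0
            all_non_positive = w0 <= 0 and w1 <= 0 and w2 <= 0
            if all_non_negative or all_non_positive:
                covered.add((x, y))
    return covered


pixels = covered_pixels((0, 0), (4, 0), (0, 4), width=4, height=4)
```

Each pixel test is independent of the others, which is one reason this style of algorithm maps well onto parallel hardware.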

Rasterization algorithms are also used to render images containing only 2D shapes such as polygons and text. Applications of this type of rendering include digital illustration, graphic design, 2D animation, desktop publishing and the display of user interfaces.

Historically, rendering was called image synthesis, but today this term is likely to mean AI image generation. The term "neural rendering" is sometimes used when a neural network is the primary means of generating an image but some degree of control over the output image is provided. Neural networks can also assist rendering without replacing traditional algorithms, e.g. by removing noise from path traced images.

Features

Photorealistic rendering

A large proportion of computer graphics research has worked towards producing images that resemble photographs. Fundamental techniques that make this possible were invented in the 1980s, but at the end of the decade, photorealism for complex scenes was still considered a distant goal. Today, photorealism is routinely achievable for offline rendering, but remains difficult for real-time rendering.

In order to produce realistic images, rendering must simulate how light travels from light sources, is reflected, refracted, and scattered (often many times) by objects in the scene, passes through a camera lens, and finally reaches the film or sensor of the camera. The physics used in these simulations is primarily geometrical optics, in which particles of light follow (usually straight) lines called ''rays'', but in some situations (such as when rendering thin films, like the surface of soap bubbles) the wave nature of light must be taken into account.

Effects that may need to be simulated include:

* Shadows, including both shadows with sharp edges and ''soft shadows'' with umbra and penumbra

* Reflections in mirrors and smooth surfaces, as well as rough or rippled reflective surfaces

* Refraction: the bending of light when it crosses a boundary between two transparent materials such as air and glass. The amount of bending varies with the wavelength of the light, which may cause colored fringes or "rainbows" to appear.

* Volumetric effects: absorption and scattering when light travels through partially transparent or translucent substances (called ''participating media'' because they modify the light rather than simply allow rays to pass through)

* Caustics: bright patches, sometimes with distinct filaments and a folded or twisted appearance, resulting when light is reflected or refracted before illuminating an object.
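As one concrete case from the list above, refraction at a boundary follows Snell's law, n1·sin(θi) = n2·sin(θt). The sketch below computes the refracted angle and shows how a wavelength-dependent refractive index produces slightly different angles for red and blue light. The function name `refraction_angle` is hypothetical, and the two glass indices are illustrative values (roughly typical of a crown glass), not data from this article.

```python
import math


def refraction_angle(theta_i, n1, n2):
    """Angle of refraction in radians, from Snell's law.

    theta_i is the angle of incidence (radians, measured from the surface
    normal); n1 and n2 are the refractive indices on the incoming and
    outgoing sides. Returns None when no refracted ray exists
    (total internal reflection).
    """
    s = (n1 / n2) * math.sin(theta_i)
    if abs(s) > 1.0:
        return None
    return math.asin(s)


incidence = math.radians(45.0)
n_air = 1.0
n_glass_red = 1.514   # illustrative index for red light (assumption)
n_glass_blue = 1.522  # illustrative index for blue light (assumption)

red = refraction_angle(incidence, n_air, n_glass_red)
blue = refraction_angle(incidence, n_air, n_glass_blue)
# Blue light sees a higher index, so it is bent closer to the normal.
```

A renderer that traces a single ray for all wavelengths will not reproduce the colored fringes; simulating them requires treating wavelengths (or color channels) separately.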

In realistic scenes, objects are illuminated both by light that arrives directly from a light source (after passing mostly unimpeded through air), and light that has bounced off other objects in the scene. The simulation of this complex lighting is called global illumination. In the past, indirect lighting was often faked (especially when rendering animated films) by placing additional hidden lights in the scene, but today path tracing is used to render it accurately.

For true photorealism, the camera used to take the photograph must be simulated. The ''thin lens approximation'' allows combining perspective projection with depth of field (and bokeh) emulation. Camera lens simulations can be made more realistic by modeling the way light is refracted by the components of the lens. Motion blur is often simulated if film or video frames are being rendered. Simulated lens flare and bloom are sometimes added to make the image appear subjectively brighter (although the design of real cameras tries to reduce these effects).
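To make the thin lens approximation concrete, the sketch below uses the thin lens equation 1/f = 1/s + 1/v to estimate how blurred an out-of-focus point appears on the sensor. The function names and the example numbers are assumptions chosen for illustration; all distances are in metres and must be greater than the focal length.

```python
def image_distance(focal_length, object_distance):
    """Solve the thin lens equation 1/f = 1/s + 1/v for the image distance v."""
    return focal_length * object_distance / (object_distance - focal_length)


def blur_diameter(focal_length, aperture_diameter, focus_distance, object_distance):
    """Diameter of the blur circle on the sensor for a point at object_distance.

    The sensor is placed where objects at focus_distance are sharp. A point
    at another distance converges in front of or behind the sensor, so its
    cone of light crosses the sensor as a disc (by similar triangles).
    """
    v_sensor = image_distance(focal_length, focus_distance)
    v_object = image_distance(focal_length, object_distance)
    return aperture_diameter * abs(v_object - v_sensor) / v_object


f = 0.050   # 50 mm lens (example value)
a = 0.025   # 25 mm aperture diameter, i.e. f/2 (example value)
in_focus = blur_diameter(f, a, focus_distance=2.0, object_distance=2.0)
behind = blur_diameter(f, a, focus_distance=2.0, object_distance=4.0)
```

Here `in_focus` is zero and `behind` is about 0.32 mm. A renderer can reproduce this effect by tracing rays from many points on the lens aperture rather than from a single pinhole.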

Realistic rendering uses mathematical descriptions of how different surface materials reflect light, called ''reflectance models'' or (when physically plausible) ''bidirectional reflectance distribution functions (BRDFs)''. Rendering materials such as marble, plant leaves, and human skin requires simulating an effect called subsurface scattering, in which a portion of the light travels into the material, is scattered, and then travels back out again. The way color, and properties such as roughness, vary over a surface can be represented efficiently using texture mapping.
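The simplest widely used reflectance model is the ideal diffuse (Lambertian) surface, whose BRDF is the constant albedo/π. The sketch below shades such a surface lit by a single distant light; it is an illustration under that assumption, not a general material model, and the function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)


def lambert_brdf(albedo):
    """BRDF of an ideal diffuse surface: the same value in every direction."""
    return albedo / math.pi


def shade(albedo, light_irradiance, normal, to_light):
    """Radiance reflected by a Lambertian surface under one distant light.

    light_irradiance is measured on a plane facing the light; the cosine
    term accounts for the light arriving at an angle to the surface.
    """
    n = normalize(normal)
    l = normalize(to_light)
    cos_theta = max(0.0, dot(n, l))  # no contribution from behind the surface
    return lambert_brdf(albedo) * light_irradiance * cos_theta


facing = shade(0.5, math.pi, normal=(0, 0, 1), to_light=(0, 0, 1))
grazing = shade(0.5, math.pi, normal=(0, 0, 1), to_light=(1, 0, 0))
```

More elaborate models replace `lambert_brdf` with a function of the incoming and outgoing directions, and a texture map can supply a different `albedo` at each surface point.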

Other styles of 3D rendering

For some applications (including early stages of 3D modeling), simplified rendering styles such as wireframe rendering may be appropriate, particularly when the material and surface details have not been defined and only the shape of an object is known. Games and other real-time applications may use simpler and less realistic rendering techniques as an artistic or design choice, or to allow higher frame rates on lower-end hardware.

Orthographic and isometric projections can be used for a stylized effect or to ensure that parallel lines are depicted as parallel in CAD rendering.
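The difference between the two kinds of projection can be shown with a minimal sketch. It assumes a camera at the origin looking along the +z axis, and the function names are hypothetical. Two lines parallel to the z axis keep a constant on-screen separation under orthographic projection but converge under perspective projection.

```python
def perspective(point, focal_length=1.0):
    """Project a 3D point by dividing by its depth (requires z > 0)."""
    x, y, z = point
    return (focal_length * x / z, focal_length * y / z)


def orthographic(point):
    """Project a 3D point by simply discarding its depth."""
    x, y, _z = point
    return (x, y)


def screen_separation(project, depth):
    """Horizontal screen distance between two rails at x = -1 and x = +1."""
    left = project((-1.0, 0.0, depth))
    right = project((1.0, 0.0, depth))
    return right[0] - left[0]


ortho_near = screen_separation(orthographic, 2.0)
ortho_far = screen_separation(orthographic, 10.0)
persp_near = screen_separation(perspective, 2.0)
persp_far = screen_separation(perspective, 10.0)
```

Here both orthographic separations are 2.0, while the perspective separation shrinks from 1.0 to 0.2 as the depth grows from 2 to 10.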

Non-photorealistic rendering (NPR) uses techniques like edge detection and posterization to produce 3D images that resemble technical illustrations, cartoons, or other styles of drawing or painting.
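Posterization, for example, can be sketched as quantizing a continuous tone into a small number of flat levels. The function below is a minimal illustration with a hypothetical name, assuming tones are represented as numbers between 0 and 1.

```python
def posterize(value, levels):
    """Map a tone in [0, 1] to one of `levels` evenly spaced tones."""
    if levels < 2:
        raise ValueError("levels must be at least 2")
    # Bucket index in 0 .. levels-1 (a value of exactly 1.0 goes in the top bucket).
    bucket = min(int(value * levels), levels - 1)
    return bucket / (levels - 1)


smooth_gradient = [0.1, 0.4, 0.9]
banded = [posterize(v, 3) for v in smooth_gradient]
```

With three levels, the smooth values above become `[0.0, 0.5, 1.0]`, giving the abrupt tone changes characteristic of a flat, cartoon-like look.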

Inputs

Before a 3D scene or 2D image can be rendered, it must be described in a way that the rendering software can understand. Historically, inputs for both 2D and 3D rendering were usually text files, which are easier than binary files for humans to edit and debug. For 3D graphics, text formats have largely been supplanted by more efficient binary formats, and by APIs which allow interactive applications to communicate directly with a rendering component without generating a file on disk (although a scene description is usually still created in memory prior to rendering).

Traditional rendering algorithms use geometric descriptions of 3D scenes or 2D images. Applications and algorithms that render visualizations of data scanned from the real world, or scientific simulations, may require different types of input data.

The PostScript format (which is often credited with the rise of desktop publishing) provides a standardized, interoperable way to describe 2D graphics and page layout. The Scalable Vector Graphics (SVG) format is also text-based, and the PDF format uses the PostScript language internally. In contrast, although many 3D graphics file formats have been standardized (including text-based formats such as VRML and X3D), different rendering applications typically use formats tailored to their needs, and this has led to a proliferation of proprietary and open formats, with binary files being more common.
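To illustrate what a text-based 2D description looks like, the sketch below holds a small SVG document in a Python string and parses it with the standard library to list the shapes it contains. The particular shapes, coordinates, and colors are made up for the example.

```python
import xml.etree.ElementTree as ET

# A small SVG image: a filled rectangle, a dashed circle outline, and text.
svg_text = """<svg xmlns="http://www.w3.org/2000/svg"
     width="100" height="100" viewBox="0 0 100 100">
  <rect x="10" y="10" width="80" height="50"
        fill="steelblue" stroke="black" stroke-width="2"/>
  <circle cx="50" cy="70" r="20"
          fill="none" stroke="red" stroke-dasharray="4 2"/>
  <text x="12" y="95" font-family="sans-serif" font-size="10">Hello</text>
</svg>"""

root = ET.fromstring(svg_text)
# Element tags are namespace-qualified, e.g. "{http://www.w3.org/2000/svg}rect".
shapes = [child.tag.split("}")[1] for child in root]
```

Because the description is plain text, it can be read, edited, and compared with ordinary text tools, which is the advantage of text formats noted above.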

2D vector graphics

A vector graphics image description may include:

* Coordinates and curvature information for line segments, arcs, and Bézier curves (which may be used as boundaries of filled shapes)

* Center coordinates, width, and height (or bounding rectangle coordinates) of basic shapes such as rectangles, circles and ellipses

* Color, width and pattern (such as dashed or dotted) for rendering lines

* Colors, patterns, and gradients for filling shapes

* Bitmap image data (either embedded or in an external file) along with scale and position information

* Text to be rendered (along with size, position, orientation, color, and font)

* Clipping information, if only part of a shape or bitmap image should be rendered

* Transparency and compositing information for rendering overlapping shapes

* Color space information, allowing the image to be rendered consistently on different displays and printers

3D geometry

A geometric scene description may include:

* Size, position, and orientation of geometric primitives such as spheres and cones (which may be combined in various ways to create more complex objects)

* Vertex coordinates and surface normal vectors for meshes of triangles or polygons (often rendered as smooth surfaces by subdividing the mesh)

* Transformations for positioning, rotating, and scaling objects within a scene (allowing parts of the scene to use different local coordinate systems)

* "Camera" information describing how the scene is being viewed (position, direction, focal length, and field of view)

* Light information (location, type, brightness, and color)

* Optical properties of surfaces, such as albedo, roughness, and refractive index

* Optical properties of media through which light passes (transparent solids, liquids, clouds, smoke), e.g. absorption and scattering cross sections

* Bitmap image data used as texture maps for surfaces

* Small scripts or programs for generating complex 3D shapes or scenes procedurally

* Description of how object and camera locations and other information change over time, for rendering an animation

Many file formats exist for storing individual 3D objects or "models". These can be imported into a larger scene, or loaded on-demand by rendering software or games. A realistic scene may require hundreds of items like household objects, vehicles, and trees, and 3D artists often utilize large libraries of models. In game production, these models (along with other data such as textures, audio files, and animations) are referred to as "assets".

Volumetric data

Scientific and engineering visualization often requires rendering volumetric data generated by 3D scans or simulations. Perhaps the most common source of such data is medical CT and MRI scans, which need to be rendered for diagnosis. Volumetric data can be extremely large, and requires specialized data formats to store it efficiently, particularly if the volume is ''sparse'' (with empty regions that do not contain data).

Before rendering, level sets for volumetric data can be extracted and converted into a mesh of triangles, e.g. by using the marching cubes algorithm. Algorithms have also been developed that work directly with volumetric data, for example to render realistic depictions of the way light is scattered and absorbed by clouds and smoke, and this type of volumetric rendering is used extensively in visual effects for movies. When rendering lower-resolution volumetric data without interpolation, the individual cubes or "voxels" may be visible, an effect sometimes used deliberately for game graphics.

Photogrammetry and scanning

Photographs of real-world objects can be incorporated into a rendered scene by using them as textures for 3D objects. Photos of a scene can also be stitched together to create panoramic images or environment maps, which allow the scene to be rendered very efficiently but only from a single viewpoint. Scanning of real objects and scenes using structured light or lidar produces point clouds consisting of the coordinates of millions of individual points in space, sometimes along with color information. These point clouds may either be rendered directly or converted into meshes before rendering. (Note: "point cloud" sometimes also refers to a minimalist rendering style that can be used for any 3D geometry, similar to wireframe rendering.)

Neural approximations and light fields

A more recent, experimental approach is description of scenes using radiance fields which define the color, intensity, and direction of incoming light at each point in space. (This is conceptually similar to, but not identical to, the light field recorded by a hologram.) For any useful resolution, the amount of data in a radiance field is so large that it is impractical to represent it directly as volumetric data, and an approximation function must be found. Neural networks are typically used to generate and evaluate these approximations, sometimes using video frames, or a collection of photographs of a scene taken at different angles, as "training data".

Algorithms related to neural networks have recently been used to find approximations of a scene as 3D Gaussians. The resulting representation is similar to a point cloud, except that it uses fuzzy, partially-transparent blobs of varying dimensions and orientations instead of points. As with neural radiance fields, these approximations are often generated from photographs or video frames.

Outputs

The output of rendering may be displayed immediately on the screen (many times a second, in the case of real-time rendering such as games) or saved in a raster graphics file format such as JPEG or PNG. High-end rendering applications commonly use the OpenEXR file format, which can represent finer gradations of colors and high dynamic range lighting, allowing tone mapping or other adjustments to be applied afterwards without loss of quality.

Quickly rendered animations can be saved directly as video files, but for high-quality rendering, individual frames (which may be rendered by different computers in a cluster or ''render farm'' and may take hours or even days to render) are output as separate files and combined later into a video clip.

The output of a renderer sometimes includes more than just RGB color values. For example, the spectrum can be sampled using multiple wavelengths of light, or additional information such as depth (distance from camera) or the material of each point in the image can be included (this data can be used during compositing or when generating texture maps for real-time rendering, or used to assist in removing noise from a path-traced image). Transparency information can be included, allowing rendered foreground objects to be composited with photographs or video. It is also sometimes useful to store the contributions of different lights, or of specular and diffuse lighting, as separate channels, so lighting can be adjusted after rendering. The OpenEXR format allows storing many channels of data in a single file. Renderers such as Blender and Pixar RenderMan support a large variety of configurable values called Arbitrary Output Variables (AOVs).

Techniques

Choosing how to render a 3D scene usually involves trade-offs between speed, memory usage, and realism (although realism is not always desired). The techniques developed over the years follow a loose progression, with more advanced methods becoming practical as computing power and memory capacity increased. Multiple techniques may be used for a single final image.

An important distinction is between image order algorithms, which iterate over pixels in the image, and object order algorithms, which iterate over objects in the scene. For simple scenes, object order is usually more efficient, as there are fewer objects than pixels.

; 2D vector graphics

: The vector displays of the 1960s-1970s used deflection of an electron beam to draw line segments directly on the screen. Nowadays, vector graphics are rendered by rasterization algorithms that also support filled shapes. In principle, any 2D vector graphics renderer can be used to render 3D objects by first projecting them onto a 2D image plane.

; 3D rasterization

: Adapts 2D rasterization algorithms so they can be used more efficiently for 3D rendering, handling hidden surface removal via scanline or z-buffer techniques. Different realistic or stylized effects can be obtained by coloring the pixels covered by the objects in different ways. Surfaces are typically divided into meshes of triangles before being rasterized. Rasterization is usually synonymous with "object order" rendering (as described above).

; Ray casting

: Uses geometric formulas to compute the first object that a ray intersects. It can be used to implement "image order" rendering by casting a ray for each pixel, and finding a corresponding point in the scene. Ray casting is a fundamental operation used for both graphical and non-graphical purposes, e.g. determining whether a point is in shadow, or checking what an enemy can see in a game.

; Ray tracing

: Simulates the bouncing paths of light caused by specular reflection and refraction, requiring a varying number of ray casting operations for each path. Advanced forms use Monte Carlo techniques to render effects such as area lights, depth of field, blurry reflections, and soft shadows, but computing global illumination is usually in the domain of path tracing.

; Radiosity

: A finite element analysis approach that breaks surfaces in the scene into pieces, and estimates the amount of light that each piece receives from light sources, or indirectly from other surfaces. Once the irradiance of each surface is known, the scene can be rendered using rasterization or ray tracing.

; Path tracing

: Uses Monte Carlo integration with a simplified form of ray tracing, computing the average brightness of a sample of the possible paths that a photon could take when traveling from a light source to the camera (for some images, thousands of paths need to be sampled per pixel). It was introduced as a statistically unbiased way to solve the rendering equation, giving ray tracing a rigorous mathematical foundation.

Each of the above approaches has many variations, and there is some overlap. Path tracing may be considered either a distinct technique or a particular type of ray tracing. Note that the usage of terminology related to ray tracing and path tracing has changed significantly over time.

Ray marching is a family of algorithms, used by ray casting, for finding intersections between a ray and a complex object, such as a volumetric dataset or a surface defined by a signed distance function. It is not, by itself, a rendering method, but it can be incorporated into ray tracing and path tracing, and is used by rasterization to implement screen-space reflection and other effects.
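As an illustration of ray marching against a signed distance function, the following minimal Python sketch uses the variant often called sphere tracing, in which each step advances the ray by the distance the function reports. The names `sphere_sdf` and `ray_march`, the step limit, and the tolerance `eps` are assumptions chosen for this example rather than part of any particular renderer.

```python
import math

def sphere_sdf(p, center=(0.0, 0.0, 5.0), radius=1.0):
    """Signed distance from point p to a sphere (negative inside the sphere)."""
    return math.dist(p, center) - radius

def ray_march(origin, direction, sdf, max_steps=128, max_dist=100.0, eps=1e-4):
    """Step along the ray by the distance reported by the SDF at each point.

    Returns the ray parameter t of the first hit, or None if nothing is hit.
    `direction` is assumed to be a unit vector.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:       # close enough to the surface: report a hit
            return t
        t += dist            # safe step: no surface is nearer than dist
        if t > max_dist:     # ray has left the region of interest
            break
    return None

# A ray aimed at the sphere hits it; a ray aimed sideways does not.
hit = ray_march((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), sphere_sdf)
miss = ray_march((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), sphere_sdf)
```

Marching through a volumetric dataset would typically use fixed or density-dependent step sizes instead, since such data does not usually provide a distance bound.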

A technique called photon mapping traces paths of photons from a light source to an object, accumulating data about irradiance which is then used during conventional ray tracing or path tracing. Rendering a scene using only rays traced from the light source to the camera is impractical, even though it corresponds more closely to reality, because a huge number of photons would need to be simulated, only a tiny fraction of which actually hit the camera.

Some authors call conventional ray tracing "backward" ray tracing because it traces the paths of photons backwards from the camera to the light source, and call following paths from the light source (as in photon mapping) "forward" ray tracing. However, sometimes the meaning of these terms is reversed. Tracing rays starting at the light source can also be called ''particle tracing'' or ''light tracing'', which avoids this ambiguity.

Real-time rendering, including video game graphics, typically uses rasterization, but increasingly combines it with ray tracing and path tracing. To enable realistic global illumination, real-time rendering often relies on pre-rendered ("baked") lighting for stationary objects. For moving objects, it may use a technique called ''light probes'', in which lighting is recorded by rendering omnidirectional views of the scene at chosen points in space (often points on a grid to allow easier interpolation). These are similar to environment maps, but typically use a very low resolution or an approximation such as spherical harmonics. (Note: Blender uses the term 'light probes' for a more general class of pre-recorded lighting data, including reflection maps.)

Rasterization

The term ''rasterization'' (in a broad sense) encompasses many techniques used for 2D rendering and real-time 3D rendering. 3D animated films were rendered by rasterization before ray tracing and path tracing became practical.

A renderer combines rasterization with ''geometry processing'' (which is not specific to rasterization) and ''pixel processing'' which computes the RGB color values to be placed in the ''framebuffer'' for display.

The main tasks of rasterization (including pixel processing) are:

* Determining which pixels are covered by each geometric shape in the 3D scene or 2D image (this is the actual rasterization step, in the strictest sense)

* Blending between colors and depths defined at the vertices of shapes, e.g. using barycentric coordinates (''interpolation'')

* Determining if parts of shapes are hidden by other shapes, due to 2D layering or 3D depth (''hidden surface removal'')

* Evaluating a function for each pixel covered by a shape (''shading'')

* Smoothing edges of shapes so pixels are less visible (''anti-aliasing'')

* Blending overlapping transparent shapes (''compositing'')
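The first two tasks in the list above (coverage and interpolation) can be sketched together for a single 2D triangle. This is a simplified illustration, not how any particular graphics pipeline is implemented: the helper names `edge` and `rasterize_triangle` are hypothetical, every pixel of the image is tested rather than only those near the triangle, and one scalar value per vertex stands in for a color or depth.

```python
def edge(a, b, p):
    """Twice the signed area of the triangle (a, b, p)."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize_triangle(v0, v1, v2, c0, c1, c2, width, height):
    """Return {(x, y): value} for pixels whose centers lie inside the triangle.

    c0, c1, c2 are scalar values at the vertices; each covered pixel receives
    their barycentric blend.
    """
    area = edge(v0, v1, v2)
    if area == 0:
        return {}  # degenerate triangle covers nothing
    fragments = {}
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)          # sample at the pixel center
            w0 = edge(v1, v2, p) / area     # barycentric weights; sum is 1
            w1 = edge(v2, v0, p) / area
            w2 = edge(v0, v1, p) / area
            if w0 >= 0 and w1 >= 0 and w2 >= 0:   # coverage test
                fragments[(x, y)] = w0 * c0 + w1 * c1 + w2 * c2
    return fragments

# A right triangle on a 4x4 image, with value 1.0 at the second vertex only.
frags = rasterize_triangle((0, 0), (4, 0), (0, 4), 0.0, 1.0, 0.0, 4, 4)
```

Dividing by the signed area makes the weights non-negative inside the triangle regardless of vertex winding order, which keeps the sketch short at the cost of three divisions per pixel.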

3D rasterization is typically part of a ''graphics pipeline'' in which an application provides lists of triangles to be rendered, and the rendering system transforms and projects their coordinates, determines which triangles are potentially visible in the ''viewport'', and performs the above rasterization and pixel processing tasks before displaying the final result on the screen.

Historically, 3D rasterization used algorithms like the ''Warnock algorithm'' and ''scanline rendering'' (also called "scan-conversion"), which can handle arbitrary polygons and can rasterize many shapes simultaneously. Although such algorithms are still important for 2D rendering, 3D rendering now usually divides shapes into triangles and rasterizes them individually using simpler methods.

High-performance algorithms exist for rasterizing 2D lines, including anti-aliased lines, as well as ellipses and filled triangles. An important special case of 2D rasterization is text rendering, which requires careful anti-aliasing and rounding of coordinates to avoid distorting the letterforms and preserve spacing, density, and sharpness.

After 3D coordinates have been projected onto the image plane, rasterization is primarily a 2D problem, but the 3rd dimension necessitates ''hidden surface removal''. Early computer graphics used geometric algorithms or ray casting to remove the hidden portions of shapes, or used the ''painter's algorithm'', which sorts shapes by depth (distance from camera) and renders them from back to front. Depth sorting was later avoided by incorporating depth comparison into the scanline rendering algorithm. The ''z-buffer'' algorithm performs the comparisons indirectly by including a depth or "z" value in the framebuffer. A pixel is only covered by a shape if that shape's z value is lower (indicating closer to the camera) than the z value currently in the buffer. The z-buffer requires additional memory (an expensive resource at the time it was invented) but simplifies the rasterization code and permits multiple passes. Memory is now faster and more plentiful, and a z-buffer is almost always used for real-time rendering.
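The depth comparison described above can be sketched in a few lines of Python. The function name `draw_with_zbuffer` and the representation of rasterizer output as `(x, y, z, color)` tuples are assumptions made for this example; a real framebuffer would hold numeric color values rather than single characters.

```python
import math

def draw_with_zbuffer(fragments, width, height, background=" "):
    """Resolve visibility per pixel with a depth buffer.

    `fragments` is an iterable of (x, y, z, color) tuples in any order;
    a smaller z means closer to the camera.
    """
    depth = [[math.inf] * width for _ in range(height)]
    color = [[background] * width for _ in range(height)]
    for x, y, z, c in fragments:
        if z < depth[y][x]:      # closer than what is stored: overwrite
            depth[y][x] = z
            color[y][x] = c
    return color

# Three shapes contribute to pixel (0, 0); the closest one ("B") wins,
# regardless of the order in which the fragments arrive.
image = draw_with_zbuffer(
    [(0, 0, 5.0, "A"), (0, 0, 2.0, "B"), (1, 0, 3.0, "A"), (0, 0, 4.0, "C")],
    width=2, height=1,
)
```

Because the result does not depend on submission order, no depth sorting of shapes is needed, which is the simplification the paragraph above refers to.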

A drawback of the basic z-buffer algorithm is that each pixel ends up either entirely covered by a single object or filled with the background color, causing jagged edges in the final image. Early ''anti-aliasing'' approaches addressed this by detecting when a pixel is partially covered by a shape, and calculating the covered area. The A-buffer (and other supersampling and multi-sampling techniques) solve the problem less precisely but with higher performance. For real-time 3D graphics, it has become common to use complicated heuristics (and even neural networks) to perform anti-aliasing.

In 3D rasterization, color is usually determined by a ''pixel shader'' or ''fragment shader'', a small program that is run for each pixel. The shader does not (or cannot) directly access 3D data for the entire scene (this would be very slow, and would result in an algorithm similar to ray tracing) and a variety of techniques have been developed to render effects like shadows and reflections using only texture mapping and multiple passes.

Older and more basic 3D rasterization implementations did not support shaders, and used simple shading techniques such as ''flat shading'' (lighting is computed once for each triangle, which is then rendered entirely in one color), ''Gouraud shading'' (lighting is computed using normal vectors defined at vertices and then colors are interpolated across each triangle), or ''Phong shading'' (normal vectors are interpolated across each triangle and lighting is computed for each pixel).

Until relatively recently, Pixar used rasterization for rendering its animated films. Unlike the renderers commonly used for real-time graphics, the Reyes rendering system in Pixar's RenderMan software was optimized for rendering very small (pixel-sized) polygons, and incorporated stochastic sampling techniques more typically associated with ray tracing.

Ray casting

One of the simplest ways to render a 3D scene is to test if a ray starting at the viewpoint (the "eye" or "camera") intersects any of the geometric shapes in the scene, repeating this test using a different ray direction for each pixel. This method, called ''ray casting'', was important in early computer graphics, and is a fundamental building block for more advanced algorithms. Ray casting can be used to render shapes defined by ''constructive solid geometry'' (CSG) operations.

Early ray casting experiments include the work of Arthur Appel in the 1960s. Appel rendered shadows by casting an additional ray from each visible surface point towards a light source. He also tried rendering the density of illumination by casting random rays from the light source towards the object and plotting the intersection points (similar to the later technique called ''photon mapping'').

When rendering scenes containing many objects, testing the intersection of a ray with every object becomes very expensive. Special data structures are used to speed up this process by allowing large numbers of objects to be excluded quickly (such as objects behind the camera). These structures are analogous to database indexes for finding the relevant objects. The most common are the ''bounding volume hierarchy'' (BVH), which stores a pre-computed bounding box or sphere for each branch of a tree of objects, and the ''k-d tree'' which recursively divides space into two parts. Recent GPUs include hardware acceleration for BVH intersection tests. K-d trees are a special case of ''binary space partitioning'', which was frequently used in early computer graphics (it can also generate a rasterization order for the painter's algorithm). ''Octrees'', another historically popular technique, are still often used for volumetric data.
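To show how a bounding box lets a whole group of objects be excluded with one cheap test, here is a minimal sketch of a ray versus axis-aligned box check using the common "slab" method. The function name `ray_hits_box` is hypothetical, and a full BVH would apply a test of this kind at each node of its tree before descending to the objects inside; that traversal is not shown.

```python
import math

def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: does the ray origin + t*direction (t >= 0) touch the box?

    The box is axis-aligned and given by its minimum and maximum corners.
    """
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near = max(t_near, t0)   # latest entry across all slabs
        t_far = min(t_far, t1)     # earliest exit across all slabs
        if t_near > t_far:
            return False           # the ray exits one slab before entering another
    return True

unit_box = ((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
in_front = ray_hits_box((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), *unit_box)
behind = ray_hits_box((0.0, 0.0, 5.0), (0.0, 0.0, 1.0), *unit_box)
off_axis = ray_hits_box((3.0, 0.0, -5.0), (0.0, 0.0, 1.0), *unit_box)
```

Starting `t_near` at zero is what rejects boxes that lie entirely behind the ray origin, matching the "objects behind the camera" case mentioned above.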

Geometric formulas are sufficient for finding the intersection of a ray with shapes like spheres, polygon

In geometry, a polygon () is a plane figure made up of line segments connected to form a closed polygonal chain.

The segments of a closed polygonal chain are called its '' edges'' or ''sides''. The points where two edges meet are the polygon ...

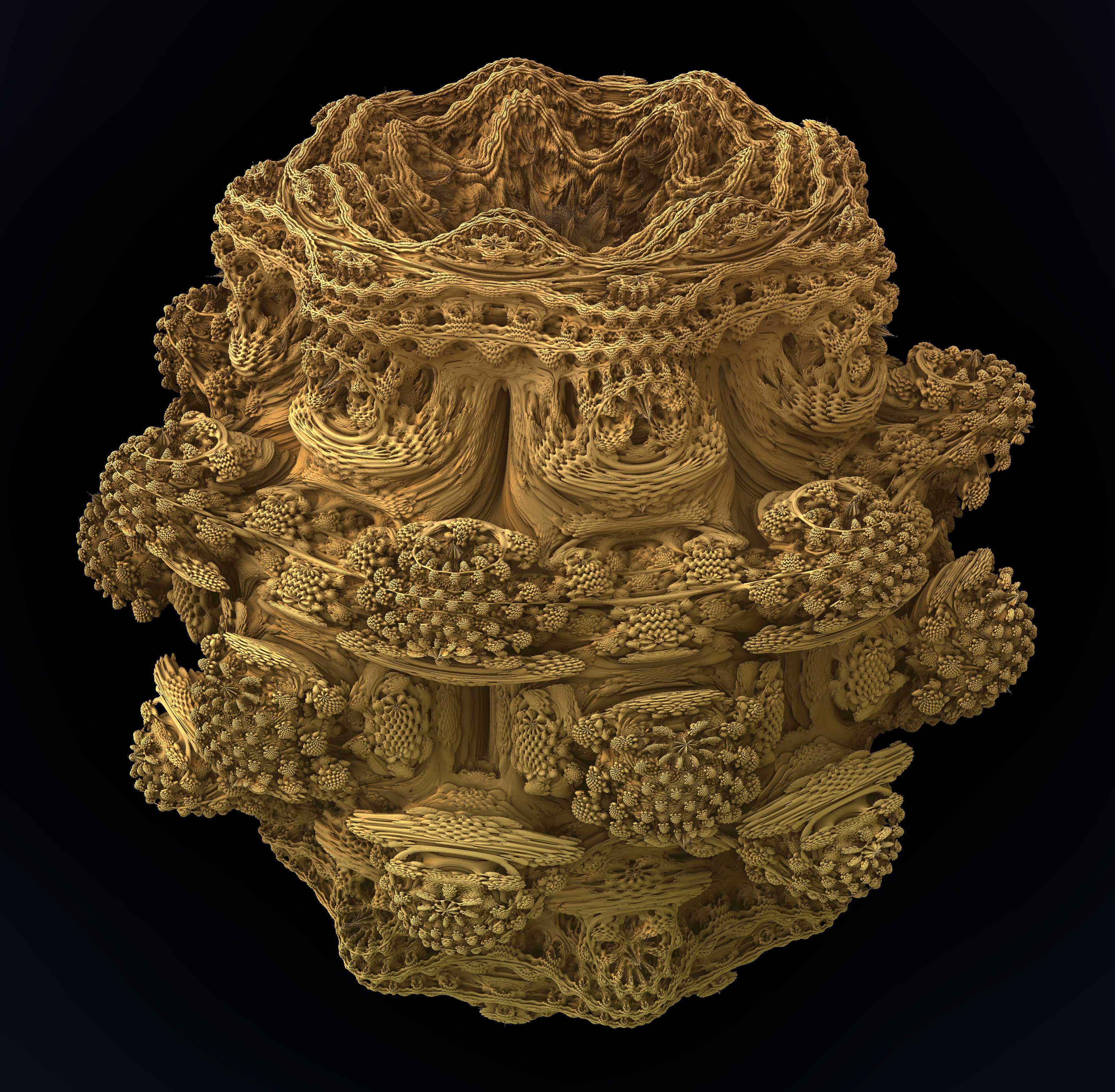

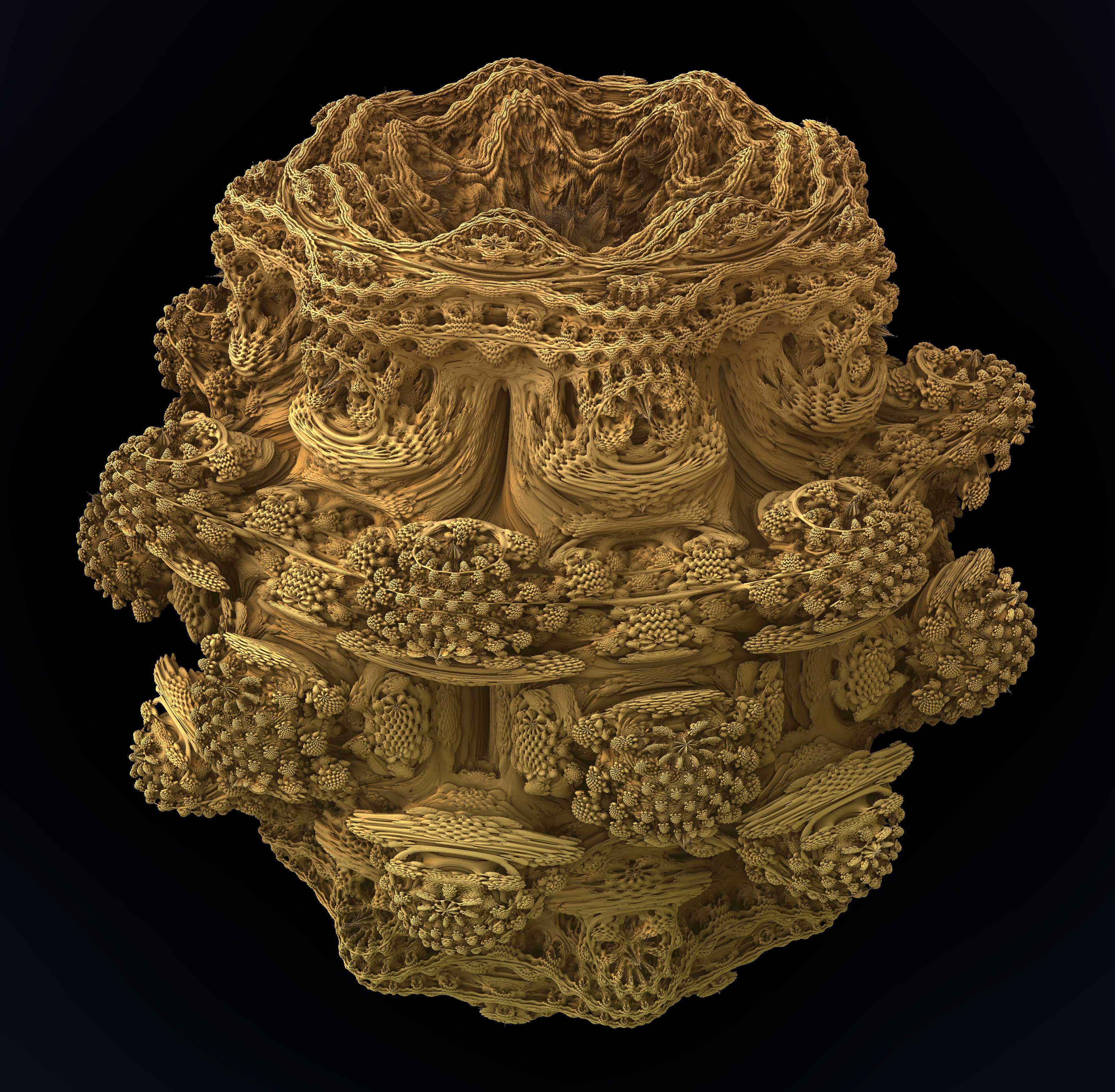

s, and polyhedron, polyhedra, but for most curved surfaces there is no Closed-form expression#Analytic expression, analytic solution, or the intersection is difficult to compute accurately using limited precision Floating-point arithmetic, floating point numbers. Root-finding algorithms such as Newton's method can sometimes be used. To avoid these complications, curved surfaces are often approximated as Triangle mesh, meshes of triangles. Volume rendering (e.g. rendering clouds and smoke), and some surfaces such as fractals, may require ray marching instead of basic ray casting.
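As an illustration of the "geometric formula" case, the sketch below intersects a ray with a sphere by substituting the ray equation into the sphere equation and solving the resulting quadratic. The function name `intersect_sphere` and the tuple-based vectors are assumptions made for this example.

```python
import math

def intersect_sphere(origin, direction, center, radius):
    """Return the smallest t >= 0 with |origin + t*direction - center| = radius, or None."""
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # the ray's line misses the sphere entirely
    root = math.sqrt(disc)
    # Try the nearer root first; fall back to the farther one
    # when the ray starts inside the sphere.
    for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
        if t >= 0.0:
            return t
    return None  # the sphere is behind the ray origin

print(intersect_sphere((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), (0.0, 0.0, 0.0), 1.0))  # 4.0
```

The subtraction in `-b - root` is one place where limited floating point precision can cause trouble for very distant or very large spheres, which is the kind of accuracy problem the paragraph above refers to.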

Ray tracing

Ray casting can be used to render an image by tracing light rays backwards from a simulated camera. After finding a point on a surface where a ray originated, another ray is traced towards the light source to determine if anything is casting a shadow on that point. If not, a ''reflectance model'' (such as Lambertian reflectance for matte surfaces, or the Phong reflection model for glossy surfaces) is used to compute the probability that a photon arriving from the light would be reflected towards the camera, and this is multiplied by the brightness of the light to determine the pixel brightness. If there are multiple light sources, brightness contributions of the lights are added together. For color images, calculations are repeated for multiple wavelengths of light (e.g. red, green, and blue).

''Classical ray tracing'' (also called ''Whitted-style'' or ''recursive'' ray tracing) extends this method so it can render mirrors and transparent objects. If a ray traced backwards from the camera originates at a point on a mirror, the reflection formula from geometric optics is used to calculate the direction the reflected ray came from, and another ray is cast backwards in that direction. If a ray originates at a transparent surface, rays are cast backwards for both reflected and refracted rays (using Snell's law to compute the refracted direction), and so ray tracing needs to support a branching "tree" of rays. In simple implementations, a recursive function is called to trace each ray.

Ray tracing usually performs anti-aliasing by taking the average of multiple samples for each pixel. It may also use multiple samples for effects like depth of field and motion blur. If evenly spaced ray directions or times are used for each of these features, many rays are required, and some aliasing will remain. ''Cook-style'', ''stochastic'', or ''Monte Carlo ray tracing'' avoids this problem by using random sampling instead of evenly spaced samples. This type of ray tracing is commonly called ''distributed ray tracing'', or ''distribution ray tracing'' because it samples rays from probability distributions. Distribution ray tracing can also render realistic "soft" shadows from large lights by using a random sample of points on the light when testing for shadowing, and it can simulate chromatic aberration by sampling multiple wavelengths from the spectrum of light.
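The reflection formula and Snell's law used by classical ray tracing can be written as two small vector functions. The sketch below assumes unit-length direction vectors, a unit normal `n` facing the incoming ray, and a parameter `eta` equal to the ratio of refractive indices (incident medium over transmitting medium); the function names are chosen for this example only.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reflect(d, n):
    """Mirror direction d about the unit normal n: r = d - 2 (d . n) n."""
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))

def refract(d, n, eta):
    """Refract unit direction d at a surface with unit normal n, using Snell's law.

    eta is n1 / n2 (incident index over transmitting index).
    Returns None when total internal reflection occurs.
    """
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)  # sin^2 of the refracted angle
    if sin2_t > 1.0:
        return None
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * di + (eta * cos_i - cos_t) * ni for di, ni in zip(d, n))

print(reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0)))  # (1.0, 1.0, 0.0)
```

A recursive ray tracer would call `reflect` at mirror surfaces and both functions at transparent surfaces, casting one new ray for each direction returned, which is what produces the branching tree of rays.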

Real surface materials reflect small amounts of light in almost every direction because they have small (or microscopic) bumps and grooves. A distribution ray tracer can simulate this by sampling possible ray directions, which allows rendering blurry reflections from glossy and metallic surfaces. However, if this procedure is repeated recursively to simulate realistic indirect lighting, and if more than one sample is taken at each surface point, the tree of rays quickly becomes huge. Another kind of ray tracing, called ''path tracing'', handles indirect light more efficiently, avoiding branching, and ensures that the distribution of all possible paths from a light source to the camera is sampled in an unbiased way.
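A rough count shows how quickly the tree of rays grows. The model below is a deliberate simplification and not taken from any particular renderer: it assumes every surface hit spawns the same number of secondary rays, ignores shadow rays, and assumes no ray terminates early. The function names are hypothetical.

```python
def rays_branching(samples_per_hit, depth):
    """Secondary rays for one camera ray when every hit spawns samples_per_hit new rays."""
    return sum(samples_per_hit ** level for level in range(1, depth + 1))

def rays_path(paths, depth):
    """Secondary rays when each of `paths` samples follows a single unbranched path."""
    return paths * depth

print(rays_branching(16, 5))  # 1118480
print(rays_path(16, 5))       # 80
```

Under these assumptions, 16 samples per hit and 5 bounces cost over a million rays per camera ray when branching, against 80 rays for 16 unbranched paths of the same depth.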

Ray tracing was often used for rendering reflections in animated films, until path tracing became standard for film rendering. Films such as Shrek 2 and Monsters University also used distribution ray tracing or path tracing to precompute indirect illumination for a scene or frame prior to rendering it using rasterization.

Advances in GPU technology have made real-time ray tracing possible in games, although it is currently almost always used in combination with rasterization. This enables visual effects that are difficult with only rasterization, including reflection from curved surfaces and interreflective objects, and shadows that are accurate over a wide range of distances and surface orientations. Ray tracing support is included in recent versions of the graphics APIs used by games, such as DirectX, Metal, and Vulkan.

Ray tracing has been used to render simulated black holes, and the appearance of objects moving at close to the speed of light, by taking spacetime curvature and relativistic effects into account during light ray simulation.

Radiosity

Radiosity (named after the radiometric quantity of the same name) is a method for rendering objects illuminated by light bouncing off rough or matte surfaces. This type of illumination is called ''indirect light'', ''environment lighting'', ''diffuse lighting'', or ''diffuse interreflection'', and the problem of rendering it realistically is called ''global illumination''. Rasterization and basic forms of ray tracing (other than distribution ray tracing and path tracing) can only roughly approximate indirect light, e.g. by adding a uniform "ambient" lighting amount chosen by the artist. Radiosity techniques are also suited to rendering scenes with ''area lights'' such as rectangular fluorescent lighting panels, which are difficult for rasterization and traditional ray tracing. Radiosity is considered a physically based method, meaning that it aims to simulate the flow of light in an environment using equations and experimental data from physics; however, it often assumes that all surfaces are opaque and perfectly Lambertian, which reduces realism and limits its applicability.

In the original radiosity method (first proposed in 1984), now called ''classical radiosity'', surfaces and lights in the scene are split into pieces called ''patches'', a process called ''meshing'' (this step makes it a finite element method). The rendering code must then determine what fraction of the light being emitted or diffusely reflected (scattered) by each patch is received by each other patch. These fractions are called ''form factors'' or ''view factors'' (first used in engineering to model radiative heat transfer). The form factors are multiplied by the albedo of the receiving surface and put in a matrix. The lighting in the scene can then be expressed as a matrix equation (or equivalently a system of linear equations) that can be solved by methods from linear algebra.
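In a commonly used textbook notation (the symbols here are assumptions, not taken from the text above), let $B_i$ be the radiosity of patch $i$, $E_i$ the light it emits, $\rho_i$ its albedo, and $F_{ij}$ the form factor between patches $i$ and $j$. The system of linear equations and its matrix form can then be written as:

```latex
B_i = E_i + \rho_i \sum_{j=1}^{n} F_{ij} B_j , \qquad i = 1, \dots, n

\left( I - \operatorname{diag}(\rho)\, F \right) B = E
```

Each entry of the matrix $\operatorname{diag}(\rho)\,F$ is a form factor multiplied by the albedo of the receiving patch, so for $n$ patches the matrix has $n^2$ entries.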

Solving the radiosity equation gives the total amount of light emitted and reflected by each patch, which is divided by area to get a value called ''radiosity'' that can be used when rasterizing or ray tracing to determine the color of pixels corresponding to visible parts of the patch. For real-time rendering, this value (or more commonly the irradiance, which does not depend on local surface albedo) can be pre-computed and stored in a texture (called an ''irradiance map'') or stored as vertex data for 3D models. This feature was used in architectural visualization software to allow real-time walk-throughs of a building interior after computing the lighting.

The large size of the matrices used in classical radiosity (the square of the number of patches) causes problems for realistic scenes. Practical implementations may use Jacobi or Gauss-Seidel iterations, which is equivalent (at least in the Jacobi case) to simulating the propagation of light one bounce at a time until the amount of light remaining (not yet absorbed by surfaces) is insignificant. The number of iterations (bounces) required depends on the scene, not the number of patches, so the total work is proportional to the square of the number of patches (in contrast, solving the matrix equation using Gaussian elimination requires work proportional to the cube of the number of patches). Form factors may be recomputed when they are needed, to avoid storing a complete matrix in memory.
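A Jacobi-style solver can be sketched as follows. The function name, its parameters, and the idealized two-patch scene (two patches that exchange all of their light with each other) are assumptions made for illustration; a real implementation would compute form factors from the scene geometry.

```python
def solve_radiosity(emission, albedo, form_factors, tolerance=1e-9, max_bounces=1000):
    """Jacobi iteration for B_i = E_i + albedo_i * sum_j F_ij * B_j.

    Returns the radiosity of each patch and the number of iterations (bounces) used.
    """
    n = len(emission)
    radiosity = list(emission)  # start from emitted light only
    for bounce in range(1, max_bounces + 1):
        # Each pass costs n * n multiplications and adds one more bounce of light.
        updated = [
            emission[i] + albedo[i] * sum(form_factors[i][j] * radiosity[j] for j in range(n))
            for i in range(n)
        ]
        change = max(abs(u - r) for u, r in zip(updated, radiosity))
        radiosity = updated
        if change < tolerance:
            return radiosity, bounce
    return radiosity, max_bounces

# Hypothetical scene: patch 0 emits light, patch 1 does not,
# and each patch receives everything the other one sends out.
values, bounces = solve_radiosity(
    emission=[1.0, 0.0],
    albedo=[0.5, 0.5],
    form_factors=[[0.0, 1.0], [1.0, 0.0]],
)
print(values, bounces)  # values converge towards [4/3, 2/3]
```

For this scene the exact solution is 4/3 and 2/3, and the number of passes needed is set by the albedo values rather than by the number of patches.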

The quality of rendering is often determined by the size of the patches, e.g. very fine meshes are needed to depict the edges of shadows accurately. An important improvement is ''hierarchical radiosity'', which uses a coarser mesh (larger patches) for simulating the transfer of light between surfaces that are far away from one another, and adaptively sub-divides the patches as needed. This allows radiosity to be used for much larger and more complex scenes.

Alternative and extended versions of the radiosity method support non-Lambertian surfaces, such as glossy surfaces and mirrors, and sometimes use volumes or "clusters" of objects as well as surface patches. Stochastic or Monte Carlo radiosity uses random sampling in various ways, e.g. taking samples of incident light instead of integrating over all patches, which can improve performance but adds noise (this noise can be reduced by using deterministic iterations as a final step, unlike path tracing noise). Simplified and partially precomputed versions of radiosity are widely used for real-time rendering, combined with techniques such as ''octree radiosity'' that store approximations of the light field.

Path tracing

As part of the approach known as ''physically based rendering'', path tracing has become the dominant technique for rendering realistic scenes, including effects for movies. For example, the popular open source 3D software Blender uses path tracing in its Cycles renderer. Images produced using path tracing for global illumination are generally noisier than when using radiosity (the main competing algorithm for realistic lighting), but radiosity can be difficult to apply to complex scenes and is prone to artifacts that arise from using a tessellated representation of irradiance.

Like ''distributed ray tracing'', path tracing is a kind of ''stochastic'' or ''randomized'' ray tracing that uses Monte Carlo or Quasi-Monte Carlo integration. It was proposed and named in 1986 by Jim Kajiya in the same paper as the rendering equation. Kajiya observed that much of the complexity of distributed ray tracing could be avoided by only tracing a single path from the camera at a time (in Kajiya's implementation, this "no branching" rule was broken by tracing additional rays from each surface intersection point to randomly chosen points on each light source). Kajiya suggested reducing the noise present in the output images by using ''stratified sampling'' and ''importance sampling'' for making random decisions such as choosing which ray to follow at each step of a path. Even with these techniques, path tracing would not have been practical for film rendering, using computers available at the time, because the computational cost of generating enough samples to reduce variance to an acceptable level was too high. Monster House, the first feature film rendered entirely using path tracing, was not released until 20 years later.
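The effect of importance sampling on noise can be seen in a toy problem: estimating the light arriving at a surface point from a hemisphere of constant brightness, for which the exact answer is the brightness times pi. The sketch below is an illustration under that assumption (constant incoming radiance, no occlusion), with hypothetical function names; it is not Kajiya's implementation.

```python
import math
import random

def estimate_uniform(radiance, samples, rng):
    """Sample directions uniformly over the hemisphere (pdf = 1 / (2*pi)).

    For uniform directions, cos(theta) is uniformly distributed in [0, 1).
    """
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(samples):
        cos_theta = rng.random()
        total += radiance * cos_theta / pdf
    return total / samples

def estimate_cosine_weighted(radiance, samples, rng):
    """Importance sampling: choose directions with pdf = cos(theta) / pi."""
    total = 0.0
    for _ in range(samples):
        cos_theta = math.sqrt(1.0 - rng.random())  # always in (0, 1]
        pdf = cos_theta / math.pi
        total += radiance * cos_theta / pdf  # the cosine cancels: every sample is radiance * pi
    return total / samples

rng = random.Random(1)
print(estimate_uniform(1.0, 20000, rng))         # noisy estimate of pi
print(estimate_cosine_weighted(1.0, 1000, rng))  # pi, up to rounding error
```

Because the sampling density matches the cosine factor in the integrand, the second estimator has no variance in this idealized case. In a real scene the incoming light varies with direction, so importance sampling reduces the noise rather than removing it.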