Flatness problem

The flatness problem (also known as the oldness problem) is a cosmological fine-tuning problem within the Big Bang model of the universe. Such problems arise from the observation that some of the initial conditions of the universe appear to be fine-tuned to very 'special' values, and that small deviations from these values would have extreme effects on the appearance of the universe at the current time.

In the case of the flatness problem, the parameter which appears fine-tuned is the density of matter and energy in the universe. This value affects the curvature of spacetime, with a very specific critical value being required for a flat universe. The current density of the universe is observed to be very close to this critical value. Since any departure of the total density from the critical value would increase rapidly over cosmic time, the early universe must have had a density even closer to the critical density, departing from it by one part in $10^{62}$ or less. This leads cosmologists to question how the initial density came to be so closely fine-tuned to this 'special' value.

The problem was first mentioned by Robert Dicke in 1969. The most commonly accepted solution among cosmologists is cosmic inflation, the idea that the universe went through a brief period of extremely rapid expansion in the first fraction of a second after the Big Bang; along with the monopole problem and the horizon problem, the flatness problem is one of the three primary motivations for inflationary theory.

Energy density and the Friedmann equation

According to Einstein's field equations of general relativity, the structure of spacetime is affected by the presence of matter and energy. On small scales space appears flat, as does the surface of the Earth if one looks at a small area. On large scales, however, space is bent by the gravitational effect of matter. Since relativity indicates that matter and energy are equivalent, this effect is also produced by the presence of energy (such as light and other electromagnetic radiation) in addition to matter. The amount of bending (or curvature) of the universe depends on the density of matter/energy present.

This relationship can be expressed by the first Friedmann equation. In a universe without a cosmological constant, this is:

$$H^2 = \frac{8\pi G}{3}\rho - \frac{kc^2}{a^2}$$

Here $H$ is the Hubble parameter, a measure of the rate at which the universe is expanding. $\rho$ is the total density of mass and energy in the universe, $a$ is the scale factor (essentially the 'size' of the universe), and $k$ is the curvature parameter, that is, a measure of how curved spacetime is. A positive, zero or negative value of $k$ corresponds to a closed, flat or open universe respectively. $G$ is Newton's gravitational constant and $c$ is the speed of light.

Cosmologists often simplify this equation by defining a critical density, . For a given value of , this is defined as the density required for a flat universe, i.e. . Thus the above equation implies

:.

Since the constant is known and the expansion rate can be measured by observing the speed at which distant galaxies are receding from us,

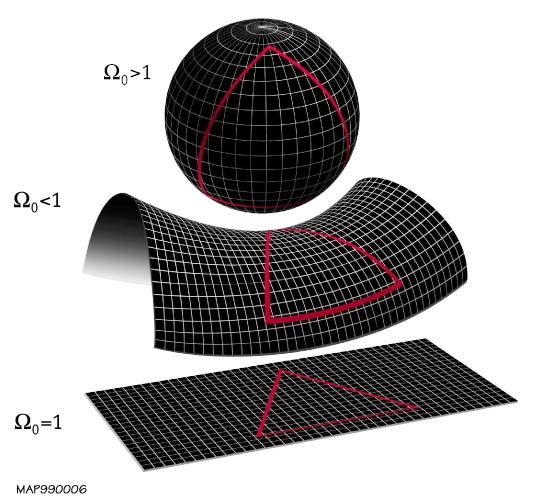

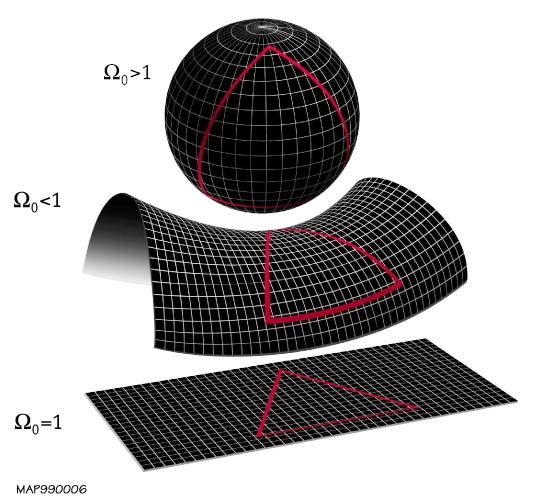

can be determined. Its value is currently around . The ratio of the actual density to this critical value is called Ω, and its difference from 1 determines the geometry of the universe: corresponds to a greater than critical density, , and hence a closed universe. gives a low density open universe, and Ω equal to exactly 1 gives a flat universe.
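
The critical-density formula can be evaluated directly. The sketch below assumes an illustrative Hubble constant of 70 km/s/Mpc (a value not given in this article) and standard SI values for $G$ and the megaparsec; the function names are hypothetical. It also encodes the $\Omega$-to-geometry rule just described.

```python
import math

# Assumed constants (SI units).
G = 6.674e-11            # Newton's gravitational constant, m^3 kg^-1 s^-2
MPC_IN_M = 3.0857e22     # one megaparsec in metres

def critical_density(hubble_km_s_mpc: float) -> float:
    """Return rho_c = 3 H^2 / (8 pi G) in kg/m^3 for H given in km/s/Mpc."""
    h_si = hubble_km_s_mpc * 1e3 / MPC_IN_M   # convert H to s^-1
    return 3.0 * h_si**2 / (8.0 * math.pi * G)

def geometry(omega: float, tol: float = 1e-12) -> str:
    """Classify the spatial geometry from Omega = rho / rho_c."""
    if omega > 1.0 + tol:
        return "closed"
    if omega < 1.0 - tol:
        return "open"
    return "flat"

rho_c = critical_density(70.0)           # illustrative H0 = 70 km/s/Mpc
print(f"rho_c = {rho_c:.2e} kg/m^3")     # of order 1e-26 kg/m^3
for omega in (0.9, 1.0, 1.1):
    print(omega, geometry(omega))
```

With $H_0 = 70$ km/s/Mpc the result is a little under $10^{-26}\ \mathrm{kg\,m^{-3}}$, roughly the mass of a few hydrogen atoms per cubic metre.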

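The Friedmann equation,

$$H^2 = \frac{8\pi G}{3}\rho - \frac{kc^2}{a^2},$$

can be rearranged into

$$\rho - \frac{3H^2}{8\pi G} = \frac{3kc^2}{8\pi G a^2},$$

which after factoring out $\rho$, multiplying through by $a^2$, and using $\Omega = \rho/\rho_c$, leads to

$$\left(\Omega^{-1} - 1\right)\rho a^2 = \frac{-3kc^2}{8\pi G}.$$

The right-hand side of the last expression contains constants only, and therefore the left-hand side must remain constant throughout the evolution of the universe.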
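
As a numerical sanity check of this rearrangement (not part of the original derivation), the sketch below picks arbitrary values of $\rho$, $a$ and $k$ in units where $G = c = 1$ (an arbitrary choice for illustration), obtains $H^2$ from the first Friedmann equation without a cosmological constant, and compares both sides of the final identity. The function name is hypothetical.

```python
import math

def friedmann_invariant_check(rho: float, a: float, k: int,
                              G: float = 1.0, c: float = 1.0) -> tuple[float, float]:
    """Return both sides of (1/Omega - 1) * rho * a^2 = -3 k c^2 / (8 pi G).

    H^2 comes from the first Friedmann equation (no cosmological constant);
    Omega is rho / rho_c with rho_c = 3 H^2 / (8 pi G).
    """
    h_squared = 8.0 * math.pi * G * rho / 3.0 - k * c**2 / a**2
    if h_squared <= 0.0:
        raise ValueError("these inputs give H^2 <= 0 (no expanding solution)")
    rho_c = 3.0 * h_squared / (8.0 * math.pi * G)
    omega = rho / rho_c
    lhs = (1.0 / omega - 1.0) * rho * a**2
    rhs = -3.0 * k * c**2 / (8.0 * math.pi * G)
    return lhs, rhs

# Open, closed and flat examples with arbitrary density and scale factor.
for rho, a, k in [(0.05, 2.0, -1), (0.5, 3.0, +1), (0.2, 1.5, 0)]:
    lhs, rhs = friedmann_invariant_check(rho, a, k)
    print(f"k={k:+d}: lhs={lhs:.6f}, rhs={rhs:.6f}")
```

Changing $\rho$ or $a$ changes $\Omega$, but the product on the left always matches the $k$-dependent constant on the right.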

As the universe expands the scale factor $a$ increases, but the density $\rho$ decreases as matter (or energy) becomes spread out. For the standard model of the universe, which contains mainly matter and radiation for most of its history, $\rho$ decreases more quickly than $a^2$ increases (matter density falls as $a^{-3}$ and radiation density as $a^{-4}$), and so the factor $\rho a^2$ will decrease. Since the time of the Planck era, shortly after the Big Bang, this term has decreased by a factor of around $10^{60}$, and so $\Omega^{-1} - 1$ must have increased by a similar amount to retain the constant value of their product.
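
The size of this factor can be estimated to order of magnitude. The sketch below is a rough model under stated assumptions: $a \propto 1/T$, with a Planck temperature of about $1.4\times10^{32}$ K, a present CMB temperature of 2.725 K, and matter-radiation equality near redshift 3400 (these inputs are not given in this article). It treats the universe as radiation-dominated ($\rho a^2 \propto a^{-2}$) before equality and matter-dominated ($\rho a^2 \propto a^{-1}$) after it, ignoring dark energy and changes in particle content.

```python
import math

# Illustrative assumptions, not values quoted in the text above.
T_PLANCK_K = 1.417e32   # Planck temperature, kelvin
T_CMB_K = 2.725         # present CMB temperature, kelvin
Z_EQ = 3400.0           # approximate redshift of matter-radiation equality

def log10_rho_a2_drop() -> float:
    """log10 of the factor by which rho * a^2 has fallen since the Planck era.

    Assumes a ~ 1/T, radiation domination (rho a^2 ~ a^-2) until equality,
    and matter domination (rho a^2 ~ a^-1) afterwards.
    """
    log_growth_total = math.log10(T_PLANCK_K / T_CMB_K)  # log10(a_now / a_Planck)
    log_growth_matter = math.log10(1.0 + Z_EQ)           # log10(a_now / a_eq)
    log_growth_radiation = log_growth_total - log_growth_matter
    return 2.0 * log_growth_radiation + 1.0 * log_growth_matter

drop = log10_rho_a2_drop()
print(f"rho*a^2 has fallen by about 10^{drop:.1f}")
```

Because $(\Omega^{-1} - 1)\rho a^2$ is constant, $|\Omega^{-1} - 1|$ grows by the same factor over the same interval, which is why a small present-day deviation implies a far smaller one at the Planck era.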

Current value of Ω

Measurement

The value of $\Omega$ at the present time is denoted $\Omega_0$. This value can be deduced by measuring the curvature of spacetime (since $\Omega = 1$, or $\rho = \rho_c$, is defined as the density for which the curvature $k = 0$). The curvature can be inferred from a number of observations. One such observation is that of anisotropies (that is, variations with direction, described below) in the cosmic microwave background (CMB) radiation. The CMB is electromagnetic radiation which fills the universe, left over from an early stage in its history when it was filled with photons and a hot, dense plasma. This plasma cooled as the universe expanded, and when it cooled enough to form stable atoms it no longer absorbed the photons. The photons present at that stage have been propagating ever since, growing fainter and less energetic as they spread through the ever-expanding universe.

The temperature of this radiation is almost the same at all points on the sky, but there is a slight variation (around one part in 100,000) between the temperature received from different directions. The angular scale of these fluctuations, that is, the typical angle between a hot patch and a cold patch on the sky, depends on the curvature of the universe, which in turn depends on its density as described above. (Since there are fluctuations on many scales rather than a single angular separation between hot and cold spots, the relevant measure is the angular scale of the first peak in the anisotropies' power spectrum; see Cosmic microwave background, primary anisotropy.) Thus, measurements of this angular scale allow an estimation of $\Omega_0$.

Another probe of $\Omega_0$ is the observation of Type Ia supernovae at different distances from Earth. These supernovae, the explosions of degenerate white dwarf stars, are a type of standard candle; this means that the processes governing their intrinsic brightness are well understood, so that a measure of apparent brightness when seen from Earth can be used to derive accurate distance measures for them (the apparent brightness falls off as the inverse square of the distance; see luminosity distance). Comparing this distance to the redshift of the supernovae gives a measure of the rate at which the universe has been expanding at different points in history. Since the expansion rate evolves differently over time in cosmologies with different total densities, $\Omega_0$ can be inferred from the supernova data.
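
The first step of this method, turning an observed brightness into a distance, uses the distance modulus $m - M = 5\log_{10}(D_L / 10\ \mathrm{pc})$, where $m$ is apparent and $M$ absolute magnitude. A minimal sketch follows; the peak absolute magnitude of $-19.3$ and the apparent magnitude of 24 are illustrative assumptions, and the function names are hypothetical. Pairing such distances with measured redshifts, the step that actually constrains $\Omega_0$, is not shown.

```python
def luminosity_distance_pc(apparent_mag: float, absolute_mag: float) -> float:
    """D_L in parsecs from the distance modulus m - M = 5 log10(D_L / 10 pc)."""
    return 10.0 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)

def flux_ratio(d1: float, d2: float) -> float:
    """Apparent-brightness ratio F(d1) / F(d2) for the same source (inverse square)."""
    return (d2 / d1) ** 2

M_IA = -19.3   # assumed typical peak absolute magnitude of a Type Ia supernova
d = luminosity_distance_pc(24.0, M_IA)   # illustrative observed magnitude
print(f"D_L ~ {d / 1e9:.2f} Gpc")
print(flux_ratio(10.0, 20.0))            # same source, half the distance
```

A source twice as close appears four times brighter, which is the inverse-square relation the distance estimate relies on.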

Data from the Wilkinson Microwave Anisotropy Probe (WMAP, measuring CMB anisotropies) combined with that from the Sloan Digital Sky Survey and observations of Type Ia supernovae constrain $\Omega_0$ to be 1 within 1%. In other words, the term $|\Omega - 1|$ is currently less than 0.01, and therefore must have been less than $10^{-62}$ at the Planck era. The cosmological parameters measured by the Planck spacecraft mission reaffirmed previous results by WMAP.
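
The backward extrapolation is simple arithmetic once the growth factor is fixed. The sketch below assumes the 1% bound on $\Omega_0$ and takes, as an order-of-magnitude assumption, that $|\Omega^{-1} - 1|$ has grown by about $10^{60}$ since the Planck era. It works with $|\Omega^{-1} - 1|$, the quantity that appears in the conserved product, which is almost equal to $|\Omega - 1|$ when $\Omega$ is near 1. Names are hypothetical.

```python
# Assumption: order-of-magnitude growth of |1/Omega - 1| since the Planck era.
GROWTH_SINCE_PLANCK = 1e60

def max_inverse_deviation(omega_lo: float, omega_hi: float) -> float:
    """Largest |1/Omega - 1| for Omega in [omega_lo, omega_hi], with omega_lo > 0.

    1/Omega - 1 is monotonic in Omega, so the maximum sits at an endpoint.
    """
    return max(abs(1.0 / omega_lo - 1.0), abs(1.0 / omega_hi - 1.0))

today = max_inverse_deviation(0.99, 1.01)   # Omega_0 = 1 within 1%
planck = today / GROWTH_SINCE_PLANCK
print(f"today:      |1/Omega - 1| < {today:.4f}")
print(f"Planck era: |1/Omega - 1| < {planck:.1e}")
```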

Implication

This tiny value is the crux of the flatness problem. If the initial density of the universe could take any value, it would seem extremely surprising to find it so 'finely tuned' to the critical value $\rho_c$. Indeed, a very small departure of $\Omega$ from 1 in the early universe would have been magnified during billions of years of expansion to create a current density very far from critical. In the case of an overdensity this would lead to a universe so dense it would cease expanding and collapse into a Big Crunch (an opposite to the Big Bang in which all matter and energy falls back into an extremely dense state) in a few years or less; in the case of an underdensity it would expand so quickly and become so sparse that it would soon seem essentially empty, and gravity would not be strong enough by comparison to cause matter to collapse and form galaxies, resulting in a Big Freeze. In either case the universe would contain no complex structures such as galaxies, stars, planets or any form of life.

This problem with the Big Bang model was first pointed out by Robert Dicke in 1969, and it motivated a search for some reason the density should take such a specific value.

Solutions to the problem

Some cosmologists agreed with Dicke that the flatness problem was a serious one, in need of a fundamental reason for the closeness of the density to criticality. But there was also a school of thought which denied that there was a problem to solve, arguing instead that since the universe must have some density it may as well have one close to $\rho_c$ as far from it, and that speculating on a reason for any particular value was "beyond the domain of science". That, however, is a minority viewpoint, even among those sceptical of the existence of the flatness problem. Several cosmologists have argued that, for a variety of reasons, the flatness problem is based on a misunderstanding.

Anthropic principle

One solution to the problem is to invoke the anthropic principle, which states that humans should take into account the conditions necessary for them to exist when speculating about causes of the universe's properties. If two types of universe seem equally likely but only one is suitable for the evolution of intelligent life, the anthropic principle suggests that finding ourselves in that universe is no surprise: if the other universe had existed instead, there would be no observers to notice the fact.

The principle can be applied to solve the flatness problem in two somewhat different ways. The first (an application of the 'strong anthropic principle') was suggested by C. B. Collins and Stephen Hawking, who in 1973 considered the existence of an infinite number of universes such that every possible combination of initial properties was held by some universe. In such a situation, they argued, only those universes with exactly the correct density for forming galaxies and stars would give rise to intelligent observers such as humans: therefore, the fact that we observe $\Omega$ to be so close to 1 would be "simply a reflection of our own existence".

An alternative approach, which makes use of the 'weak anthropic principle', is to suppose that the universe is infinite in size, but with the density varying in different places (i.e. an inhomogeneous universe). Thus some regions will be over-dense ($\Omega > 1$) and some under-dense ($\Omega < 1$). These regions may be extremely far apart, perhaps so far that light has not had time to travel from one to another during the age of the universe (that is, they lie outside one another's cosmological horizons). Therefore, each region would behave essentially as a separate universe: if we happened to live in a large patch of almost-critical density we would have no way of knowing of the existence of far-off under- or over-dense patches, since no light or other signal has reached us from them. An appeal to the anthropic principle can then be made, arguing that intelligent life would only arise in those patches with $\Omega$ very close to 1, and that therefore our living in such a patch is unsurprising.

This latter argument makes use of a version of the anthropic principle which is 'weaker' in the sense that it requires no speculation on multiple universes, or on the probabilities of various different universes existing instead of the current one. It requires only a single universe which is infinite, or merely large enough that many disconnected patches can form, and that the density varies in different regions (which is certainly the case on smaller scales, giving rise to galactic clusters and voids).

However, the anthropic principle has been criticised by many scientists. For example, in 1979 Bernard Carr and Martin Rees argued that the principle "is entirely post hoc: it has not yet been used to predict any feature of the Universe." Others have objected to its philosophical basis, with Ernan McMullin writing in 1994 that "the weak Anthropic principle is trivial ... and the strong Anthropic principle is indefensible." Since many physicists and philosophers of science do not consider the principle to be compatible with the scientific method, another explanation for the flatness problem was needed.

Inflation

The standard solution to the flatness problem invokes cosmic inflation, a process whereby the universe expands exponentially quickly (i.e. $a$ grows as $e^{\lambda t}$ with time $t$, for some constant $\lambda$) during a short period in its early history. The theory of inflation was first proposed in 1979, and published in 1981, by Alan Guth. His two main motivations for doing so were the flatness problem and the horizon problem, another fine-tuning problem of physical cosmology. However, "In December, 1980 when Guth was developing his inflation model, he was not trying to solve either the flatness or horizon problems. Indeed, at that time, he knew nothing of the horizon problem and had never quantitatively calculated the flatness problem." He was a particle physicist trying to solve the magnetic monopole problem.

The proposed cause of inflation is a field which permeates space and drives the expansion. The field contains a certain energy density, but unlike the density of the matter or radiation present in the late universe, which decreases over time, the density of the inflationary field remains roughly constant as space expands. Therefore, the term $\rho a^2$ increases extremely rapidly as the scale factor $a$ grows exponentially. Recalling the Friedmann equation

$$\left(\Omega^{-1} - 1\right)\rho a^2 = \frac{-3kc^2}{8\pi G},$$

and the fact that the right-hand side of this expression is constant, the term $|\Omega^{-1} - 1|$ must therefore decrease with time. Thus if $|\Omega^{-1} - 1|$ initially takes any arbitrary value, a period of inflation can force it down towards 0 and leave it extremely small, around $10^{-62}$ as required above, for example. Subsequent evolution of the universe will cause the value to grow, bringing it to the currently observed value of around 0.01. Thus the sensitive dependence on the initial value of $\Omega$ has been removed: a large and therefore 'unsurprising' starting value need not become amplified and lead to a very curved universe with no opportunity to form galaxies and other structures.
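
The suppression produced by inflation can be made quantitative in an idealized case. Assuming a constant energy density during inflation and $a \propto e^{Ht}$ (with $H$ playing the role of the constant in the exponent), $|\Omega^{-1} - 1| \propto 1/(\rho a^2) \propto e^{-2N}$, where $N = Ht$ is the number of e-folds. The sketch below inverts this; the target suppression of $10^{62}$ is chosen only to match the Planck-era bound discussed in this article, not as a prediction of any particular inflation model, and the function names are hypothetical.

```python
import math

def efolds_needed(suppression: float) -> float:
    """e-folds N for which |1/Omega - 1| shrinks by the factor `suppression`.

    Assumes constant energy density during inflation and a ~ exp(H t), so
    |1/Omega - 1| ~ 1 / (rho a^2) ~ exp(-2 N) with N = H t.
    """
    return math.log(suppression) / 2.0

def deviation_after(initial: float, n_efolds: float) -> float:
    """|1/Omega - 1| after n_efolds of inflation, starting from `initial`."""
    return initial * math.exp(-2.0 * n_efolds)

n = efolds_needed(1e62)      # from order unity down to about 1e-62
print(f"N ~ {n:.1f} e-folds")
print(f"deviation after N e-folds: {deviation_after(1.0, n):.1e}")
```

Because the suppression is exponential in $N$, even a starting value of order unity is driven close to zero after a modest number of e-folds, which is the sense in which inflation removes the sensitivity to the initial value of $\Omega$.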
This success in solving the flatness problem is considered one of the major motivations for inflationary theory. However, some physicists deny that inflationary theory resolves the flatness problem, arguing that it merely moves the fine-tuning from the probability distribution to the potential of a field, or even deny that it is a scientific theory.

Post inflation

Although inflationary theory is regarded as having had much success, and the evidence for it is compelling, it is not universally accepted: cosmologists recognize that there are still gaps in the theory and are open to the possibility that future observations will disprove it. In particular, in the absence of any firm evidence for what the field driving inflation should be, many different versions of the theory have been proposed. Many of these contain parameters or initial conditions which themselves require fine-tuning in much the way that the early density does without inflation. For these reasons work is still being done on alternative solutions to the flatness problem. These have included non-standard interpretations of the effect of dark energy and gravity, particle production in an oscillating universe, and use of a Bayesian statistical approach to argue that the problem is non-existent. The latter argument, suggested for example by Evrard and Coles, maintains that the idea that a value of $\Omega$ close to 1 is 'unlikely' is based on assumptions about the likely distribution of the parameter which are not necessarily justified.

Despite this ongoing work, inflation remains by far the dominant explanation for the flatness problem. The question arises, however, whether it is still the dominant explanation because it is the best explanation, or because the community is unaware of progress on this problem. In particular, in addition to the idea that $\Omega$ is not a suitable parameter in this context, other arguments against the flatness problem have been presented: if the universe collapses in the future, then the flatness problem "exists", but only for a relatively short time, so a typical observer would not expect to measure $\Omega$ appreciably different from 1; in the case of a universe which expands forever with a positive cosmological constant, fine-tuning is needed not to achieve a (nearly) flat universe, but rather to avoid one.

Einstein–Cartan theory

The flatness problem is naturally solved by the Einstein–Cartan–Sciama–Kibble theory of gravity, without the exotic form of matter required in inflationary theory. This theory extends general relativity by removing the constraint that the affine connection be symmetric and regarding its antisymmetric part, the torsion tensor, as a dynamical variable. It has no free parameters. Including torsion gives the correct conservation law for the total (orbital plus intrinsic) angular momentum of matter in the presence of gravity. The minimal coupling between torsion and Dirac spinors obeying the nonlinear Dirac equation generates a spin-spin interaction which is significant in fermionic matter at extremely high densities. Such an interaction averts the unphysical Big Bang singularity, replacing it with a bounce at a finite minimum scale factor, before which the universe was contracting. The rapid expansion immediately after the big bounce explains why the present universe at the largest scales appears spatially flat, homogeneous and isotropic. As the density of the universe decreases, the effects of torsion weaken and the universe smoothly enters the radiation-dominated era.

See also

* Magnetic monopole
* Horizon problem
