
Well-conditioned

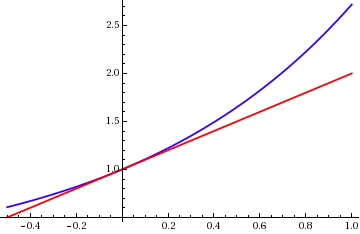

In numerical analysis, the condition number of a function measures how much the output value of the function can change for a small change in the input argument. This is used to measure how sensitive a function is to changes or errors in the input, and how much error in the output results from an error in the input. Very frequently, one is solving the inverse problem: given f(x) = y, one is solving for x, and thus the condition number of the (local) inverse must be used. In linear regression the condition number of the moment matrix can be used as a diagnostic for multicollinearity. The condition number is an application of the derivative, and is formally defined as the value of the asymptotic worst-case relative change in output for a relative change in input. The "function" is the solution of a problem and the "arguments" are the data in the problem. The condition number is frequently applied to questions in linear algebra, in which case the derivative is straightforward but ...
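For a differentiable scalar function, the relative condition number at a point x is commonly written as |x f'(x) / f(x)|. The sketch below assumes that formula; the helper name `relative_condition_number` and the two sample functions are illustrative choices, not something the text above prescribes.

```python
import math


def relative_condition_number(f, dfdx, x):
    """Return |x * f'(x) / f(x)| for a scalar function f with derivative dfdx.

    Assumption: f is differentiable at x and f(x) != 0.
    """
    return abs(x * dfdx(x) / f(x))


# The square root is well-conditioned for x > 0: the value is always 1/2,
# so a 1% relative input error becomes roughly a 0.5% relative output error.
kappa_sqrt = relative_condition_number(
    math.sqrt, lambda x: 0.5 / math.sqrt(x), 4.0
)

# x -> x - 1 is ill-conditioned near x = 1, where the output is close to zero
# and small relative input errors turn into large relative output errors.
kappa_shift = relative_condition_number(
    lambda x: x - 1.0, lambda x: 1.0, 1.0001
)

print(kappa_sqrt, kappa_shift)
```

A value near 1 or below indicates that relative errors are not amplified, while a large value signals an ill-conditioned evaluation point.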

Numerical Analysis

Numerical analysis is the study of algorithms that use numerical approximation (as opposed to symbolic manipulations) for the problems of mathematical analysis (as distinguished from discrete mathematics). It is the study of numerical methods that attempt to find approximate solutions of problems rather than exact ones. Numerical analysis finds application in all fields of engineering and the physical sciences, and in the 21st century also the life and social sciences, medicine, business and even the arts. Current growth in computing power has enabled the use of more complex numerical analysis, providing detailed and realistic mathematical models in science and engineering. Examples of numerical analysis include: ordinary differential equations as found in celestial mechanics (predicting the motions of planets, stars and galaxies), numerical linear algebra in data analysis, and stochastic differential equations and Markov chains for simulating living ce ...

Floating-point

In computing, floating-point arithmetic (FP) is arithmetic that represents real numbers approximately, using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. For example, 12.345 can be represented as a base-ten floating-point number: 12.345 = \underbrace{12345}_\text{significand} \times \underbrace{10}_\text{base}\!\!\!\!\!\!^{\overbrace{-3}^\text{exponent}} In practice, most floating-point systems use base two, though base ten (decimal floating point) is also common. The term floating point refers to the fact that the number's radix point can "float" anywhere to the left, right, or between the significant digits of the number. This position is indicated by the exponent, so floating point can be considered a form of scientific notation. A floating-point system can be used to represent, with a fixed number of digits, numbers of very different orders of magnitude, such as the number of meters between galaxies or between protons in an atom. For this reason, floating-poi ...
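The decomposition of 12.345 into a significand, a base and an exponent can be inspected directly. This is a small sketch using Python's standard `decimal` and `math` modules; the variable names are illustrative.

```python
from decimal import Decimal
import math

# Base ten: Decimal stores a sign, a tuple of digits, and an integer exponent.
sign, digits, exponent = Decimal("12.345").as_tuple()
significand = int("".join(str(d) for d in digits))

# Rebuild the value exactly as significand * 10**exponent.
rebuilt = Decimal(significand) * Decimal(10) ** exponent

# Base two: frexp returns (m, e) with x == m * 2**e and 0.5 <= |m| < 1.
m, e = math.frexp(12.345)

print(significand, exponent, rebuilt)
print(m, e)
```

The base-ten view reproduces the integer significand and exponent from the example above, while `math.frexp` exposes the analogous base-two split used by binary floating-point hardware.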

Round-off Error

A roundoff error, also called rounding error, is the difference between the result produced by a given algorithm using exact arithmetic and the result produced by the same algorithm using finite-precision, rounded arithmetic. Rounding errors are due to inexactness in the representation of real numbers and the arithmetic operations done with them. This is a form of quantization error. When using approximation equations or algorithms, especially when using finitely many digits to represent real numbers (which in theory have infinitely many digits), one of the goals of numerical analysis is to estimate computation errors. Computation errors, also called numerical errors, include both truncation errors and roundoff errors. When a sequence of calculations with an input involving any roundoff error is made, errors may accumulate, sometimes dominating the calculation. In ill-conditioned problems, significant error may accumulate. In short, there are two major facets of roundoff errors ...
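Accumulation of roundoff error is easy to observe with a value that has no exact binary representation. The sketch below adds 0.1 ten times in double precision and compares the result with `math.fsum`, which compensates for the lost low-order bits; the variable names are illustrative.

```python
import math

values = [0.1] * 10  # 0.1 cannot be represented exactly in base two

# Naive accumulation: every addition rounds to the nearest double,
# and the individual rounding errors add up.
naive_total = 0.0
for v in values:
    naive_total += v

# fsum tracks the intermediate rounding errors and rounds only once at the end.
compensated_total = math.fsum(values)

accumulated_error = abs(naive_total - 1.0)
print(naive_total, compensated_total, accumulated_error)
```

The naive loop ends slightly below 1.0, whereas the compensated sum returns exactly 1.0.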

Numerical Linear Algebra

Numerical linear algebra, sometimes called applied linear algebra, is the study of how matrix operations can be used to create computer algorithms which efficiently and accurately provide approximate answers to questions in continuous mathematics. It is a subfield of numerical analysis, and a type of linear algebra. Computers use floating-point arithmetic and cannot exactly represent irrational data, so when a computer algorithm is applied to a matrix of data, it can sometimes increase the difference between a number stored in the computer and the true number that it is an approximation of. Numerical linear algebra uses properties of vectors and matrices to develop computer algorithms that minimize the error introduced by the computer, and is also concerned with ensuring that the algorithm is as efficient as possible. Numerical linear algebra aims to solve problems of continuous mathematics using finite precision computers, so its applications to the natural and social sciences ar ...

Moment Matrix

In mathematics, a moment matrix is a special symmetric square matrix whose rows and columns are indexed by monomials. The entries of the matrix depend on the product of the indexing monomials only (cf. Hankel matrices). Moment matrices play an important role in polynomial fitting, polynomial optimization (since positive semidefinite moment matrices correspond to polynomials which are sums of squares) and econometrics.

Application in regression

A multiple linear regression model can be written as y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_k x_k + u where y is the explained variable, x_1, x_2, \dots, x_k are the explanatory variables, u is the error, and \beta_0, \beta_1, \dots, \beta_k are unknown coefficients to be estimated. Given observations \left\{ y_i, x_{i1}, x_{i2}, \dots, x_{ik} \right\}_{i=1}^{n}, we have a system of n linear equations that can be expressed in matrix notation. \begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{bmatrix} = \begin{bmatrix} 1 & x_{11} & x_{12} & \dots & x_{1k} \\ 1 & x_{21} & x_{22} & \dots & x_{2k} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 1 ...

Relative Error

The approximation error in a data value is the discrepancy between an exact value and some approximation to it. This error can be expressed as an absolute error (the numerical amount of the discrepancy) or as a relative error (the absolute error divided by the data value). An approximation error can occur because of computing machine precision or measurement error (e.g. the length of a piece of paper is 4.53 cm but the ruler only allows you to estimate it to the nearest 0.1 cm, so you measure it as 4.5 cm). In the mathematical field of numerical analysis, the numerical stability of an algorithm indicates how the error is propagated by the algorithm.

Formal definition

One commonly distinguishes between the relative error and the absolute error. Given some value v and its approximation v_\text{approx}, the absolute error is \epsilon = |v - v_\text{approx}|, where the vertical bars denote the absolute value. If v \ne 0, the relative error is \eta = \frac{\epsilon}{|v|} = \left| \frac{v - v_\text{approx}}{v} \right| ...
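The two error measures can be computed for the ruler example in the text (exact length 4.53 cm, measured 4.5 cm). This is a minimal sketch; the function names `absolute_error` and `relative_error` are illustrative.

```python
def absolute_error(v, v_approx):
    """Absolute error |v - v_approx|."""
    return abs(v - v_approx)


def relative_error(v, v_approx):
    """Relative error |v - v_approx| / |v|, defined only for v != 0."""
    if v == 0:
        raise ValueError("relative error is undefined for v == 0")
    return absolute_error(v, v_approx) / abs(v)


exact_cm = 4.53
measured_cm = 4.5

eps = absolute_error(exact_cm, measured_cm)  # about 0.03 cm
eta = relative_error(exact_cm, measured_cm)  # about 0.66 percent

print(eps, eta)
```

The absolute error carries the unit of the measurement, while the relative error is dimensionless and can be compared across quantities of different magnitude.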

Unitary Matrix

In linear algebra, a complex square matrix U is unitary if its conjugate transpose U^* is also its inverse, that is, if U^* U = UU^* = UU^{-1} = I, where I is the identity matrix. In physics, especially in quantum mechanics, the conjugate transpose is referred to as the Hermitian adjoint of a matrix and is denoted by a dagger (†), so the equation above is written U^\dagger U = UU^\dagger = I. The real analogue of a unitary matrix is an orthogonal matrix. Unitary matrices have significant importance in quantum mechanics because they preserve norms, and thus, probability amplitudes.

Properties

For any unitary matrix U of finite size, the following hold:
* Given two complex vectors x and y, multiplication by U preserves their inner product; that is, \langle Ux, Uy \rangle = \langle x, y \rangle.
* U is normal (U^* U = UU^*).
* U is diagonalizable; that is, U is unitarily similar to a diagonal matrix, as a consequence of the spectral theorem. Thus, U has a decomposition of the form U = VDV^*, where V is unitary, and D is diagonal and uni ...
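The defining identity and the inner-product property can be checked numerically on a small example. The sketch below uses plain Python lists and complex numbers; the 2-by-2 matrix `U`, the vectors `x` and `y`, and all helper names are illustrative assumptions.

```python
import math


def conjugate_transpose(m):
    """Return the conjugate transpose of a matrix given as a list of rows."""
    return [[m[r][c].conjugate() for r in range(len(m))]
            for c in range(len(m[0]))]


def matmul(a, b):
    """Ordinary matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def matvec(m, v):
    """Matrix-vector product."""
    return [sum(m[i][k] * v[k] for k in range(len(v))) for i in range(len(m))]


def inner(x, y):
    """Complex inner product, conjugate-linear in the first argument."""
    return sum(xi.conjugate() * yi for xi, yi in zip(x, y))


s = 1 / math.sqrt(2)
U = [[s + 0j, s * 1j], [s * 1j, s + 0j]]  # an example unitary matrix

gram = matmul(conjugate_transpose(U), U)  # should be close to the identity

x = [1 + 2j, 3 - 1j]
y = [0.5j, 2 + 0j]
before = inner(x, y)
after = inner(matvec(U, x), matvec(U, y))

print(gram)
print(before, after)
```

Because the entries involve 1/sqrt(2), the comparison with the identity holds only up to floating-point rounding, so any check should use a small tolerance rather than exact equality.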

Eigenvalue

In linear algebra, an eigenvector or characteristic vector of a linear transformation is a nonzero vector that changes at most by a scalar factor when that linear transformation is applied to it. The corresponding eigenvalue, often denoted by \lambda, is the factor by which the eigenvector is scaled. Geometrically, an eigenvector, corresponding to a real nonzero eigenvalue, points in a direction in which it is stretched by the transformation and the eigenvalue is the factor by which it is stretched. If the eigenvalue is negative, the direction is reversed. Loosely speaking, in a multidimensional vector space, the eigenvector is not rotated.

Formal definition

If T is a linear transformation from a vector space V over a field F into itself and \mathbf{v} is a nonzero vector in V, then \mathbf{v} is an eigenvector of T if T(\mathbf{v}) is a scalar multiple of \mathbf{v}. This can be written as T(\mathbf{v}) = \lambda \mathbf{v}, where \lambda is a scalar in F, known as the eigenvalue, characteristic value, or characteristic root ass ...
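The eigenvalue equation, in which applying the transformation to an eigenvector only rescales it by \lambda, can be verified on a small real matrix. This sketch assumes a 2-by-2 matrix with real eigenvalues and obtains them from the characteristic polynomial; the matrix `T` and the helper names are illustrative.

```python
import math


def matvec(m, v):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(m[i][k] * v[k] for k in range(len(v))) for i in range(len(m))]


def eigenvalues_2x2(m):
    """Real eigenvalues of a 2x2 matrix, larger one first.

    Solves lambda**2 - trace * lambda + det = 0.
    """
    trace = m[0][0] + m[1][1]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("eigenvalues are complex")
    root = math.sqrt(disc)
    return (trace + root) / 2, (trace - root) / 2


T = [[2.0, 1.0], [1.0, 2.0]]
lam_big, lam_small = eigenvalues_2x2(T)

v_big = [1.0, 1.0]     # eigenvector for the larger eigenvalue
v_small = [1.0, -1.0]  # eigenvector for the smaller eigenvalue

print(lam_big, matvec(T, v_big))      # T v equals lam_big * v
print(lam_small, matvec(T, v_small))  # T v equals lam_small * v
```

The closed-form approach is specific to 2-by-2 matrices; larger problems are normally handled by iterative eigenvalue algorithms from a numerical library.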

Normal Matrix

In mathematics, a complex square matrix A is normal if it commutes with its conjugate transpose A^*: A \text{ is normal} \iff A^*A = AA^*. The concept of normal matrices can be extended to normal operators on infinite dimensional normed spaces and to normal elements in C*-algebras. As in the matrix case, normality means commutativity is preserved, to the extent possible, in the noncommutative setting. This makes normal operators, and normal elements of C*-algebras, more amenable to analysis. The spectral theorem states that a matrix is normal if and only if it is unitarily similar to a diagonal matrix, and therefore any matrix A satisfying the equation A^*A = AA^* is diagonalizable. The converse does not hold because diagonalizable matrices may have non-orthogonal eigenspaces. The left and right singular vectors in the singular value decomposition of a normal matrix \mathbf{A} = \mathbf{U} \boldsymbol{\Sigma} \mathbf{V}^* differ only in complex phase from each other and from the corresponding eigenvectors, since the phase must be factored out ...
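Normality can be tested by comparing the two products of a matrix with its conjugate transpose. The sketch below does this for two illustrative 2-by-2 matrices: `A`, which is normal, and `B`, which has distinct eigenvalues 1 and 2 (so it is diagonalizable) but is not normal. The helper names and the tolerance are assumptions.

```python
def conjugate_transpose(m):
    """Return the conjugate transpose of a matrix given as a list of rows."""
    return [[m[r][c].conjugate() for r in range(len(m))]
            for c in range(len(m[0]))]


def matmul(a, b):
    """Ordinary matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def is_normal(m, tol=1e-12):
    """Check whether m commutes with its conjugate transpose, up to tol."""
    m_star = conjugate_transpose(m)
    left = matmul(m_star, m)
    right = matmul(m, m_star)
    return all(abs(left[i][j] - right[i][j]) <= tol
               for i in range(len(m)) for j in range(len(m)))


A = [[1, -1], [1, 1]]  # normal but neither symmetric nor diagonal
B = [[1, 1], [0, 2]]   # upper triangular with distinct eigenvalues 1 and 2

print(is_normal(A), is_normal(B))
```

The matrix `B` illustrates why the converse fails: its eigenvectors (1, 0) and (1, 1) are not orthogonal, so it cannot be diagonalized by a unitary matrix.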

Singular Value

In mathematics, in particular functional analysis, the singular values, or s-numbers of a compact operator T: X \rightarrow Y acting between Hilbert spaces X and Y, are the square roots of the (necessarily non-negative) eigenvalues of the self-adjoint operator T^*T (where T^* denotes the adjoint of T). The singular values are non-negative real numbers, usually listed in decreasing order (\sigma_1(T), \sigma_2(T), …). The largest singular value \sigma_1(T) is equal to the operator norm of T (see Min-max theorem). If T acts on Euclidean space \mathbb{R}^n, there is a simple geometric interpretation for the singular values: Consider the image by T of the unit sphere; this is an ellipsoid, and the lengths of its semi-axes are the singular values of T (the figure provides an example in \mathbb{R}^2). The singular values are the absolute values of the eigenvalues of a normal matrix A, because the spectral theorem can be applied to obtain unitary diagonalization of ...
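For a real 2-by-2 matrix the definition can be applied by hand: form A^T A, take its two eigenvalues, and take square roots. The following sketch does this in closed form; the function name `singular_values_2x2` and the sample matrices are illustrative assumptions.

```python
import math


def singular_values_2x2(a):
    """Singular values of a real 2x2 matrix, largest first.

    They are the square roots of the eigenvalues of the symmetric matrix A^T A.
    """
    # Entries of A^T A = [[p, q], [q, r]].
    p = a[0][0] ** 2 + a[1][0] ** 2
    q = a[0][0] * a[0][1] + a[1][0] * a[1][1]
    r = a[0][1] ** 2 + a[1][1] ** 2
    trace = p + r
    det = p * r - q * q
    # Clamp at zero to guard against tiny negative values caused by rounding.
    root = math.sqrt(max(trace * trace - 4 * det, 0.0))
    largest = (trace + root) / 2
    smallest = max((trace - root) / 2, 0.0)
    return math.sqrt(largest), math.sqrt(smallest)


A = [[3.0, 0.0], [4.0, 5.0]]
sigma_1, sigma_2 = singular_values_2x2(A)

# A symmetric (hence normal) matrix with eigenvalues 3 and 1.
S = [[2.0, 1.0], [1.0, 2.0]]
s_1, s_2 = singular_values_2x2(S)

print(sigma_1, sigma_2)
print(s_1, s_2)
```

For `A` the eigenvalues of A^T A are 45 and 5, so the singular values are sqrt(45) and sqrt(5), and their product equals |det A| = 15. For the symmetric matrix `S` the singular values coincide with the absolute values of its eigenvalues.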

Matrix Norm

In mathematics, a matrix norm is a vector norm in a vector space whose elements (vectors) are matrices (of given dimensions).

Preliminaries

Given a field K of either real or complex numbers, let K^{m \times n} be the K-vector space of matrices with m rows and n columns and entries in the field K. A matrix norm is a norm on K^{m \times n}. This article will always write such norms with double vertical bars (like so: \|A\|). Thus, the matrix norm is a function \|\cdot\| : K^{m \times n} \to \mathbb{R} that must satisfy the following properties: For all scalars \alpha \in K and matrices A, B \in K^{m \times n},
* \|A\| \ge 0 (positive-valued)
* \|A\| = 0 \iff A = 0_{m,n} (definite)
* \|\alpha A\| = |\alpha| \|A\| (absolutely homogeneous)
* \|A + B\| \le \|A\| + \|B\| (sub-additive or satisfying the triangle inequality)

The only feature distinguishing matrices from rearranged vectors is multiplication. Matrix norms are particularly useful if they are also sub-multiplicative: ...
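The four listed properties can be spot-checked for a concrete norm. The sketch below uses the Frobenius norm (the square root of the sum of squared absolute entries) as an assumed example; the text above does not single out any particular norm, and the helper names and sample matrices are illustrative.

```python
import math


def frobenius_norm(a):
    """Frobenius norm: square root of the sum of squared absolute entries."""
    return math.sqrt(sum(abs(x) ** 2 for row in a for x in row))


def add(a, b):
    """Entrywise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def scale(alpha, a):
    """Multiply every entry of a matrix by the scalar alpha."""
    return [[alpha * x for x in row] for row in a]


A = [[1.0, -2.0], [3.0, 4.0]]
B = [[0.5, 0.0], [-1.0, 2.0]]
Z = [[0.0, 0.0], [0.0, 0.0]]
alpha = -3.0

norm_a = frobenius_norm(A)                   # sqrt(30), positive
norm_zero = frobenius_norm(Z)                # zero only for the zero matrix
norm_scaled = frobenius_norm(scale(alpha, A))  # equals |alpha| * norm_a
norm_sum = frobenius_norm(add(A, B))         # at most norm_a + norm of B

print(norm_a, norm_zero, norm_scaled, norm_sum)
```

A check on sample matrices is not a proof, but it is a quick way to catch a candidate function that fails one of the norm axioms.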

Invertible Matrix

In linear algebra, an n-by-n square matrix A is called invertible (also nonsingular or nondegenerate), if there exists an n-by-n square matrix B such that \mathbf{AB} = \mathbf{BA} = \mathbf{I}_n where \mathbf{I}_n denotes the n-by-n identity matrix and the multiplication used is ordinary matrix multiplication. If this is the case, then the matrix B is uniquely determined by A, and is called the (multiplicative) inverse of A, denoted by A^{-1}. Matrix inversion is the process of finding the matrix B that satisfies the prior equation for a given invertible matrix A. A square matrix that is not invertible is called singular or degenerate. A square matrix is singular if and only if its determinant is zero. Singular matrices are rare in the sense that if a square matrix's entries are randomly selected from any finite region on the number line or complex plane, the probability that the matrix is singular is 0, that is, it will "almost never" be singular. Non-square matrices (m-by-n matrices for which m \ne n) do not hav ...
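The defining equation and the determinant test can be illustrated for the 2-by-2 case. The sketch below uses exact rational arithmetic from Python's `fractions` module so that the products come out as the identity without rounding; the function names and sample matrices are illustrative, and the closed-form inverse applies only to 2-by-2 matrices.

```python
from fractions import Fraction


def matmul(a, b):
    """Ordinary matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def inverse_2x2(a):
    """Inverse of a 2x2 matrix via its determinant.

    Raises ValueError when the determinant is zero (singular matrix).
    """
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    if det == 0:
        raise ValueError("matrix is singular")
    return [[a[1][1] / det, -a[0][1] / det],
            [-a[1][0] / det, a[0][0] / det]]


A = [[Fraction(4), Fraction(7)], [Fraction(2), Fraction(6)]]
B = inverse_2x2(A)

left = matmul(A, B)   # A times its inverse
right = matmul(B, A)  # the inverse times A

singular = [[Fraction(1), Fraction(2)], [Fraction(2), Fraction(4)]]
try:
    inverse_2x2(singular)
    singular_detected = False
except ValueError:
    singular_detected = True

print(B, left, right, singular_detected)
```

In floating-point practice an exact determinant test is unreliable, and explicitly forming an inverse is usually avoided in favor of solving the linear system directly.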