
Vertex (computer graphics)

A vertex (plural vertices) in computer graphics is a data structure that describes certain attributes, like the position of a point in 2D or 3D space, or multiple points on a surface. Application to 3D models: 3D models are most often represented as triangulated polyhedra forming a triangle mesh. Non-triangular surfaces can be converted to an array of triangles through tessellation. Attributes from the vertices are typically interpolated across mesh surfaces. Vertex attributes: The vertices of triangles are associated not only with spatial position but also with other values used to render the object correctly. Most attributes of a vertex represent vectors in the space to be rendered. These vectors are typically 1 (*x*), 2 (*x, y*), or 3 (*x, y, z*) dimensional and can include a fourth homogeneous coordina ...
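
As a rough illustration of the description above, the following Python sketch models a vertex as a small record and interpolates an attribute across a triangle. The field names `normal` and `uv`, the helper names, and the use of barycentric weights for the interpolation are assumptions made for this example; they are not taken from any particular graphics API.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Vertex:
    """Hypothetical vertex record: a position plus other per-vertex attributes."""
    position: Tuple[float, float, float]
    normal: Tuple[float, float, float]
    uv: Tuple[float, float]


def interpolate(a, b, c, weights):
    """Blend one attribute of three triangle vertices.

    `weights` are barycentric weights (assumed to sum to 1).
    """
    wa, wb, wc = weights
    return tuple(wa * x + wb * y + wc * z for x, y, z in zip(a, b, c))


def to_homogeneous(position, w=1.0):
    """Append a fourth homogeneous coordinate to a 3D position."""
    return (*position, w)


v0 = Vertex((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0))
v1 = Vertex((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (1.0, 0.0))
v2 = Vertex((0.0, 1.0, 0.0), (0.0, 0.0, 1.0), (0.0, 1.0))

# Position of a point inside the triangle, weighted towards v1.
inside = interpolate(v0.position, v1.position, v2.position, (0.25, 0.5, 0.25))
```

The same `interpolate` helper applies to any attribute stored as a tuple, which is why one function can serve positions, normals, and texture coordinates alike.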

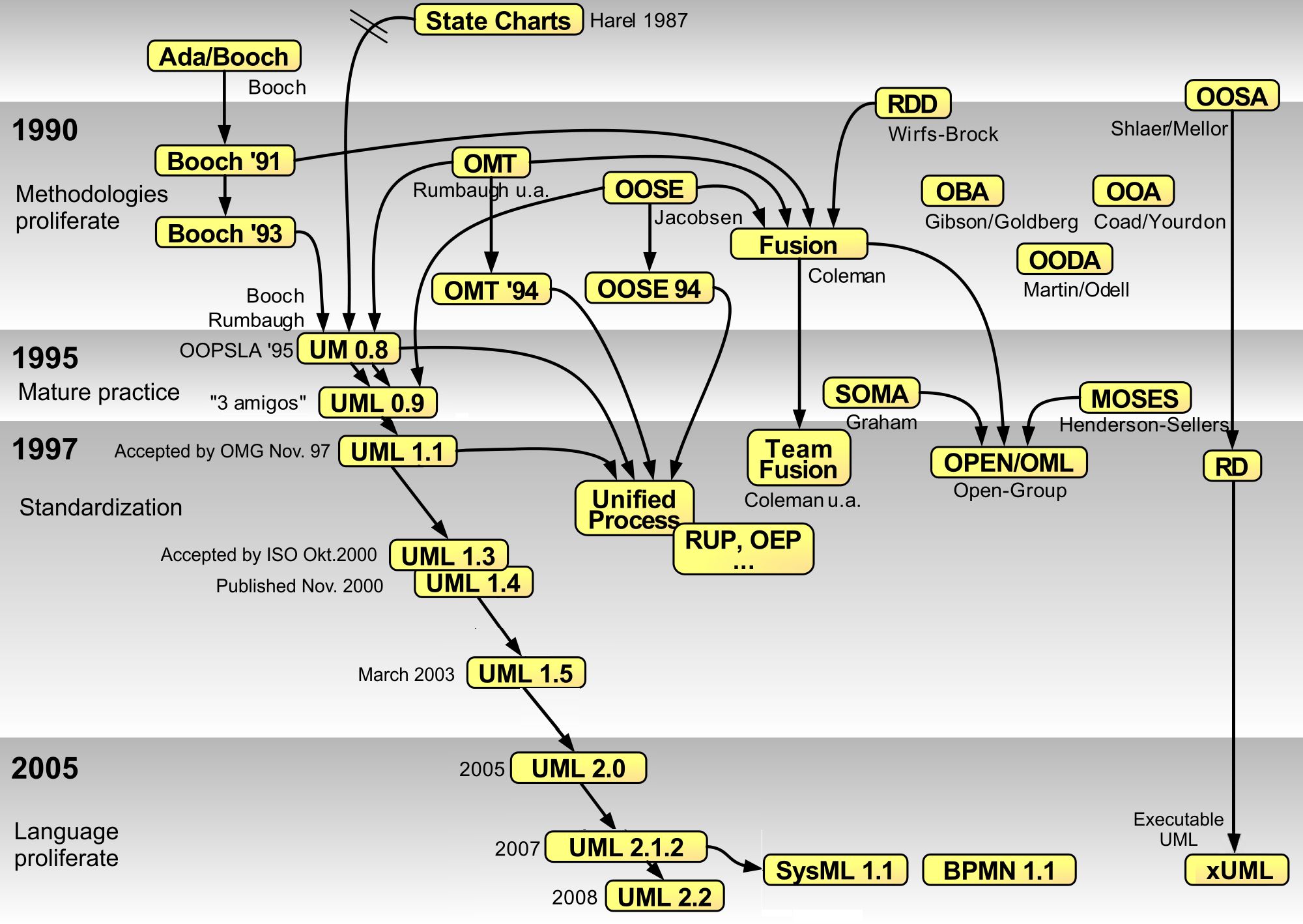

UML Class Vector

The Unified Modeling Language (UML) is a general-purpose visual modeling language that is intended to provide a standard way to visualize the design of a system. UML provides a standard notation for many types of diagrams which can be roughly divided into three main groups: behavior diagrams, interaction diagrams, and structure diagrams. The creation of UML was originally motivated by the desire to standardize the disparate notational systems and approaches to software design. It was developed at Rational Software in 1994–1995, with further development led by them through 1996. In 1997, UML was adopted as a standard by the Object Management Group (OMG) and has been managed by this organization ever since. In 2005, UML was also published by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) as the ISO/IEC 19501 standard. Since then the standard has been periodically revised to cover the latest revision of UML. In ...

Precomputed Lighting

A lightmap is a data structure used in lightmapping, a form of surface caching in which the brightness of surfaces in a virtual scene is pre-calculated and stored in texture maps for later use. Lightmaps are most commonly applied to static objects in applications that use real-time 3D computer graphics, such as video games, in order to provide lighting effects such as global illumination at a relatively low computational cost. History: John Carmack's *Quake* was the first computer game to use lightmaps to augment rendering. Before lightmaps were invented, real-time applications relied purely on Gouraud shading to interpolate vertex lighting for surfaces. This only allowed low-frequency lighting information, and could cr ...

Facial Animation

Computer facial animation is primarily an area of computer graphics that encapsulates methods and techniques for generating and animating images or models of a character's face. The character can be a human, a humanoid, an animal, a legendary creature or character, etc. Due to its subject and output type, it is also related to many other scientific and artistic fields, from psychology to traditional animation. The importance of human faces in verbal and non-verbal communication, together with advances in computer graphics hardware and software, has caused considerable scientific, technological, and artistic interest in computer facial animation. Although development of computer graphics methods for facial animation started in the early 1970s, major achievements in this field are more recent and have occurred since the late 1980s. The body of work around computer facial animation can be divided into two main areas: techniques to generate animation data, ...

Skeletal Animation

Skeletal animation or rigging is a technique in computer animation in which a character (or other articulated object) is represented in two parts: a polygonal or parametric mesh representation of the surface of the object, and a hierarchical set of interconnected parts (called joints or bones, and collectively forming the skeleton), a virtual armature used to animate (pose and keyframe) the mesh. While this technique is often used to animate humans and other organic figures, it only serves to make the animation process more intuitive, and the same technique can be used to control the deformation of any object, such as a door, a spoon, a building, or a galaxy. When the animated object is more general than, for example, a humanoid character, the set of "bones" may not be hierarchical or interconnected, but simply represent a higher-level description of the motion of the part of the mesh it is influencing. The technique was introduced in 1988 by Nadia Magnenat Thalmann, Richard Lape ...

Weighting

The process of frequency weighting involves emphasizing the contribution of particular aspects of a phenomenon (or of a set of data) over others to an outcome or result, thereby highlighting those aspects in comparison to others in the analysis. That is, rather than each variable in the data set contributing equally to the final result, some of the data is adjusted to make a greater contribution than others. This is analogous to the practice of adding (extra) weight to one side of a pair of scales in order to favour either the buyer or seller. While weighting may be applied to a set of data, such as epidemiological data, it is more commonly applied to measurements of light, heat, sound, gamma radiation, and in fact any stimulus that is spread over a spectrum ...

Weight Maps

This is a glossary of terms relating to computer graphics. For more general computer hardware terms, see glossary of computer hardware terms. ...

Tangent Vectors

In mathematics, a tangent vector is a vector that is tangent to a curve or surface at a given point. Tangent vectors are described in the differential geometry of curves in the context of curves in $\mathbb{R}^n$. More generally, tangent vectors are elements of a *tangent space* of a differentiable manifold. Tangent vectors can also be described in terms of germs. Formally, a tangent vector at the point $x$ is a linear derivation of the algebra defined by the set of germs at $x$. Motivation: Before proceeding to a general definition of the tangent vector, we discuss its use in calculus and its tensor properties. Calculus: Let $\mathbf{r}(t)$ be a parametric smooth curve. The tangent vector is given by $\mathbf{r}'(t)$ provided it exists and provi ...
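
To make the calculus definition concrete, the sketch below approximates the tangent vector of a parametric curve by a central finite difference of its derivative. The function names `tangent` and `circle`, the step size `h`, and the choice of the unit circle as the example curve are all assumptions for illustration; an exact derivative would be preferable whenever one is available in closed form.

```python
import math


def tangent(curve, t, h=1e-6):
    """Approximate the derivative of a parametric curve at parameter t.

    `curve` maps a float to a tuple of coordinates. A central difference
    stands in for the exact derivative here.
    """
    before = curve(t - h)
    after = curve(t + h)
    return tuple((b - a) / (2.0 * h) for a, b in zip(before, after))


def circle(t):
    """Example curve (an assumption): the unit circle in the plane."""
    return (math.cos(t), math.sin(t))


# At t = 0 the circle passes through (1, 0) heading in the +y direction.
tx, ty = tangent(circle, 0.0)
```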

Displacement Mapping

Displacement mapping is an alternative computer graphics technique in contrast to bump, normal, and parallax mapping, using a texture or height map to cause an effect where the actual geometric positions of points over the textured surface are *displaced*, often along the local surface normal, according to the value the texture function evaluates to at each point on the surface. It gives surfaces a sense of depth and detail, permitting in particular self-occlusion, self-shadowing and silhouettes; on the other hand, it is the most costly of this class of techniques owing to the large amount of additional geometry. For years, displacement mapping was a peculiarity of high-end rendering systems like PhotoRealistic RenderMan, while real-time APIs, like OpenGL and DirectX, were only starting to use this feature. One of the reasons for this is that the original implementation of displacement mapping required an adaptive tessellation of the surface in order to obtain enough microp ...
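
The core operation described above, moving a surface point along its normal by a height value, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the normal is taken to be unit length, the height has already been sampled from the texture, and the `scale` parameter and the function name `displace` are hypothetical.

```python
def displace(position, normal, height, scale=1.0):
    """Move a point along its (assumed unit-length) normal.

    `height` is the value read from the height map at this point;
    `scale` converts that value into scene units.
    """
    return tuple(p + scale * height * n for p, n in zip(position, normal))


# A flat patch facing +z, with a hypothetical height sample per vertex.
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
normals = [(0.0, 0.0, 1.0)] * 3
heights = [0.0, 0.5, 1.0]

displaced = [
    displace(p, n, h, scale=2.0)
    for p, n, h in zip(positions, normals, heights)
]
```

Because the geometry itself changes, a coarse mesh gains little from this step, which is consistent with the snippet's remark that the technique needs a large amount of additional geometry.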

Normal Mapping

In 3D computer graphics, normal mapping, or Dot3 bump mapping, is a texture mapping technique used for faking the lighting of bumps and dents – an implementation of bump mapping. It is used to add details without using more polygons. A common use of this technique is to greatly enhance the appearance and details of a low-polygon model by generating a normal map from a high-polygon model or height map. Normal maps are commonly stored as regular RGB images where the RGB components correspond to the X, Y, and Z coordinates, respectively, of the surface normal. History: In 1978 Jim Blinn described how the normals of a surface could be perturbed to make geometrically flat faces have a detailed appearance. The idea of taking geometric details from a high-polygon model was introduced in "Fitting Smooth Surfaces to Dense Polygon Meshes" by Krishnamurthy and Levoy, Proc. SIGGRAPH 1996, where this approach was used for creating displacement mappi ...
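
The RGB storage scheme mentioned above can be illustrated by decoding one texel back into a normal vector. The snippet does not state the numeric encoding, so this sketch assumes the common convention of 8-bit channels in which each value in [0, 255] maps linearly to [-1, 1]; the function name `decode_normal` is hypothetical.

```python
import math


def decode_normal(rgb):
    """Convert an 8-bit RGB texel into a unit-length normal.

    Assumes the common convention that channel values in [0, 255]
    map linearly to components in [-1, 1].
    """
    x, y, z = (2.0 * c / 255.0 - 1.0 for c in rgb)
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)


# The typical light-blue texel of a flat normal map points almost
# straight along +Z.
flat = decode_normal((128, 128, 255))
```

Renormalizing after decoding compensates for the quantization error of 8-bit storage, since 128 does not map exactly to zero.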

Phong Shading

In 3D computer graphics, Phong shading, Phong interpolation, or normal-vector interpolation shading is an interpolation technique for surface shading invented by computer graphics pioneer Bui Tuong Phong. Phong shading interpolates surface normals across rasterized polygons and computes pixel colors based on the interpolated normals and a reflection model. *Phong shading* may also refer to the specific combination of Phong interpolation and the Phong reflection model. History: Phong shading and the Phong reflection model were developed at the University of Utah by Bui Tuong Phong, who published them in his 1973 Ph.D. dissertation and a 1975 paper. Bui Tuong Phong, "Illumination for Computer Generated Pictures," Comm. AC ...
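
The two steps named above, interpolating normals and then evaluating a reflection model per pixel, might look roughly like the following sketch. It assumes barycentric weights for the interpolation and, to stay short, substitutes a plain diffuse (Lambertian) term for the reflection model; it is not the full Phong reflection model, and all function names are hypothetical.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def interpolate_normal(n0, n1, n2, weights):
    """Blend three vertex normals with barycentric weights, then renormalize."""
    w0, w1, w2 = weights
    blended = tuple(w0 * a + w1 * b + w2 * c for a, b, c in zip(n0, n1, n2))
    return normalize(blended)


def diffuse_intensity(normal, light_dir):
    """Diffuse term used here as a stand-in reflection model.

    `light_dir` points from the surface towards the light.
    """
    unit_light = normalize(light_dir)
    return max(0.0, sum(n * l for n, l in zip(normal, unit_light)))


# Per-pixel shading: the normal is interpolated first, then lit.
pixel_normal = interpolate_normal(
    (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 1.0, 0.0), (0.5, 0.5, 0.0)
)
intensity = diffuse_intensity(pixel_normal, (1.0, 0.0, 0.0))
```

Evaluating the lighting after interpolation, rather than interpolating already-lit vertex colors, is what distinguishes this approach from Gouraud shading.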

Normal (geometry)

In geometry, a normal is an object (e.g. a line, ray, or vector) that is perpendicular to a given object. For example, the normal line to a plane curve at a given point is the infinite straight line perpendicular to the tangent line to the curve at the point. A normal vector is a vector perpendicular to a given object at a particular point. A normal vector of length one is called a unit normal vector or normal direction. A curvature vector is a normal vector whose length is the curvature of the object. Multiplying a normal vector by $-1$ results in the opposite vector, which may be used for indicating sides (e.g., interior or exterior). In three-dimensional space, a surface normal, or simply normal, to a surface at point $P$ is a vector perpendicular to the tangent plane of the surface at $P$. The vector field of normal directions to a surface is known as the *Gauss map*. The word "normal" is also used as an adjective: a line *normal* to a plane, the *normal* component of ...
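
For a flat triangle, one way to obtain a unit normal is the cross product of two edge vectors, which is perpendicular to both edges and therefore to the triangle's plane. The sketch below takes that approach; the cross-product construction and the function names are assumptions chosen for this example, and the direction of the result depends on the vertex order.

```python
import math


def cross(a, b):
    """Cross product of two 3D vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def unit_normal(p0, p1, p2):
    """Unit normal of the triangle (p0, p1, p2).

    Assumes the triangle is not degenerate (its area is non-zero).
    """
    edge1 = tuple(b - a for a, b in zip(p0, p1))
    edge2 = tuple(b - a for a, b in zip(p0, p2))
    n = cross(edge1, edge2)
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n)


def flip(normal):
    """Multiply by -1 to get the normal pointing to the other side."""
    return tuple(-c for c in normal)


front = unit_normal((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
back = flip(front)
```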

Texture Mapping

Texture mapping is a term used in computer graphics to describe how 2D images are projected onto 3D models. The most common variant is the UV unwrap, which can be described as an inverse paper cutout, where the surfaces of a 3D model are cut apart so that it can be unfolded into a 2D coordinate space (UV space). Semantic: Texture mapping can refer to the task of unwrapping a 3D model, to the abstract notion that a 3D model has textures applied to it, and to the related algorithm of the 3D software. A texture map is a raster graphic, also called an image or texture. If the texture stores a specific property, it is also referred to as a color map, roughness map, etc. The coordinate space that converts from the 3D space of a 3D model into a 2D space, so that it can sample from the texture map, is variously called UV space, UV coordinates, or texture space. Algorithm: A simplified explanation of how an algorithm could work to render an image: 1. For each pixel we trace the coordinates of the screen ...
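
As a small illustration of sampling a texture map through UV coordinates, the sketch below looks up the nearest texel for a given (u, v) pair. It does not attempt to reproduce the truncated algorithm above. The conventions used here are assumptions: u and v lie in [0, 1], the texture is a list of rows, and v = 0 corresponds to the first row (graphics APIs differ on this point).

```python
def sample_nearest(texture, u, v):
    """Return the texel nearest to UV coordinates (u, v).

    `texture` is a non-empty list of equally long rows. Coordinates
    outside [0, 1] are clamped to the edge.
    """
    height = len(texture)
    width = len(texture[0])
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    # Scale to texel indices; u = 1.0 or v = 1.0 maps to the last texel.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return texture[y][x]


# A hypothetical 2x2 texture with one label per texel.
checker = [
    ["a", "b"],
    ["c", "d"],
]
texel = sample_nearest(checker, 0.75, 0.25)
```

Nearest-texel lookup is the simplest choice; production renderers typically filter between neighbouring texels instead.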