
Troponym

In linguistics, troponymy is the presence of a 'manner' relation between two lexemes. The concept was originally proposed by Christiane Fellbaum and George Miller. Some examples they gave are "to nibble is to eat in a certain manner, and to gorge is to eat in a different manner. Similarly, to traipse or to mince is to walk in some manner" (p. 567). Troponymy is one of the possible relations between verbs in the semantic network of the WordNet database.
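As an illustration only, the manner relation can be modelled as a small directed graph in which each verb points to the more general verb it elaborates. The sketch below is a toy built by hand from the examples above. It does not query WordNet, and the names `MANNER_OF`, `is_troponym`, and `troponyms` are hypothetical.

```python
# Hypothetical toy data built from the examples above: each verb maps to the
# more general verb that it expresses "in some manner".
MANNER_OF: dict[str, str] = {
    "nibble": "eat",
    "gorge": "eat",
    "traipse": "walk",
    "mince": "walk",
}


def is_troponym(verb: str, general: str) -> bool:
    """True if `verb` is `general` done in some manner, following links upward.

    Assumes the data is acyclic, which holds for this toy table.
    """
    current = MANNER_OF.get(verb)
    while current is not None:
        if current == general:
            return True
        current = MANNER_OF.get(current)
    return False


def troponyms(general: str) -> list[str]:
    """Verbs recorded as direct manners of `general`, sorted alphabetically."""
    return sorted(v for v, g in MANNER_OF.items() if g == general)


print(troponyms("eat"))               # ['gorge', 'nibble']
print(is_troponym("mince", "walk"))   # True
print(is_troponym("nibble", "walk"))  # False
```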

Polysemy

Polysemy is the capacity for a sign (e.g. a symbol, morpheme, word, or phrase) to have multiple related meanings. For example, a word can have several word senses. Polysemy is distinct from monosemy, where a word has a single meaning. Polysemy is also distinct from homonymy (or homophony), which is an accidental similarity between two or more words (such as "bear" the animal and the verb "bear"). Whereas homonymy is a mere linguistic coincidence, polysemy is not. To decide whether a given set of meanings represents polysemy or homonymy, it is often necessary to look at the history of the word and check whether the two meanings are historically related. Dictionary writers often list polysemes (words or phrases with different, but related, senses) in the same entry (that is, under the same headword) and enter homonyms as separate headwords (usually with a numbering convention such ...

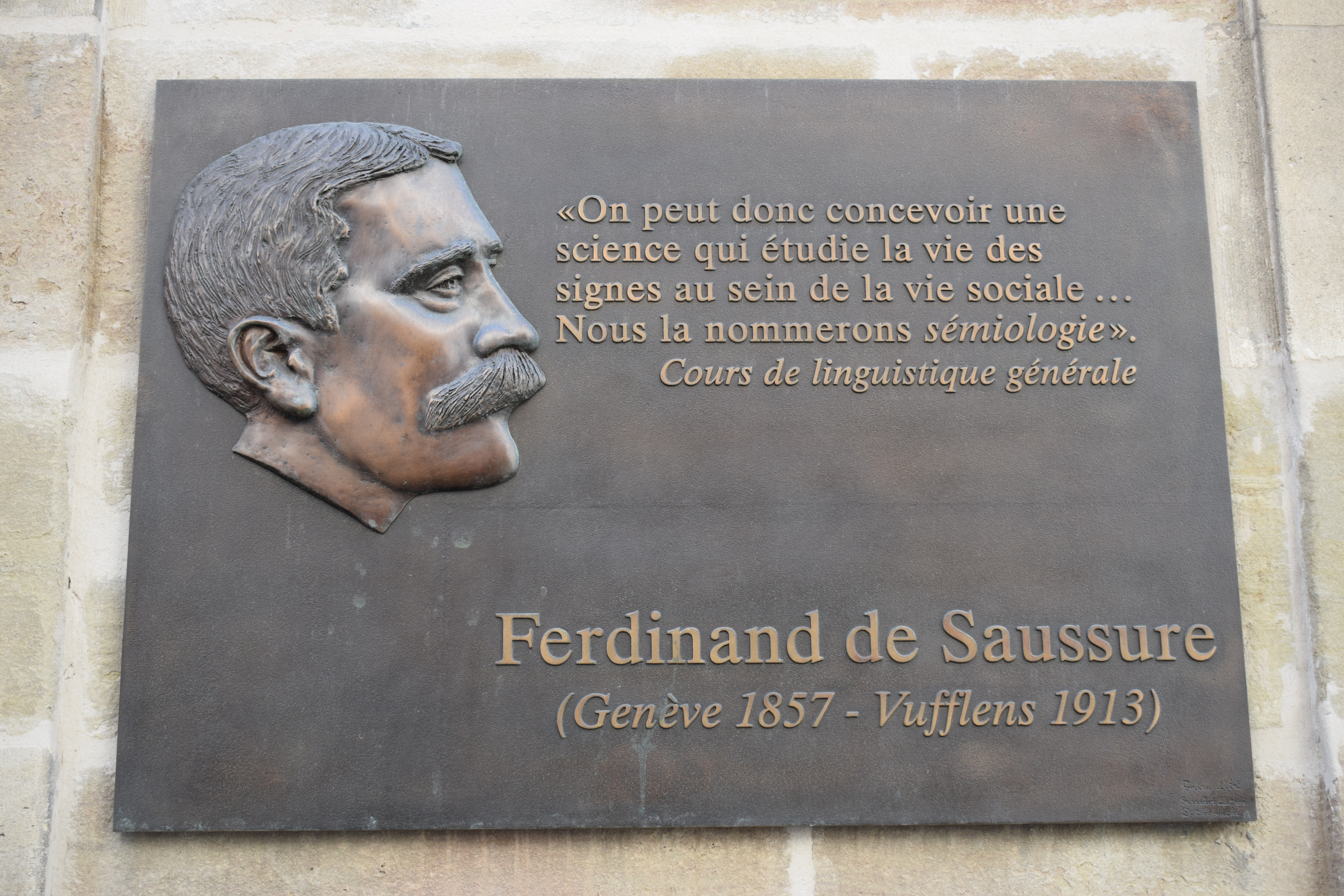

Linguistics

Linguistics is the scientific study of language. The areas of linguistic analysis are syntax (rules governing the structure of sentences), semantics (meaning), morphology (structure of words), phonetics (speech sounds and equivalent gestures in sign languages), phonology (the abstract sound system of a particular language, and analogous systems of sign languages), and pragmatics (how the context of use contributes to meaning). Subdisciplines such as biolinguistics (the study of the biological variables and evolution of language) and psycholinguistics (the study of psychological factors in human language) bridge many of these divisions. Linguistics encompasses many branches and subfields that span both theoretical and practical applications. Theoretical linguistics is concerned with understanding the universal and fundamental nature of language and developing a general ...

Has-a

In database design, object-oriented programming, and object-oriented design, has-a (has_a or has a) is a composition relationship. In it, one object (often called the constituted object, or part/constituent/member object) "belongs to" (is part or member of) another object (called the composite type) and behaves according to the rules of ownership. In simple terms, a has-a relationship in an object is a member field of that object. Multiple has-a relationships combine to form a possessive hierarchy. "Has-a" is to be contrasted with an is-a (is_a or is a) relationship, which constitutes a taxonomic hierarchy (subtyping). It is not always clear whether has-a or is-a is the most logical relationship between an object and its subordinate. Confusion over such decisions has necessitated the creation of these metalinguistic terms. A good example of the has-a relationship is containers in ...
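To make the contrast concrete, here is a minimal Python sketch under assumed, hypothetical class names (`Vehicle`, `Car`, `Engine`; none come from the text above). A `Car` is-a `Vehicle` through subclassing, and it has-a `Engine` through a member field that it creates and owns.

```python
class Vehicle:
    """Supertype, used only to contrast is-a with has-a."""

    def describe(self) -> str:
        return "a vehicle"


class Engine:
    """Part (member) object; in this sketch it is created and owned by a Car."""

    def __init__(self, horsepower: int) -> None:
        self.horsepower = horsepower

    def start(self) -> str:
        return f"engine ({self.horsepower} hp) started"


class Car(Vehicle):  # is-a: every Car is a Vehicle
    def __init__(self, horsepower: int) -> None:
        super().__init__()
        # has-a: the engine is a member field of the composite Car object
        self.engine = Engine(horsepower)

    def start(self) -> str:
        # The composite delegates behaviour to the part it owns.
        return self.engine.start()


car = Car(150)
print(car.start())               # engine (150 hp) started
print(isinstance(car, Vehicle))  # True: is-a
print(isinstance(car, Engine))   # False: having an Engine does not make a Car an Engine
```

Because the `Engine` is constructed inside `Car.__init__`, its lifetime in this sketch is tied to the car that owns it. That is one common reading of "the rules of ownership".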

Word Sense

In linguistics, a word sense is one of the meanings of a word. For example, a dictionary may list over 50 different senses of the word "play", each with a different meaning depending on the context in which the word is used in a sentence. In each such sentence, different collocates of "play" signal its different meanings. People and computers, as they read words, must use a process called word-sense disambiguation (R. Navigli, "Word Sense Disambiguation: A Survey", ACM Computing Surveys, 41(2), 2009, pp. 1-69) to reconstruct the likely intended meaning of a word. This process uses context to narrow the possible senses down to the probable ones. The context includes such things as the ideas conveyed by adjacent words and nearby phrases, the known or probable purpose and register of the conversation or document, and the orientation (time and place) implied or expressed. The disambiguation is thus context-sensitive. Advanced semantic analysis has resulted in a sub ...
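One classic and very simple way to use context for disambiguation is gloss overlap, as in a simplified form of the Lesk algorithm. The sketch below picks the sense whose dictionary gloss shares the most words with the surrounding sentence. The three glosses for "play" and the small stop-word list are hypothetical, written only for this example. Real systems, including those surveyed by Navigli, use much richer context and resources.

```python
import re

# Hypothetical, hand-written glosses for three senses of "play".
SENSES: dict[str, str] = {
    "play.perform": "act a role or part in a theatre drama or film",
    "play.music": "perform music on an instrument such as a piano or guitar",
    "play.game": "take part in a game or sport such as football or chess",
}

# Hypothetical minimal stop-word list so function words do not count as overlap.
STOPWORDS = {"a", "an", "the", "or", "on", "in", "of", "such", "as", "to",
             "she", "he", "they"}


def tokens(text: str) -> set[str]:
    """Lower-case word tokens with a few function words removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def simplified_lesk(context: str, senses: dict[str, str]) -> str:
    """Return the sense whose gloss shares the most words with the context.

    Ties go to the sense listed first, because max() keeps the first maximum.
    """
    ctx = tokens(context)
    return max(senses, key=lambda s: len(ctx & tokens(senses[s])))


print(simplified_lesk("She likes to play the guitar and the piano", SENSES))
# play.music
print(simplified_lesk("They play football every Sunday", SENSES))
# play.game
```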

Thematic Relation

In certain theories of linguistics, thematic relations, also known as semantic roles or thematic roles, are the various roles that a noun phrase may play with respect to the action or state described by a governing verb, commonly the sentence's main verb. For example, in the sentence "Susan ate an apple", "Susan" is the doer of the eating, so she is an agent; "an apple" is the item that is eaten, so it is a patient. Since their introduction in the mid-1960s by Jeffrey Gruber and Charles Fillmore, semantic roles have been a core linguistic concept and a subject of debate between linguistic approaches. This is because of their potential to explain the relationship between syntax and semantics (also known as the syntax-semantics interface), that is, how meaning affects the surface syntactic codification of language. The notion of semantic roles plays a central role especially in functionalist and language-com ...

Semantic Satiation

Semantic satiation is a psychological phenomenon in which repetition causes a word or phrase to temporarily lose meaning for the listener, who then perceives the speech as repeated meaningless sounds. Extended inspection or analysis (staring at the word or phrase for a long time) in place of repetition produces the same effect. Leon Jakobovits James coined the phrase "semantic satiation" in his 1962 doctoral dissertation at McGill University. It was demonstrated as a stable phenomenon that is possibly similar to a cognitive form of reactive inhibition. Before that, the expression "verbal satiation" had been used along with terms that express the idea of mental fatigue. The dissertation listed many of the names others had used for the phenomenon. James presented several experiments that demonstrated the operation of the semantic satiation effect in various cognitive tasks, such as rating words and figures that are presented repeatedly in a short time, v ...

Semantic Primes

Natural semantic metalanguage (NSM) is a linguistic theory that reduces lexicons to a set of semantic primitives. It is based on the conception of Polish professor Andrzej Bogusławski. The theory was formally developed in the early 1970s by Anna Wierzbicka, first at Warsaw University and later at the Australian National University, and by Cliff Goddard at Australia's Griffith University. NSM theory attempts to reduce the semantics of all lexicons to a restricted set of semantic primitives, or primes. Primes are universal in that they have the same translation in every language, and they are primitive in that they cannot be defined using other words. Primes are combined to form explications, which are descriptions of semantic representations consisting solely of primes. Research in the NSM approach deals extensively with language and cognition, and with language and culture. Key areas of research include lexical semantics, gramm ...

Ontology (Information Science)

In information science, an ontology encompasses a representation, formal naming, and definitions of the categories, properties, and relations between the concepts, data, or entities that pertain to one, many, or all domains of discourse. More simply, an ontology is a way of showing the properties of a subject area and how they are related, by defining a set of terms and relational expressions that represent the entities in that subject area. The field which studies ontologies so conceived is sometimes referred to as applied ontology. Every academic discipline or field, in creating its terminology, thereby lays the groundwork for an ontology. Each uses ontological assumptions to frame explicit theories, research and applications. Improved ontologies may improve problem solving within that domain, interoperability of data systems, and discoverability of data. Translating research papers within every field is a problem made easier when experts from different countries mainta ...
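A very small ontology can be pictured as a set of (subject, relation, object) statements over named terms, plus queries that follow those relations. The sketch below assumes a hypothetical "vehicles" domain and two hypothetical relation names, `is_a` and `part_of`. Production ontologies are normally written in dedicated languages such as OWL rather than as Python sets.

```python
# Hypothetical mini-ontology for a "vehicles" domain, stored as
# (subject, relation, object) triples.
TRIPLES: set[tuple[str, str, str]] = {
    ("Car", "is_a", "Vehicle"),
    ("Bicycle", "is_a", "Vehicle"),
    ("Vehicle", "is_a", "Artifact"),
    ("Engine", "part_of", "Car"),
    ("Wheel", "part_of", "Car"),
    ("Wheel", "part_of", "Bicycle"),
}


def objects(subject: str, relation: str) -> set[str]:
    """Objects directly linked from `subject` by `relation`."""
    return {o for s, r, o in TRIPLES if s == subject and r == relation}


def ancestors(concept: str) -> set[str]:
    """All categories reachable from `concept` by following is_a edges."""
    found: set[str] = set()
    stack = [concept]
    while stack:
        for parent in objects(stack.pop(), "is_a"):
            if parent not in found:
                found.add(parent)
                stack.append(parent)
    return found


def parts_of(whole: str) -> set[str]:
    """Terms stated to be direct parts of `whole`."""
    return {s for s, r, o in TRIPLES if r == "part_of" and o == whole}


print(sorted(ancestors("Car")))  # ['Artifact', 'Vehicle']
print(sorted(parts_of("Car")))   # ['Engine', 'Wheel']
```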

Lexical Chain

A lexical chain is a sequence of semantically related words in writing, spanning narrow (adjacent words or sentences) or wide (an entire text) context windows. A lexical chain is independent of the grammatical structure of the text; in effect, it is a list of words that captures a portion of the cohesive structure of the text. A lexical chain can provide a context for the resolution of an ambiguous term and enable disambiguation of the concepts that the term represents. Examples include:
* Rome → capital → city → inhabitant
* Wikipedia → resource → web

Morris and Hirst introduced the term "lexical chain" as an expansion of "lexical cohesion." A text in which many sentences are semantically connected often shows a certain degree of continuity in its ideas, providing good cohesion among its sentences. The definition used for lexical cohesion states that coherence is a result of c ...
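A simple way to see how chains form is greedy chaining. Walk through the text, append each word to the first existing chain that already contains a related word, and otherwise start a new chain. The sketch below makes one assumption to keep it self-contained: a tiny hypothetical relatedness table stands in for the thesaurus or WordNet relations a real chainer would consult. It is not Morris and Hirst's exact procedure.

```python
# Hypothetical relatedness table standing in for a thesaurus or WordNet:
# each unordered pair of words is treated as semantically related.
RELATED = {
    frozenset(pair) for pair in [
        ("rome", "capital"), ("capital", "city"), ("city", "inhabitant"),
        ("wikipedia", "resource"), ("resource", "web"),
    ]
}


def related(a: str, b: str) -> bool:
    return frozenset((a, b)) in RELATED


def build_chains(words: list[str]) -> list[list[str]]:
    """Greedy chaining: add each word to the first chain holding a related word."""
    chains: list[list[str]] = []
    for word in words:
        for chain in chains:
            if any(related(word, member) for member in chain):
                chain.append(word)
                break
        else:  # no related chain found, so this word starts a new one
            chains.append([word])
    return chains


words = ["rome", "wikipedia", "capital", "resource", "city", "web", "inhabitant"]
for chain in build_chains(words):
    print(" -> ".join(chain))
# rome -> capital -> city -> inhabitant
# wikipedia -> resource -> web
```

Even though the two topics are interleaved word by word in the input, the chains separate them. This matches the claim that a lexical chain is independent of the grammatical structure of the text.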

Meronymy

In linguistics, meronymy is a semantic relation between a meronym denoting a part and a holonym denoting a whole. In simpler terms, a meronym is in a part-of relationship with its holonym. For example, "finger" is a meronym of "hand", which is its holonym. Similarly, "engine" is a meronym of "car", which is its holonym. Fellow meronyms (naming the various fellow parts of any particular whole) are called comeronyms. For example, "leaves", "branches", "trunk", and "roots" are comeronyms under the holonym "tree". Holonymy is the converse of meronymy. A closely related concept is mereology, which deals specifically with part–whole relations and is used in logic. It is formally expressed in terms of first-order logic. A meronymy can also be considered a partial order. Meronym and holonym refer to part and whole respectively, and are not to be confused with hypernym, which refers to type. For example, a holonym of "leaf" mig ...
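Treating meronymy as a partial order means the part-of relation is reflexive, antisymmetric, and transitive. The sketch below takes that view. It assumes a hypothetical table of direct part-of facts, drawn partly from the examples above, with "hand" placed under a hypothetical "arm". From those facts it derives holonyms transitively and finds comeronyms as the other direct parts of the same whole. Whether natural-language part-of is always transitive is itself debated, so this encodes the partial-order view rather than a settled fact about language.

```python
# Hypothetical direct part-of facts: part -> immediate whole.
# The data must be acyclic, as a partial order requires.
PART_OF: dict[str, str] = {
    "finger": "hand",
    "hand": "arm",
    "leaves": "tree",
    "branches": "tree",
    "trunk": "tree",
    "roots": "tree",
}


def holonyms(part: str) -> list[str]:
    """All wholes containing `part`, nearest first, following part-of transitively."""
    result = []
    while part in PART_OF:
        part = PART_OF[part]
        result.append(part)
    return result


def comeronyms(part: str) -> list[str]:
    """Other direct parts of the same immediate whole."""
    whole = PART_OF.get(part)
    return sorted(p for p, w in PART_OF.items() if w == whole and p != part)


def is_part_of(part: str, whole: str) -> bool:
    """Reflexive-transitive check: the partial-order reading of meronymy."""
    return part == whole or whole in holonyms(part)


print(holonyms("finger"))            # ['hand', 'arm']
print(comeronyms("trunk"))           # ['branches', 'leaves', 'roots']
print(is_part_of("finger", "arm"))   # True
print(is_part_of("arm", "finger"))   # False
```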

Subtyping

In programming language theory, subtyping (also called subtype polymorphism or inclusion polymorphism) is a form of type polymorphism. A subtype is a datatype that is related to another datatype (the supertype) by some notion of substitutability. This means that program elements (typically subroutines or functions) written to operate on elements of the supertype can also operate on elements of the subtype. If S is a subtype of T, the subtyping relation (written as S <: T, S ⊑ T, or S ≤: T) means that any term of type S can safely be used in any context where a term of type T is expected. The precise semantics of subtyping here crucially depends on how "safely be used" and "any context" are defined by a given type formalism or programming language. The type system of a programming language essentially defines its own subtyping relation, which may well be trivial, should the language support no (or very little) conversion mechanisms ...
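Substitutability can be shown with nominal subtyping in Python. In the sketch below, a function written only against a supertype accepts instances of any of its subtypes. The class names `Shape`, `Circle`, and `Square` and the function `total_area` are hypothetical illustrations, not taken from any particular formalism.

```python
import math
from collections.abc import Sequence


class Shape:
    """Supertype T: declares the operation that client code relies on."""

    def area(self) -> float:
        raise NotImplementedError


class Circle(Shape):  # Circle <: Shape
    def __init__(self, radius: float) -> None:
        self.radius = radius

    def area(self) -> float:
        return math.pi * self.radius ** 2


class Square(Shape):  # Square <: Shape
    def __init__(self, side: float) -> None:
        self.side = side

    def area(self) -> float:
        return self.side ** 2


def total_area(shapes: Sequence[Shape]) -> float:
    """Written only against the supertype, so any subtype may be substituted."""
    return sum(shape.area() for shape in shapes)


print(total_area([Square(2.0), Square(3.0)]))  # 13.0
print(issubclass(Circle, Shape))               # True
```

The parameter is annotated as `Sequence[Shape]` rather than `list[Shape]`. Static checkers such as mypy treat `Sequence` as covariant and `list` as invariant, so a `list[Circle]` is accepted where a `Sequence[Shape]` is expected but not where a `list[Shape]` is. This is a small instance of the point that "safely be used" depends on how the type system defines its subtyping relation.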